iperf3 speeds confusing - fast = bad internet slow = good internet?

-

Yesterday I spent a lot of time going through my network settings and attempting to optimize certain things. I was running iperf3 on many of my VMs to see if I had any bottlenecks on my 10GbE-capable system (an ESXi host).

My virtualized pfSense, with two 10GbE NICs passed through, has always seemed to deliver the speeds I need for my internet connection (1500/1000 fiber). However, I was surprised to see a fairly low iperf3 result of around 2.8-3 Gbit/s.

After some light reading, I turned on all the hardware offloading options under System > Advanced > Networking and, wow, iperf3 jumped right up to 13-15 Gbit/s! I was blown away and left it at that.

Everything seemed to be working fine. Today, however, I saw a lot of failed attempts by friends and family to connect to my Plex server. This was surprising, since Plex was working fine on my LAN, speed tests were fine, etc.

So I tried it myself from my phone on LTE. Sure enough, at a low bitrate it took forever to buffer the first few seconds, and high-bitrate/original-quality playback never loaded at all.

I then toggled the three settings one by one until I found the combinations that work:

- All three offloads off: works

- TSO and LRO off, hardware checksum on: works

- LRO and hardware checksum off, TSO on: works

Any other combination does not work. Of course, with these settings my iperf3 speeds are back down to ~2.9 Gbit/s.

I also found during this testing that turning LRO on is what produces the massive iperf3 increase back to 13-15 Gbit/s.
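For reproducing the three working combinations above from the pfSense shell, the GUI checkboxes correspond to interface capabilities that FreeBSD's ifconfig(8) can toggle at runtime: tso, lro, and rxcsum/txcsum for hardware checksums. The sketch below is a minimal, hypothetical pair of helpers (offload_flags and offload_state are not part of pfSense). It assumes the passed-through 82599 ports show up under the ix(4) driver as ix0/ix1, and it only touches the IPv4 checksum flags (rxcsum6/txcsum6 are left alone). Changes made with ifconfig are not persistent; the System > Advanced > Networking settings remain the durable place to set them.

```shell
#!/bin/sh
# Hypothetical helpers (not part of pfSense) for reproducing the offload
# combinations with FreeBSD's ifconfig(8). Assumes the passed-through 82599
# ports are named ix0/ix1 by the ix(4) driver; ifconfig changes do not persist.

# offload_flags TSO LRO CSUM   (each argument "on" or "off")
# Prints the ifconfig capability arguments for that combination.
offload_flags() {
  out=""
  for pair in "tso:$1" "lro:$2" "rxcsum:$3" "txcsum:$3"; do
    cap=${pair%%:*}      # capability name before the colon
    state=${pair#*:}     # requested state after the colon
    case $state in
      on)  out="$out $cap" ;;
      off) out="$out -$cap" ;;
      *)   echo "offload_flags: expected on/off, got '$state'" >&2; return 1 ;;
    esac
  done
  printf '%s\n' "${out# }"
}

# offload_state "IFCONFIG_OUTPUT" CAP
# Prints "on" if CAP (e.g. LRO, TSO4, RXCSUM) appears in the interface's
# options=<...> line and "off" if it does not; prints nothing if there is no
# such line. Only lines that start with "options=" (after leading whitespace)
# match, so the separate "nd6 options=" line is ignored.
offload_state() {
  printf '%s\n' "$1" | awk -v cap="$2" '
    /^[ \t]*options=/ {
      sub(/.*</, ""); sub(/>.*/, "")
      n = split($0, caps, ",")
      for (i = 1; i <= n; i++) if (caps[i] == cap) { print "on"; exit }
      print "off"; exit
    }'
}
```

For example, `ifconfig ix0 $(offload_flags off off on)` would run `ifconfig ix0 -tso -lro rxcsum txcsum` (TSO and LRO off, checksum on), and `offload_state "$(ifconfig ix0)" LRO` reports whether LRO is still listed among the interface's active options after a change.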

So, what am I missing here? How can the configuration with worse iperf3 numbers (and far more retries) cause no throughput problems on the WAN side (inbound only?), while the configuration with much higher iperf3 numbers and far fewer retries essentially breaks the internet, at least for Plex and other high-throughput traffic?
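To compare the two runs posted below on an equal footing, it can help to normalize the retransmit count by the amount of data actually moved, rather than comparing raw Retr totals. A minimal sketch, assuming iperf3's usual convention of reporting transfer in binary units (1 GByte = 2^30 bytes) and bandwidth in decimal units (1 Gbit/s = 10^9 bit/s); that assumption is consistent with the summaries in this thread, since 15.5 GBytes over 10 s works out to the reported 13.3 Gbits/sec. The helper names iperf_gbps and retr_per_gb are hypothetical.

```shell
#!/bin/sh
# Hypothetical helpers for sanity-checking iperf3 summary lines.
# Assumes transfer is reported in binary GBytes (2^30 bytes) and bandwidth in
# decimal Gbit/s (10^9 bit/s).

# iperf_gbps GBYTES SECONDS -> average bandwidth in Gbit/s (3 significant digits)
iperf_gbps() {
  awk -v gb="$1" -v s="$2" 'BEGIN { printf "%.3g\n", gb * 1073741824 * 8 / s / 1e9 }'
}

# retr_per_gb RETR GBYTES -> TCP retransmits per GByte transferred
retr_per_gb() {
  awk -v r="$1" -v gb="$2" 'BEGIN { printf "%.0f\n", r / gb }'
}

# Summary figures from the two runs in the first post:
echo "LRO on:       $(iperf_gbps 15.5 10) Gbit/s, $(retr_per_gb 3586 15.5) retr/GByte"
echo "offloads off: $(iperf_gbps 3.39 10) Gbit/s, $(retr_per_gb 21799 3.39) retr/GByte"
```

With those summary figures this prints 13.3 Gbit/s and 231 retransmits per GByte for the LRO-only run, against 2.91 Gbit/s and about 6430 retransmits per GByte with offloads disabled, so the slower run retransmits roughly 28 times more per unit of data, not just more in total.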

My NICs are 2x onboard 82599EB (X520?) SFP+ ports, connected to a 10Gb EdgeSwitch 16 XG.

The pfSense VM has 8 GB of RAM and 4 vCPUs.

Disabling Suricata and pfBlockerNG made no difference at all.

iperf results:

Only LRO offloading enabled:

    Connecting to host 10.10.0.254, port 5201
    [  4] local 10.10.1.10 port 46730 connected to 10.10.0.254 port 5201
    [ ID] Interval           Transfer     Bandwidth       Retr  Cwnd
    [  4]   0.00-1.00   sec  2.08 GBytes  17.9 Gbits/sec     0   1011 KBytes
    [  4]   1.00-2.00   sec  1.43 GBytes  12.3 Gbits/sec   995   1.28 MBytes
    [  4]   2.00-3.00   sec  1.43 GBytes  12.3 Gbits/sec  1132   1.00 MBytes
    [  4]   3.00-4.00   sec  1.64 GBytes  14.1 Gbits/sec     0   1.13 MBytes
    [  4]   4.00-5.00   sec  1.47 GBytes  12.6 Gbits/sec   616    574 KBytes
    [  4]   5.00-6.00   sec  1.49 GBytes  12.8 Gbits/sec     0   1.15 MBytes
    [  4]   6.00-7.00   sec  1.85 GBytes  15.9 Gbits/sec     0   1.27 MBytes
    [  4]   7.00-8.00   sec  1.24 GBytes  10.7 Gbits/sec     0   1.31 MBytes
    [  4]   8.00-9.00   sec  1.48 GBytes  12.7 Gbits/sec   843   1.20 MBytes
    [  4]   9.00-10.00  sec  1.42 GBytes  12.2 Gbits/sec     0   1.20 MBytes
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ ID] Interval           Transfer     Bandwidth       Retr
    [  4]   0.00-10.00  sec  15.5 GBytes  13.3 Gbits/sec  3586             sender
    [  4]   0.00-10.00  sec  15.5 GBytes  13.3 Gbits/sec                  receiver
    iperf Done.
Offloading disabled (needed for a properly working WAN):

    Connecting to host 10.10.0.254, port 5201
    [  4] local 10.10.1.10 port 47114 connected to 10.10.0.254 port 5201
    [ ID] Interval           Transfer     Bandwidth       Retr  Cwnd
    [  4]   0.00-1.00   sec   249 MBytes  2.09 Gbits/sec  1072    407 KBytes
    [  4]   1.00-2.00   sec   355 MBytes  2.97 Gbits/sec  2626    506 KBytes
    [  4]   2.00-3.00   sec   351 MBytes  2.94 Gbits/sec  2562    437 KBytes
    [  4]   3.00-4.00   sec   362 MBytes  3.04 Gbits/sec  2005    416 KBytes
    [  4]   4.00-5.00   sec   350 MBytes  2.93 Gbits/sec  2492    455 KBytes
    [  4]   5.00-6.00   sec   360 MBytes  3.02 Gbits/sec  2616    298 KBytes
    [  4]   6.00-7.00   sec   355 MBytes  2.98 Gbits/sec  2112    420 KBytes
    [  4]   7.00-8.00   sec   358 MBytes  3.00 Gbits/sec  2374    413 KBytes
    [  4]   8.00-9.00   sec   361 MBytes  3.03 Gbits/sec  2521    380 KBytes
    [  4]   9.00-10.00  sec   369 MBytes  3.10 Gbits/sec  1419    366 KBytes
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ ID] Interval           Transfer     Bandwidth       Retr
    [  4]   0.00-10.00  sec  3.39 GBytes  2.91 Gbits/sec  21799            sender
    [  4]   0.00-10.00  sec  3.39 GBytes  2.91 Gbits/sec                  receiver

-

@notorious_vr said in iperf3 speeds confusing - fast = bad internet slow = good internet?:

Yesterday I spent a lot of time going through my network settings and attempting to optimize certain things.

Hi,

I haven't found a better guide for what you set out to achieve than these:

https://calomel.org/freebsd_network_tuning.html

https://calomel.org/network_performance.html

+++edit:

We mention this a lot:

TSO/LRO is not good for you if you are a router (pfSense); if you are an endpoint, YES.

-

@daddygo Thanks for the links...

However, I don't quite see how they relate to my iperf3 speeds in this case.

BTW, the ESXi box is very capable in terms of CPU horsepower IMO:

2x Xeon E5-2695 v2 @ 2.40GHz

Don't get me wrong: my current config is obviously capable of saturating my internet connection (at least downstream), with speed tests consistently around 1500-1600 Mbit/s. But ingress isn't always so stable (which I know is not so easy).

I just did a quick iperf3 test with the offloads disabled (current working config).

Interestingly enough, the slow iperf3 speeds occur only TO pfSense (with pfSense as the iperf3 server). Going the other way (with the CentOS box as the iperf3 server), I see very good speeds, as I would expect.

I'm not quite sure where I can make the improvements required for incoming connections to pfSense, where I'm seeing only ~2.8 Gbit/s.

See results (from the CentOS box):

    Connecting to host 10.10.0.254, port 5201
    [  4] local 10.10.1.32 port 40738 connected to 10.10.0.254 port 5201
    [ ID] Interval           Transfer     Bandwidth       Retr  Cwnd
    [  4]   0.00-1.00   sec   292 MBytes  2.45 Gbits/sec    34    509 KBytes
    [  4]   1.00-2.00   sec   345 MBytes  2.89 Gbits/sec   215    431 KBytes
    [  4]   2.00-3.00   sec   356 MBytes  2.99 Gbits/sec    78    361 KBytes
    [  4]   3.00-4.00   sec   332 MBytes  2.79 Gbits/sec    57    318 KBytes
    [  4]   4.00-5.00   sec   321 MBytes  2.68 Gbits/sec    69    393 KBytes
    [  4]   5.00-6.00   sec   322 MBytes  2.71 Gbits/sec   134    501 KBytes
    [  4]   6.00-7.00   sec   325 MBytes  2.73 Gbits/sec    42    498 KBytes
    [  4]   7.00-8.00   sec   325 MBytes  2.73 Gbits/sec   113    452 KBytes
    [  4]   8.00-9.00   sec   334 MBytes  2.80 Gbits/sec    82    458 KBytes
    [  4]   9.00-10.00  sec   335 MBytes  2.81 Gbits/sec    26    540 KBytes
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ ID] Interval           Transfer     Bandwidth       Retr
    [  4]   0.00-10.00  sec  3.21 GBytes  2.76 Gbits/sec   850             sender
    [  4]   0.00-10.00  sec  3.21 GBytes  2.76 Gbits/sec                  receiver
    iperf Done.
    [root@dockersrv02 ~]# iperf3 -s
    -----------------------------------------------------------
    Server listening on 5201
    -----------------------------------------------------------
    Accepted connection from 10.10.0.254, port 57649
    [  5] local 10.10.1.32 port 5201 connected to 10.10.0.254 port 64408
    [ ID] Interval           Transfer     Bandwidth
    [  5]   0.00-1.00   sec  1.04 GBytes  8.92 Gbits/sec
    [  5]   1.00-2.00   sec  1.56 GBytes  13.4 Gbits/sec
    [  5]   2.00-3.00   sec  1.66 GBytes  14.2 Gbits/sec
    [  5]   3.00-4.00   sec  1.65 GBytes  14.2 Gbits/sec
    [  5]   4.00-5.00   sec  1.66 GBytes  14.2 Gbits/sec
    [  5]   5.00-6.00   sec  1.67 GBytes  14.3 Gbits/sec
    [  5]   6.00-7.00   sec  1.64 GBytes  14.1 Gbits/sec
    [  5]   7.00-8.00   sec  1.66 GBytes  14.2 Gbits/sec
    [  5]   8.00-9.00   sec  1.58 GBytes  13.6 Gbits/sec
    [  5]   9.00-10.00  sec  1.64 GBytes  14.1 Gbits/sec
    [  5]  10.00-10.20  sec   333 MBytes  14.3 Gbits/sec
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ ID] Interval           Transfer     Bandwidth
    [  5]   0.00-10.20  sec  0.00 Bytes   0.00 bits/sec                   sender
    [  5]   0.00-10.20  sec  16.1 GBytes  13.5 Gbits/sec                  receiver
    -----------------------------------------------------------
    Server listening on 5201
    -----------------------------------------------------------
    ^Ciperf3: interrupt - the server has terminated
    [root@dockersrv02 ~]#

-

@notorious_vr said in iperf3 speeds confusing - fast = bad internet slow = good internet?:

I'm not quite sure where I can make the improvements required for incoming connections to pfSense, where

We have talked about this a lot here on the forum...

Always the configuration first, then the measurements (iperf) can come. Yes, this is different under Linux, as you can read in the description at the link.

I wouldn't use a hypervisor instead of bare metal for an important device like a router + firewall, but it's a matter of taste. You don't have redundancy (I hope you know that).

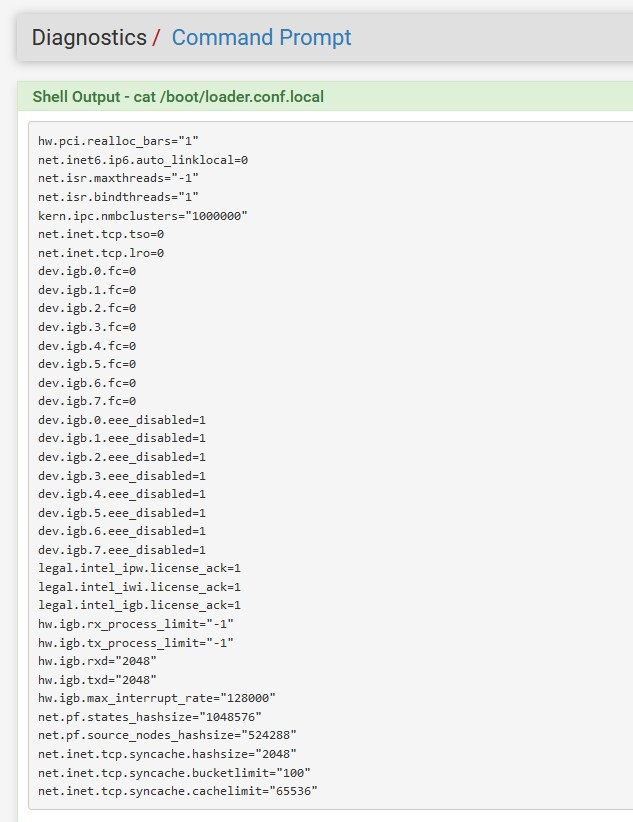

So I suggest you set your parameters first, in loader.conf.local, such as:

- flow control disabled (dev.ixyz.0.fc=0)

- EEE disabled (dev.ixyz.0.eee_disabled: value=1)

(these are illustrative values)

Something like that, but implement it for your own hardware set.

+++edit:

Useful tests can still be these:

- ifconfig -vma

- dmesg | grep -i msi

- vmstat -i

- sysctl -a | grep num_queues

-

@notorious_vr said in iperf3 speeds confusing - fast = bad internet slow = good internet?:

@daddygo thanks for the links...

However I suppose I don't see the relationship to my iperf3 speeds in this case.

BTW, the ESXi box is very capable in terms of CPU horsepower IMO:

2x Xeon E5-2695 v2 @ 2.40GHz

Don't get me wrong, my current config is obviously capable of saturating my internet connection (at least downstream) with speed tests consistently around 1500-1600 Mbit/s. But ingress isn't always so stable (which I know is not so easy).

I just did a quick iperf3 test with the offloads disabled (current working config).

Interestingly enough the slow iperf3 speeds are only TO pfsense (pfsense being the ipef3 server). Going the other way (CentOs box being the iperf3 server) I'm seeing very good speeds as I would expect.

I'm not quite sure where I can make the improvements reuiqred in the incoming connections to pfsense where I'm seeing only ~2.8Gbit/s

See results (from the CentOS box):

Connecting to host 10.10.0.254, port 5201 [ 4] local 10.10.1.32 port 40738 connected to 10.10.0.254 port 5201 [ ID] Interval Transfer Bandwidth Retr Cwnd [ 4] 0.00-1.00 sec 292 MBytes 2.45 Gbits/sec 34 509 KBytes [ 4] 1.00-2.00 sec 345 MBytes 2.89 Gbits/sec 215 431 KBytes [ 4] 2.00-3.00 sec 356 MBytes 2.99 Gbits/sec 78 361 KBytes [ 4] 3.00-4.00 sec 332 MBytes 2.79 Gbits/sec 57 318 KBytes [ 4] 4.00-5.00 sec 321 MBytes 2.68 Gbits/sec 69 393 KBytes [ 4] 5.00-6.00 sec 322 MBytes 2.71 Gbits/sec 134 501 KBytes [ 4] 6.00-7.00 sec 325 MBytes 2.73 Gbits/sec 42 498 KBytes [ 4] 7.00-8.00 sec 325 MBytes 2.73 Gbits/sec 113 452 KBytes [ 4] 8.00-9.00 sec 334 MBytes 2.80 Gbits/sec 82 458 KBytes [ 4] 9.00-10.00 sec 335 MBytes 2.81 Gbits/sec 26 540 KBytes - - - - - - - - - - - - - - - - - - - - - - - - - [ ID] Interval Transfer Bandwidth Retr [ 4] 0.00-10.00 sec 3.21 GBytes 2.76 Gbits/sec 850 sender [ 4] 0.00-10.00 sec 3.21 GBytes 2.76 Gbits/sec receiver iperf Done. [root@dockersrv02 ~]# iperf3 -s ----------------------------------------------------------- Server listening on 5201 ----------------------------------------------------------- Accepted connection from 10.10.0.254, port 57649 [ 5] local 10.10.1.32 port 5201 connected to 10.10.0.254 port 64408 [ ID] Interval Transfer Bandwidth [ 5] 0.00-1.00 sec 1.04 GBytes 8.92 Gbits/sec [ 5] 1.00-2.00 sec 1.56 GBytes 13.4 Gbits/sec [ 5] 2.00-3.00 sec 1.66 GBytes 14.2 Gbits/sec [ 5] 3.00-4.00 sec 1.65 GBytes 14.2 Gbits/sec [ 5] 4.00-5.00 sec 1.66 GBytes 14.2 Gbits/sec [ 5] 5.00-6.00 sec 1.67 GBytes 14.3 Gbits/sec [ 5] 6.00-7.00 sec 1.64 GBytes 14.1 Gbits/sec [ 5] 7.00-8.00 sec 1.66 GBytes 14.2 Gbits/sec [ 5] 8.00-9.00 sec 1.58 GBytes 13.6 Gbits/sec [ 5] 9.00-10.00 sec 1.64 GBytes 14.1 Gbits/sec [ 5] 10.00-10.20 sec 333 MBytes 14.3 Gbits/sec - - - - - - - - - - - - - - - - - - - - - - - - - [ ID] Interval Transfer Bandwidth [ 5] 0.00-10.20 sec 0.00 Bytes 0.00 bits/sec sender [ 5] 0.00-10.20 sec 16.1 GBytes 13.5 Gbits/sec receiver 
----------------------------------------------------------- Server listening on 5201 ----------------------------------------------------------- ^Ciperf3: interrupt - the server has terminated [root@dockersrv02 ~]#@notorious_vr said in iperf3 speeds confusing - fast = bad internet slow = good internet?:

@daddygo Thanks for the links...

However, I don't quite see how they relate to my iperf3 speeds in this case.

BTW, the ESXi box is very capable in terms of CPU horsepower IMO:

2x Xeon E5-2695 v2 @ 2.40GHz

Don't get me wrong: my current config is obviously capable of saturating my internet connection (at least downstream), with speed tests consistently around 1500-1600 Mbit/s. But ingress isn't always so stable (which I know is not so easy).

I just did a quick iperf3 test with the offloads disabled (current working config).

Interestingly enough, the slow iperf3 speeds occur only TO pfSense (with pfSense as the iperf3 server). Going the other way (with the CentOS box as the iperf3 server), I see very good speeds, as I would expect.

I'm not quite sure where I can make the improvements required for incoming connections to pfSense, where I'm seeing only ~2.8 Gbit/s.

See results (from the CentOS box):

    Connecting to host 10.10.0.254, port 5201
    [  4] local 10.10.1.32 port 40738 connected to 10.10.0.254 port 5201
    [ ID] Interval           Transfer     Bandwidth       Retr  Cwnd
    [  4]   0.00-1.00   sec   292 MBytes  2.45 Gbits/sec    34    509 KBytes
    [  4]   1.00-2.00   sec   345 MBytes  2.89 Gbits/sec   215    431 KBytes
    [  4]   2.00-3.00   sec   356 MBytes  2.99 Gbits/sec    78    361 KBytes
    [  4]   3.00-4.00   sec   332 MBytes  2.79 Gbits/sec    57    318 KBytes
    [  4]   4.00-5.00   sec   321 MBytes  2.68 Gbits/sec    69    393 KBytes
    [  4]   5.00-6.00   sec   322 MBytes  2.71 Gbits/sec   134    501 KBytes
    [  4]   6.00-7.00   sec   325 MBytes  2.73 Gbits/sec    42    498 KBytes
    [  4]   7.00-8.00   sec   325 MBytes  2.73 Gbits/sec   113    452 KBytes
    [  4]   8.00-9.00   sec   334 MBytes  2.80 Gbits/sec    82    458 KBytes
    [  4]   9.00-10.00  sec   335 MBytes  2.81 Gbits/sec    26    540 KBytes
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ ID] Interval           Transfer     Bandwidth       Retr
    [  4]   0.00-10.00  sec  3.21 GBytes  2.76 Gbits/sec   850             sender
    [  4]   0.00-10.00  sec  3.21 GBytes  2.76 Gbits/sec                  receiver
    iperf Done.
    [root@dockersrv02 ~]# iperf3 -s
    -----------------------------------------------------------
    Server listening on 5201
    -----------------------------------------------------------
    Accepted connection from 10.10.0.254, port 57649
    [  5] local 10.10.1.32 port 5201 connected to 10.10.0.254 port 64408
    [ ID] Interval           Transfer     Bandwidth
    [  5]   0.00-1.00   sec  1.04 GBytes  8.92 Gbits/sec
    [  5]   1.00-2.00   sec  1.56 GBytes  13.4 Gbits/sec
    [  5]   2.00-3.00   sec  1.66 GBytes  14.2 Gbits/sec
    [  5]   3.00-4.00   sec  1.65 GBytes  14.2 Gbits/sec
    [  5]   4.00-5.00   sec  1.66 GBytes  14.2 Gbits/sec
    [  5]   5.00-6.00   sec  1.67 GBytes  14.3 Gbits/sec
    [  5]   6.00-7.00   sec  1.64 GBytes  14.1 Gbits/sec
    [  5]   7.00-8.00   sec  1.66 GBytes  14.2 Gbits/sec
    [  5]   8.00-9.00   sec  1.58 GBytes  13.6 Gbits/sec
    [  5]   9.00-10.00  sec  1.64 GBytes  14.1 Gbits/sec
    [  5]  10.00-10.20  sec   333 MBytes  14.3 Gbits/sec
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ ID] Interval           Transfer     Bandwidth
    [  5]   0.00-10.20  sec  0.00 Bytes   0.00 bits/sec                   sender
    [  5]   0.00-10.20  sec  16.1 GBytes  13.5 Gbits/sec                  receiver
    -----------------------------------------------------------
    Server listening on 5201
    -----------------------------------------------------------
    ^Ciperf3: interrupt - the server has terminated
    [root@dockersrv02 ~]#

First golden rule of iperf: do NOT put it on the pfSense box. That puts double duty on the pfSense box and you get incorrect results. pfSense is a router, and thus is optimized for routing traffic through the box, in one interface and out another. If it also has to spend CPU time being the iperf endpoint, things suffer. The pfSense developers have mentioned here many times never to put the iperf client or server on the pfSense box. Put the iperf pieces on other boxes, then route their traffic through pfSense (for example, from one VLAN to another or from one physical port to another).

-

@bmeeks said in iperf3 speeds confusing - fast = bad internet slow = good internet?:

First golden rule of iperf: do NOT put it on the pfSense box. That puts double duty on the pfSense box and you get incorrect results. pfSense is a router, and thus is optimized for routing traffic through the box, in one interface and out another. If it also has to spend CPU time being the iperf endpoint, things suffer. The pfSense developers have mentioned here many times never to put the iperf client or server on the pfSense box. Put the iperf pieces on other boxes, then route their traffic through pfSense (for example, from one VLAN to another or from one physical port to another).

Well, that makes zero sense to me. iperf (client and server) is installed by default in pfSense and is accessible via the Diagnostics menu in the GUI.

Also, we're not talking about some low-power box; as you can see from the specs I listed above, there are ZERO issues with horsepower here. iperf isn't that resource-hungry; I don't think the CPU even goes over 15% when I run the tests.
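Whether "under 15%" rules out a CPU limit depends on how that figure is averaged. If it is the overall utilization of the 4-vCPU pfSense VM, one completely busy vCPU only shows up as 25% of the total, and iperf3 releases before 3.16 run a whole test in a single thread, so one saturated core can hide behind a low overall number. A minimal sketch of that conversion, assuming the percentage is averaged evenly across all vCPUs (the function names are hypothetical); on FreeBSD, `top -P` shows per-CPU usage directly and is the more reliable check.

```shell
#!/bin/sh
# Hypothetical helpers, assuming an overall CPU percentage that is averaged
# evenly across all vCPUs of the VM.

# single_vcpu_share NUM_VCPUS -> overall % that one fully busy vCPU accounts for
single_vcpu_share() {
  awk -v n="$1" 'BEGIN { printf "%.0f\n", 100 / n }'
}

# as_single_vcpu_pct TOTAL_PERCENT NUM_VCPUS -> the same load expressed as a
# percentage of one vCPU (exceeds 100 if it must be spread over several)
as_single_vcpu_pct() {
  awk -v pct="$1" -v n="$2" 'BEGIN { printf "%.0f\n", pct * n }'
}

# The 4-vCPU pfSense VM and the ~15% figure from this thread:
echo "one busy vCPU = $(single_vcpu_share 4)% overall"
echo "15% overall   = $(as_single_vcpu_pct 15 4)% of one vCPU"
```

So 15% overall is consistent with anything from a lightly loaded VM to a single vCPU running at about 60%; the overall number alone cannot tell the two apart.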

-

@notorious_vr said in iperf3 speeds confusing - fast = bad internet slow = good internet?:

@bmeeks said in iperf3 speeds confusing - fast = bad internet slow = good internet?:

First golden rule of iperf: do NOT put it on the pfSense box. That puts double duty on the pfSense box and you get incorrect results. pfSense is a router, and thus is optimized for routing traffic through the box, in one interface and out another. If it also has to spend CPU time being the iperf endpoint, things suffer. The pfSense developers have mentioned here many times never to put the iperf client or server on the pfSense box. Put the iperf pieces on other boxes, then route their traffic through pfSense (for example, from one VLAN to another or from one physical port to another).

Well, that makes zero sense to me. iperf (client and server) is installed by default in pfSense and is accessible via the Diagnostics menu in the GUI.

Also, we're not talking about some low-power box; as you can see from the specs I listed above, there are ZERO issues with horsepower here. iperf isn't that resource-hungry; I don't think the CPU even goes over 15% when I run the tests.

I'm simply repeating what the developers have posted here several times. There may well be more going on under the covers than the simple CPU usage stat shows. You said yourself that putting the iperf server piece on a client (instead of pfSense) made a huge difference.

-

@bmeeks said in iperf3 speeds confusing - fast = bad internet slow = good internet?:

I'm simply repeating what the developers have posted here several times. There may well be more going on under the covers than the simple CPU usage stat shows. You said yourself that putting the iperf server piece on a client (instead of pfSense) made a huge difference.

I never said that?

I think you're misinformed here.

Thanks anyway.

-

@notorious_vr said in iperf3 speeds confusing - fast = bad internet slow = good internet?:

@bmeeks said in iperf3 speeds confusing - fast = bad internet slow = good internet?:

I'm simply repeating what the developers have posted here several times. There may well be more going on under the covers than the simple CPU usage stat shows. You said yourself that putting the iperf server piece on a client (instead of pfSense) made a huge difference.

I never said that?

I think you're misinformed here.

Thanks anyway.

I don't want to argue; I was simply offering some advice. Here is what your post said:

Interestingly enough, the slow iperf3 speeds occur only TO pfSense (with pfSense as the iperf3 server). Going the other way (with the CentOS box as the iperf3 server), I see very good speeds, as I would expect.

-

@bmeeks said in iperf3 speeds confusing - fast = bad internet slow = good internet?:

I don't want to argue; I was simply offering some advice. Here is what your post said:

If you read that again, you will see that what I said is not what you think I said.

-

I would still like to see a test through pfSense rather than to or from pfSense.

This has been discussed many times. Unless you actually plan on moving files/data to or from pfSense itself (like running a proxy?), a more valid test of whether you are getting the speeds you should is one "through" pfSense.
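A sketch of such a through-pfSense test, under stated assumptions: run `iperf3 -s` on a host in one VLAN/subnet and `iperf3 -c` from a host in another, so pfSense has to route every packet between them, then repeat with `-R` to reverse the direction. Before testing, it is worth confirming that the two hosts really are in different subnets, because traffic within one subnet is switched and never reaches pfSense. The same_subnet helper below is hypothetical, and the 10.10.2.20 server address is a placeholder; the thread does not state the prefix length of the 10.10.x.x networks.

```shell
#!/bin/sh
# Hypothetical helper: are two IPv4 addresses in the same subnet for a given
# prefix length? Traffic between hosts in the same subnet is switched directly
# and never passes through pfSense, so it cannot measure pfSense's forwarding.

# same_subnet IP_A IP_B PREFIX_LEN -> "same" or "different"
same_subnet() {
  awk -v a="$1" -v b="$2" -v p="$3" '
    function ip2n(ip,   o) {
      split(ip, o, ".")
      return ((o[1] * 256 + o[2]) * 256 + o[3]) * 256 + o[4]
    }
    BEGIN {
      size = 2 ^ (32 - p)   # addresses per subnet of this prefix length
      if (int(ip2n(a) / size) == int(ip2n(b) / size)) print "same"
      else print "different"
    }'
}

# Example with a placeholder server address on another /24:
same_subnet 10.10.1.10 10.10.2.20 24

# Hypothetical through-pfSense test once the hosts are confirmed to be on
# different subnets (start "iperf3 -s" on the server host first):
#   iperf3 -c 10.10.2.20 -t 30        # client -> server, routed by pfSense
#   iperf3 -c 10.10.2.20 -t 30 -R     # reverse: server -> client
```

For example, `same_subnet 10.10.1.10 10.10.0.254 24` prints different, while the same pair with a /23 or /16 prefix prints same.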

-

@johnpoz said in iperf3 speeds confusing - fast = bad internet slow = good internet?:

I would still like to see a test through pfSense rather than to or from pfSense.

If you can give me an idea of how to perform that test, I would be happy to do so.

Otherwise, yes, I consistently get my full 1500/1000 WAN throughput (or slightly above on the downstream), even with pfBlockerNG + Suricata running.

However, just as the iperf tests show, upload speeds are not quite as good as download/ingress speeds.

What I mean is: while I can generally get 1500-1600 Mbit/s down, the upload is rarely faster than 800-850 Mbit/s. I'm testing from a 10GbE-connected VM, by the way. While I understand there is overhead involved, I couldn't help being curious about the iperf tests showing FAR slower speeds TO pfSense than FROM pfSense.

I don't put too much weight on internet speed tests anyway, since everything past the gateway is out of my control.