problems with suricata 6.0.0

-

@bmeeks

I am having trouble with this version.

I have noticed that Suricata stops working after a few minutes.

The service is still running, but I think the traffic is not being analyzed: I get no more alerts until I restart the service.

Also, if I press the restart or stop button, the services are not stopped and I end up with duplicate instances running.

The logs show nothing, only the normal startup sequence.

[2.5.0-DEVELOPMENT][root@pfSense.kiokoman.home]/root: ps aux | grep suricata
root 97086 18.0 9.0 788500 747720 - Ss 13:19 92:00.65 /usr/local/bin/suricata -i vmx0 -D -c /usr/local/etc/suricata/suricata_55009_vmx0/suricata.yaml --pidfile /var/run/suricata_vmx055009.pid
root 68543 17.9 6.0 539340 497500 - Ss 13:18 85:08.26 /usr/local/bin/suricata -i pppoe0 -D -c /usr/local/etc/suricata/suricata_3908_pppoe0/suricata.yaml --pidfile /var/run/suricata_pppoe03908.pid
root 57800 16.9 8.4 746424 703284 - Ss 13:19 86:24.80 /usr/local/bin/suricata -i gif0 -D -c /usr/local/etc/suricata/suricata_8584_gif0/suricata.yaml --pidfile /var/run/suricata_gif08584.pid
root 32950 0.0 0.9 105792 75764 - Rs 20:09 0:00.46 /usr/local/bin/suricata -i pppoe0 -D -c /usr/local/etc/suricata/suricata_3908_pppoe0/suricata.yaml --pidfile /var/run/suricata_pppoe03908.pid

I'll try to uninstall, cleaning all files, and install again.

-

The 6.0.0 Suricata version is truly new from upstream. Couple that with the FreeBSD-12.2/STABLE changes present in pfSense-2.5 and you have a potentially volatile cocktail indeed ...

Which mode are you using? No blocking, Legacy Mode blocking or Inline IPS Mode?

I'm assuming the PPPoE interface is Legacy Mode as netmap and inline operation would not be supported there. What about the vmx interface?

When you say "logs show nothing", does that include both the pfSense system log AND the suricata.log file for the configured interface?

-

All interfaces are in Legacy Mode. I'm reinstalling it right now.

-

This recent bug reported upstream concerns me as well: https://redmine.openinfosecfoundation.org/issues/4108. I wonder if it is related, and how deep into Suricata's functions it goes. It sounds sort of like what you are seeing, but not exactly the same.

-

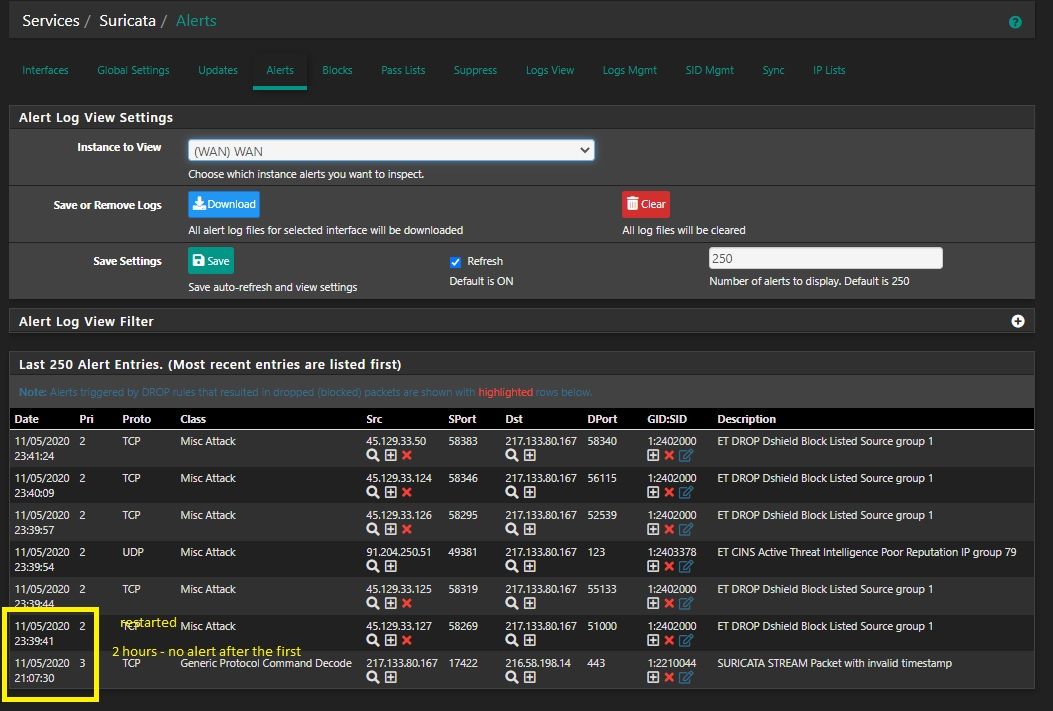

idk, I get alerts and drops for a few hours, then it stops reporting anything.

truss shows only:

nanosleep({ 0.000100000 }) = 0 (0x0)
nanosleep({ 0.000100000 }) = 0 (0x0)
nanosleep({ 0.000100000 }) = 0 (0x0)
nanosleep({ 0.000100000 }) = 0 (0x0)
nanosleep({ 0.000100000 }) = 0 (0x0)
nanosleep({ 0.000100000 }) = 0 (0x0)
nanosleep({ 0.000100000 }) = 0 (0x0)
nanosleep({ 0.000100000 }) = 0 (0x0)
nanosleep({ 0.000100000 }) = 0 (0x0)
nanosleep({ 0.001000000 }) = 0 (0x0)
nanosleep({ 0.000100000 }) = 0 (0x0)
nanosleep({ 0.000100000 }) = 0 (0x0)
nanosleep({ 0.000100000 }) = 0 (0x0)
nanosleep({ 0.000100000 }) = 0 (0x0)
nanosleep({ 0.000100000 }) = 0 (0x0)

-

The trigger for that bug I linked is "live reload" of the rules. Suricata can do that when enabled on the GLOBAL SETTINGS tab. Not saying this bug is your issue, but just curious. The bug is also reported to impact 4.1.9 and 5.0.4 Suricata versions (so all of the recently released updates).

-

uhm, I can try without live reload, provided that the duplicate instance bug does not show up again

-

I will fire up my pfSense-2.5 snapshot virtual machine with Suricata 6.0.0 on it and let that run for a while to see what happens.

-

ok, again: clean install, and stopping and restarting the interface leads to a duplicate instance running for some time. It needs about 1 minute to stop the first instance.

Now it's running, let's see how long it lasts.

-

It is very apparent that Suricata 6.0.0 and netmap have problems. Likely the issues are in FreeBSD-12.2/STABLE as I don't immediately see any changes in the C source code for the netmap portion of the Suricata binary.

When I booted up my virtual machine that has Suricata-6.0.0 installed on it, the interface running Inline IPS Mode with the em NIC driver and an e1000 virtual NIC was throwing continuous "netmap buffer full" errors and was totally inaccessible.

I'm applying the latest pfSense-2.5 snapshot to the VM and will test with all the interfaces using Legacy Mode blocking.

When I initially tested the 6.0.0 update, I was using what was a current pfSense-2.5 snapshot at the time. Was a couple of weeks ago I think.

-

@kiokoman said in problems with suricata 6.0.0:

ok, again, clean install, stopping and restarting the interface lead to a duplicate instance running for some time, it needs 1 minute (+/-) to stop the first instance

now it's running, let's see how long it lasts

I was able to reproduce the duplicate instances issue. I'm pretty positive that one is related to GUI code changes. Have not investigated exactly what it is yet. I believe the GUI is updating the icon to "stopped" before the actual process has fully shut down, so if you see the icon change to "stopped" and then immediately click "start" you can wind up with duplicate instances. I tested the "restart" icon and did not notice a duplicate process. But I will do further testing to be sure.
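For anyone who wants to spot this without eyeballing the process list, here is a minimal Python sketch that groups Suricata processes by their `--pidfile` argument and reports any pidfile claimed by more than one PID. The function name `find_duplicate_instances` is made up for illustration, and it assumes `ps aux` output with one process per line, as in the first post.

```python
import re
from collections import defaultdict
from typing import Dict, List

# Matches the --pidfile argument on a Suricata command line.
PIDFILE_RE = re.compile(r"--pidfile\s+(\S+)")


def find_duplicate_instances(ps_output: str) -> Dict[str, List[int]]:
    """Return {pidfile: [pids]} for every pidfile used by more than one process.

    Hypothetical helper: expects `ps aux` style lines where the second
    whitespace-separated field is the PID.
    """
    by_pidfile = defaultdict(list)
    for line in ps_output.splitlines():
        if "/usr/local/bin/suricata" not in line:
            continue  # skips the "grep suricata" line and unrelated processes
        match = PIDFILE_RE.search(line)
        fields = line.split()
        if match is None or len(fields) < 2 or not fields[1].isdigit():
            continue
        by_pidfile[match.group(1)].append(int(fields[1]))
    return {pf: pids for pf, pids in by_pidfile.items() if len(pids) > 1}


# Process list taken from the first post in this thread.
SAMPLE_PS = """\
root 97086 18.0 9.0 788500 747720 - Ss 13:19 92:00.65 /usr/local/bin/suricata -i vmx0 -D -c /usr/local/etc/suricata/suricata_55009_vmx0/suricata.yaml --pidfile /var/run/suricata_vmx055009.pid
root 68543 17.9 6.0 539340 497500 - Ss 13:18 85:08.26 /usr/local/bin/suricata -i pppoe0 -D -c /usr/local/etc/suricata/suricata_3908_pppoe0/suricata.yaml --pidfile /var/run/suricata_pppoe03908.pid
root 57800 16.9 8.4 746424 703284 - Ss 13:19 86:24.80 /usr/local/bin/suricata -i gif0 -D -c /usr/local/etc/suricata/suricata_8584_gif0/suricata.yaml --pidfile /var/run/suricata_gif08584.pid
root 32950 0.0 0.9 105792 75764 - Rs 20:09 0:00.46 /usr/local/bin/suricata -i pppoe0 -D -c /usr/local/etc/suricata/suricata_3908_pppoe0/suricata.yaml --pidfile /var/run/suricata_pppoe03908.pid
"""

print(find_duplicate_instances(SAMPLE_PS))
# {'/var/run/suricata_pppoe03908.pid': [68543, 32950]}
```

On the sample above it flags the two pppoe0 processes, which matches the duplicate reported in the first post.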

-

You are right, for the other interfaces it eventually stops, but not for the pppoe interface.

[2.5.0-DEVELOPMENT][root@pfSense.kiokoman.home]/root: ps aux | grep suricata
root 95762 15.9 2.0 214220 167412 - Ss 21:04 30:47.18 /usr/local/bin/suricata -i pppoe0 -D -c /usr/local/etc/suricata/suricata_43940_pppoe0/suricata.yaml --pidfile /var/run/suricata_pppoe043940.pid
root 90673 0.0 0.0 11264 2508 0 S+ 23:33 0:00.00 grep suricata

I waited 5 minutes to see if the process was killed.

-

This looks more like an issue with PPPoE and Suricata. My test VM still appears to be working. But it's not PPPoE. It is a DHCP WAN and LAN.

I will let the VM run for quite a while and see if it still logs alerts.

-

Okay, I can confirm the loss of alerting capability and the long shutdown times. Something is wrong within the Suricata binary itself I think.

To be sure, I'm going to revert my VM to the suricata-5.0.3 binary and see if everything behaves better then. I have the ability to do that in my test environment.

The long shutdown time is very strange. Suricata almost immediately deletes the PID file in /var/run, so that's why the GUI icon changes so fast. The GUI detects the PID file to know if the process is running or stopped. However, even though the PID file is quickly removed, the actual process hangs around for a lot longer before dying.

Edit: one more data point. I have two interfaces configured with Suricata in that VM. One has zero rules configured besides the default built-in rules. The other interface has a number of rules categories enabled. The interface with just the built-in rules shuts down almost immediately as it should. The interface with more rules enabled is the one taking a long time to shut down.
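To illustrate the PID file point: a check that looks only at the PID file cannot tell "stopped" apart from "PID file removed but process still exiting". Below is a rough Python sketch of a check that looks at both. It is not the package's GUI code (that is PHP); the function names and the idea of remembering the last known PID are assumptions made for the example, and `os.kill(pid, 0)` is used as the usual POSIX way to probe for a process without sending a signal.

```python
import os


def pid_is_alive(pid: int) -> bool:
    """Return True if a process with this PID currently exists (POSIX only).

    Signal 0 performs the existence and permission checks but delivers nothing.
    """
    try:
        os.kill(pid, 0)
    except ProcessLookupError:
        return False
    except PermissionError:
        return True  # the process exists but belongs to another user
    return True


def instance_state(pidfile: str, last_known_pid: int) -> str:
    """Classify an instance from its PID file plus the PID recorded at start."""
    has_pidfile = os.path.exists(pidfile)
    alive = pid_is_alive(last_known_pid)
    if has_pidfile and alive:
        return "running"
    if alive:
        return "stopping"  # PID file already removed, process still around
    if has_pidfile:
        return "stale pidfile"
    return "stopped"
```

With a check like this, a "start" click during the "stopping" state could be refused or delayed, which would avoid launching a second instance while the first one is still shutting down.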

-

I have the problem on the LAN interface (vmx0) too: no alerts after a few minutes.

PPPoE is on igb0 (VLAN 835) via passthrough, ESXi 7.0u1.

When it starts, truss shows a lot of:

mmap(0x0,4096,PROT_READ|PROT_WRITE,MAP_PRIVATE|MAP_ANON,-1,0x0) = 34624663552 (0x80fca7000)
mmap(0x0,4096,PROT_READ|PROT_WRITE,MAP_PRIVATE|MAP_ANON,-1,0x0) = 34624667648 (0x80fca8000)
mmap(0x0,4096,PROT_READ|PROT_WRITE,MAP_PRIVATE|MAP_ANON,-1,0x0) = 34624671744 (0x80fca9000)
mmap(0x0,4096,PROT_READ|PROT_WRITE,MAP_PRIVATE|MAP_ANON,-1,0x0) = 34624675840 (0x80fcaa000)
mmap(0x0,4096,PROT_READ|PROT_WRITE,MAP_PRIVATE|MAP_ANON,-1,0x0) = 34624679936 (0x80fcab000)
mmap(0x0,4096,PROT_READ|PROT_WRITE,MAP_PRIVATE|MAP_ANON,-1,0x0) = 34624684032 (0x80fcac000)
mmap(0x0,4096,PROT_READ|PROT_WRITE,MAP_PRIVATE|MAP_ANON,-1,0x0) = 34624688128 (0x80fcad000)
mmap(0x0,4096,PROT_READ|PROT_WRITE,MAP_PRIVATE|MAP_ANON,-1,0x0) = 34624692224 (0x80fcae000)
mmap(0x0,4096,PROT_READ|PROT_WRITE,MAP_PRIVATE|MAP_ANON,-1,0x0) = 34624696320 (0x80fcaf000)
mmap(0x0,4096,PROT_READ|PROT_WRITE,MAP_PRIVATE|MAP_ANON,-1,0x0) = 34624700416 (0x80fcb0000)
mmap(0x0,4096,PROT_READ|PROT_WRITE,MAP_PRIVATE|MAP_ANON,-1,0x0) = 34624704512 (0x80fcb1000)
munmap(0x819adb000,8192) = 0 (0x0)
munmap(0x81a544000,8192) = 0 (0x0)
munmap(0x819ab2000,8192) = 0 (0x0)
munmap(0x819a81000,8192) = 0 (0x0)
munmap(0x819a68000,8192) = 0 (0x0)
munmap(0x819a77000,8192) = 0 (0x0)
munmap(0x819d19000,4096) = 0 (0x0)
munmap(0x819a57000,8192) = 0 (0x0)
madvise(0x81b3c4000,12288,MADV_FREE) = 0 (0x0)
madvise(0x816811000,12288,MADV_FREE) = 0 (0x0)
madvise(0x819bed000,12288,MADV_FREE) = 0 (0x0)
madvise(0x81b799000,12197888,MADV_FREE) = 0 (0x0)
mmap(0x7fffdfbfc000,2101248,PROT_READ|PROT_WRITE,MAP_STACK,-1,0x0) = 140736947273728 (0x7fffdfbfc000)
mprotect(0x7fffdfbfc000,4096,PROT_NONE) = 0 (0x0)
thr_new(0x7fffffffdd40,0x68) = 0 (0x0)
<new thread 100862>
mmap(0x0,2097152,PROT_READ|PROT_WRITE,MAP_PRIVATE|MAP_ANON,-1,0x0) = 34840248320 (0x81ca40000)
nanosleep({ 0.000100000 }) = 0 (0x0)
munmap(0x81ca40000,2097152) = 0 (0x0)
nanosleep({ 0.000100000 }) = 0 (0x0)
mmap(0x0,4190208,PROT_READ|PROT_WRITE,MAP_PRIVATE|MAP_ANON,-1,0x0) = 34842599424 (0x81cc7e000)
nanosleep({ 0.000100000 }) = 0 (0x0)
munmap(0x81cc7e000,1581056) = 0 (0x0)
nanosleep({ 0.000100000 }) = 0 (0x0)
munmap(0x81d000000,512000) = 0 (0x0)
nanosleep({ 0.000100000 }) = 0 (0x0)
write(1,"\^[[32m6/11/2020 -- 00:31:24\^[["...,148) = 148 (0x94)
nanosleep({ 0.000100000 }) = 0 (0x0)
nanosleep({ 0.000100000 }) = 0 (0x0)
nanosleep({ 0.000100000 }) = 0 (0x0)
nanosleep({ 0.000100000 }) = 0 (0x0)
nanosleep({ 0.000100000 }) = 0 (0x0)
nanosleep({ 0.000100000 }) = 0 (0x0)
write(3,"6/11/2020 -- 00:31:24 - <Notice>"...,119) = 119 (0x77)
nanosleep({ 0.000100000 }) = 0 (0x0)
nanosleep({ 0.000100000 }) = 0 (0x0)
thr_self(0x7fffffffccc0) = 0 (0x0)
nanosleep({ 0.000100000 }) = 0 (0x0)
nanosleep({ 0.000100000 }) = 0 (0x0)
nanosleep({ 0.000100000 }) = 0 (0x0)
nanosleep({ 0.000100000 }) = 0 (0x0)
nanosleep({ 0.000100000 }) = 0 (0x0)
nanosleep({ 0.000100000 }) = 0 (0x0)
nanosleep({ 0.000100000 }) = 0 (0x0)
__sysctl("kern.hostname",2,0x7fffffffcb80,0x7fffffffbe28,0x0,0) = 0 (0x0)
nanosleep({ 0.000100000 }) = 0 (0x0)
nanosleep({ 0.000100000 }) = 0 (0x0)
nanosleep({ 0.000100000 }) = 0 (0x0)
nanosleep({ 0.000100000 }) = 0 (0x0)
getpid() = 55820 (0xda0c)
nanosleep({ 0.000100000 }) = 0 (0x0)
nanosleep({ 0.000100000 }) = 0 (0x0)
nanosleep({ 0.000100000 }) = 0 (0x0)
nanosleep({ 0.000100000 }) = 0 (0x0)
nanosleep({ 0.000100000 }) = 0 (0x0)
nanosleep({ 0.000100000 }) = 0 (0x0)
sendto(4,"<141>1 2020-11-06T00:31:24.88355"...,184,0,NULL,0) = 184 (0xb8)
nanosleep({ 0.000100000 }) = 0 (0x0)
nanosleep({ 0.000100000 }) = 0 (0x0)
nanosleep({ 0.000100000 }) = 0 (0x0)
sigprocmask(SIG_SETMASK,{ SIGHUP|SIGINT|SIGQUIT|SIGILL|SIGTRAP|SIGABRT|SIGEMT|SIGFPE|SIGKILL|SIGBUS|SIGSEGV|SIGSYS|SIGPIPE|SIGALRM|SIGTERM|SIGURG|SIGSTOP|SIGTSTP|SIGCONT|SIGCHLD|SIGTTIN|SIGTTOU|SIGIO|SIGXCPU|SIGXFSZ|SIGVTALRM|SIGPROF|SI>
nanosleep({ 0.000100000 }) = 0 (0x0)
sigaction(SIGUSR2,{ 0x800a57140 SA_SIGINFO ss_t },{ SIG_IGN 0x0 ss_t }) = 0 (0x0)
sigprocmask(SIG_SETMASK,{ SIGUSR2 },0x0) = 0 (0x0)
sigprocmask(SIG_UNBLOCK,{ SIGUSR2 },0x0) = 0 (0x0)
nanosleep({ 0.000100000 }) = 0 (0x0)
nanosleep({ 0.000100000 }) = 0 (0x0)
nanosleep({ 0.000100000 }) = 0 (0x0)
nanosleep({ 0.000100000 }) = 0 (0x0)
nanosleep({ 0.000100000 }) = 0 (0x0)
nanosleep({ 0.000100000 }) = 0 (0x0)
nanosleep({ 0.000100000 }) = 0 (0x0)
nanosleep({ 0.000100000 }) = 0 (0x0)
nanosleep({ 0.000100000 }) = 0 (0x0)

After this you only get nanosleep and no more alerts come in.
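If it helps with comparing traces, here is a small Python sketch that tallies syscall names in a saved truss capture and flags a window that is almost entirely `nanosleep`. It assumes the trace has been saved with one call per line, the way truss normally prints it. The names `syscall_counts` and `looks_idle` and the 95% threshold are arbitrary choices for the example, not anything from Suricata or truss.

```python
import re
from collections import Counter

# A truss line starts with the syscall name followed by "(".
SYSCALL_RE = re.compile(r"^\s*([A-Za-z_][A-Za-z0-9_]*)\(")


def syscall_counts(truss_lines):
    """Count how often each syscall name appears in the given truss lines."""
    counts = Counter()
    for line in truss_lines:
        match = SYSCALL_RE.match(line)
        if match:  # lines such as "<new thread 100862>" are ignored
            counts[match.group(1)] += 1
    return counts


def looks_idle(truss_lines, threshold=0.95):
    """Return True if at least `threshold` of the calls are nanosleep."""
    counts = syscall_counts(truss_lines)
    total = sum(counts.values())
    return total > 0 and counts["nanosleep"] / total >= threshold


startup = [
    "mmap(0x0,4096,PROT_READ|PROT_WRITE,MAP_PRIVATE|MAP_ANON,-1,0x0) = 34624663552 (0x80fca7000)",
    "munmap(0x819adb000,8192) = 0 (0x0)",
    "<new thread 100862>",
    "nanosleep({ 0.000100000 }) = 0 (0x0)",
]
stalled = ["nanosleep({ 0.000100000 }) = 0 (0x0)"] * 15

print(looks_idle(startup))  # False
print(looks_idle(stalled))  # True
```

A nanosleep-only window is only a hint. This trace may well cover just one thread's idle loop, so it does not by itself prove that the packet threads have stopped.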

-

I've just compiled and loaded the 5.0.3 binary on my VM. Will test with that. I'm thinking Suricata 6.0.0 and possibly even 5.0.4 are broken within the binary. The first time I stopped Suricata on an interface it stopped after a long time. The last time, in preparation for installing the 5.0.3 binary, it hung up in a kernel spin lock and I had to reboot the firewall to get rid of it!

-

The 5.0.3 binary is installed and running, but with the 6.0.0 package GUI code. So the few small GUI bug fixes are still in place, but the suricata binary is reverted to the older version. Will let this combo run for a while and make sure alerting still works.

Tested stopping an interface with rules defined and it worked properly. Suricata immediately shut down and the process disappeared as it should. No duplicate process created when restarting.

Update: tested alerts after 30 minutes of runtime and still working fine.
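A check like this can be automated by looking at how long ago the alert log was last written. The Python sketch below does that. The function names and the 30-minute default are assumptions for illustration, and the log path in the usage note is hypothetical (use whatever alert log your interface actually writes). Keep in mind that a quiet network also produces no alerts, so a stale log is a prompt to look closer, not proof of a hang.

```python
import os
import time


def seconds_since_last_write(path, now=None):
    """Return how many seconds ago the file at `path` was last modified."""
    now = time.time() if now is None else now
    return now - os.path.getmtime(path)


def alerts_stalled(path, max_quiet_seconds=1800, now=None):
    """Return True if the log has not been written for longer than the limit."""
    return seconds_since_last_write(path, now) > max_quiet_seconds
```

Usage would be something like `alerts_stalled("/path/to/alerts.log")` run from cron, with the path replaced by the real log location for the interface.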

-

With 5.0.3 or with 6.0.0?

I'm still doing some tests with 6.0.0, and I get a long run of alerts if I disable all Logging Settings.

Nope, still not working, false alarm.

Out of curiosity I tried Inline Mode against igb0 and had the same problem as in the other thread: the WAN goes offline as soon as truss shows "nanosleep".

Inline Mode stops working, nothing is logged anymore, and it kills the WAN.

Legacy Mode stops working and nothing is logged anymore, but the WAN keeps working.

-

@kiokoman said in problems with suricata 6.0.0:

with 5.0.3 or with 6.0.0 ?

i'm still doing some test with 6 and i have a long run of alert if i disable all Logging Settings

nope still not working, false alarm

i tried out of curiosity inline mode against igb0 and i had the same problem of the other thread, wan goes offline as soon as truss show "nanosleep"

I installed 5.0.3 on my test VM. I have my own private pfSense packages repository server, so I can build any variation of binary and GUI package versions I need to. So I built the 5.0.3 binary and paired it with the 6.0.0 PHP GUI code. I wanted to prove to myself the issues were within the 6.0.0 binary, and I'm now pretty sure they are.

The GUI serves no function with regards to blocking and alerting. All of that happens in the binary piece. The GUI simply generates the suricata.yaml conf file for the interface and copies the rules file to a specified directory. It has nothing to do with detection and actual alerting.

-

yeah i'm with you, the problem is the binary