Some news about upcoming Suricata updates

-

Some exciting new features are coming soon to the Suricata package. These features will be released first into the Development Snapshots branch of pfSense CE and pfSense+. After sufficient time for testing, they will be migrated over to the Release branches.

If you are a Snapshots user/tester, and you have the Suricata package installed, please install these new versions as they come out over the next several days and help us test them.

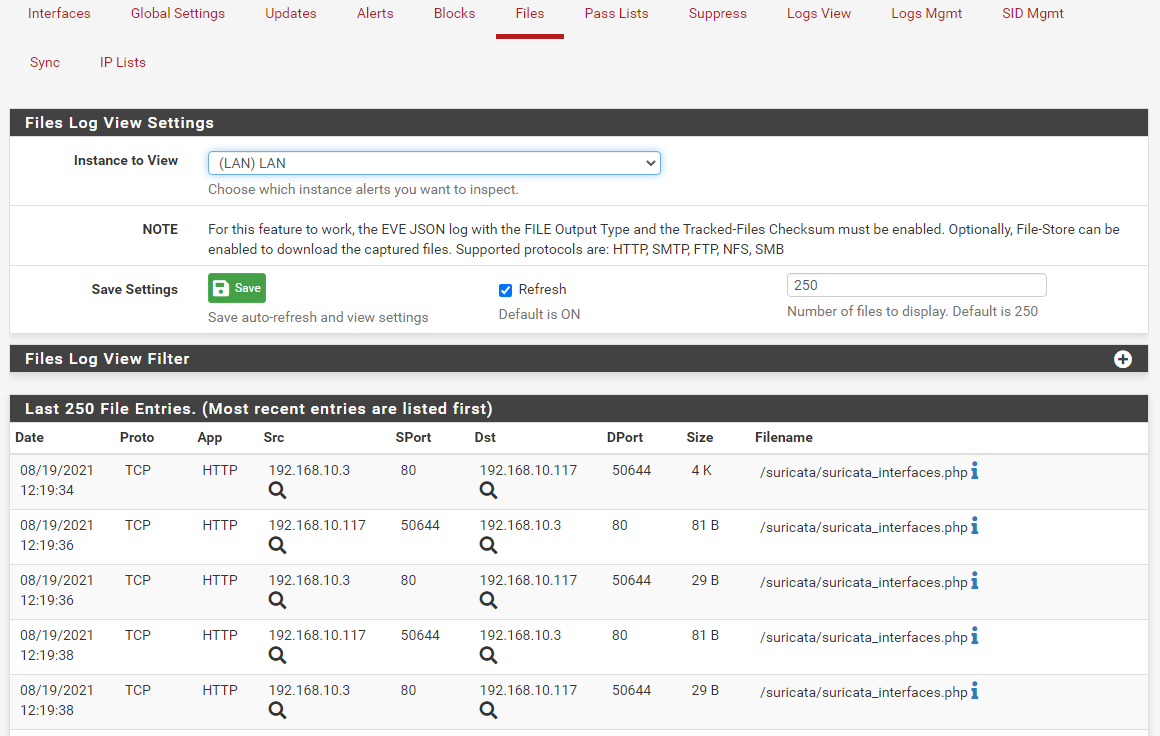

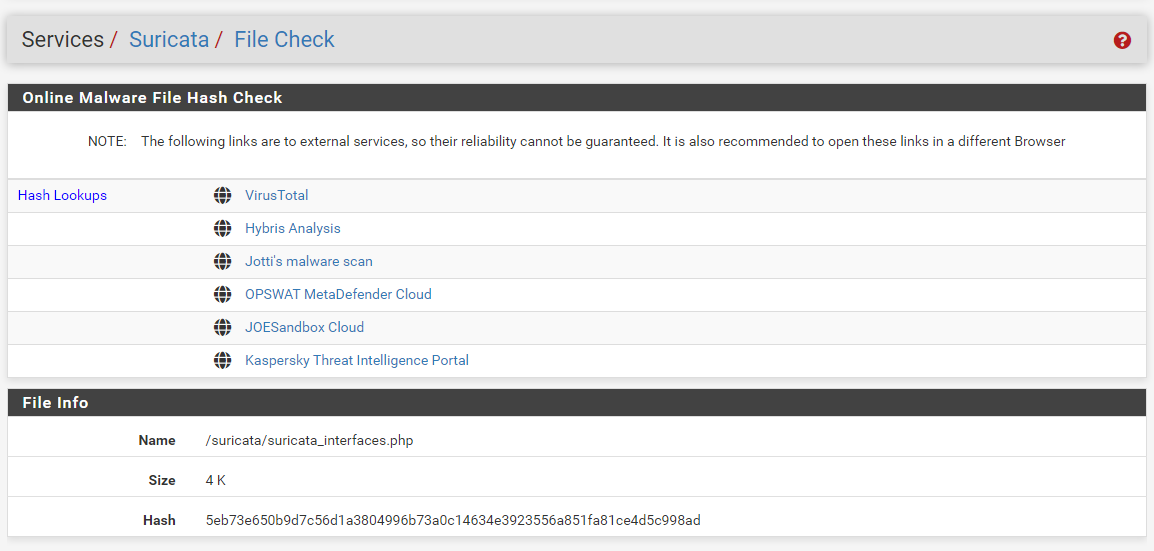

Once again courtesy of Netgate developer @viktor_g, a cool new feature associated with the File Store option in Suricata will be added. A new FILES tab will allow you to browse the captured files Suricata grabs and stores when you enable the File Store option on the INTERFACE SETTINGS tab. This new tab will work much like the current ALERTS tab does for alerts, except instead of alerts you will see a table of captured file names and associated data listed on each row of the table. On the right-hand end of a row is an Info icon. Clicking that icon takes you to a new page where the hash of the captured file can be sent to a number of web-based file checker databases such as VirusTotal and others for checking. This allows the admin to easily test a captured file hash against known bad file hashes.

Here is a screenshot from one of my test systems. The files shown were captured as I browsed the LAN side interface of the firewall from a workstation on my local network. So the filenames shown are actually some of the Suricata package PHP source files. The 192.168.10.3 box was my test pfSense firewall, and the 192.168.10.117 box was my Windows 10 workstation. For simplicity in my closed test environment, the firewall is using HTTP for the admin web portal.

Clicking the blue Info icon (the large "i") on the right end of a row will open the File Check page. On this page I could test the hash against known malware hashes stored by the web sites listed at the top of the screen capture below.

The other exciting Suricata package news is buried deeper down in the underlying Suricata binary which does the actual work of inspecting traffic against the rule signatures. Due to a longstanding issue with the Suricata 6.x binary branch and FreeBSD, I've limited the Suricata binary used in pfSense to the 5.x branch. Currently we are using version 5.0.6 of the binary. I've been working for about the last four weeks very closely with some of the upstream Suricata team to find the cause of the 6.x issue on FreeBSD. The cause has finally been located and fixed, so now the 6.x binary branch works just fine on FreeBSD (and thus also on pfSense). So very soon the binary on pfSense will be updated to 6.0.3.

At the same time I've been working on the 6.x binary problem, I have also been deep-diving into the internals of the netmap kernel device used with the Inline IPS Mode in Suricata. I've learned quite a bit about netmap over the last couple of months, and that helped me realize, while studying the binary's source code, that the Suricata binary had some issues with its netmap support. Working in cooperation with the Suricata team upstream, I think we have corrected these problems. So hopefully that translates into Inline IPS Mode operation that is much more stable.

And the biggest netmap news is that we are bringing full support of multi-queue NICs with true multithreading to Inline IPS Mode operation. This should result in a large performance improvement when using Inline IPS Mode with netmap on high core-count processors and with multiple RSS queue NICs (for example, those having 4 or even 8 TX/RX queues exposed for netmap operation). The changes coming in Suricata will allow it to automatically query the NIC for the supported number of RX/TX queues, and then Suricata will ask netmap to create a corresponding number of netmap host stack rings. Suricata will then bind a separate packet receive thread to each pair of NIC and corresponding host stack rings.

This means you should see Suricata really start to shine with its multithreaded nature. Formerly, when using Inline IPS Mode, due to limitations in the older Suricata binary, only a single pair of host stack rings was ever used, no matter how many rings the NIC might offer. And the older Suricata only ever opened a single packet receive thread (because there was only a single pair of host rings to send data to anyway). Early testing at another site by the Suricata team indicated a very nice bump in IPS throughput with the new multi-queue code when used with a high performance NIC.

-

@bmeeks said in Some news about upcoming Suricata updates:

multiple RSS queue NICs (for example, those having 4 or even 8 TX/RX queues exposed for netmap operation)

Thanks for the promising news.

Do you happen to know if this NIC attribute exists in any Netgate appliance(s) -- or would this only be applicable for folks who build their device(s)?

-

Would it also mean that possibly more NICs are supported in inline mode (e.g. hn) or does this stay the same?

Great, exciting news anyways.

-

@flyzipper said in Some news about upcoming Suricata updates:

@bmeeks said in Some news about upcoming Suricata updates:

multiple RSS queue NICs (for example, those having 4 or even 8 TX/RX queues exposed for netmap operation)

Thanks for the promising news.

Do you happen to know if this NIC attribute exists in any Netgate appliance(s) -- or would this only be applicable for folks who build their device(s)?

I believe most of their appliances have NICs that expose multiple queues. You can examine the `dmesg.boot` log in `/var/log` on your box to find out. During boot-up, the NICs log a message about the number of netmap RX/TX queues they expose. My SG-5100 is logging 4 RX/TX netmap queues per NIC port.

-

@bob-dig said in Some news about upcoming Suricata updates:

Would it also mean that possibly more NICs are supported in inline mode (e.g. hn) or does this stay the same?

Great, exciting news anyways.

No, for the moment the type of supported NICs is not changing. That is a FreeBSD thing. But, with the move to the `iflib` wrapper for NIC driver development and the fact the wrapper now bakes in netmap support, as time progresses, more and more NIC drivers should come under the netmap-supported umbrella as they are rewritten to use the `iflib` wrapper API.

-

@bmeeks VmxNet3 is really important here.....

-

@cool_corona said in Some news about upcoming Suricata updates:

@bmeeks VmxNet3 is really important here.....

I don't understand your post -- the vmx driver in FreeBSD supports netmap operation, and it is the vmx driver in FreeBSD that handles the vmxnet3 protocol with ESXi and other VMware products. The vmx driver is a supported netmap option in the latest Suricata package.

To use in an ESXi virtual machine, you simply put this in the VM's configuration file:

`ethernet0.virtualDev = "vmxnet3"`

Here is a link to the official FreeBSD documentation on the driver: https://www.freebsd.org/cgi/man.cgi?query=vmx&apropos=0&sektion=4&manpath=FreeBSD+12.2-stable&arch=default&format=html.

Only ESXi will provide multi-queue support for the vmxnet3 driver, though. So any other VMware platform is going to only expose a single virtual NIC ring pair.

-

@bmeeks So now you can run Inline IPS mode on VMXnet3 adapter with logs and alerts?

-

@cool_corona said in Some news about upcoming Suricata updates:

@bmeeks Just for your info Bill.

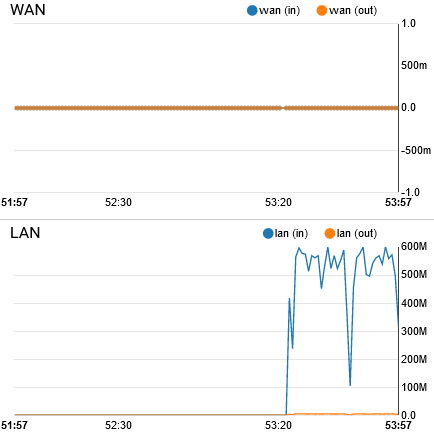

When running Inline IPS the WAN graph doesn't update.

That is always going to be the case no matter what NIC you have. This is a problem within FreeBSD itself and `iflib`. When in netmap mode, the `iflib` code does not update certain counters. This is not something Suricata even sees, so it can't do anything about it. I think there may be an `iflib` patch that fixes this, and if so, hopefully it eventually makes it into a future FreeBSD update in pfSense.

-

@cool_corona said in Some news about upcoming Suricata updates:

@bmeeks So now you can run Inline IPS mode on VMXnet3 adapter with logs and alerts?

It should work fine now using the latest package (at least the latest pfSense Snapshots package).

-

@bmeeks

Thank you for the extended documentation of your work. The changes are available with today's Snapshot.

Only one question relating to this: https://redmine.openinfosecfoundation.org/issues/4478

I see they've bumped the fix version to 7.0rc1.

Did you include the fix in 6.0.3 binary? Is it the same fix Victor Julien is talking about?

-

@nrgia said in Some news about upcoming Suricata updates:

@bmeeks

Thank you for the extended documentation of your work. The changes are available with today's Snapshot.

Only one question relating to this: https://redmine.openinfosecfoundation.org/issues/4478

I see they've bumped the fix version to 7.0rc1.

Did you include the fix in 6.0.3 binary? Is it the same fix Victor Julien is talking about?

Yes, I included two fixes actually in the 6.0.3 update for pfSense. Those patches will be removed once both make it into Suricata RELEASE upstream (whether that is 6.0.4 or 7.0). The two patches are the fix for the kernel lock "hang" that's discussed in the Suricata Redmine issue; and the new netmap multiple host stack rings support. That second feature is not documented in the Suricata Redmine issue, but it is something they are working towards using my patch as the base. I had contacted the Suricata team via email about both issues (the kernel lock hang and the potential performance improvement by rewriting the netmap support in Suricata).

-

Thank you for your response, for people like you, some of us are still using pfSense.

One more question if I may...

Regarding this: https://forum.netgate.com/topic/164314/inline-ips-can-t-increase-threads/8

In my case I have changed the Netmap Threads to 3 and I see an increase in throughput.

With this release, should I switch back to Auto in order to test how Suricata will behave, or it's not related?

Thanks again

-

@nrgia said in Some news about upcoming Suricata updates:

Thank you for your response, for people like you, some of us are still using pfSense.

One more question if I may...

Regarding this: https://forum.netgate.com/topic/164314/inline-ips-can-t-increase-threads/8

In my case I have changed the Netmap Threads to 3 and I see an increase in throughput.

With this release, should I switch back to Auto in order to test how Suricata will behave, or it's not related?

Thanks again

Yes, I would recommend swapping to "auto" again when the new update is posted.

Right now, Suricata is only using a single pair of host stack rings with netmap. That's because the older netmap API only supported a single pair (RX/TX) of host stack rings. That means no matter how many queues your NIC might have, only a single Suricata thread is reading from the host stack side because there was only a single ring pair to use. That creates a bottleneck. In the current Suricata binary, when threads is "auto" and one end of the netmap connection is the host stack, then only a single packet capture thread is launched. Putting a numeric value in the box instead will tell Suricata to launch that specific number of threads for reading from the NIC side. That can help a little, but you still have a host stack bottleneck because of the single host stack ring pair.

The host stack side of netmap is the connection to the kernel. So to understand this better, visualize netmap as a pipe between the NIC hardware and the FreeBSD kernel network stack. In netmap operation, the NIC is actually unhooked from the kernel completely. One end of the netmap connection is to your physical NIC (so em0, for example). The other end, the way we configure IPS in pfSense, is the host stack (or FreeBSD kernel network connection). That is denoted in the netmap configuration as em0^ (with a caret suffix). So now Suricata (via netmap using shared memory buffers) is 100% responsible for reading data from the NIC and sending it to pfSense, and vice versa (reading from the pfSense kernel and sending it to the NIC). That's why if the netmap connection dies or screws up, network traffic on the interface stops completely. Killing netmap operation tells the kernel to restore the original direct-connection pathway between NIC and pfSense kernel.

Starting with the most recent update to the netmap API (version 14), which went into FreeBSD 12 and higher, you can tell netmap to open as many host stack rings as you want. The optimum number is usually to match what the physical NIC side of the connection exposes (that number is determined by the NIC hardware). By matching the number of rings on the host stack with queues on the NIC, you can then launch a dedicated packet I/O thread per ring pair (RX and TX). These packet acquisition threads can be spread across available CPU cores, and thus potentially increase throughput quite dramatically if you have high power hardware.
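To make the "one thread per ring pair" idea concrete, here is a toy Python sketch. It is not Suricata or netmap code: the rings are plain `queue.Queue` objects, the function names are made up for illustration, and packets are spread round-robin across the NIC rings (a real NIC would distribute them by its own hardware hashing). It only shows that each NIC ring gets its own matching host stack ring and its own forwarding thread, so no two threads ever touch the same ring.

```python
import queue
import threading

_STOP = object()  # sentinel that tells a forwarding thread to exit


def _forward(nic_ring, host_ring):
    """Move packets from one NIC ring to its paired host stack ring."""
    while True:
        pkt = nic_ring.get()
        if pkt is _STOP:
            return
        host_ring.put(pkt)


def run_ring_pairs(num_queues, packets):
    """Forward packets using one dedicated thread per NIC/host ring pair.

    Returns the contents of each host stack ring, in ring order.
    """
    nic_rings = [queue.Queue() for _ in range(num_queues)]
    host_rings = [queue.Queue() for _ in range(num_queues)]  # same count as NIC queues

    threads = [
        threading.Thread(target=_forward, args=(nic_rings[i], host_rings[i]))
        for i in range(num_queues)
    ]
    for t in threads:
        t.start()

    # Illustrative assumption: round-robin distribution across NIC rings.
    for i, pkt in enumerate(packets):
        nic_rings[i % num_queues].put(pkt)
    for ring in nic_rings:
        ring.put(_STOP)
    for t in threads:
        t.join()

    result = []
    for ring in host_rings:
        items = []
        while not ring.empty():
            items.append(ring.get_nowait())
        result.append(items)
    return result
```

Calling `run_ring_pairs(1, packets)` models the old single host ring pair setup, where everything funnels through one thread; a larger `num_queues` models the new behavior.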

A similar thing happens on the other side of the packet analysis process. When using "autofp" runmode in Suricata, you have a variable number of RX threads reading packets off the wire and sending them to the signature analysis engine packet queue. A totally separate process then launches worker threads ("W#xx") that are responsible for pulling packets from that queue and running them through the signatures. When the run through signatures is done (detect and analysis is finished), the packet is sent via a callback function to the netmap code to be either written out to the original destination, or dropped if the analysis engine determined the packet should be dropped. So now, with multiple host stack rings available, you have greater efficiency from those worker threads as they can write to the host stack concurrently (one thread per host stack ring pair). Formerly, you had multiple worker threads all contending for the same single pair of host stack rings. Thus a bottleneck, and also a source of some of the weird netmap errors such as `netmap_ring_reinit()`. The errors happen if one thread moves buffer pointers while another thread was sleeping (concurrent access). There was no locking. That is fixed in my patch, so hopefully some of the netmap weirdness seen in the past is history.

Runmode "workers" in Suricata changes the threading model. In "workers" mode, each launched thread is responsible for the entire life-cycle of a packet. It processes the packet all the way from receiving it off the wire, analyzing it in the signature engine, to then either writing it out to the destination or dropping it. Again, multiple host stack rings will help here with performance. You can swap runmodes on the INTERFACE SETTINGS tab. A particularly bothersome bug was fixed in Suricata 6.x with the "workers" runmode. In version 5.x, running "workers" mode will cause a ton of log spam with "packet seen on wrong thread" messages. So I would not enable that mode in the current Suricata package which is based on the 5.0.6 binary. Wait for this coming update to 6.0.3 before experimenting with runmode "workers".

-

@bmeeks said in Some news about upcoming Suricata updates:

A particularly bothersome bug was fixed in Suricata 6.x with the "workers" runmode. In version 5.x, running "workers" mode will cause a ton of log spam with "packet seen on wrong thread" messages. So I would not enable that mode in the current Suricata package which is based on the 5.0.6 binary. Wait for this coming update to 6.0.3 before experimenting with runmode "workers".

Actually in Workers mode I got more throughput in comparison with Autofp. I ran Suricata like this for a year or so. I will test in both running modes in 6.0.3 and compare the results.

Also it's a good idea that what you summarized here about Suricata workflow should be made sticky.

Much obliged

-

@nrgia said in Some news about upcoming Suricata updates:

@bmeeks said in Some news about upcoming Suricata updates:

A particularly bothersome bug was fixed in Suricata 6.x with the "workers" runmode. In version 5.x, running "workers" mode will cause a ton of log spam with "packet seen on wrong thread" messages. So I would not enable that mode in the current Suricata package which is based on the 5.0.6 binary. Wait for this coming update to 6.0.3 before experimenting with runmode "workers".

Actually in Workers mode I got more throughput in comparison with Autofp. I ran Suricata like this for a year or so. I will test in both running modes in 6.0.3 and compare the results.

Also it's a good idea that what you summarized here about Suricata workflow should be made sticky.

Much obliged

"workers" mode is suggested for the highest performance, but it always gave me tons of "packet seen on wrong thread" errors in my testing on virtual machines. This was also reported by others on the web. The "why" had a lot of complicated explanations, and there is a very long thread on the Suricata Redmine site about that particular logged message. It also showed up in stats when they were enabled. This error message disappeared for me with the 6.x binary branch. During testing of the recent bug fix and netmap change, I could readily reproduce the "packet seen on wrong thread" message by simply swapping out the binary from 6.0.3 to 5.0.6 without changing another thing. That convinced me it was something in the older 5.x code.

"autofp" mode, which means auto flow-pinned, is the default mode. It offers reasonably good performance on most setups. But if you have a high core-count CPU, then "workers" will likely outperform "autofp". You can choose either mode in the pfSense Suricata package and experiment. Which mode works best is very much hardware and system setup dependent. So it's not a one-size-fits-all scenario. Experimentation is required to see which works best in a given setup.
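For anyone wondering what "flow-pinned" means in practice, here is a minimal Python sketch of the general idea: every packet of a given flow is handed to the same worker thread. This is only an illustration. The function name is hypothetical, and CRC32 over a sorted 5-tuple is simply a convenient stand-in, not the hash Suricata actually uses.

```python
import zlib


def pick_worker(src_ip, src_port, dst_ip, dst_port, proto, num_workers):
    """Map a flow's 5-tuple to a worker index (illustrative, not Suricata's hash)."""
    # Sort the two endpoints so both directions of a flow yield the same key.
    first, second = sorted([(src_ip, src_port), (dst_ip, dst_port)])
    key = f"{proto}|{first[0]}:{first[1]}|{second[0]}:{second[1]}".encode()
    # CRC32 is deterministic across runs, unlike Python's built-in hash() for strings.
    return zlib.crc32(key) % num_workers
```

With this kind of pinning, the request and the reply of one connection always land on the same worker, which is what lets a worker keep per-flow state without sharing it with other threads.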

For very advanced users who want to tinker, there are several CPU Affinity settings and other tuning parameters exposed in the `suricata.yaml` file. If tinkering there, I would do so only in a lab environment initially. And remember that on pfSense, the `suricata.yaml` file for an interface is re-created from scratch each time you save a change in the GUI or start/restart a Suricata instance from the GUI. So to make permanent edits, you actually would need to change the settings in the `/usr/local/pkg/suricata/suricata_yaml_template.inc` file. The actual `suricata.yaml` file for each interface is built from the template information in that file.

-

For those of us currently running Snort on capable multi-core hardware, would these enhancements be a good enough reason to start thinking about switching over to Suricata? It sounds like Suricata will have the opportunity to potentially significantly outperform Snort in inline IPS mode. Thanks in advance for your thoughts on this.

-

@tman222 said in Some news about upcoming Suricata updates:

For those of us currently running Snort on capable multi-core hardware, would these enhancements be a good enough reason to start thinking about switching over to Suricata? It sounds like Suricata will have the opportunity to potentially significantly outperform Snort in inline IPS mode. Thanks in advance for your thoughts on this.

My opinion is that it's highly dependent on the traffic load. Probably up to about 1 Gigabit/sec or so, there would not be much difference. As you approach 10 Gigabits/sec, then I would say most certainly so. Of course the number and type of rule signatures enabled plays a huge role in performance. So there is also that variable to consider.

Snort today, when using Inline IPS Mode, already has the same netmap patch that I put into Suricata. In fact, I originally wrote that new code for the Snort DAQ. But Snort itself is only a single-threaded application, so the impact of multi-queue support in the Snort DAQ was minimal in terms of performance.

As many have pointed out, Snort3 is multithreaded. So Snort3 could benefit from the multiple host ring support. Unfortunately, Snort3 uses a new data acquisition library called `libdaq`. Although I contributed it to them quite some time ago (actually about two years or more), and they said they would, the upstream Snort team has not yet included the multi-queue and host stack endpoint netmap patch I submitted in the new `libdaq` library. As a result, `libdaq` does not currently support host stack endpoints for netmap. So you can't use it for IPS mode currently on pfSense unless you configure it to use two physical NIC ports for the IN and OUT pathways and bridge between them. It can't work like Snort2 is working using one NIC port and the kernel host stack as the two netmap endpoints.

-

I did some initial speed tests as follows:

Tested on a 1 Gbps down / 500 Mbps up line

pfSense Test Rig

https://www.supermicro.com/en/products/system/Mini-ITX/SYS-E300-9A-4C.cfm

Service used: speedtest.net

NIC Chipset - Intel X553 1Gbps

Dmesg info:

    ix0: netmap queues/slots: TX 4/2048, RX 4/2048
    ix0: eTrack 0x80000567
    ix0: Ethernet address: ac:1f:*:*:*:*
    ix0: allocated for 4 rx queues
    ix0: allocated for 4 queues
    ix0: Using MSI-X interrupts with 5 vectors
    ix0: Using 4 RX queues 4 TX queues
    ix0: Using 2048 TX descriptors and 2048 RX descriptors
    ix0: <Intel(R) X553 (1GbE)>

Results:

Suricata 5.0.6

3 threads - workers mode

Dwn 374.79 - Up 439.38

Suricata 6.0.3

auto threads - workers mode

Dwn 410.19 - Up 380.12

3 threads - workers mode

Dwn 415.47 - Up 436.63

2 threads - workers mode

Dwn 419.27 - Up 458.21

auto threads - AutoFp mode

Dwn 376.13 - Up 358.58

3 threads - AutoFp mode

Dwn 416.20 - Up 456.34

2 threads - AutoFp mode

Dwn 418.61 - Up 446.36

Please note that if the IPS mode (netmap) is disabled, this configuration can obtain the full line speed.
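If you want to check the queue counts on your own box without reading the whole boot log by eye, a small Python sketch like the one below can pull them out. It assumes the driver logs a line in the same `netmap queues/slots` shape as the `ix0` line in the dmesg output above; other drivers may word it differently, and the function name is just illustrative.

```python
import re

# Matches lines shaped like: "ix0: netmap queues/slots: TX 4/2048, RX 4/2048"
NETMAP_LINE = re.compile(
    r"^(?P<nic>\w+): netmap queues/slots: "
    r"TX (?P<tx>\d+)/(?P<tx_slots>\d+), RX (?P<rx>\d+)/(?P<rx_slots>\d+)"
)


def netmap_queues(dmesg_text):
    """Return {nic_name: (tx_queues, rx_queues)} for each netmap line found."""
    found = {}
    for line in dmesg_text.splitlines():
        match = NETMAP_LINE.match(line.strip())
        if match:
            found[match.group("nic")] = (int(match.group("tx")), int(match.group("rx")))
    return found


def netmap_queues_from_boot_log(path="/var/log/dmesg.boot"):
    """Convenience wrapper: read the boot log from disk and parse it."""
    with open(path, "r", errors="replace") as handle:
        return netmap_queues(handle.read())
```

On the test rig above, `netmap_queues_from_boot_log()` would be expected to report 4 TX and 4 RX queues for `ix0`, matching the dmesg line.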