Suricata Inline IPS blocks LAN

-

Hi

I have a PC with an Intel(R) PRO/1000 Network Connection dual-port gigabit card that takes down the LAN when certain traffic hits the interface while Suricata is running in inline mode. If Suricata is stopped, everything works. The card shows up as em0/em1, so it should be compatible, right? But I've found that the driver for it appears to be: mlx5en: Mellanox Ethernet driver 3.5.2 (September 2019).

Is this normal? If so, why does the connection crash? I found no errors in any of the logs I could find; the interface just stops responding. The only way I could log into the box was through OpenVPN over the WAN interface, so I could stop Suricata.

Please help

Thanks

-

I went back to Snort, and it doesn't seem to have this problem, at least not in the tests I've run (the same ones I ran with Suricata).

But Snort's performance is lower than Suricata's.

Any solution? Any ideas on what I should try, or which logs to check?

Thank you

-

What version of the Suricata package are you running? The current version is 6.0.3_3.

Can you be more specific than "crashes the LAN when some traffic hits the interface"?

- Crashes how specifically? Does pfSense itself crash or hang?

- Does the LAN interface stop passing all traffic?

- Does this happen if any type of traffic passes through the interface, or just some specific type of traffic (only ICMP, only UDP, only TCP, etc.)?

- Is this problem caused only when specific rules trigger? If so, what are those rules (check the ALERTS tab).

- What messages, if any, are printed in the pfSense system log?

- Have you checked the suricata.log file for the LAN interface to see what is logged in there (access this under the LOGS VIEW tab)?

-


Hi,

Thanks for the reply.

Yes, it is the latest Suricata.

Sorry about the bad description. By "crash" I mean it stops all traffic on the LAN interface, including traffic to pfSense itself.

No rules trigger; I've even disabled all the downloaded categories.

No messages, and nothing in suricata.log or the system log.

One thing I tested with is downloading a big 2 GB Ubuntu torrent. It starts downloading and reaches high speed (my connection is limited to 500 Mb/s), then after an arbitrary interval ranging from 2 s to 1 min the interface stops responding.

Thanks

-

That sounds an awful lot like the old flow manager "stuck thread" bug, but the patch for that is included with the latest pfSense Suricata binary (or at least it is supposed to be included).

If you can get access to the console when the LAN interface hang occurs, execute this series of commands for me:

ps -ax | grep suricata

The above command will print the process IDs (PIDs) of all running Suricata processes. Find the PID of the Suricata instance on your LAN and then run this command, replacing <pid> with the PID of the Suricata instance on your LAN interface:

procstat -t <pid>

I would like to see the full output of that last command. I'm looking to see whether any threads are showing as kernel-locked.
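If the console is reachable during a hang, the two steps above can be combined. This is a minimal sketch, assuming a POSIX shell on pfSense/FreeBSD, that `pgrep` is available, and that `procstat -t` prints a header followed by one row per thread in the column order PID TID COMM TDNAME CPU PRI STATE WCHAN. The function names `find_locked_threads` and `check_suricata_threads` are hypothetical helpers, not part of pfSense or Suricata.

```shell
#!/bin/sh
# Hypothetical helpers for spotting lock-blocked Suricata threads.
# Assumes procstat -t output: one header line, then one whitespace-separated
# row per thread with columns PID TID COMM TDNAME CPU PRI STATE WCHAN.

# Read procstat -t output on stdin; print TID, thread name and wait channel
# for every thread whose STATE column is "lock".
find_locked_threads() {
    awk 'NR > 1 && $7 == "lock" { print $2, $4, $8 }'
}

# Run procstat -t for every process named exactly "suricata" and report
# any threads that are blocked on a kernel lock.
check_suricata_threads() {
    pids=$(pgrep -x suricata) || { echo "no suricata process found"; return 1; }
    for pid in $pids; do
        locked=$(procstat -t "$pid" | find_locked_threads)
        if [ -n "$locked" ]; then
            echo "PID $pid has lock-blocked threads (TID TDNAME WCHAN):"
            echo "$locked"
        else
            echo "PID $pid: no threads in the lock state"
        fi
    done
}
```

After sourcing the file, `check_suricata_threads` prints one line per Suricata process; an empty "lock" report does not by itself rule out other causes of the hang.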

Another thing you can try is to run the LAN interface in "workers" threading mode instead of "autofp". That setting can be changed on the INTERFACE SETTINGS tab.
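To confirm which threading mode an instance actually started with, one option is to look at its generated configuration file. This is a minimal sketch, assuming the GUI setting ends up as a top-level `runmode:` key in the interface's suricata.yaml; the example path follows the one visible in the `ps` output later in this thread, and its numeric part differs per install. `show_runmode` is a hypothetical helper.

```shell
#!/bin/sh
# Print the active (uncommented) runmode line from a Suricata YAML file.
# Assumption: the setting is stored as a top-level "runmode:" key.
show_runmode() {
    yaml=$1
    if [ ! -r "$yaml" ]; then
        echo "cannot read $yaml" >&2
        return 1
    fi
    grep -E '^runmode:' "$yaml"
}

# Example (path is install-specific):
# show_runmode /usr/local/etc/suricata/suricata_16921_em1/suricata.yaml
```

A result of `runmode: workers` or `runmode: autofp` would show which mode the running configuration requested.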

Of course if you have removed Suricata and installed Snort, the above steps cannot be performed.

Another helpful piece of info would be the full suricata.log file from the interface. Some useful info is logged there during startup of the Suricata instance.

-

I also found a few Google references to that Mellanox driver and weird issues with hangs and other problems. That driver may be a problem even though you say it identifies as an "em" driver.

If the flow manager hang bug patch was truly not present in the current Suricata binary build on pfSense, I would expect to be seeing a flood of messages here as that bug always showed itself after only a few minutes of "up time".

-

@bmeeks

I reinstalled Suricata so I could debug the problem.

The "workers" setting was already set.

suricata.log:

30/11/2021 -- 19:43:09 - <Notice> -- This is Suricata version 6.0.3 RELEASE running in SYSTEM mode
30/11/2021 -- 19:43:09 - <Info> -- CPUs/cores online: 2
30/11/2021 -- 19:43:09 - <Info> -- HTTP memcap: 67108864
30/11/2021 -- 19:43:09 - <Info> -- Netmap: Setting IPS mode
30/11/2021 -- 19:43:09 - <Info> -- fast output device (regular) initialized: alerts.log
30/11/2021 -- 19:43:09 - <Info> -- http-log output device (regular) initialized: http.log
[... repeated "<Perf> -- using shared mpm ctx' for <buffer>" lines omitted ...]
30/11/2021 -- 19:43:10 - <Info> -- Rule with ID 2026440 is bidirectional, but source and destination are the same, treating the rule as unidirectional
30/11/2021 -- 19:43:12 - <Error> -- [ERRCODE: SC_ERR_OPENING_RULE_FILE(41)] - opening hash file /usr/local/etc/suricata/suricata_16921_em1/rules/fileextraction-chksum.list: No such file or directory
30/11/2021 -- 19:43:12 - <Error> -- [ERRCODE: SC_ERR_INVALID_SIGNATURE(39)] - error parsing signature "drop http any any -> any any (msg:"Black list checksum match and extract MD5"; filemd5:fileextraction-chksum.list; filestore; sid:28; rev:1;)" from file /usr/local/etc/suricata/suricata_16921_em1/rules/suricata.rules at line 14321
30/11/2021 -- 19:43:12 - <Error> -- [ERRCODE: SC_ERR_OPENING_RULE_FILE(41)] - opening hash file /usr/local/etc/suricata/suricata_16921_em1/rules/fileextraction-chksum.list: No such file or directory
30/11/2021 -- 19:43:12 - <Error> -- [ERRCODE: SC_ERR_INVALID_SIGNATURE(39)] - error parsing signature "drop http any any -> any any (msg:"Black list checksum match and extract SHA1"; filesha1:fileextraction-chksum.list; filestore; sid:29; rev:1;)" from file /usr/local/etc/suricata/suricata_16921_em1/rules/suricata.rules at line 14322
30/11/2021 -- 19:43:12 - <Error> -- [ERRCODE: SC_ERR_OPENING_RULE_FILE(41)] - opening hash file /usr/local/etc/suricata/suricata_16921_em1/rules/fileextraction-chksum.list: No such file or directory
30/11/2021 -- 19:43:12 - <Error> -- [ERRCODE: SC_ERR_INVALID_SIGNATURE(39)] - error parsing signature "drop http any any -> any any (msg:"Black list checksum match and extract SHA256"; filesha256:fileextraction-chksum.list; filestore; sid:30; rev:1;)" from file /usr/local/etc/suricata/suricata_16921_em1/rules/suricata.rules at line 14323
30/11/2021 -- 19:43:12 - <Info> -- 2 rule files processed. 14587 rules successfully loaded, 3 rules failed
30/11/2021 -- 19:43:12 - <Info> -- Threshold config parsed: 0 rule(s) found
30/11/2021 -- 19:43:12 - <Perf> -- using shared mpm ctx' for tcp-packet
30/11/2021 -- 19:43:12 - <Perf> -- using shared mpm ctx' for tcp-stream
30/11/2021 -- 19:43:12 - <Perf> -- using shared mpm ctx' for udp-packet
30/11/2021 -- 19:43:12 - <Perf> -- using shared mpm ctx' for other-ip
30/11/2021 -- 19:43:12 - <Info> -- 14587 signatures processed. 31 are IP-only rules, 3208 are inspecting packet payload, 11147 inspect application layer, 110 are decoder event only
30/11/2021 -- 19:43:12 - <Warning> -- [ERRCODE: SC_WARN_FLOWBIT(306)] - flowbit 'ET.ButterflyJoin' is checked but not set. Checked in 2011296 and 0 other sigs
30/11/2021 -- 19:43:12 - <Warning> -- [ERRCODE: SC_WARN_FLOWBIT(306)] - flowbit 'ET.Netwire.HB.1' is checked but not set. Checked in 2018282 and 0 other sigs
30/11/2021 -- 19:43:12 - <Warning> -- [ERRCODE: SC_WARN_FLOWBIT(306)] - flowbit 'ET.GenericPhish_Adobe' is checked but not set. Checked in 2023048 and 0 other sigs
30/11/2021 -- 19:43:12 - <Warning> -- [ERRCODE: SC_WARN_FLOWBIT(306)] - flowbit 'ET.gadu.loginsent' is checked but not set. Checked in 2008299 and 0 other sigs
30/11/2021 -- 19:43:12 - <Warning> -- [ERRCODE: SC_WARN_FLOWBIT(306)] - flowbit 'et.http.PK' is checked but not set. Checked in 2019835 and 1 other sigs
30/11/2021 -- 19:43:12 - <Warning> -- [ERRCODE: SC_WARN_FLOWBIT(306)] - flowbit 'FB180732_2' is checked but not set. Checked in 2024697 and 0 other sigs
30/11/2021 -- 19:43:12 - <Perf> -- TCP toserver: 41 port groups, 33 unique SGH's, 8 copies
30/11/2021 -- 19:43:12 - <Perf> -- TCP toclient: 21 port groups, 20 unique SGH's, 1 copies
30/11/2021 -- 19:43:12 - <Perf> -- UDP toserver: 41 port groups, 25 unique SGH's, 16 copies
30/11/2021 -- 19:43:12 - <Perf> -- UDP toclient: 12 port groups, 7 unique SGH's, 5 copies
30/11/2021 -- 19:43:12 - <Perf> -- OTHER toserver: 254 proto groups, 3 unique SGH's, 251 copies
30/11/2021 -- 19:43:12 - <Perf> -- OTHER toclient: 254 proto groups, 0 unique SGH's, 254 copies
30/11/2021 -- 19:43:17 - <Perf> -- Unique rule groups: 88
[... "<Perf> -- Builtin MPM" and "<Perf> -- AppLayer MPM" count lines omitted ...]
30/11/2021 -- 19:43:24 - <Perf> -- Using 1 threads for interface em1
30/11/2021 -- 19:43:24 - <Info> -- Going to use 1 thread(s) for device em1
30/11/2021 -- 19:43:24 - <Info> -- devname [fd: 6] netmap:em1/R em1 opened
30/11/2021 -- 19:43:24 - <Info> -- devname [fd: 7] netmap:em1^/T em1^ opened
30/11/2021 -- 19:43:24 - <Perf> -- Using 1 threads for interface em1^
30/11/2021 -- 19:43:24 - <Info> -- Going to use 1 thread(s) for device em1^
30/11/2021 -- 19:43:24 - <Info> -- devname [fd: 8] netmap:em1^/R em1^ opened
30/11/2021 -- 19:43:24 - <Info> -- devname [fd: 9] netmap:em1/T em1 opened
30/11/2021 -- 19:43:24 - <Notice> -- all 2 packet processing threads, 2 management threads initialized, engine started.

dmesg | grep Ethernet: this is how I concluded that the driver is Mellanox. Maybe it means something else?

em1: Ethernet address: 00:15:17:7b:6d:cb
mlx5en: Mellanox Ethernet driver 3.5.2 (September 2019)
em0: Ethernet address: 00:15:17:7b:6d:ca
em1: Ethernet address: 00:15:17:7b:6d:cb
mlx5en: Mellanox Ethernet driver 3.5.2 (September 2019)
em0: Ethernet address: 00:15:17:7b:6d:ca
em1: Ethernet address: 00:15:17:7b:6d:cb

Shell Output - ps -ax | grep suricata

47538 - Ss 0:39.83 /usr/local/bin/suricata --netmap -D -c /usr/local/etc/suricata/suricata_16921_em1/suricata.yaml --pidfile /var/run/suricata_em116921.pid -vv

71349 - S 0:00.00 sh -c ps -ax | grep suricata 2>&1

71739 - S 0:00.00 grep suricata

procstat output:

  PID    TID COMM     TDNAME     CPU PRI STATE WCHAN
47538 100574 suricata -           -1 120 sleep nanslp
47538 100646 suricata W#01-em1    -1 120 sleep select
47538 100653 suricata W#01-em1^   -1 120 sleep select
47538 100654 suricata FM#01       -1 121 sleep nanslp
47538 100655 suricata FR#01       -1 120 sleep nanslp

Thank you very much!

-

Are you running on bare metal or within a VM? Curious that Suricata is only opening a single thread for I/O. That indicates your NIC is exposing only a single queue. That would be expected in a virtual machine using an emulated adaptor, but is a bit unusual for bare metal. Most bare metal NICs have at least two queues, and many expose four.
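The single-thread observation comes from the "Using N threads for interface ..." lines in suricata.log. This is a minimal sketch that extracts those counts from a log file, assuming each log message sits on its own line in the file (the forum paste lost its line breaks). The log location is install-specific, so it is passed in as an argument; `threads_per_interface` is a hypothetical helper.

```shell
#!/bin/sh
# Print "<interface> <thread count>" for every
# "Using N threads for interface X" message in a suricata.log file.
threads_per_interface() {
    sed -n 's/.*Using \([0-9][0-9]*\) threads for interface \([^ ]*\).*$/\2 \1/p' "$1"
}

# Example: threads_per_interface /path/to/suricata.log
```

Comparing this output before and after a change to the NIC or its driver settings would show whether Suricata sees more than one queue.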

Also, what rules package are you running that is generating the missing MD5 checksum list error? That error should not be happening, and the fact that it is getting logged means those rules are doing nothing for you.

-

@bmeeks

Yes, it is installed directly on the PC.

The NIC is an Intel(R) PRO/1000 PT dual port, installed in the VGA PCIe slot, as it is a bit longer than the standard PCIe slot. Does this matter?

The motherboard is an ASUS Prime, the CPU an Intel Celeron G5905, with 8 GB RAM.

I will deactivate all the rules again, so it won't generate that error anymore.

Edit:

Hmm, I was using ET rules, but the errors remain after deactivating them all.

The built-in rules are the ones generating the errors; I mean files.rules, http-events.rules, etc.

Thanks

-

That NIC is a plain-vanilla older Intel card. It should not be using the Mellanox driver. Not sure where that boot message is coming from. But that Mellanox driver has been included in pfSense since 2.4.5 or perhaps even earlier.
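One way to see which driver actually attached to an interface, rather than inferring it from a `dmesg | grep Ethernet` match, is to read the FreeBSD newbus sysctl nodes `dev.<driver>.<unit>.%driver` and `dev.<driver>.<unit>.%desc`. This is a minimal sketch, assuming the interface name is the driver name followed by the unit number (true for em1, but not guaranteed for every driver or for renamed interfaces). `iface_driver`, `iface_unit` and `show_nic_driver` are hypothetical helper names.

```shell
#!/bin/sh
# Hypothetical helpers: map an interface name such as "em1" to its
# newbus driver ("em") and unit ("1"), then query the kernel.

# Strip the trailing unit number: "em1" -> "em", "vtnet10" -> "vtnet".
iface_driver() {
    printf '%s\n' "$1" | sed 's/[0-9]*$//'
}

# Keep only the trailing unit number: "em1" -> "1".
iface_unit() {
    drv=$(iface_driver "$1")
    printf '%s\n' "${1#"$drv"}"
}

# Print the attached driver name and its description (FreeBSD only).
show_nic_driver() {
    drv=$(iface_driver "$1")
    unit=$(iface_unit "$1")
    sysctl "dev.$drv.$unit.%driver" "dev.$drv.$unit.%desc"
}

# Example: show_nic_driver em1
```

If `dev.em.1.%driver` reports `em`, the interface is bound to the Intel driver, and the mlx5en line is only that module announcing itself at boot.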

I don't believe those errors are related to your hang condition, but they indicate something is not configured 100% correctly. The message indicates you have a rule referencing a list of MD5 checksums, but the file containing that list is not in the referenced location -- hence the logged error and the failure of the rule to load properly.
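To find which list files referenced by loaded rules are missing on disk, one can scan the compiled rules file for `filemd5`, `filesha1` and `filesha256` options and test each referenced file. This is a minimal sketch, assuming relative list names are resolved against the directory that holds suricata.rules, which matches the path in the logged error (/usr/local/etc/suricata/suricata_16921_em1/rules/fileextraction-chksum.list). `missing_hash_lists` is a hypothetical helper.

```shell
#!/bin/sh
# List hash-list files referenced by filemd5/filesha1/filesha256 rule
# options that do not exist where the rules file expects them.
missing_hash_lists() {
    rules_file=$1
    rules_dir=$(dirname "$rules_file")
    # Skip commented-out rules, pull out "filemd5:NAME" style options,
    # drop the keyword (and an optional "!" negation), de-duplicate.
    grep -v '^[[:space:]]*#' "$rules_file" |
        grep -oE 'file(md5|sha1|sha256):[[:space:]]*!?[^;]+' |
        sed -E 's/^file(md5|sha1|sha256):[[:space:]]*!?//' |
        sort -u |
        while IFS= read -r list; do
            case $list in
                /*) path=$list ;;            # absolute path: use as-is
                *)  path=$rules_dir/$list ;;  # relative: assume rules dir
            esac
            [ -e "$path" ] || echo "$list"
        done
}

# Example:
# missing_hash_lists /usr/local/etc/suricata/suricata_16921_em1/rules/suricata.rules
```

An empty result means every list referenced by an active rule is present; any name printed belongs to a rule that will fail to load, as in the log.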

-

@bmeeks

Hmm, I was using ET rules, but the errors remain after deactivating them all.

The built-in rules are the ones generating the errors; I mean files.rules, http-events.rules, etc.

-

Maybe that Mellanox message doesn't relate to the NIC?

Did I use the right command to check?

Thanks

-

No, I think you are mistaken there. Those built-in rules should not be referencing any MD5 checksums. I have ET-Open and all the built-in rules enabled in my test VMs, and I don't get that error message. Have you created any custom rules by chance? Or have you specified a custom URL for downloading rules packages?

-

@cobrax2 said in Suricata Inline IPS blocks LAN:

Maybe that Mellanox line doesn't relate to the NIC?

Did I use the right command to check?

Thanks

Running this command will list all the active PCI devices:

pciconf -lv

-

@bmeeks

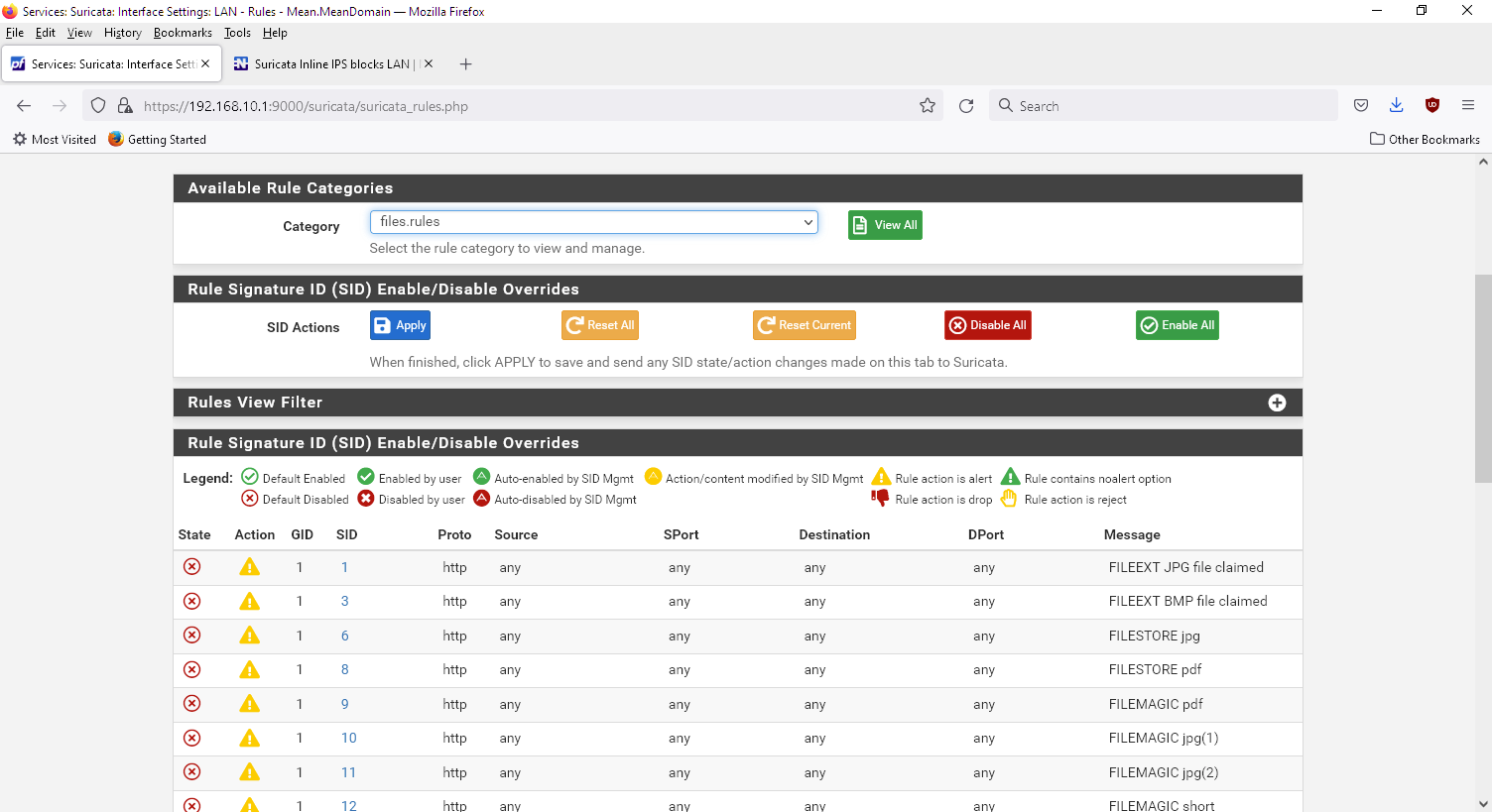

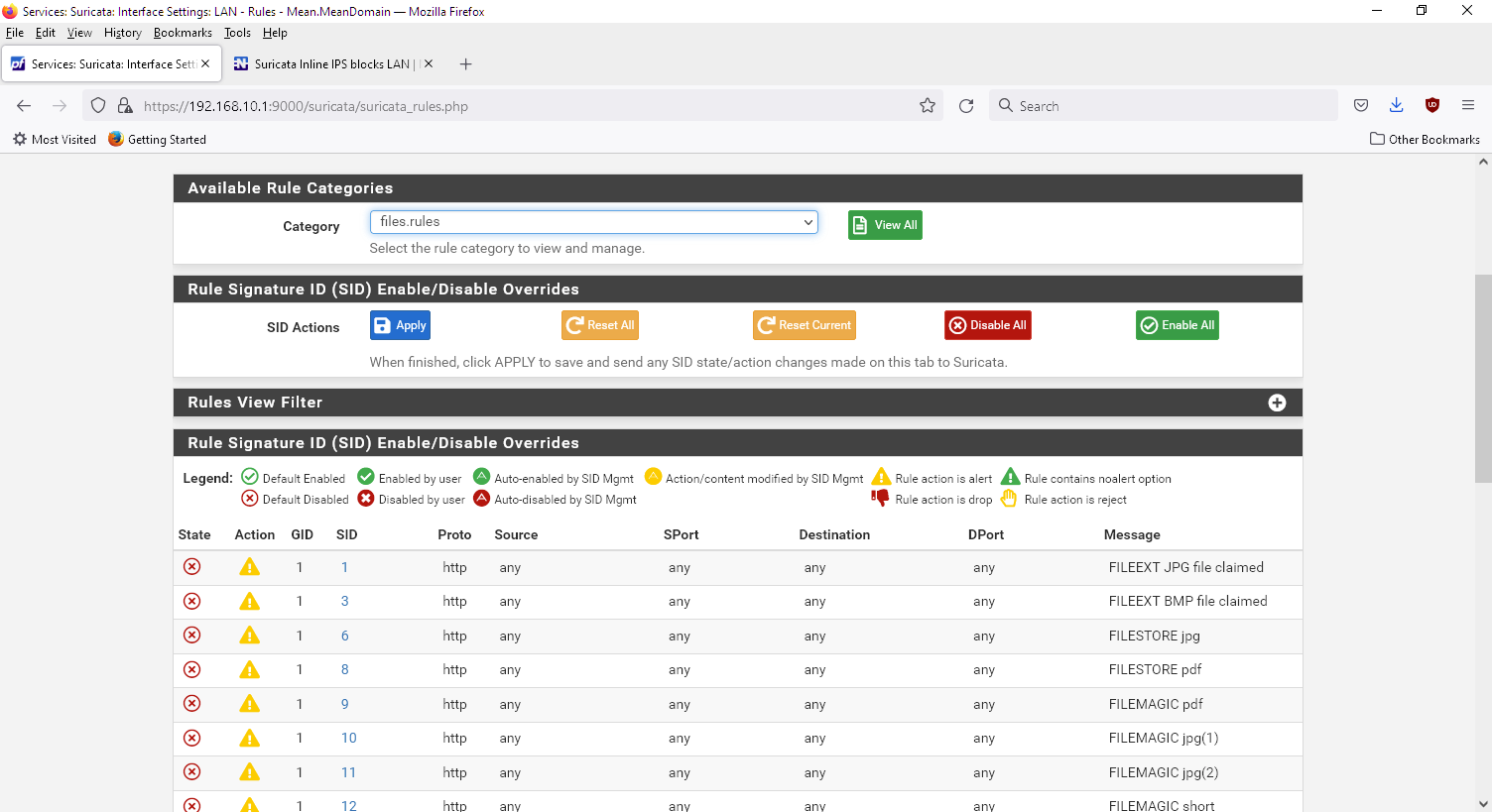

This is what I mean:

All the ET Open categories are disabled, and no others are installed.

-

@cobrax2 said in Suricata Inline IPS blocks LAN:

@bmeeks

This is what I mean:

All the ET Open categories are disabled, and no others are installed.

Have you checked the suricata.log file again after doing this? That file is recreated each time Suricata starts on an interface. The built-in rules are not causing your error.

-
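Because suricata.log is rewritten every time Suricata starts on an interface, it is worth rechecking it after each rule change. This minimal sketch prints only the error and warning lines. It assumes Suricata 6.x tags each log line with a level such as <Error> or <Warning>; if your log looks different, edit LEVELS. The log path is supplied on the command line, and the default "suricata.log" is only a placeholder.

```python
"""Sketch: show only error and warning lines from a suricata.log file.

Assumption: Suricata 6.x tags each line with a level such as <Error>
or <Warning>; edit LEVELS if your log uses a different format.
"""
import sys

LEVELS = ("<Error>", "<Warning>")


def problem_lines(lines):
    """Return (line_no, text) for lines tagged with one of LEVELS."""
    return [
        (line_no, line.rstrip("\n"))
        for line_no, line in enumerate(lines, start=1)
        if any(level in line for level in LEVELS)
    ]


if __name__ == "__main__":
    # The path comes from the command line; the default is a placeholder.
    path = sys.argv[1] if len(sys.argv) > 1 else "suricata.log"
    with open(path, errors="replace") as log:
        for line_no, text in problem_lines(log):
            print(f"{line_no}: {text}")
```

The same file is visible under the LOGS VIEW tab; the script only saves scrolling through the startup notices.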

@bmeeks

Yes, it's the same.

-

@bmeeks said in Suricata Inline IPS blocks LAN:

Running this command will list all the active PCI devices:

pciconf -lv

hostb0@pci0:0:0:0: class=0x060000 card=0x86941043 chip=0x9b738086 rev=0x03 hdr=0x00
    vendor = 'Intel Corporation'
    class = bridge
    subclass = HOST-PCI
pcib1@pci0:0:1:0: class=0x060400 card=0x86941043 chip=0x19018086 rev=0x03 hdr=0x01
    vendor = 'Intel Corporation'
    device = '6th-9th Gen Core Processor PCIe Controller (x16)'
    class = bridge
    subclass = PCI-PCI
vgapci0@pci0:0:2:0: class=0x030000 card=0x86941043 chip=0x9ba88086 rev=0x03 hdr=0x00
    vendor = 'Intel Corporation'
    class = display
    subclass = VGA
xhci0@pci0:0:20:0: class=0x0c0330 card=0x86941043 chip=0x06ed8086 rev=0x00 hdr=0x00
    vendor = 'Intel Corporation'
    device = 'Comet Lake USB 3.1 xHCI Host Controller'
    class = serial bus
    subclass = USB
none0@pci0:0:20:2: class=0x050000 card=0x86941043 chip=0x06ef8086 rev=0x00 hdr=0x00
    vendor = 'Intel Corporation'
    device = 'Comet Lake PCH Shared SRAM'
    class = memory
    subclass = RAM
none1@pci0:0:22:0: class=0x078000 card=0x86941043 chip=0x06e08086 rev=0x00 hdr=0x00
    vendor = 'Intel Corporation'
    device = 'Comet Lake HECI Controller'
    class = simple comms
ahci0@pci0:0:23:0: class=0x010601 card=0x86941043 chip=0x06d28086 rev=0x00 hdr=0x00
    vendor = 'Intel Corporation'
    class = mass storage
    subclass = SATA
pcib2@pci0:0:29:0: class=0x060400 card=0x86941043 chip=0x06b08086 rev=0xf0 hdr=0x01
    vendor = 'Intel Corporation'
    device = 'Comet Lake PCI Express Root Port'
    class = bridge
    subclass = PCI-PCI
isab0@pci0:0:31:0: class=0x060100 card=0x86941043 chip=0x06848086 rev=0x00 hdr=0x00
    vendor = 'Intel Corporation'
    class = bridge
    subclass = PCI-ISA
none2@pci0:0:31:4: class=0x0c0500 card=0x86941043 chip=0x06a38086 rev=0x00 hdr=0x00
    vendor = 'Intel Corporation'
    device = 'Comet Lake PCH SMBus Controller'
    class = serial bus
    subclass = SMBus
none3@pci0:0:31:5: class=0x0c8000 card=0x86941043 chip=0x06a48086 rev=0x00 hdr=0x00
    vendor = 'Intel Corporation'
    device = 'Comet Lake PCH SPI Controller'
    class = serial bus
em0@pci0:1:0:0: class=0x020000 card=0x135e8086 chip=0x105e8086 rev=0x06 hdr=0x00
    vendor = 'Intel Corporation'
    device = '82571EB/82571GB Gigabit Ethernet Controller D0/D1 (copper applications)'
    class = network
    subclass = ethernet
em1@pci0:1:0:1: class=0x020000 card=0x135e8086 chip=0x105e8086 rev=0x06 hdr=0x00
    vendor = 'Intel Corporation'
    device = '82571EB/82571GB Gigabit Ethernet Controller D0/D1 (copper applications)'
    class = network
    subclass = ethernet

I guess that Mellanox line in the dmesg | grep Ethernet output does not relate to em1?

-

@cobrax2 said in Suricata Inline IPS blocks LAN:

I guess that Mellanox line in the dmesg | grep Ethernet output does not relate to em1?

No, that line is a red herring.
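The pciconf listing earlier in the thread already shows why: em0 and em1 are Intel 82571EB ports claimed by the em driver, and no listed device carries Mellanox's PCI vendor ID (0x15b3). A plausible reading, consistent with bmeeks's answer, is that the mlx5en line is only the driver announcing itself, not attaching to any hardware. For checking a long listing quickly, here is a minimal sketch that decodes the chip= field, assuming the FreeBSD 12-style format seen in this thread, where the low 16 bits are the vendor ID and the high 16 bits the device ID. Newer FreeBSD releases may print separate vendor= and device= fields instead, which this sketch does not parse. Entries named noneN are devices with no driver attached.

```python
"""Sketch: list PCI vendor/device IDs from `pciconf -lv` output.

Assumes the FreeBSD 12-style "chip=0xDDDDVVVV" field seen in this
thread: low 16 bits = vendor ID, high 16 bits = device ID.
"""
import re
import sys

# PCI-SIG vendor IDs: 0x8086 = Intel, 0x15b3 = Mellanox.
VENDORS = {0x8086: "Intel", 0x15B3: "Mellanox"}

# Matches "em0@pci0:1:0:0: class=0x020000 card=0x135e8086 chip=0x105e8086".
# finditer also copes with output that was flattened onto one line.
_DEVICE_RE = re.compile(
    r"(\w+)@pci[\d:]+\s+class=\S+\s+card=\S+\s+chip=0x([0-9a-fA-F]{8})"
)


def pci_devices(text):
    """Return (name, vendor_id, device_id) for each device in the text."""
    devices = []
    for match in _DEVICE_RE.finditer(text):
        chip = int(match.group(2), 16)
        devices.append((match.group(1), chip & 0xFFFF, chip >> 16))
    return devices


def main():
    devices = pci_devices(sys.stdin.read())
    for name, vendor, device in devices:
        label = VENDORS.get(vendor, "other")
        print(f"{name}: vendor=0x{vendor:04x} ({label}) device=0x{device:04x}")
    if not any(vendor == 0x15B3 for _, vendor, _ in devices):
        print("No Mellanox (0x15b3) PCI device found.")


if __name__ == "__main__":
    main()
```

Usage: pciconf -lv | python3 pci_ids.py (the script name is hypothetical; if Python is not available on the firewall, save the pciconf output and run the script elsewhere).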

-

@cobrax2 said in Suricata Inline IPS blocks LAN:

@bmeeks

Yes, it's the same.

You have something unusual going on, then. The error message in the log shows that those rules come from a "Black list checksum match" rule, which is not part of any built-in rule set.

I suspect you may have some custom rules defined for the interface, with DROP as their action keyword. No built-in rule is ever shipped with DROP as the action.
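To test that suspicion, a minimal sketch can list every active rule whose action is drop. It assumes plain-text *.rules files with one rule per line and disabled rules commented out with '#', and it takes the rules directory as an argument since the thread does not give the path. It only reports where each rule sits, not who added it.

```python
"""Sketch: list active rules whose action is drop in Suricata rule files.

Assumptions: one rule per line, disabled rules start with '#', and the
directory holding the interface's *.rules files is given as argument.
"""
import sys
from pathlib import Path


def drop_rules(text):
    """Return (line_no, rule) for uncommented rules whose action is drop."""
    hits = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        words = line.split(maxsplit=1)
        # The action is the first word; "#drop ..." is a disabled rule.
        if words and words[0].lower() == "drop":
            hits.append((line_no, line.strip()))
    return hits


def main(rules_dir):
    for path in sorted(Path(rules_dir).glob("*.rules")):
        for line_no, rule in drop_rules(path.read_text(errors="replace")):
            print(f"{path.name}:{line_no}: {rule}")


if __name__ == "__main__":
    main(sys.argv[1] if len(sys.argv) > 1 else ".")
```

If this prints a rule with the "Black list checksum match" message, that file is the place to look for the custom rule.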