Network Drive Slow Performance?

-

@johnpoz Indeed hehe

Lawrence is basically showing how latency works with the SMB protocol.

-

@mcury I haven't had to play with SMB over a WAN in a long time, but the latest SMB should be better. @wingrait, what version of SMB are you using: 2, 3, or 3.1? What versions of Windows are the devices doing the copy running?

Back in the day our solution was Riverbed appliances between locations.

For everyone's curiosity, what is the latency as well? Could you post the ping response time between the devices trying to do the SMB file copy?

Window scaling might be failing as well. You would really have to take some pcaps to see what window scale your Windows machines are using.
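For reference, window scaling is negotiated through the TCP Window Scale option (kind 3, length 3, one shift-count byte) carried in the SYN and SYN-ACK, as defined in RFC 7323 (which obsoletes RFC 1323). A minimal Python sketch, assuming you already have the raw TCP option bytes of a SYN (for example copied out of a capture), that walks the options and returns the shift count; the function name and sample bytes are hypothetical:

```python
from typing import Optional

# TCP option kinds (RFC 793 / RFC 7323)
EOL, NOP, WSCALE = 0, 1, 3


def window_scale_shift(options: bytes) -> Optional[int]:
    """Return the Window Scale shift count from raw TCP option bytes, or None if absent."""
    i = 0
    while i < len(options):
        kind = options[i]
        if kind == EOL:              # end of option list
            break
        if kind == NOP:              # single-byte padding
            i += 1
            continue
        if i + 1 >= len(options):
            break                    # truncated option, stop parsing
        length = options[i + 1]
        if length < 2:
            break                    # malformed length, avoid looping forever
        if kind == WSCALE and length == 3 and i + 2 < len(options):
            # RFC 7323: shift values above 14 must be treated as 14
            return min(options[i + 2], 14)
        i += length
    return None


# Hypothetical SYN options: MSS 1460, NOP, WScale 8, NOP, NOP, SACK-permitted
syn_opts = bytes([2, 4, 0x05, 0xB4, 1, 3, 3, 8, 1, 1, 4, 2])
shift = window_scale_shift(syn_opts)
print(shift)            # 8
print(65535 << shift)   # largest window the peer can advertise with this shift
```

If the function returns None for the SYN or the SYN-ACK in your capture, scaling never got negotiated on that connection.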

-

@johnpoz said in Network Drive Slow Performance?:

but looks like @Gertjan dropped the last character

Yeah... sorry, the final 1 or l was missing.

Corrected.

You found the one I wanted to show, as it was the latest one anyway.

-

Had vacation over the holidays and just getting back to this. Thank you everyone for your responses.

I didn't know about SMB until this thread, so I'm not sure what version would be used. We have a shared directory on Windows Server 2019 mapped as a network drive on Windows 10 & 11 clients. It would be whatever the defaults are for those.

In the office the non-VPN latency is ~1ms. Remotely it depends on the client, but my own laptop was at 37-43ms and couldn't transfer faster than 1.9Mbps over a 400/20Mbps cable connection.

The video was great at explaining why the transfer was slow over VPN. I guess the next progression is how to fix or work around it?

Would using a Linux-based NAS as a mapped drive be any different if the clients are still Windows? Would setting up FTP for large file transfers be a work-around?

-

@wingrait FTP is so dated, and should have died off 10-some years ago. Did you mean SFTP? ;)

If you're talking Server 2019 and Win 10 and 11 clients, you should for sure be using SMB 3.1.

You should be able to get way more speed than that with a 40ms RTT, but you would want to make sure you're using window scaling; maybe for some reason it is not being used. The default 64k window size would limit you to about what you're seeing with a 40ms RTT. You'd need to bump that up to, say, 512 KBytes if you want to max out a 100Mbps connection.
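The arithmetic behind this is the bandwidth-delay product (BDP): a single TCP connection can have at most one window of unacknowledged data in flight per round trip, so throughput is capped at window / RTT, and the window needed to fill a link is bandwidth × RTT. A small Python sketch of both calculations, using the 64k window, 40ms RTT, and 100Mbps figures from this thread (the function names are just for illustration):

```python
def max_throughput_mbps(window_bytes: int, rtt_ms: float) -> float:
    """Upper bound on single-stream TCP throughput: one window per round trip."""
    return window_bytes * 8 / (rtt_ms / 1000) / 1_000_000


def window_needed_bytes(link_mbps: float, rtt_ms: float) -> float:
    """Bandwidth-delay product: bytes that must be in flight to keep the link full."""
    return link_mbps * 1_000_000 / 8 * (rtt_ms / 1000)


print(round(max_throughput_mbps(64 * 1024, 40), 2))  # 13.11 (Mbit/s with a 64 KiB window)
print(window_needed_bytes(100, 40) / 1024)           # ~488 KiB to fill 100 Mbit/s at 40 ms
```

The roughly 500,000-byte BDP is why a window of about 512 KBytes is enough for a 100Mbps link at 40ms.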

But if your clients only have 10Mbps up, they won't be able to upload any faster than that. It doesn't matter what protocol you use.

-

@johnpoz After researching more about it, I found some PowerShell commands to verify we are using SMB 3.1.1, which I think is the most current version.

With regard to the window scaling suggestion, does that change typically need to happen on the clients, the server, or both?

Is SSL/TLS OpenVPN contributing to the issue? Should I be looking at IPSec or Wireguard instead, or would those only be minor performance improvements?

-

@wingrait Yeah, any sort of tunnel would be a hit, some more than others, but if you're only seeing like 2MBps I doubt that is the cause. More likely you're not doing scaling and are just constrained by the BDP (bandwidth-delay product).

I haven't dived into such issues in a really long time, so I have to refresh the details in my head. There could be something preventing window scaling when going through the tunnel. From what I remember, Windows should for sure be doing it out of the box.

There is a way to check what scaling you're doing from a sniff in Wireshark, and for some crazy reason I do recall something being able to strip that, but I believe that was a problem I was working out a long time ago with a different firewall. I would have to research whether there is something in OpenVPN that could prevent scaling from kicking in and force you to use a low window size of 64k, etc.
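One detail worth knowing when reading such a capture: per RFC 7323, window scaling is only in effect if both the SYN and the SYN-ACK carry the Window Scale option. If something strips it from either one, neither side scales, and the window can never exceed 65,535 bytes. When it is negotiated, each side's announced shift applies to the windows that side advertises (on segments after the handshake). A minimal Python sketch of that rule, assuming you have already read each side's shift count (or its absence) from the handshake; the helper name and sample values are hypothetical:

```python
from typing import Optional


def effective_window(advertised: int, syn_shift: Optional[int],
                     synack_shift: Optional[int], sender_is_client: bool) -> int:
    """Receive window in bytes implied by a non-SYN segment's 16-bit window field."""
    if syn_shift is None or synack_shift is None:
        # Option missing from either handshake segment: scaling is off both ways
        return advertised
    # Each side scales the windows it advertises by the shift it announced
    shift = syn_shift if sender_is_client else synack_shift
    return advertised << min(shift, 14)


# Hypothetical server segment advertising 2053 after a shift of 8 in each direction
print(effective_window(2053, 8, 8, sender_is_client=False))     # 525568
# Same segment if the client's Window Scale option had been stripped in transit
print(effective_window(2053, 8, None, sender_is_client=False))  # 2053
```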

edit: question for you: is the OpenVPN client being run on the Windows box, or is it a site-to-site connection where, say, there is a pfSense on each end and the devices just route through the VPN? Or is the OpenVPN client running on the Windows 10/11 machine and connecting to the pfSense OpenVPN server?

-

@johnpoz said in Network Drive Slow Performance?:

Or is the OpenVPN client running on the Windows 10/11 machine and connecting to the pfSense OpenVPN server?

Using the OpenVPN client on Windows 10/11, connecting to the pfSense OpenVPN server via SSL/TLS over UDP. Layer 3 tunnel mode. IPv4 only. Server Mode = Remote Access (SSL/TLS Auth + User Auth)

-

@wingrait just to make sure scaling is enabled - what is the output of this command on both your client and your server?

```
C:\>netsh int tcp show global
Querying active state...

TCP Global Parameters
----------------------------------------------
Receive-Side Scaling State : enabled
Receive Window Auto-Tuning Level : normal
Add-On Congestion Control Provider : default
ECN Capability : disabled
RFC 1323 Timestamps : disabled
Initial RTO : 1000
Receive Segment Coalescing State : enabled
Non Sack Rtt Resiliency : disabled
Max SYN Retransmissions : 4
Fast Open : enabled
Fast Open Fallback : enabled
HyStart : enabled
Proportional Rate Reduction : enabled
Pacing Profile : off
```

-

Client:

```
TCP Global Parameters
----------------------------------------------
Receive-Side Scaling State : enabled
Receive Window Auto-Tuning Level : normal
Add-On Congestion Control Provider : default
ECN Capability : disabled
RFC 1323 Timestamps : allowed
Initial RTO : 1000
Receive Segment Coalescing State : enabled
Non Sack Rtt Resiliency : disabled
Max SYN Retransmissions : 4
Fast Open : enabled
Fast Open Fallback : enabled
HyStart : enabled
Proportional Rate Reduction : enabled
Pacing Profile : off
```

Server:

```
TCP Global Parameters
----------------------------------------------
Receive-Side Scaling State : enabled
Receive Window Auto-Tuning Level : normal
Add-On Congestion Control Provider : default
ECN Capability : enabled
RFC 1323 Timestamps : disabled
Initial RTO : 3000
Receive Segment Coalescing State : enabled
Non Sack Rtt Resiliency : disabled
Max SYN Retransmissions : 2
Fast Open : disabled
Fast Open Fallback : enabled
HyStart : enabled
Pacing Profile : off
```

-

@wingrait Well, the "easy" fix is out the window; that would have been if scaling had been disabled.
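Comparing two dumps like the client and server output above by eye is easy to get wrong. A small Python sketch, assuming you paste each machine's `netsh int tcp show global` output into a string, that parses the "name : value" lines and prints only the settings that differ. The sample strings are trimmed excerpts of the client and server output in this thread, and the function names are just for illustration:

```python
def parse_netsh(text: str) -> dict:
    """Parse 'Name : value' lines from `netsh int tcp show global` output."""
    settings = {}
    for line in text.splitlines():
        if " : " in line:  # skips the header, dashes, and 'Querying active state...'
            name, _, value = line.partition(" : ")
            settings[name.strip()] = value.strip()
    return settings


def diff_settings(a: dict, b: dict) -> dict:
    """Return {name: (a_value, b_value)} for settings that differ or exist on one side only."""
    names = sorted(set(a) | set(b))
    return {n: (a.get(n), b.get(n)) for n in names if a.get(n) != b.get(n)}


client_out = """RFC 1323 Timestamps : allowed
Initial RTO : 1000
ECN Capability : disabled
Receive Window Auto-Tuning Level : normal"""

server_out = """RFC 1323 Timestamps : disabled
Initial RTO : 3000
ECN Capability : enabled
Receive Window Auto-Tuning Level : normal"""

for name, (client, server) in diff_settings(parse_netsh(client_out), parse_netsh(server_out)).items():
    print(f"{name}: client={client} server={server}")
```

Padding spaces before the colon, as real netsh output uses, are handled by the `strip()` calls.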

I'm trying to figure out a way to lab this, to try to duplicate your problem (or not see it), etc. My work laptop is so locked down that it's pretty useless for anything other than work ;) And I really don't have access to another network I could test with anyway.

So my best bet is to route traffic through one of my virtual pfSense instances and introduce delay in the connection to simulate a 40ms RTT, which is easy enough to do with something like dummynet.

Then I could VPN into that pfSense from my Windows machine and move files to and from my NAS over that connection. I just have to set it all up. Lots of football this weekend, so no promises, but you have my curiosity cat meowing at me about this; it's not like it doesn't come up now and then.

edit: hmmm, why are RFC 1323 timestamps disabled on the server? I'm pretty sure that could have an effect when a high BDP comes into play. You could try enabling them. It wouldn't matter much when you're on a LAN, but I do believe not having timestamps could play a role in scaling when you have a higher RTT.
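For context on what those timestamps do: with the Timestamps option (RFC 1323, now RFC 7323), every ACK echoes the sender's earlier timestamp back in its TSecr field, so the sender can take an RTT sample on nearly every ACK rather than timing one segment at a time. Those samples feed the retransmission-timeout estimator. A minimal Python sketch of the basic RFC 6298 estimator driven by timestamp echoes, with made-up sample values; it illustrates the standard algorithm, not the actual Windows TCP stack:

```python
class RttEstimator:
    """Basic RFC 6298 smoothed-RTT / RTO estimator, fed RTT samples in ms."""

    ALPHA = 1 / 8  # gain for SRTT
    BETA = 1 / 4   # gain for RTTVAR
    K = 4

    def __init__(self) -> None:
        self.srtt = None
        self.rttvar = None

    def sample(self, rtt_ms: float) -> None:
        if self.srtt is None:
            # First measurement initializes both variables
            self.srtt = rtt_ms
            self.rttvar = rtt_ms / 2
        else:
            # RTTVAR uses the previous SRTT, so it is updated first
            self.rttvar = (1 - self.BETA) * self.rttvar + self.BETA * abs(self.srtt - rtt_ms)
            self.srtt = (1 - self.ALPHA) * self.srtt + self.ALPHA * rtt_ms

    def rto_ms(self) -> float:
        # RTO = SRTT + K * RTTVAR (clock-granularity term omitted), floored at 1 second
        return max(1000.0, self.srtt + self.K * self.rttvar)


def rtt_from_echo(now_ms: int, tsecr_ms: int) -> int:
    """RTT sample when an ACK arrives: local clock minus the echoed timestamp (TSecr)."""
    return now_ms - tsecr_ms


# Hypothetical (ACK arrival time, echoed timestamp) pairs, roughly 40 ms apart
est = RttEstimator()
for now, tsecr in [(1040, 1000), (2043, 2000), (3038, 3000)]:
    est.sample(rtt_from_echo(now, tsecr))
print(round(est.srtt, 3), est.rto_ms())
```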

-

@johnpoz Not expecting anyone to do all of that work!

I just adjusted the tunnel MTU because the default is 1500 and it was breaking packets. Using ping [Server] -l [MTU], I was able to determine that 1470 was a size that worked for our connections. I changed the config, reconnected, and the situation still did not improve. I've also tried setting the DisableBandwidthThrottling registry key and rebooting; it does not appear to have impacted performance.
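That ping probing can be automated as a binary search. On Windows, `ping -f -l <size> <host>` sets the Don't Fragment flag and sends a `<size>`-byte payload; the largest payload that gets through, plus 28 bytes (20-byte IPv4 header + 8-byte ICMP header), is the path MTU. Without `-f`, an oversized ping can simply be fragmented and still succeed. A Python sketch with the real ping replaced by a hypothetical `fits` callback that simulates a path MTU of 1420, so the search logic can be run anywhere:

```python
from typing import Callable

IPV4_ICMP_HEADERS = 28  # 20-byte IPv4 header + 8-byte ICMP echo header


def largest_payload(probe: Callable[[int], bool], low: int = 0, high: int = 1472) -> int:
    """Binary search for the largest ICMP payload size that `probe` reports as delivered.

    Assumes probe(low) succeeds and success is monotonic (if N fits, so does N - 1).
    """
    while low < high:
        mid = (low + high + 1) // 2  # round up so the loop always makes progress
        if probe(mid):
            low = mid       # mid fits: the answer is mid or larger
        else:
            high = mid - 1  # mid was dropped: the answer is smaller
    return low


# Hypothetical stand-in for `ping -f -l <size> <host>` on a path whose MTU is 1420
SIMULATED_PATH_MTU = 1420


def fits(size: int) -> bool:
    return size + IPV4_ICMP_HEADERS <= SIMULATED_PATH_MTU


payload = largest_payload(fits)
print(payload, payload + IPV4_ICMP_HEADERS)  # 1392 1420
```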

I can't say with certainty, but I don't think it is a VPN Server performance issue either. We only have a couple folks remote, the CPU for the appliance is almost always at 0-1% and the RAM is only at 27%.

-

@wingrait Not a lot of work, and it's something that would be useful for other stuff too. Being able to add latency in my lab can come in handy.

From the quick napkin math I did, your problem would seem to just point to BDP: with a 40ms RTT and a 64k window you would see slower performance.

On a 100Mbps connection, the maximum throughput with a TCP window of 64 KByte and an RTT of 40.0 ms is <= 13.11 Mbit/sec.

13Mbps / 8 would be about 1.6MBps, which is about what you're seeing, right? You said 1-2 Mbps, but that can't be right; that would be completely, utterly horrible, so I take it you meant bytes, not bits?
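Written out, that napkin math is the window-limited ceiling (window divided by RTT), then converted from bits to bytes:

$$
\text{throughput} \le \frac{\text{window}}{\text{RTT}}
  = \frac{65{,}536\ \text{B} \times 8\ \text{bit/B}}{0.040\ \text{s}}
  \approx 13.11\ \text{Mbit/s}
$$

$$
\frac{13.11\ \text{Mbit/s}}{8\ \text{bit/B}} \approx 1.64\ \text{MB/s}
$$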

So maybe clarify what speeds you're seeing. A good test would also be to run an iperf test over the connection to see what actual speed you can get without SMB being involved at all.
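If you run iperf3 with its `-J` (JSON output) flag, the summary can be pulled out programmatically and reported in both units, which sidesteps the bits-versus-bytes question entirely. A minimal Python sketch, assuming the TCP-test layout where the totals sit under `end.sum_sent` and `end.sum_received` with a `bits_per_second` field; the sample JSON is made up and heavily trimmed:

```python
import json


def iperf3_summary(raw: str) -> dict:
    """Extract sent/received rates from iperf3 -J output, in Mbit/s and MB/s."""
    end = json.loads(raw)["end"]
    result = {}
    for key in ("sum_sent", "sum_received"):
        bps = end[key]["bits_per_second"]
        result[key] = {"Mbit/s": bps / 1e6, "MB/s": bps / 8 / 1e6}
    return result


# Hypothetical, trimmed iperf3 -J output for a ~10 Mbit/s upload
sample = ('{"end": {"sum_sent": {"bits_per_second": 10400000}, '
          '"sum_received": {"bits_per_second": 10000000}}}')

for direction, rates in iperf3_summary(sample).items():
    print(f"{direction}: {rates['Mbit/s']:.2f} Mbit/s = {rates['MB/s']:.2f} MB/s")
```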

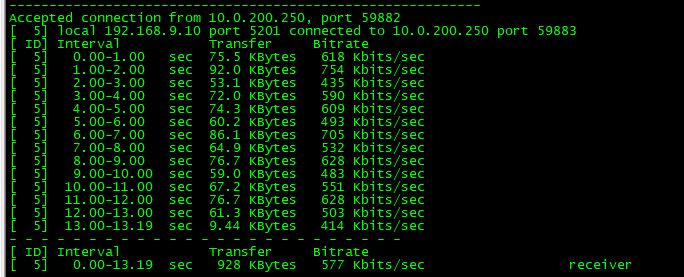

Testing with my phone via a cell connection is horrible, at least at the moment. The response time is all over the place, with highs around 375ms and a low of 53ms. So the iperf test is only showing this.

So a test of what speed you can get via iperf could be useful.

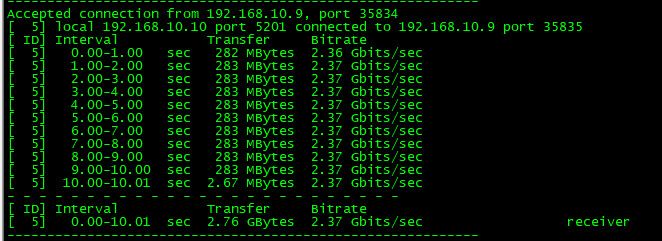

btw, here is an iperf test over just the local network.

-

@johnpoz

I cannot believe the hours that were wasted on this! Provider connection speeds are in Mbps, but the Windows file transfer GUI reports MB/s. Most of our users have Spectrum, which has an upload cap of 10Mbps for most tiers in our area. 10Mbps = 1.25MB/s with no other overhead. Haven't felt this dumb in a long time.

-

@wingrait said in Network Drive Slow Performance?:

10Mbps = 1.25MB/s with no other overhead.

hahaha - well problem solved ;) Glad you got it figured out.. Bytes vs bits is hard sometimes hahahah <ROFL>

edit: btw thanks for pointing out the actual issue, vs just walking away leaving the thread hanging to keep egg off your face..

The B vs b thing bites everyone in the butt at some point. It still reminds me of the constant question about wireless: "but the router says it can do 1900Mbps on the box, why am I only seeing 200?" ;) hehehe