25.03.b.20250507.1611 crash

-

I just had a 25.03.b.20250507.1611 crash. However, it might not be a beta specific issue as I get these crashes once or twice a year (out of the blue), and there isn't normally much useful to go on:

db:1:pfs> bt Tracing pid 37021 tid 128707 td 0xfffff80104540000 kdb_enter() at kdb_enter+0x33/frame 0xfffffe00cdb99a80 panic() at panic+0x43/frame 0xfffffe00cdb99ae0 trap_fatal() at trap_fatal+0x40b/frame 0xfffffe00cdb99b40 calltrap() at calltrap+0x8/frame 0xfffffe00cdb99b40 --- trap 0x9, rip = 0xffffffff812e4c2a, rsp = 0xfffffe00cdb99c10, rbp = 0xfffffe00cdb99d60 --- pmap_remove_pages() at pmap_remove_pages+0x6ba/frame 0xfffffe00cdb99d60 vmspace_exit() at vmspace_exit+0x80/frame 0xfffffe00cdb99d90 exit1() at exit1+0x53a/frame 0xfffffe00cdb99df0 sys_exit() at sys_exit+0xd/frame 0xfffffe00cdb99e00 amd64_syscall() at amd64_syscall+0x115/frame 0xfffffe00cdb99f30 fast_syscall_common() at fast_syscall_common+0xf8/frame 0xfffffe00cdb99f30 --- syscall (1, FreeBSD ELF64, exit), rip = 0x3994995318ea, rsp = 0x399496fb4868, rbp = 0x399496fb4960 --- db:1:pfs> show registers cs 0x20 ds 0x3b es 0x3b fs 0x13 gs 0x1b ss 0x28 rax 0x12 rcx 0x9a55bef9e544a743 rdx 0xfffffe00cdb995a0 rbx 0x100 rsp 0xfffffe00cdb99958 rbp 0xfffffe00cdb99a80 rsi 0xfffffe00cdb99810 rdi 0xffffffff82750a00 gdb_consdev r8 0x25 r9 0x25 r10 0 r11 0 r12 0 r13 0 r14 0xffffffff814688b4 r15 0xfffff80104540000 rip 0xffffffff80d4e3d3 kdb_enter+0x33 rflags 0x82 kdb_enter+0x33: movq $0,0x1d70132(%rip) db:1:pfs> show pcpu cpuid = 6 dynamic pcpu = 0xfffffe008efeb540 curthread = 0xfffff80104540000: pid 37021 tid 128707 critnest 1 "sh" curpcb = 0xfffff80104540520 fpcurthread = 0xfffff80104540000: pid 37021 "sh" idlethread = 0xfffff80002619740: tid 100009 "idle: cpu6" self = 0xffffffff83a16000 curpmap = 0xfffff8010450b7e8 tssp = 0xffffffff83a16384 rsp0 = 0xfffffe00cdb9a000 kcr3 = 0xffffffffffffffff ucr3 = 0xffffffffffffffff scr3 = 0x0 gs32p = 0xffffffff83a16404 ldt = 0xffffffff83a16444 tss = 0xffffffff83a16434 curvnet = 0Is there an interest in looking at the complete dump?

-

Yes, please upload it here: https://nc.netgate.com/nextcloud/s/rs8eJHAETGniYGt

-

@stephenw10 done

-

Hmm, well these are not good:

<6>pid 3612 (pftop), jid 0, uid 0: exited on signal 6 (core dumped) <6>pid 9368 (pftop), jid 0, uid 0: exited on signal 6 (core dumped) <6>pid 65518 (pftop), jid 0, uid 0: exited on signal 6 (core dumped) <5>gif0: loop detected <5>gif0: loop detected <5>gif0: loop detectedThere's no timestamps on those so I don't know how close to the crash that is.

Otherwise it's all pretty generic unfortunately.

Do you know what version it started happening in? After enabling something?

-

@stephenw10 I saw the pftop crash first in the 1May beta (I mentioned it here https://forum.netgate.com/post/1213946). I hadn't been using pftop that much prior to that so it was my first sighting looking at the /status.php output.

I ran pftop a few times today, and the log entries are related to those, not close to the crash if I can recall todays events accurately...

I get a few gif0 loop detected occasionally. I only enabled a gif interface last weekend so it's also new, could be something misconfigured. I'll look into that over the weekend.

-

Ah, OK. That should be fixed in the next pftop version

Have you seen the pmap crash in older pfSense versions? I thought I recalled a previous thread....

Edit: Ah this I guess: https://forum.netgate.com/topic/187067/rare-kernel-panic-on-23-09-1-release-amd64-non-netgate-hw

-

@stephenw10 said in 25.03.b.20250507.1611 crash:

I thought I recalled a previous thread....

Indeed, it was mentioned way back in https://forum.netgate.com/topic/187067/rare-kernel-panic-on-23-09-1-release-amd64-non-netgate-hw?_=1746825894764

I have had the infrequent ones over the years, normally at night but there hasn't been much to go on so I haven't pushed the issue further.

-

Hmm, I'll see if our devs have any insight there but something spanning versions like that seems to point at something specific to your system.

If you have any further crash reports that might help.

-

@stephenw10 said in 25.03.b.20250507.1611 crash:

If you have any further crash reports that might help.

I just realised I do have a bunch, could be every crash between Oct23 and now. I'll zip them up to preserve time stamps. You tell me where to put them.

-

@pst You may use the same link as before.

-

@marcosm Ah, done.

-

Thanks!

-

@stephenw10

I had a look at the crash dumps, ddb.txt especially, to see if I could see a pattern. I have a growing suspicion that they could be caused by the RRD_Summary package, and related to /var/db/rrd/updaterrd.sh especially.I base this theory on the following observations:

The processes active at the time of the crashes, either "sh" running some script, rrdtool, or sysctl (and both are called from updaterrd.sh):

$ for f in textdump.tar*/; do ls -l $f/ddb.txt; grep curthread $f/ddb.txt; done -rw-r--r-- 1 ps 197609 401246 Nov 28 2023 'textdump.tar(1)0//ddb.txt' curthread = 0xfffffe00848f1720: pid 11 tid 100007 critnest 1 "idle: cpu4" fpcurthread = none -rw-r--r-- 1 ps 197609 419097 Nov 29 2023 'textdump.tar(1)1//ddb.txt' curthread = 0xfffffe00cdcbb720: pid 82157 tid 112839 critnest 1 "sh" fpcurthread = 0xfffffe00cdcbb720: pid 82157 "sh" -rw-r--r-- 1 ps 197609 455061 Mar 30 2024 'textdump.tar(2)//ddb.txt' curthread = 0xfffffe00cbd8b720: pid 73857 tid 113360 critnest 1 "sysctl" fpcurthread = 0xfffffe00cbd8b720: pid 73857 "sysctl" -rw-r--r-- 1 ps 197609 439927 Jun 16 2024 'textdump.tar(3)//ddb.txt' curthread = 0xfffff80001a22740: pid 8252 tid 100331 critnest 1 "sh" fpcurthread = 0xfffff80001a22740: pid 8252 "sh" -rw-r--r-- 1 ps 197609 441834 Nov 19 02:53 'textdump.tar(4)//ddb.txt' curthread = 0xfffff80088a12740: pid 4364 tid 104673 critnest 1 "sh" fpcurthread = 0xfffff80088a12740: pid 4364 "sh" -rw-r--r-- 1 ps 197609 454361 Jan 23 02:50 'textdump.tar(5)//ddb.txt' curthread = 0xfffff8021b38d740: pid 28810 tid 100633 critnest 1 "sh" fpcurthread = 0xfffff8021b38d740: pid 28810 "sh" -rw-r--r-- 1 ps 197609 336991 May 9 16:27 'textdump.tar(6)//ddb.txt' curthread = 0xfffff80104540000: pid 37021 tid 128707 critnest 1 "sh" fpcurthread = 0xfffff80104540000: pid 37021 "sh" -rw-r--r-- 1 ps 197609 424254 Sep 28 2023 textdump.tar//ddb.txt curthread = 0xfffffe00cb1783a0: pid 73702 tid 101438 critnest 1 "rrdtool" fpcurthread = 0xfffffe00cb1783a0: pid 73702 "rrdtool"In the latest crash textdump.tar(6)/ddb.txt, the "sh" that crashed while trying to exit (state RE)

db:1:pfs> ps pid ppid pgrp uid state wmesg wchan cmd 37021 68125 22 0 RE CPU 6 shhas a parent (68125) that is also a shell

68125 1 22 0 S+ wait 0xfffffe00ca219b00 shwhose parent is "1" i.e the system init. If I check the running system there aren't many shell processes started and running from init.

[25.03-BETA][admin@pfsense.local.lan]/root: ps -lx | awk '{ if ( $3 == 1 ) { print $0 } }' | grep "/bin/sh" 0 98160 1 0 68 20 14644 3208 wait SN u0- 1:10.95 /bin/sh /var/db/rrd/updaterrd.shNow, this doesn't really take me any closer to understanding why the crashes occur on my system, but if you agree to my reasoning we might at least have narrowed the problem down a bit. I could uninstall RRD_Summary, but due to the infrequency of the crashes we wouldn't know for at least six months if that was the cause, and it wouldn't solve the actual problem either.

-

Right, that could well be the case but it shouldn't cause a kernel panic! I run that package here without issue. One of our devs is meditating on it.

-

@stephenw10 said in 25.03.b.20250507.1611 crash:

<5>gif0: loop detected

<5>gif0: loop detected

<5>gif0: loop detectedand regarding these, I managed to track down the root cause...

The loop detected indications appeared when I put my computer to sleep... What?

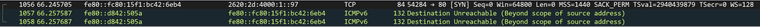

Packet tracing on the gif interface revealed this in wireshark:

After further digging I realised that one of my hyper-v guests (an Ubuntu instance) seems to be calling home every time it gets a suspend indication - but using the link-local address. I'm not sure if that's an issue in the hyper-v guest or the hyper-v server sending packets using the link-local address.

After updating the LAN rules to filter out _private6_ addresses I no longer see gif0 screaming about loop detections.

All is well, for now...

-

@stephenw10 but obviously there had to be multiple reasons for these "loop detected", I discovered one additional cause which seems more related to the inner workings of pfSense.

The scenario is as follows

- I put the computer to sleep

- a TCP retransmission is received on the gif interface aimed for the now sleeping computer

- after three seconds (timer expiry?) a ICMPv6 Destination Unreachable (Address Unreachable) is generated by pfSense

- this ICMPv6 packet is what triggers the "gif0: loop detected" (the timing in syslog and packet trace matches)

The information in the "destination unreachable" looks fine to me, so there is no obvious reason why it could be interpreted as "looped". I can upload the pcap if anyone is interested?

-

Hmm, how exactly are you using the gif tunnel(s) there?

-

@stephenw10 gif0 is the only gif tunnel I have, it is a tunnelbroker.net connection that provides IPv6 to the LAN (where the sleepy computer resides) and a number of VLANs.

Those LAN/VLANs all have static IPv6 configuration (/64) in the routed/48 tunnelbroker subnet.The router mode is set to Assisted with DHCPv6 servers running.

In addition to tunnelbroker.net I also have one VLAN that is configured with IPv6 by Tracking my ISP WAN which uses DHCPv6.

-

Hmm, curious. Yes, hard to see how that could create any sort of loop on any interface.

Is that client that goes to sleep attached directly to pfSense? Such that the link state could change when it goes into standby?

-

@stephenw10 said in 25.03.b.20250507.1611 crash:

Is that client that goes to sleep attached directly to pfSense?

No, there's an unmanaged switch inbetween