Squidguard category filtering silently fails with large blacklist - a workaround

-

Hi All,

This is a somewhat (?) well-known problem with pfSense, seemingly going back many years.

If you try to use a "large" blacklist like http://dsi.ut-capitole.fr/blacklists/download/blacklists.tar.gz you may run into problems with the category-based blocklists failing to work at all, or working only intermittently or partially, despite no errors appearing in the user interface when you download/apply the blacklist.

This is even mentioned on the page for that blacklist:

http://dsi.ut-capitole.fr/blacklists/index_en.php

"Squidguard in Pfsense has a problem with the size of the database. pfblockerNG (a specific package or pfsense) might be the solution: an explanation"

As the capitole.fr blacklist is the only remaining free one available that I can find, this is quite a big problem. There is a smaller size version of the blacklist available from the same site specifically to work around this problem with PFSense but that is not ideal as it obviously leaves out a lot of sites.

After digging into this, the root cause of the problem is a design decision to always extract the archive to a temporarily created RAM disk which is only 300MB in size. If the extracted files exceed this size, the extraction partially fails (without reporting any errors in the user interface, but that is a separate bug) and the incomplete blacklist files are then imported into the squidguard database.
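To check in advance whether a given archive is likely to overflow the ramdisk, something like the following rough Python sketch could be run on any machine that has the downloaded tar.gz. The function names are my own, and I'm assuming "300MB" means 300 * 1024 * 1024 bytes. It only sums file sizes, so real usage on the ramdisk will be somewhat higher once filesystem overhead is counted.

```python
import tarfile

# Ramdisk size described above, assumed to be 300 * 1024 * 1024 bytes.
RAMDISK_BYTES = 300 * 1024 * 1024


def extracted_size(archive) -> int:
    """Sum the sizes of all regular files in a tar archive.

    `archive` may be a path or an already opened binary file object.
    """
    if hasattr(archive, "read"):
        tar = tarfile.open(fileobj=archive, mode="r:*")
    else:
        tar = tarfile.open(archive, mode="r:*")
    with tar:
        return sum(member.size for member in tar if member.isfile())


def fits_in_ramdisk(archive, limit: int = RAMDISK_BYTES) -> bool:
    """Return True if the extracted payload is no larger than `limit` bytes."""
    return extracted_size(archive) <= limit
```

For example, `fits_in_ramdisk("blacklists.tar.gz")` returning False would suggest the extraction cannot complete on the stock 300MB ramdisk.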

This leaves you with an incomplete category database with many sections missing so you will find category blocking fails to work or some categories you have selected don't seem to work...

The capitole.fr database seems to be right on the edge of what will fit - on one of my systems it does seem to fit and it works, on another very similar system it fails miserably, not sure why... but it left me running around in circles for quite a while trying to understand why the category filters worked on one system and failed on a second identically configured system...

I'm assuming that extracting to a ramdisk was intended for small devices like the SG-1100 which have limited storage and limited write cycle life on that storage, (eMMC ?) however for people like me running PFSense on a Xeon Server with 8GB of ram and 128GB SSD there is absolutely no need to extract to a limited size ramdisk.

So I wrote a simple patch to modify squidguard_configurator.inc not to use a ramdisk and voila - the problem is solved.

I'm using the full size capitole.fr blacklist on two systems with 100% reliability, as well as auto-updating them twice a week using a custom cron job.

I present the patch for anyone who might want to try it at their own risk. Don't use it if you have a small device like one of the low end arm based netgate devices with limited storage as the extraction process may fill your drive and cause other problems, or cause unnecessary wear. (That's why the ramdisk is there in the first place)

However it will be fine and in fact highly desirable if you use a normal large SSD, spinning drive or are running on a virtual machine where storage limitations are not an issue.

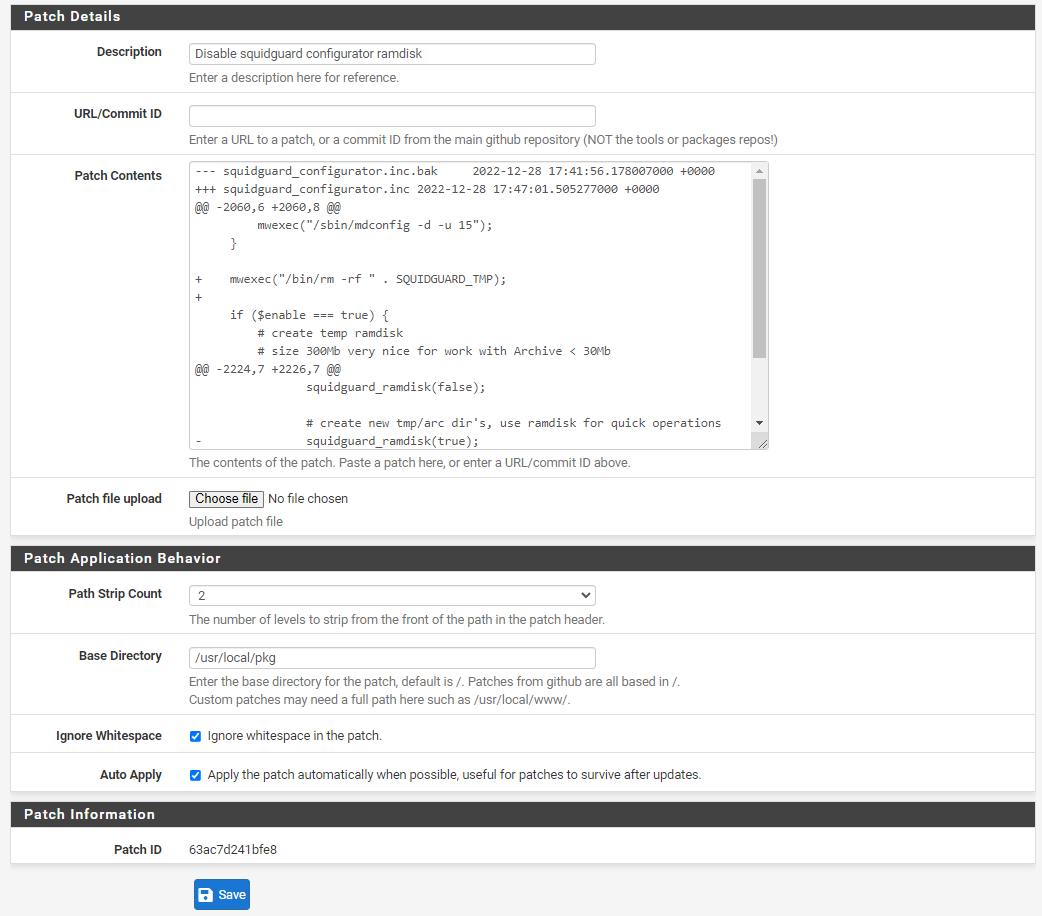

To apply the patch you'll need to install the System Patches package from the package manager, then go to Patches, Add new patch, and carefully copy and paste the following directly into Patch contents: (make sure you scroll down while selecting to get everything)

    --- squidguard_configurator.inc.bak 2022-12-28 17:41:56.178007000 +0000
    +++ squidguard_configurator.inc 2022-12-28 17:47:01.505277000 +0000
    @@ -2060,6 +2060,8 @@
     mwexec("/sbin/mdconfig -d -u 15");
     }
     
    + mwexec("/bin/rm -rf " . SQUIDGUARD_TMP);
    +
     if ($enable === true) {
     # create temp ramdisk
     # size 300Mb very nice for work with Archive < 30Mb
    @@ -2224,7 +2226,7 @@
     squidguard_ramdisk(false);
     
     # create new tmp/arc dir's, use ramdisk for quick operations
    - squidguard_ramdisk(true);
    + # squidguard_ramdisk(true); # don't use ramdisk!
     mwexec("/bin/mkdir -p -m 0755 {$tmp_unpack_dir}");
     mwexec("/bin/mkdir -p -m 0755 {$arc_db_dir}");

Make sure the base directory is set to /usr/local/pkg (see the screenshot above).

Then apply the patch to enable it. Once you do this large blacklists should work reliably.

Incidentally - I've noticed a bug with the "Auto Apply" option. It says that it should automatically re-apply the patch after an update, however I've noticed that if I uninstall squidguard and reinstall it, it does not re-apply the patch automatically unless I reboot as well. However, you can just click the apply button after reinstalling squidguard rather than rebooting.

Really the ability to use or not use a ramdisk should be a preference option in the Squidguard preferences pane - and it probably wouldn't be that hard to add.

If there is enough interest I could look at writing a better patch which actually adds a user interface preference check box to enable/disable use of a ramdisk, something that could potentially be submitted for inclusion in future versions...

-

@dbmandrake This is really cool, thank you! This should be added to the squid package.

-

@dbmandrake I really think you should open a redmine and post the patch there. Maybe it will get in before the release or as a package update a few weeks from now.

Good job!

-

@michmoor Just to be clear - the patch as it stands above is not suitable for inclusion as it is just a simple hack to disable ramdisk functionality for my (and probably many people's) use case. There are some small devices where the ramdisk is actually needed.

A proper fix would be adding a preference option in the UI which is quite a bit more work, but if I get the time I might have a look to see if I can come up with something, no promises though.

Squidguard is not part of the base install but is a separately installed package through the package manager, so as far as I know Netgate could push an update to the squidguard package at any time including a fix for this issue as it wouldn't be tied to the main release cycle of the base installation. (I just saw an update for the OpenVPN client export utility this morning and applied it for example)

-

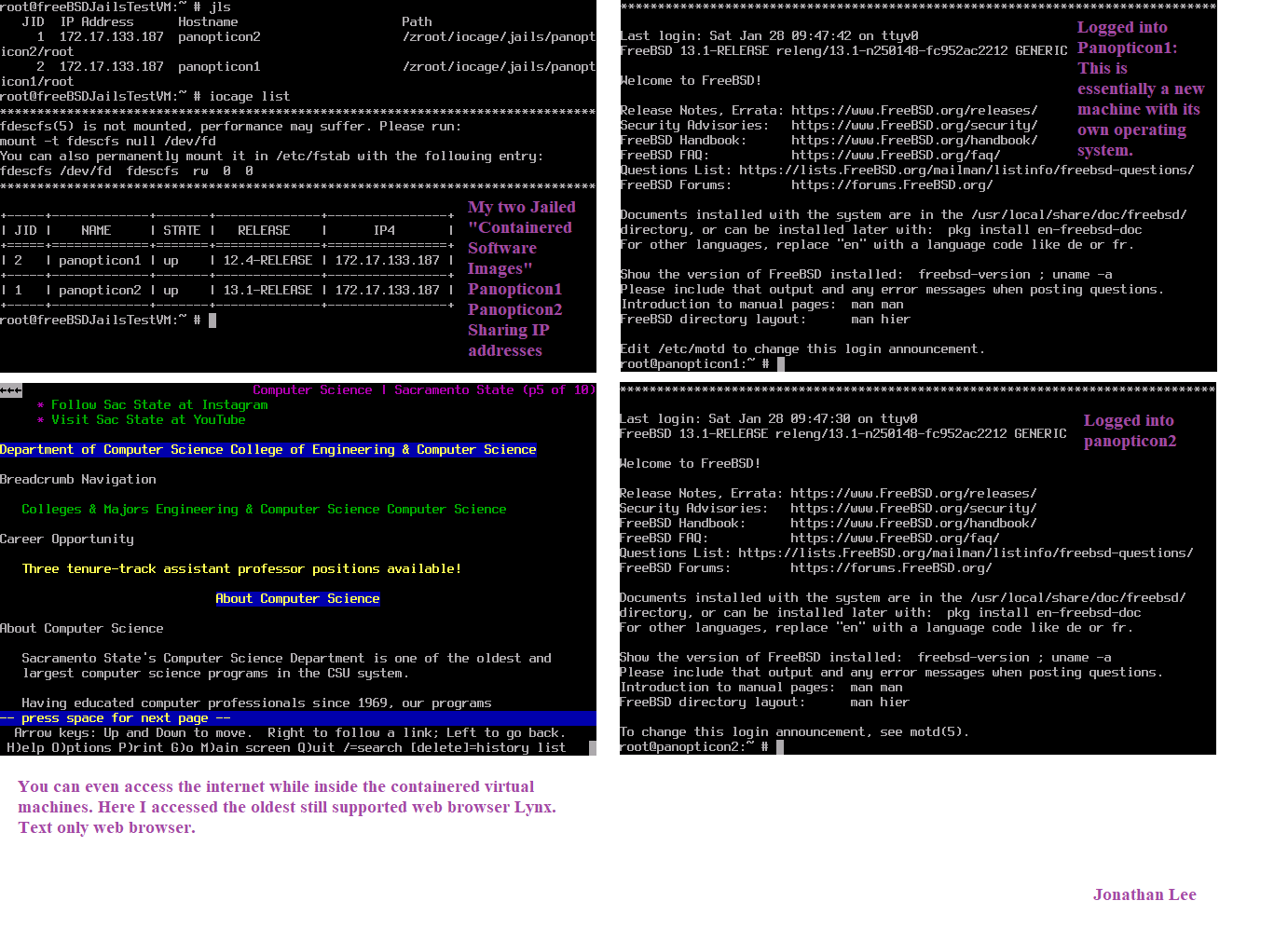

@dbmandrake the more I read about this issue the more I wonder if containers would be a good solution for this. Maybe a container that could spin up just for the download, install the blacklist, and delete itself, just to replace the updated URLs in a blacklist. Something like Docker, Kubernetes, Mesosphere, or FreeBSD Jails. Maybe not to the level of container orchestration, but on a smaller, single-container scale. It would be stored on the drive, and be isolated and protected. Some may say the RAM disk was the birth of virtualization. I always thought so during DOS 3.11 days. So why not just move into container utilization for blacklists? What are your thoughts on something like containerized blacklists to help with how large the URL blacklists are getting?

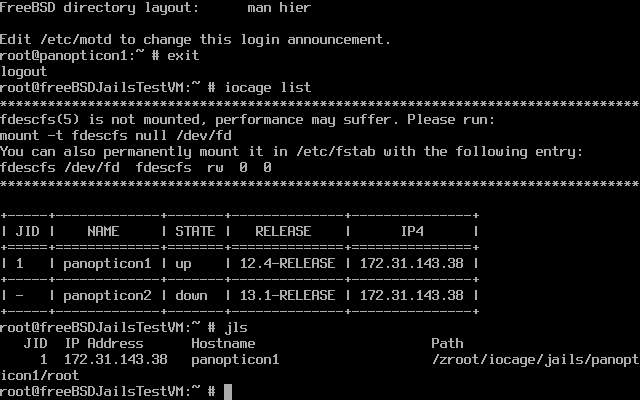

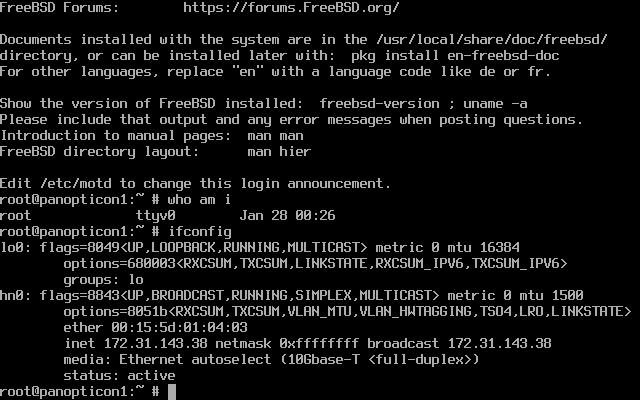

What about something like FreeBSD Jails? I have been researching them. They can even share IP addresses with the primary host. Also risky as they can data marshal the network cards.

(Image: FreeBSD Jails Testing with iocage management)

Hypothetical solution:

If you needed to have access to the container for a URL update, just start the download in the standby Jail; it downloads while the other Jail is in use. After the update is pushed, swap to the updated Jail as the primary and leave the other as standby for next time. Why even wait for the new download, right? It's just like AMO, the OS that runs the Siemens Hicom PBXs: they use dual boards, a primary and a secondary. To update software changes in a Hicom PBX you run the command "EXE-updat: BP, ALL;" two times so it sends the updates and changes to each board; when the command is run it changes the active board from 1 to 2 and afterwards back to 1. It's the same with "EXE-updat : A1, ALL;": they have an active and a standby. We have the software to do this with blacklists and containers now, so let's update the firewalls like this. Right?

This is a proven solution, currently in use as a hardware design for large-scale PBXs. We just need to retool it in the context of container configurations for use as a pfSense firewall software improvement.

(Image: Logged into FreeBSD Jail)

Hypothetical:

If the Jail is set up just to hold a blacklist database, it could self-check changes within that database, and so on within the two containers. Once an update completes in the standby jail, it just swaps in as the primary and you never see any downtime; the old primary becomes the secondary and waits until the next scheduled update. Maybe the solution is that we need a different kind of download, "a Jailed containerized download." One container holds the primary usable list, and once it is outdated it just swaps to the other container.

-

@jonathanlee Holy Cow there are plenty of porn websites.

Here's what I did as another workaround for just too many blocks in memory that slip through and fail. Maybe it's of interest to someone here.

By default, PFSense Blocks everything right?

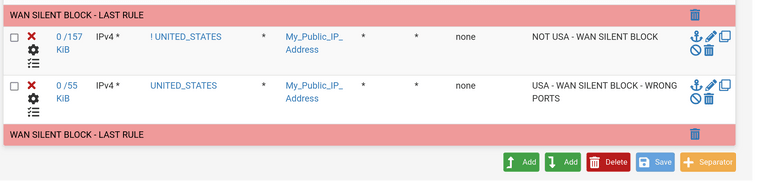

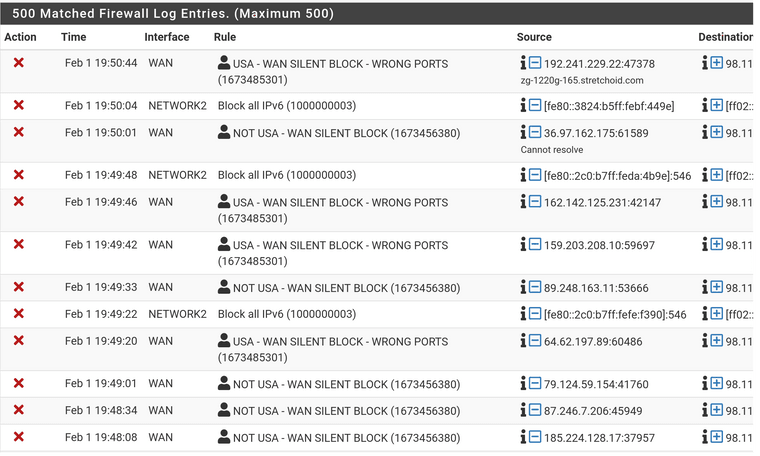

This is My specific Case WAN RULES:

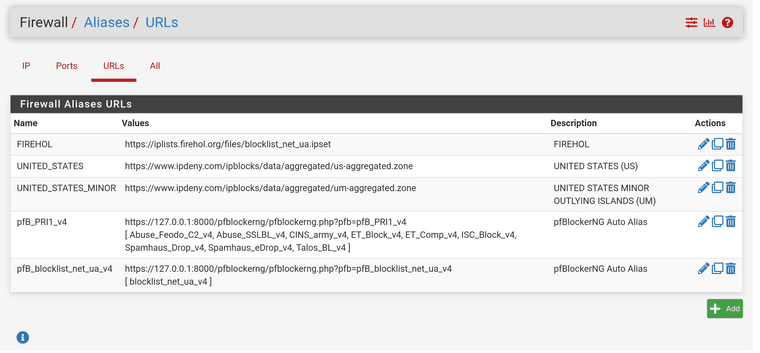

1) Allow Both USA IPS - This would work for most unless you command total global e-commerce!

Maybe you would allow a few others in. I already worked hard and added every country in PFSense ALIASES for you guys to take whatever you need. PFSENSE-GEOIP-List-of-Countries

2) Allow only these Ports - Created Aliases Called Allowed Ports

3) Create a Drop Rule as the last rule (yes, it drops anyway) and tag it in the real-time log as "Dropped Wrong Ports" or "Dropped Wrong Country!"

4) Finally, I only drop what's needed because of the small open footprint. Don't block the world!

Then, I created white lists for updates for Ubuntu, PFSense, Debian, Proxmox, etc at the Top of the Drop rule.

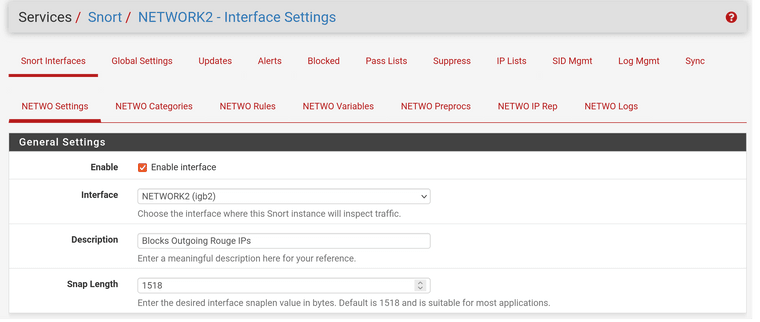

5) Lastly, on the LAN side or WAN side, use pfBlocker to protect your open inbound ports or just block outbound LAN. It's a choice, but remember that some of those rogue IPs are USA based (we passed "USA IPs and Selected Ports"), though most are not. Like I said, it's a choice, but now you should be able to use all these IPs without a rogue IP slipping through. Run Snort on the LAN side only.

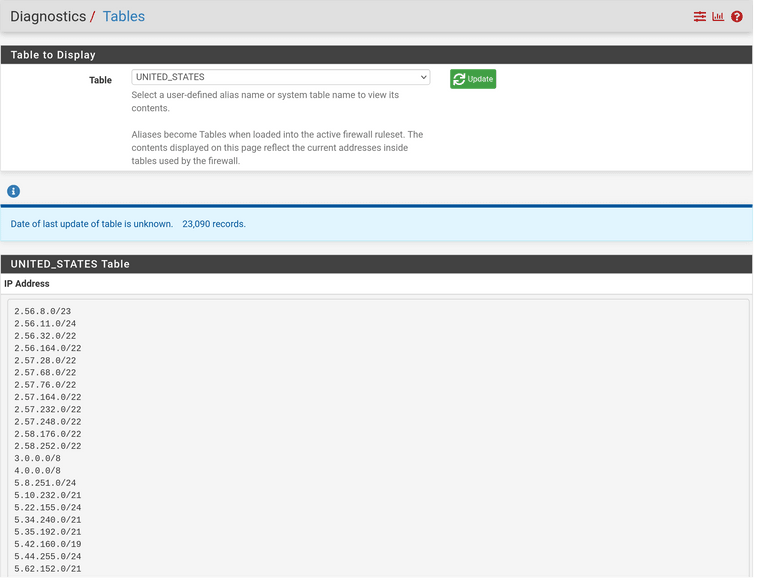

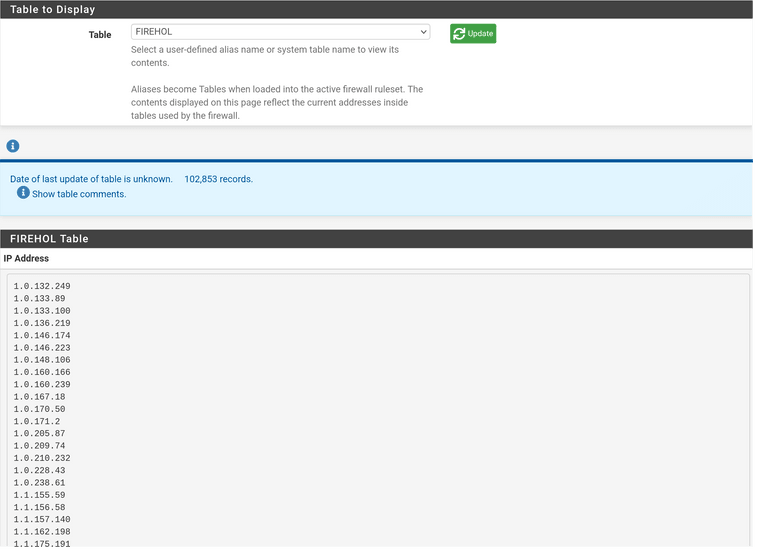

6) Now make absolutely sure your IPS lists are working @ Diagnostics / Tables.

7) System Advanced Firewall adjust "Firewall Maximum Table Entries!" The maximum number of table entries for systems such as aliases, sshguard, snort, etc, combined. Note: Leave this blank for the default. On this system, the default size is: 50,000,000.

I use 50 million (WTF) with 32 GB of RAM, but I'm sure I'm not even close to that. You will definitely have to tune this if you have less RAM. At some point, if you keep adding growing lists, you will have "getaways," so block only what you really need. Everything should work without adding multiple massive lists.

By locking down the IPs you allow, and by giving only those IPs limited access to a select few ports, you can reduce your footprint significantly and not bog down your firewall. I hope this helps someone.

-

@mikeinnyc yes, there are so many websites that need to be blocked that it causes a memory overflow when loading the blacklists, unreal right? I use a reduced list and add in specific needs.

-

@mikeinnyc Hi Mike great post, how much resources are being consumed with this configuration?

-

Here is a better example of Jails in use. Each is running within the host machine. Maybe a solution to attack this problem this way?

They can even change the IP addresses of the Jails while they are live, so you could set it to one or the other with Lan based IP addresses also.

-

I worked on Wall Street for a really fast three decades, going back before the days of IT lockdowns, to 56k dial-up, T1s, and the most expensive DSL connections at $800 a month. We "may have" used gambling sites and others (see the movie The Wolf of Wall Street!). I do not recall ever seeing porn on computer screens! Well, after a couple of industry lawsuits, here's how it rolled in every company afterward:

- DENY EVERYTHING - Guess what we have this using pfSense by default!

- Beg Compliance for Opening website access - Always denied. Thank gawd for cell phones :)

- Read approved websites list of maybe 1 page in total - all business related.

WHY- Because LOG FILES must be kept for life on Wall Street. So it's possible that "Girls Gone Really Wild," may come back to haunt me! :) Probably would help me hahaha.

Now, my recommendations - I have vast experience in getting sued as a CEO.

IF - You have employees or people you can physically tap on the shoulder (1099), then use the above. Only allow approved outgoing websites. They won't complain, because personal cell phone 5G is outside the scope and jurisdiction of record keeping unless it's business related. Besides, they screen record everything and video record. Using encrypted apps like Signal and DatChat for personal use is unstoppable.

If Web Hosting - Deny all countries by default, except a whitelisted block of IPs from the USA. You should probably use Cloudflare if you serve more than a few countries. There are so many bad actors from certain countries.

Deny all ports - Except this whitelist of ports. You can further add IPs to lock it down more.

Then, protect those web hosting ports with rate-limiting stick tables (HAProxy) and other filters.

The point is that with web hosting you should rarely need outbound deny lists. Why? Because by default you deny everything except this IP, that IP, and this port. Your production network traffic should not leak into private LANs, period.

Buy enterprise hardware, not VMs. You will always have problems so check logs.

-

Guys, all this talk about firewall rule policies, HA Proxy, corporate policies etc is nothing to do with what this topic is about and is only serving to derail and dilute the thread.

Please try to stick to discussing the bug in the SquidGuard package which allows a too-small ramdisk to overflow during extraction without any warnings or errors and then imports a corrupted database into squidGuard. This is what this thread is about. Thanks.

-

It is about Business Impact Analysis and Mission Essential Functions.

The rabbit hole:

Yes, configure your system on an as-needed basis. Take many factors into consideration, among them Data Classifications, Geographical Considerations, Data Sovereignty, and Organizational Consequences. The Serverless Architecture of our modern systems also starts to play a key role when using something like Azure as a Domain. Moreover, Software Defined Networking is playing more of a role than most end users know about today with the use of hyper-convergence. "User-facing" problems are far different from confidentiality and data integrity problems, and they require different considerations within risk mitigation. It is not only machine virtualization that needs consideration, but also container virtualization and full application virtualization. They take different roles within risk mitigation as they can easily perform data marshalling over the NICs. Look at how many cloud service models there are today: Infrastructure as a service, Software as a service, Platform as a service, Anything as a service, and even Security as a service. The toxic idea of just avoiding virtualization, and/or ignoring it, no longer applies. Risk mitigation plans have to include virtualization today. All needs must be taken into consideration when implementing authorization solutions. It's Discretionary and Role-Based Access Control. Windows 11 helped to solve some of the issues with virtualization risks on an end user platform, as some issues were occurring inside of Windows 10.

Access Control Lists:

I have also noticed that with some websites, if you simply block the IP address in the access control lists, they can still be accessed over HTTPS or HTTP. That is why I am using Squidguard: it checks the HTTP/HTTPS GET requests, but it needs a blacklist to function, manual or downloadable.

One of the reasons why I am studying software and computer science is to help find a really good solution, and it seems that as soon as we get a good one working, some prototype protocol evolves that needs to be accounted for and that does not follow the rules or comply with the Internet Assigned Numbers Authority. Why have rules like the ones from IANA if there is no compliance? Now in comes the need for something like internet backbone compliance servers or cards installed right on a Ciena system that can track and block prototype protocol abuses. Let's agree on one thing: the wild west days before GDPR and CCPA are gone forever.

-

@dbmandrake Please take time to check my hypothetical solution with Squidguard lists above, and let me know what you think.

-

@jonathanlee I know this sounds off-topic but it's dead on.

Don't block the world and call it a bug :)

This is a hardware limitation issue. Try adding more memory first and then add many IP aliases.

The real question is network design.

By Default pfSense Blocks everything. How can we add more whitelists?

Do you really want employees accessing everything or is this "Home use only?"

Sorry to be abrupt but this solves your problem. Better hardware and more RAM.

-

@jonathanlee Re: Containers / Jails.

This seems like a massive degree of overkill, what problem do they solve exactly ?

The reason why the ramdisk exists at all is for small devices with limited storage where the temporary disk space needed to extract the plain ascii version of the blocklists (around 300MB for the blocklist I'm using) would cause the device to run out of disk space.

I'm just not seeing how a container solves the problem of lack of disk space.

It also speeds up the extraction and importing process since what are essentially temp files don't have to be written out to disk.

However on a server with a decent sized SSD there isn't really any advantage to using the ramdisk apart from a slight speed increase, but the disadvantage is it can fail with larger blocklists and due to inadequate error checking the failure is not detected and the incomplete blocklist is imported into squidguard without complaint which then silently breaks your filter categories. This is a big problem in an environment like a school where a school has a duty of care to not allow pupils to access certain kinds of websites.

If I do write a patch to add a ramdisk enable/disable preference option I will also write a patch to fix the error checking so that a failure due to exceeding the ramdisk size (when enabled) is reported to the user and the incomplete blocklists do not overwrite the currently active ones.
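To illustrate the behaviour I have in mind (this is not the actual squidguard code, which is PHP, just a rough Python sketch with made-up names like `update_blacklist`): extract into a staging directory, treat any error or short file as a failure, and only replace the active blocklists once everything checks out. It assumes the staging and active directories are on the same filesystem so the renames are cheap.

```python
import os
import shutil
import tarfile
import tempfile


def update_blacklist(archive_path: str, active_dir: str, work_dir: str) -> None:
    """Extract archive_path into a staging directory under work_dir and
    replace active_dir only if every file was extracted at full size."""
    staging = tempfile.mkdtemp(prefix="staging_", dir=work_dir)
    try:
        with tarfile.open(archive_path, mode="r:*") as tar:
            members = [m for m in tar.getmembers() if m.isfile()]
            tar.extractall(staging)  # raises OSError if the target fills up
        for m in members:
            # A short or missing file means the extraction was incomplete.
            if os.path.getsize(os.path.join(staging, m.name)) != m.size:
                raise OSError(f"incomplete file: {m.name}")
    except Exception as exc:
        # Report the failure and leave the active blocklists untouched.
        shutil.rmtree(staging, ignore_errors=True)
        raise RuntimeError(f"blacklist update aborted: {exc}") from exc

    # Success: move the old copy aside, promote staging, then clean up.
    previous = active_dir + ".old"
    shutil.rmtree(previous, ignore_errors=True)
    if os.path.exists(active_dir):
        os.rename(active_dir, previous)
    os.rename(staging, active_dir)
    shutil.rmtree(previous, ignore_errors=True)
```

The key design point is that the failure path never touches `active_dir`, so a full ramdisk would produce an error for the user instead of a silently truncated category database.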

I would like to do this, it's just a matter of finding the time to work on it as I'm bogged down with too many things at the moment.

-

@mikeinnyc Sorry but your comments are way off the mark - "Better hardware and more ram" doesn't solve anything. You clearly haven't read and/or understood the original post and grasped the issue with the fixed size ramdisk which is currently a part of the blocklist import process.

The hardware is absolutely capable of working with a blocklist of the size in question - without even breaking a sweat. Once the limitation of the small, fixed size ramdisk is removed, that is.

-

@dbmandrake I'm thinking that post was some type of SPAM. I could be wrong.

-

@dbmandrake I actually forgot about the speeds of SSD drives today. I hoped the hypothetical solution would also help solve the issue with downtime when updating blacklists: on my firewall everything goes offline during blacklist updates, and the firewall can't use the full blacklist because of the same issue you described and solved. My system is the MAX so it has an extra 30GB SSD on it. Additionally, it could protect blacklist uptime if something got corrupted by a bad blacklist update, as it could default back to the other container if that should ever occur. Kind of like HAProxy just for blacklists, primary and secondary. High availability.

Thanks for looking at that post, I just wanted to have some input on it with Squidguard, alongside more visibility on FreeBSD Jails for possibly retooling them for something else.

-

@jonathanlee said in Squidguard category filtering silently fails with large blacklist - a workaround:

@dbmandrake I actually forgot about the speeds of SSD drives today, the hypothetical solution I hoped would also help solve the issue with downtime when updating blacklists, on my firewall everything goes offline during blacklist updates, and cant use the full blacklist because of the issue you described.

I've been using the full size blacklist since before I started this thread without issue - with the patch to disable the ramdisk. No issues have cropped up yet, in fact the firewall hasn't been rebooted since before this thread was started. I actually have a second firewall running this patch as well as I've had to temporarily set up a second proxy server for a slightly different use case.

Regarding going offline during the update, I'd have to check but as far as I know Squid doesn't go offline during the extraction of the tar file - which is the longest part of the process.

I think it's only offline for a few seconds at the end of the import process for the same amount of time as if you'd pressed the Apply button in the squidguard config page, which forces squidguard to re-read the on disk version of the blacklist binary database into memory.

But I should run a test to time how long the proxy is out of action. I have mine scheduled to do the blacklist update automatically at 2am anyway so if the proxy is down for a few seconds at 2am nobody cares.
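A test along these lines could be as simple as polling the proxy port from a LAN client while the update runs. The following is only a sketch: the helper names are invented, and the host and port in the usage note are assumptions (3128 is Squid's default port, yours may differ).

```python
import socket
import time
from typing import Callable


def port_is_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def longest_outage(probe: Callable[[], bool], duration: float,
                   interval: float = 0.5,
                   clock: Callable[[], float] = time.monotonic,
                   sleep: Callable[[float], None] = time.sleep) -> float:
    """Poll probe() for `duration` seconds and return the longest
    continuous stretch (in seconds) during which it returned False."""
    end = clock() + duration
    down_since = None
    longest = 0.0
    while True:
        now = clock()
        if now >= end:
            break
        if probe():
            if down_since is not None:
                longest = max(longest, now - down_since)
                down_since = None
        elif down_since is None:
            down_since = now
        sleep(interval)
    if down_since is not None:
        # Still down when the measurement window closed.
        longest = max(longest, clock() - down_since)
    return longest
```

Something like `longest_outage(lambda: port_is_open("192.168.1.1", 3128), duration=600)` started just before the scheduled update would give a rough figure, accurate to about one polling interval. Note it only measures whether the port accepts connections, not whether filtering is working.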

Not sure what you mean when you say "everything" goes offline on your firewall when the blacklist updates - only the proxy (and transparent proxy) will be affected, all other traffic is unaffected.