half network speed after upgrading

-

So plenty powerful enough then!

Link states are all as expected?

Try testing locally using iperf. Or use speedtest-cli from pfSense itself. Try to narrow down which NIC(s) are throttling the connection.

Steve

-

Link states are as expected; I even tried manually setting them to specific values. The i225 is running at 2.5G and the Chelsio at 1G, so since the speed is limited to exactly half of 1G, I'm going to guess it's something with the Chelsio card. I don't have a second card to drop in and test, though. pfBlockerNG also seems to be giving me some trouble after the update: for some reason it's taking 1-2 full cores for some tmp log. I'll have to report that to them.

-

@high_bounce said in half network speed after upgrading:

chelsio 520bt.

That's a dual-port card? Try testing between those ports if they are both on internal subnets.

-

Yes, it's a dual-port card. I tried changing to the other port: same problem.

-

Right, but testing between the ports with iperf, or between a client and pfSense running iperf itself, would show whether it's the Chelsio.

-

I ran iperf between my main desktop and pfSense and I get 450 Mbps from pfSense but 950 Mbps to pfSense. If there are other commands you would like me to run, please post them; I haven't used iperf outside of speed tests.

-

Ah, interesting. I'd try swapping the server and client roles so the states are opened the other way and see if that changes anything.
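A minimal sketch of a two-direction test, assuming iperf3 is installed on both machines and `iperf3 -s` is already running on pfSense; the function name and the example address are placeholders. Note that `-R` only reverses which side sends the data: the client still opens the connection, so the firewall state is created the same way. Swapping the roles as suggested means running `iperf3 -s` on the desktop and `iperf3 -c <desktop address>` from pfSense instead.

```shell
#!/bin/sh
# Sketch: measure TCP throughput both ways between this machine and an
# iperf3 server (assumed here to be pfSense running `iperf3 -s`).
# Usage: iperf_both_ways SERVER_ADDRESS   (function name is hypothetical)

iperf_both_ways() {
    server=$1
    # Normal mode: this machine (the client) sends, the server receives.
    echo "== client -> server =="
    iperf3 -c "$server" -t 10
    # Reverse mode (-R): the server sends over the connection the client opened.
    echo "== server -> client (-R) =="
    iperf3 -c "$server" -t 10 -R
}

# Example (placeholder address):
# iperf_both_ways 192.168.1.1
```

If only the `-R` run is slow, the problem follows the data direction; if it only shows up when pfSense opens the connection, it follows the state direction.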

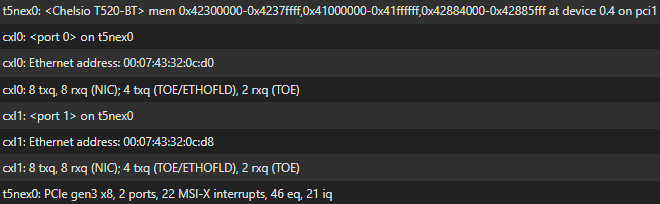

Make sure the Chelsio shows multiple Tx and Rx queues in the boot logs when the driver attaches. It could be using 1 CPU core in one direction for some reason, though with that CPU it should still be OK.

-

The Rx/Tx queues are off; I have turned off hyperthreading on the CPU. That's all I see that's wrong.

-

@high_bounce Perhaps this is old school and things don't work this way anymore, but generally the second you actually SET a speed and duplex on one side, the link goes to hell. Because it is no longer auto-negotiating properly, the other side will fall back to half duplex and the link will slow to a crawl.
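A minimal sketch for going back to autonegotiation from the shell, using FreeBSD's `ifconfig <if> media autoselect`; the function name is hypothetical. It is a runtime change only: on pfSense the persistent equivalent is leaving the interface's Speed and Duplex setting at its default, and the GUI value may be re-applied whenever the interface is reconfigured.

```shell
#!/bin/sh
# Sketch: put a FreeBSD/pfSense interface back on autonegotiation and show
# what the link negotiated. Runtime only, not persistent.
# Usage: restore_autoneg IFNAME   (function name is hypothetical)

restore_autoneg() {
    ifname=$1
    # "media autoselect" asks the driver to autonegotiate speed and duplex.
    ifconfig "$ifname" media autoselect || return 1
    # Print only the negotiated media and link status lines.
    ifconfig "$ifname" | grep -E '^[[:space:]]*(media|status):'
}
```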

If you set anything at all manually, you need to be hyper-vigilant about setting everything manually... and most consumer switches and modems can't even be set manually, so that's guaranteed failure.

-

Yeah, 8 queues each way, no problem.

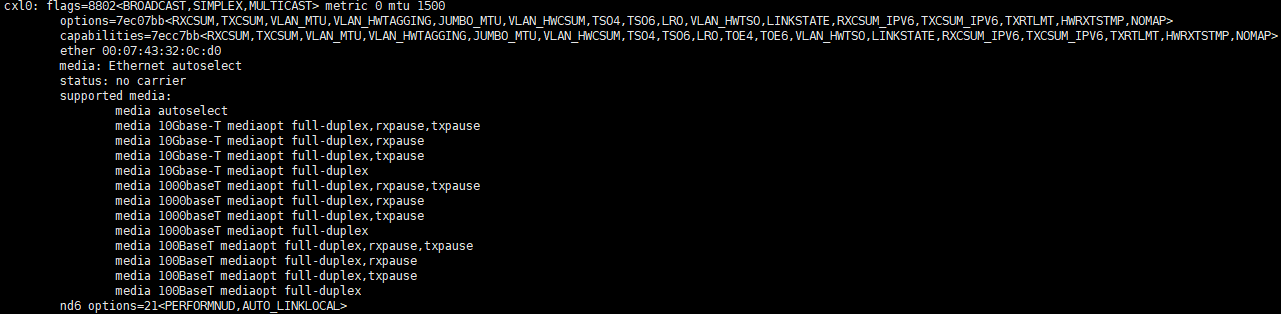

You could look at which offload options are enabled in:

ifconfig -vvvm cxl0

-

Hmm, I'd try disabling TSO4 and LRO as a first step there. Those are both normally disabled by default in System > Advanced > Networking.
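A small sketch for checking which offloads are actually enabled, based on the `options=<...>` line that FreeBSD's `ifconfig` prints; the function name is hypothetical. The `capabilities=` line (shown with `-m`) is ignored because it lists what the driver supports rather than what is switched on, and the `nd6 options=` line is excluded by anchoring the match.

```shell
#!/bin/sh
# Sketch: report whether the given offload flags appear in an interface's
# enabled options (the "options=<...>" line printed by FreeBSD ifconfig).
# Usage: offload_status IFNAME FLAG...   e.g. offload_status cxl0 TSO4 LRO
# (function name is hypothetical)

offload_status() {
    ifname=$1
    shift
    # Anchor at line start so "nd6 options=" and "capabilities=" are skipped.
    opts=$(ifconfig "$ifname" | grep -E '^[[:space:]]*options=')
    for flag in "$@"; do
        # Match whole names between < , > so e.g. TSO does not match VLAN_HWTSO.
        if printf '%s\n' "$opts" | grep -Eq "[<,]${flag}[,>]"; then
            echo "$flag on"
        else
            echo "$flag off"
        fi
    done
}
```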

-

Both are already checked in the Advanced settings. Should I try unchecking and then rechecking them in the UI?

-

Yes, try that, though it may require a reboot.

Otherwise you should be able to manually disable them using ifconfig like:

ifconfig cxl0 -tso4

Unfortunately the syntax there can be confusing and I don't have anything set up right now to check.
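A sketch that turns off both TSO for IPv4 and LRO in a single `ifconfig` call and then prints the enabled-options line so the change can be confirmed; the function name is hypothetical. Like any manual `ifconfig` change, it does not survive a reboot.

```shell
#!/bin/sh
# Sketch: turn off TSO (IPv4) and LRO on one interface at runtime, then
# print the enabled-options line so the change can be checked.
# Not persistent: the settings revert on reboot.
# Usage: disable_tso_lro IFNAME   (function name is hypothetical)

disable_tso_lro() {
    ifname=$1
    # A leading "-" on an ifconfig capability name disables it.
    ifconfig "$ifname" -tso4 -lro || return 1
    # Show only the "options=" line (not "nd6 options=").
    ifconfig "$ifname" | grep -E '^[[:space:]]*options='
}
```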

-

No change with the checkboxes or ifconfig. The ifconfig changes get reset after a reboot, so maybe they need to be set in a config file.

-

Disabling those options using ifconfig doesn't work? Or it doesn't make any difference to throughput?

-

It changes the options for the interface, but there's no change in throughput. I also switched the boot environment back to 22.05 to check for differences. I found a few, though I have no idea whether they matter:

Boot logs:

23.01: 8 txq/rxq, 22 MSI-X, 46 eq, 21 iq

22.05: 4 txq/rxq, 14 MSI-X, 30 eq, 13 iq

ifconfig looks the same, but 23.01 has an option called "NOMAP". I'm not sure what it means and can't find anything online about it.
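For reference, the counters that differ between the two boot logs all move in step with the queue count. Taking the txq/rxq figures as per-port counts and the MSI-X, eq and iq figures as totals for the two-port adapter (an assumption about how the driver reports them), the arithmetic is:

$$
\begin{aligned}
\Delta q_{\text{port}} &= 8 - 4 = 4 \\
\Delta\,\text{MSI-X} &= 22 - 14 = 8 = 2 \times \Delta q_{\text{port}} \\
\Delta\,\text{iq} &= 21 - 13 = 8 = 2 \times \Delta q_{\text{port}} \\
\Delta\,\text{eq} &= 46 - 30 = 16 = 4 \times \Delta q_{\text{port}}
\end{aligned}
$$

So the extra interrupts and queues in 23.01 look like a side effect of going from 4 to 8 queues per port rather than a separate change.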

-

NOMAP is to allow unmapped mbufs. I don't expect it to make any difference, but I can't find any way to disable it! It looks like you should be able to use

ifconfig cxl0 -nomap

but that doesn't work for me. You can almost certainly tune the queues back to 4x in the sysctls/tunables, but it's hard to imagine that would help. 22 MSI-X interrupts... I couldn't say, but again it's hard to see how more would be worse.
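If you want to try the 4-queue layout from 22.05 anyway, the cxgbe(4) driver documents loader tunables for the per-port NIC queue counts; `hw.cxgbe.ntxq` and `hw.cxgbe.nrxq` are assumed here, so check `man cxgbe` on your version to confirm the names. On pfSense, custom loader tunables normally go in `/boot/loader.conf.local` and only take effect after a reboot. The helper below just prints the lines; its name is hypothetical.

```shell
#!/bin/sh
# Sketch: print loader.conf lines asking the cxgbe driver for N tx and N rx
# queues per port. Tunable names assumed from cxgbe(4); verify on your system.
# Usage: cxgbe_queue_tunables 4 >> /boot/loader.conf.local   (then reboot)
# (function name is hypothetical)

cxgbe_queue_tunables() {
    n=$1
    # Reject empty or non-numeric input.
    case $n in
        ''|*[!0-9]*) echo "usage: cxgbe_queue_tunables N (N >= 1)" >&2; return 1 ;;
    esac
    # Reject zero.
    if [ "$n" -lt 1 ]; then
        echo "usage: cxgbe_queue_tunables N (N >= 1)" >&2
        return 1
    fi
    printf 'hw.cxgbe.ntxq="%s"\n' "$n"
    printf 'hw.cxgbe.nrxq="%s"\n' "$n"
}
```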

If you run

top -HaSP

during a test, do you see the load spread across the cores correctly?

-

No change in CPU utilisation. I'm still at a loss as to the cause. Are you able to replicate this on your end?

-

I don't have a Chelsio 10GBase-T NIC to test with unfortunately. But I haven't seen anything like that on any other NICs.