limit of virtio performance

-

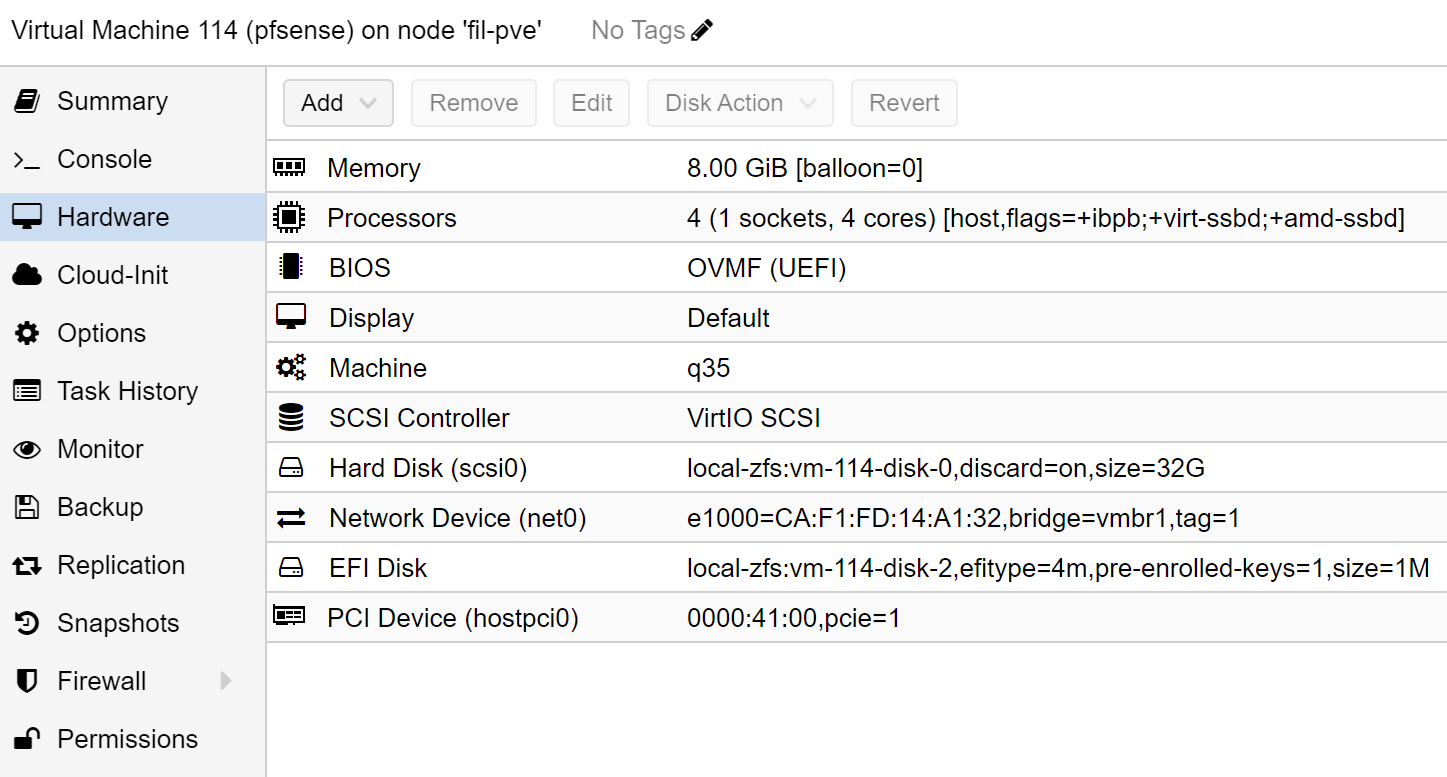

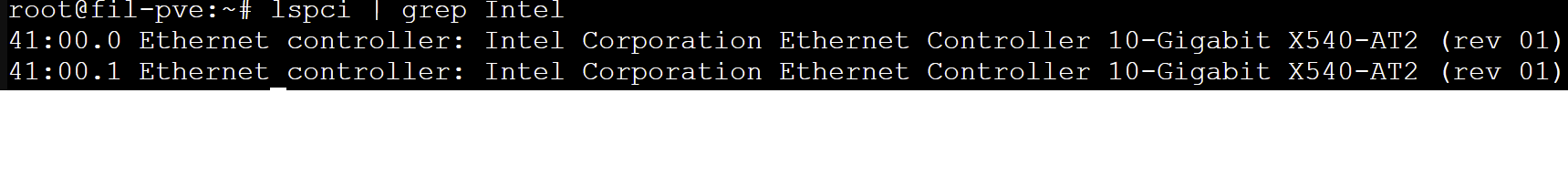

I am running pfSense on Proxmox. The host is very lightly loaded and plenty of resources are allocated to pfSense, so there is no bottleneck there. I normally use an Intel X540 NIC with passthrough: one port is connected to the WAN (10G) and the other port to my L2 switch (10G, various VLANs).

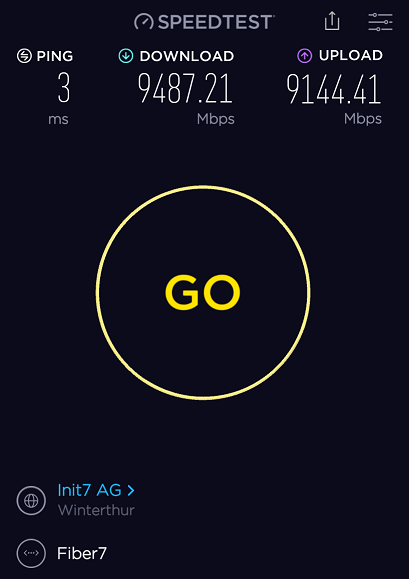

I test the connection speed with Speedtest. My ISP hosts a Speedtest server on their site, so I am only testing the connection to the ISP. With the X540, I always see over 9Gb/s.

I thought maybe I could remove the physical NIC, so I assigned virtio interfaces to pfSense (one for WAN and one for LAN, each connected to a different bridge and a different physical port on the host). When I tested again, the maximum I saw was around 6Gb/s (with hardware checksum offload, LRO, TSO etc. disabled). Is this the maximum that can be reached with a virtio adapter?

-

Offtopic reply:

@metebalci I'm honestly surprised you can reach 9Gbit/s with passthrough.

Is this with nat and firewall enabled?

What hardware are you running? Passthrough will frequently increase performance.

How much virtual NICs suffer depends on the hypervisor's compatibility with FreeBSD. You could try a beta version to see if that makes a difference, but current beta snapshots have debugging enabled, which can limit performance.

-

@heper Yes, pfSense is my only firewall and router. I have IPv6 set up, so normally NAT is not used much, but I tested both with and without IPv6 enabled on my Windows PC, where I run the Speedtest app, and the results do not differ: always over 9Gb/s, and the rate is actually still increasing when the test finishes at around 9.5Gb/s. Glancing at the top output on pfSense, I see around 75% CPU use at peak, but the test finishes quickly, so I am not sure how accurate this is.

Proxmox is installed on an EPYC 7313P (16 cores, 3GHz) system, and at the moment I have 4 cores and 8GB RAM assigned to pfSense. I did not pin the pfSense VM, so it is not 100% optimized; I could pin it closer to where the NIC is actually connected (there are 4 NUMA nodes), but for 10G it does not seem to matter. The NIC is an Intel X540-AT2 with 2 copper ports: the WAN port is connected to a media converter (copper to SFP+) which goes to the FTTH port, and the other port is connected to a QNAP switch to which my PC is also connected.
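If pinning is ever tried, the first step is finding which CPUs share a NUMA node with the NIC. A minimal sketch, assuming a Linux host such as Proxmox: it reads the NIC's NUMA node from sysfs and expands that node's CPU list into core numbers that could then be used for pinning. The interface name in the usage line is a hypothetical placeholder. A port passed through to the guest (bound to vfio-pci) has no entry under /sys/class/net, so for the X540 you would read /sys/bus/pci/devices/<PCI address>/numa_node instead.

```python
from pathlib import Path


def parse_cpulist(text: str) -> list[int]:
    """Expand a Linux cpulist string such as "0-3,8-11" into individual CPU ids."""
    cpus: list[int] = []
    for part in text.strip().split(","):
        if not part:
            continue
        if "-" in part:
            # A range like "8-11" is inclusive on both ends.
            start, end = part.split("-")
            cpus.extend(range(int(start), int(end) + 1))
        else:
            cpus.append(int(part))
    return cpus


def nic_local_cpus(iface: str, sysfs: Path = Path("/sys")) -> list[int]:
    """Return the CPUs on the same NUMA node as a host network interface.

    Reads /sys/class/net/<iface>/device/numa_node; the kernel reports -1
    when it has no NUMA affinity information, and then [] is returned.
    """
    node = int((sysfs / "class/net" / iface / "device/numa_node").read_text())
    if node < 0:
        return []
    cpulist = (sysfs / "devices/system/node" / f"node{node}" / "cpulist").read_text()
    return parse_cpulist(cpulist)
```

Usage on the host would look like `nic_local_cpus("enp65s0f0")` (hypothetical name; substitute the physical port behind the bridge).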

-

@metebalci said in limit of virtio performance:

is installed on an EPYC 7313P (16 cores, 3GHz) system

That explains why it manages to handle 10GbE reasonably well in Proxmox.

Possibly related info about virtio:

https://forum.netgate.com/topic/138174/pfsense-vtnet-lack-of-queues/13

Not sure if this TX/RX queue issue is the same with Proxmox.

-

@heper I also tried setting the vtnet max queue count to 8, but I didn't check whether it was applied. I should do a more controlled experiment and also try Linux; I don't know whether this is related to the hypervisor or to FreeBSD.

-

I just tried a fresh FreeBSD 12.3 install with a virtio adapter and it actually reaches >9Gb/s. So it seems the problem is neither Proxmox nor FreeBSD 12.3. I tried both q35/OVMF and i440fx/SeaBIOS guest types.

-

I am confused. I have one pfSense 2.6 VM (FreeBSD 12.3) and one FreeBSD 12.3 VM, both with exactly the same VM settings (the only difference is that pfSense has 2 interfaces). I installed iperf3 on both. Testing from my PC on the same 10G network, FreeBSD 12.3 reaches >9Gb/s while pfSense reaches ~2Gb/s. I disabled packet filtering/firewall on pfSense, with no change. So what is the difference?

-

@metebalci

Never measure performance with pfSense as an endpoint. Always measure through it.

-

@heper OK. I realized my ISP also has an iperf3 server listening, so I tried that instead of Speedtest. I attached a virtio (vtnet) interface to pfSense and made a better comparison: single vs. parallel flows, IPv4 vs. IPv6, and traffic coming from the physical port (ix) vs. virtio (vtnet). WAN is always the physical port.
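A test matrix like this is easier to tabulate from iperf3's JSON mode (`-J`) than from its text output. A minimal sketch, assuming TCP runs captured with something like `iperf3 -c <server> -J`, adding `-P 4` for parallel flows and `-4`/`-6` for the address family, where `<server>` stands for the ISP's iperf3 host. For TCP tests, `end.sum_received.bits_per_second` is the receiver-side total, which already sums all parallel streams. The run labels are hypothetical.

```python
import json


def received_gbps(iperf3_json: str) -> float:
    """Return receiver-side TCP throughput in Gb/s from `iperf3 -J` output.

    With -P N, end.sum_received already aggregates all N streams.
    """
    result = json.loads(iperf3_json)
    # iperf3 reports failures (e.g. server busy) in a top-level "error" field.
    if "error" in result:
        raise RuntimeError(result["error"])
    return result["end"]["sum_received"]["bits_per_second"] / 1e9


def summarize(runs: dict[str, str]) -> dict[str, float]:
    """Map each labelled run (e.g. "vtnet IPv4 -P 4") to Gb/s, rounded to 0.01."""
    return {label: round(received_gbps(raw), 2) for label, raw in runs.items()}
```

Each saved JSON file would go into `summarize` under a label such as `"ix IPv6 single"`, giving one number per cell of the comparison.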

You were right, I think it is related to the TX/RX queue issue you linked above.

My test results show that a single flow (ix or vtnet) can reach around 5Gb/s on my system (packet filtering enabled). With parallel flows, ix reaches 9Gb/s regardless of IPv4 (NAT) or IPv6 (no NAT), using around 70% CPU (4 cores). However, with vtnet and parallel flows, neither throughput nor CPU use changes: it stays around 5-6Gb/s, similar to a single flow. So vtnet is actually very good for a single flow (as good as the physical port), and its CPU consumption is not different (maybe a few percent higher).
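The pattern here (parallel flows scale on ix but not on vtnet) is what the single-queue hypothesis from the linked thread would predict. A toy model makes the arithmetic explicit. It is not a measurement, and it rests on hypothetical assumptions: each TX/RX queue pair is processed by one CPU and saturates at about the ~5Gb/s single-flow rate seen here, flows spread perfectly evenly across queues (real hashing is less even), ix gets one queue pair per vCPU, and the 10G line rate caps the total.

```python
def aggregate_gbps(flows: int, queues: int, per_queue_gbps: float,
                   link_gbps: float = 10.0) -> float:
    """Toy estimate of aggregate throughput when flows share TX/RX queue pairs.

    Only min(flows, queues) queues can be busy; each adds per_queue_gbps,
    and the link rate caps the sum.
    """
    active_queues = min(flows, queues)
    return min(link_gbps, active_queues * per_queue_gbps)
```

Under these assumptions, `aggregate_gbps(4, 1, 5.0)` gives 5.0 (vtnet limited to one queue) while `aggregate_gbps(4, 4, 5.0)` gives 10.0 (ix with 4 queues); measured TCP goodput lands somewhat below the raw line rate, consistent with the ~9Gb/s observed on ix.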