Best simple network

-

@fireix $5000 is at the cheap end. It all depends on need.

A 48-port 10G SFP+ capable switch from Juniper will run someone $30-45k without VAR discounts.

But if you can get a MikroTik at sub-$600, go for it. I would do stacking to make my life easier. pfSense will have a LAGG down to the stack with a link in each member, and you are trunking VLANs downstream while pfSense is the L3 gateway for the VLANs.

-

@michmoor My budget doesn't allow two switches at $30-45K each :)

I do like the single panel with stacking, but I'm almost never inside it anyway (almost no changes to the network are needed) and it isn't that hard to log in to two switches.

So if I can do it with STP or what seems to be called "spine and leaf", that would be great. I can't see why it shouldn't work. STP was created to disable the duplicate link, and the switches learn where the fastest path is.

Here is the switch I was thinking about, $ 599:

https://mikrotik.com/product/crs326_24s_2q_rm

-

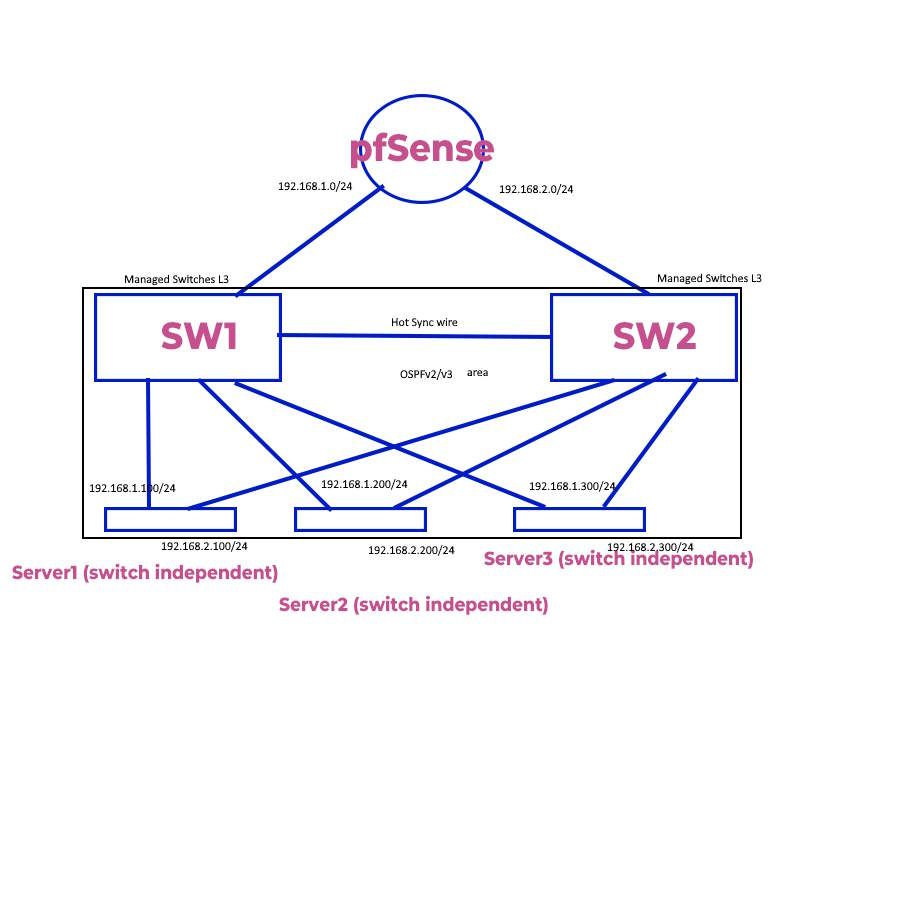

@fireix A spine and leaf design doesn't use a Layer 2 topology. It's all Layer 3 utilizing ECMP. The point is not to use any Layer 2 technology such as STP. If you are trying to go in that direction, then MikroTik isn't on the roadmap. You should really speak to a VAR, as they can recommend the right hardware for your needs. I think that will be out of scope for what you are trying to accomplish here anyway.

If you need a low-cost 10G switch, then anything from MikroTik, Ubiquiti or Netgear will do.

-

- How are the servers connected?
  100 MBit/s, 1 GBit/s or 10 GBit/s
- What is the deeper goal you want to reach with that setup?
  Only redundancy, failsafe for reaching the servers, test or lab?
- Are there LAGs in the setup?
  Either LACP or static ones
- Is stacking a must?
  Over stacking modules or SFP modules
- Is the connection between the switches a sync line or a stack?
  Only for my interest

- Unmanaged switches
  No LAG, no stack and no STP

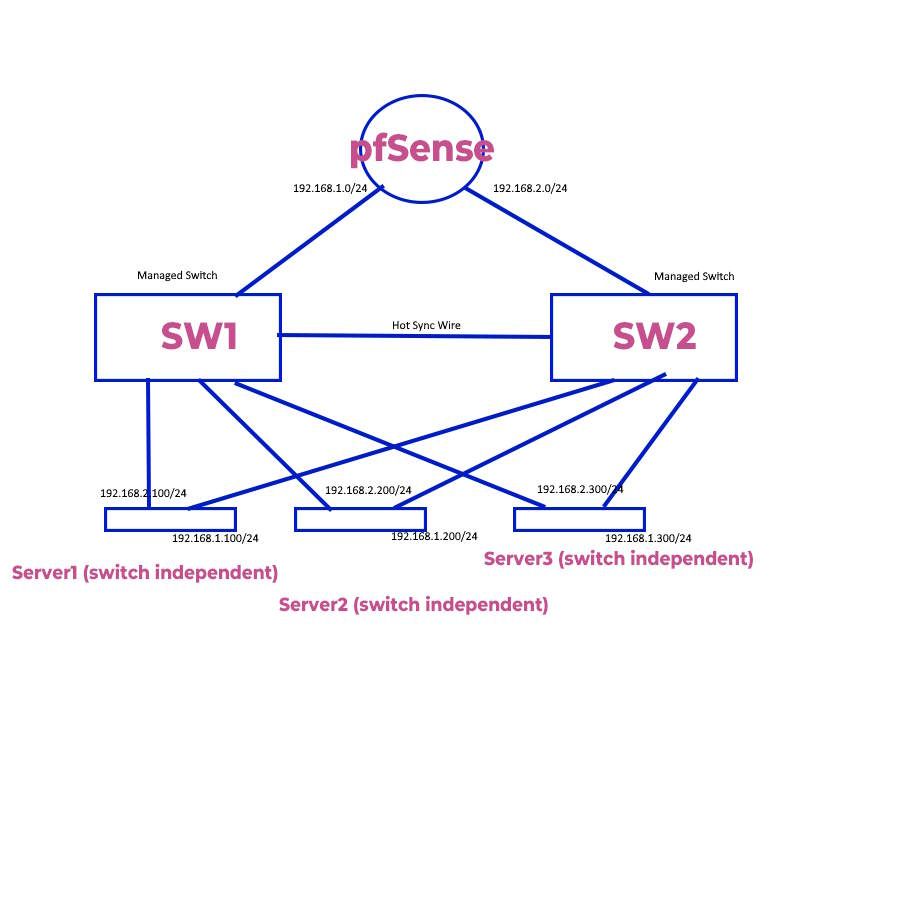

- Managed L2+ switches
  Layer 2+ (static routing)
- Managed L3 switches
  Layer 3 switches
- Fully managed L3 switches
  Layer 3 switches with more routing protocols

Spine and leaf switches are fully managed L3 switches and outside your stated budget of 500 € - 600 €.

- Netgear XS708E, ~250 € each on eBay. link
- Netgear XS708T, ~375 € each on eBay. link

A benefit of both may be the Netgear NMS300 software, which is free of charge up to a certain number of switches and makes the entire administration of the switches a bit easier.

-

@michmoor I do see that Mikrotik has something called MLAG (https://stubarea51.net/2021/06/04/mikrotik-routerosv7-first-look-mlag-on-crs-3xx-switches/).

I already have D-Link stackable switches stacked and it is great. The issue at hand is that there are only 4 SFP+ 10 Gbps ports in each (and 48 1 Gbps ports). And the jump from the price point of these, at maybe 700-800 each, to maybe 5-50K is too big. It isn't even easy to find :)

Since my network is relatively small and most of the blades/servers have SFP+ cards, I would love to use pure SFP+ switches with 20 SFP+ ports. Not easy to find, but MikroTik has them. The question is whether I will have a nightmare with broadcast issues or whether it will just be fine.

-

@dobby_ The switches you link to wouldn't help me with 10 Gbps SFP+, which is my main goal for the entire network.

I already have stacked, managed D-Link switches with 4x SFP+ 10 Gbps and 48 1 Gbps ports. And they do the job; it is normally not really huge traffic. But the goal is to replace the 1 Gbps ports with something with maybe 15-20 SFP+ 10 Gbps ports instead, to speed up backups and just have everything running on one single type of cable.

This is a single rack in a data center where I run important servers, I can't have any downtime at all.

I have a flat network with no VLANs and no segmentation, and I don't need any routing in my network. pfSense handles everything I need. The goal is not throughput (that's why I don't need an LACP LAG between each server and switch), but redundancy, or at least failover that kicks in automatically within a minute. And before you say it: yes, it is bad practice not to segment the network, but I do have a valid reason.

With STP protection instead of LACP/LAG on everything, I don't need to set up dedicated ports on the switch like I do now for a lot of equipment (I set up an LACP team on the switch stack and then the same on each server, one by one).

All the gear has STP protection, but the LAG support is pretty bad on some of it (by bad, I mean complicated, and it often ends up with errors because they handle it differently than most modern equipment does). Also, if I replace some gear and plug it into the wrong port on the switch, it is bad. So I wonder if I would get less administration with pure STP instead of the port config I deal with today.

The goal is less admin, more unity (only two switches) and redundancy; higher bandwidth (1 Gbps -> 10 Gbps) is also a plus. Redundancy and ease of administration are more important than getting, for instance, the 2x20 Gbps that I could get in an LACP team.

-

@fireix To be clear, 10G SFP+ switches are popular, and extremely easy to find. What you are trying to find is something in your budget which might be difficult to do. As I stated already, if you want a 48 port 10/25/40/100G capable Layer 2 switch that can operate as a Leaf in a spine/leaf topology, run MC-LAG, or other advanced features then you need to pay.

You already stated that it's a few blades. And as I said at the start of the thread, I'm not sure, and you haven't stated, why you even need so many switches. Maybe there is more to the topology than what you have given. In either case, to be honest, you should probably look at hiring a consultant or speaking to a VAR to find out what's best for your environment. It doesn't seem you have a grasp of the fundamentals of building a network here. Please don't take this personally, but you should understand the requirements (budget, bandwidth needs, security, etc.).

-

I know my budget exactly (2x600 USD), the bandwidth needs (1 Gbps per port is more than enough for most, but since most of my stuff has a 10 Gbps SFP+ NIC by now, it makes sense to base it on that) and the security needs (no changes needed) :)

I have run servers in 3 data centers for 20 years with a redundant setup and almost no downtime on my part, so I think I can do a few more :) The thing is that once things are running, such a simple environment requires very little maintenance. So I tend to forget a lot of the basics. I have taught myself enough to set it up and have done it one way, but the way I'm now thinking about (making the network more switch-port independent) makes sense to me.

Even Aruba Networks writes in their blog that stacking isn't necessarily the most robust way of doing things (https://blogs.arubanetworks.com/solutions/stacking-network-switches-why-and-why-not/). For instance, upgrading a single switch can be impossible without downtime, and re-joining can be a kind of auto-magic that is not very transparent.

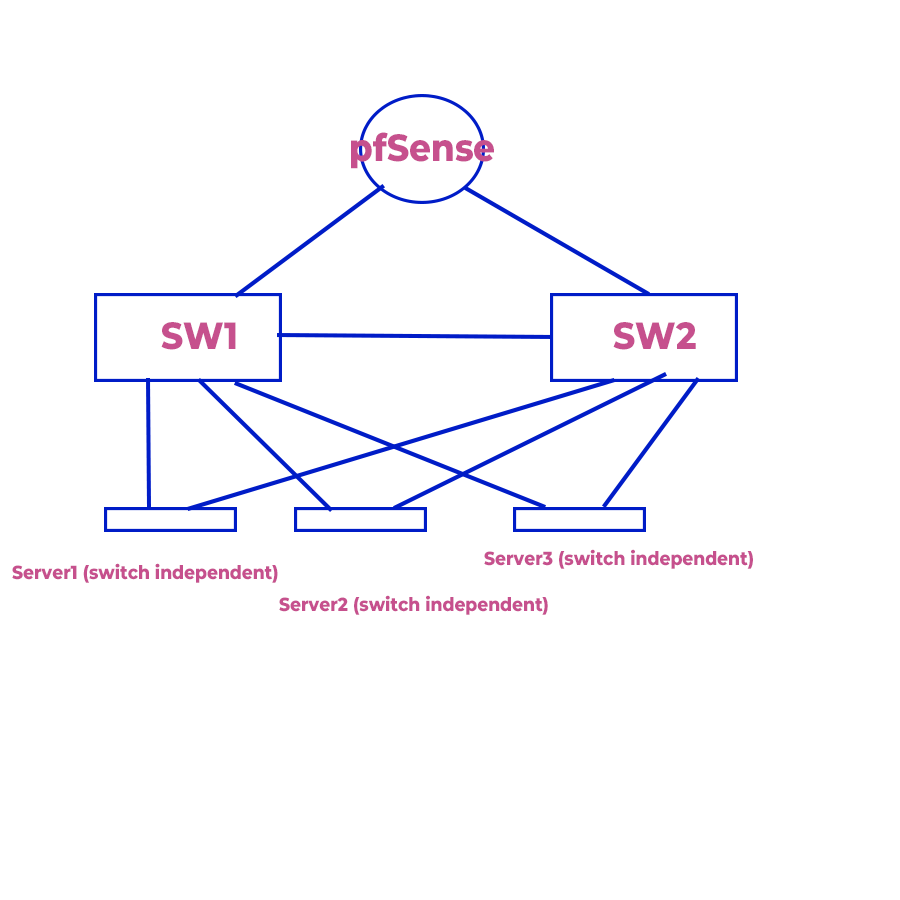

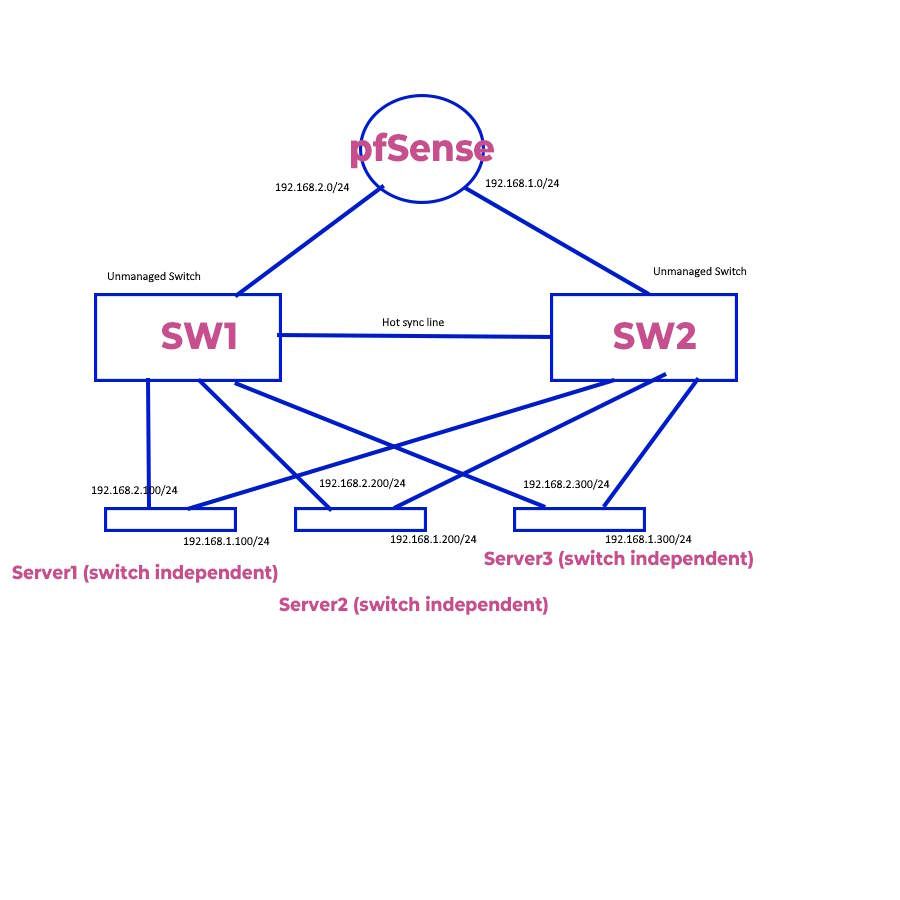

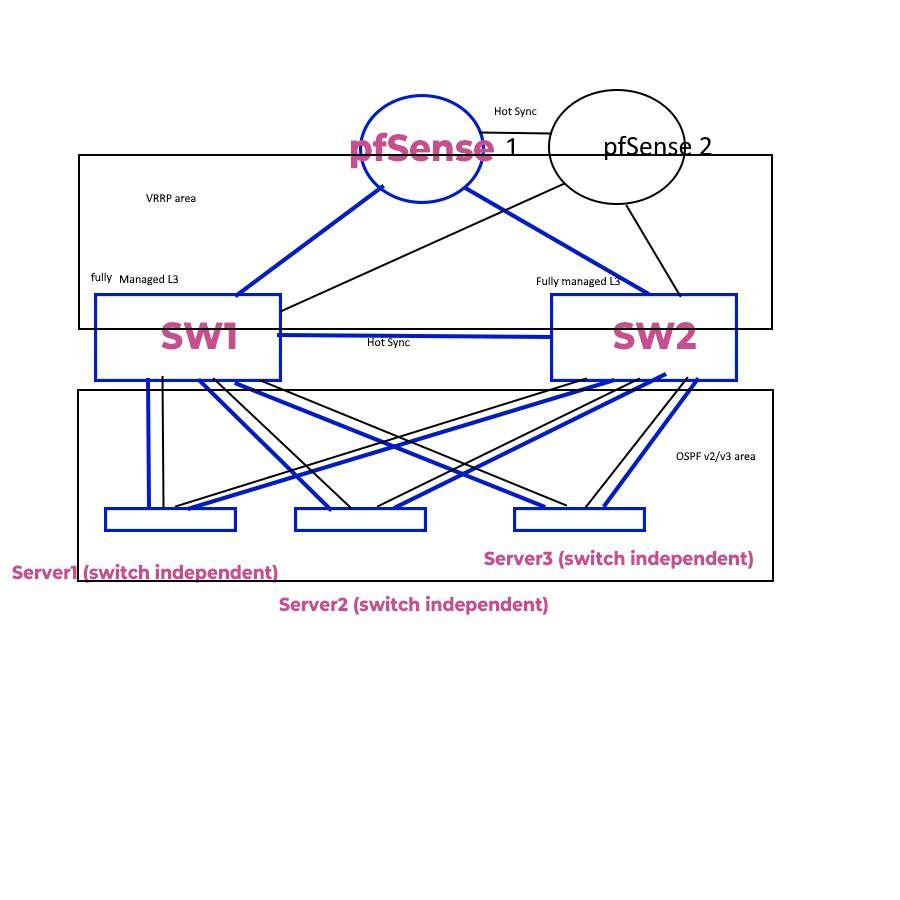

Do you feel that two switches in a network really are a lot? The only reason for two switches is that if one switch is damaged or drops out, I don't want to jump into my car and drive for 20 minutes. Let's say I will connect 15-20 servers (all with 10 Gbps SFP+ NICs) to each switch. I have experienced one switch breaking in my 20 years, so it doesn't happen often, but I still can't risk it. Just like in my drawing.

Note that running servers like this in a data center is totally different from hostile networks with guest users, DHCP servers, access points, printers, random PCs/servers and segmentation on IP and VLANs. So it is a single /24 across the entire broadcast domain and none of the hosts trust each other. That is what I mean by simple. Two switches, one pfSense (with one as backup), one /24. I think you misunderstand what I'm asking for. In a normal office network, I would have some stuff on VLANs, different networks/subnets for the different departments and real IP segmentation. This is not what I need. This is a totally everybody-can-see-everybody network.

The question is: why wouldn't a network with two switches and one pfSense work with spanning tree protocol enabled? All my research says that it will work. Stacking, the recommended method, is the setup I have today, and it provides single management, but I do see possible administration benefits in moving away from this model. I don't know why Layer 2 and Layer 3 are being brought into this. My current switches are L2+L3, but I didn't need any modifications other than setting up LACP teams on both sides. With my easier setup, I would break up the LACP setup on both sides. Since I don't have multiple networks, I shouldn't need all the features of an expensive switch? Everybody in my network can talk to everybody, and that is how I want it to be.
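To make the spanning-tree question concrete, here is a minimal Python sketch of the idea only, not of the real protocol: the bridge with the lowest priority becomes root, a loop-free tree is built from it, and every link that is not on the tree is blocked. It assumes equal-cost links and ignores port roles, timers and path costs. The names `sw1`, `sw2` and `pfsense-bridge` and their priorities are hypothetical, and the triangle only exists if pfSense itself bridges its two uplinks.

```python
from collections import deque

def spanning_tree(priority, links):
    """Return (root, forwarding_links, blocked_links) for equal-cost links.

    priority: dict of bridge name -> bridge priority (lower wins, hypothetical values)
    links:    list of (bridge_a, bridge_b) pairs, parallel links allowed
    """
    # Root election: lowest priority wins, the name breaks ties.
    root = min(priority, key=lambda name: (priority[name], name))

    neighbors = {name: [] for name in priority}
    for a, b in links:
        neighbors[a].append(b)
        neighbors[b].append(a)

    # Breadth-first search from the root gives each bridge one parent,
    # which is its single loop-free path toward the root.
    parent = {root: None}
    queue = deque([root])
    while queue:
        current = queue.popleft()
        for nxt in sorted(neighbors[current]):
            if nxt not in parent:
                parent[nxt] = current
                queue.append(nxt)

    forwarding, blocked, used = [], [], set()
    for a, b in links:
        pair = frozenset((a, b))
        on_tree = parent.get(a) == b or parent.get(b) == a
        if on_tree and pair not in used:
            used.add(pair)          # only one of several parallel links forwards
            forwarding.append((a, b))
        else:
            blocked.append((a, b))  # redundant link, kept as standby
    return root, forwarding, blocked

# Hypothetical triangle: two switches plus pfSense bridging its two uplinks.
priority = {"sw1": 32768, "sw2": 32768, "pfsense-bridge": 4096}
links = [("sw1", "sw2"), ("pfsense-bridge", "sw1"), ("pfsense-bridge", "sw2")]
root, forwarding, blocked = spanning_tree(priority, links)

# Same topology after the pfSense-to-sw2 link fails.
links_after_failure = [("sw1", "sw2"), ("pfsense-bridge", "sw1")]
root2, forwarding2, blocked2 = spanning_tree(priority, links_after_failure)
```

In this toy topology the `sw1`-`sw2` link is blocked while everything is healthy and starts forwarding once one uplink is gone, which is the behaviour being asked about. How fast a real switch reconverges depends on the STP variant and its timers, which the sketch does not model.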

-

@fireix Since your switches are not stacked, my guess would be that you set up a lagg but use the failover mode, so only the active port would really be used. But if that port failed or went down, then the other port in the lagg would be used. In this mode you don't need to set up any LACP on the switch side of the connection.

I would for sure test this, and it might not account for all kinds of failure modes. But it should cover, say, one of the switches going dark.

-

@johnpoz said in Best simple network:

> @fireix Since your switches are not stacked, my guess would be that you set up a lagg but use the failover mode, so only the active port would really be used. But if that port failed or went down, then the other port in the lagg would be used. In this mode you don't need to set up any LACP on the switch side of the connection.
>
> I would for sure test this, and it might not account for all kinds of failure modes. But it should cover, say, one of the switches going dark.

I assume you mean setting up the ports on each switch and on the device almost like LACP (just changing the LAG mode to active-backup). That kind of defeats the purpose I was hoping for :) My goal would be to not have to configure the switch ports at all.

I'm thinking of the feature called switch independent LAG in Windows (there is something similar in Linux). It requires no setup or port configuration at all on the switch. It only uses one of the ports at a time, and if one loses its connection, it activates the other one. The ports can go to two totally different switches and, based on what I have read, it does not require any pre-configuration of the switch itself.

I'm aware that I would "miss" the nice feature of having 2 Gbps or 20 Gbps when both are active in an LACP team, but it would be easier to manage and cable, and to be honest I wouldn't care whether the speed is doubled.

When I have been lazy and couldn't figure out how to set up an LACP team due to old hardware, I have actually run with this mode and haven't experienced any issues so far. When I have, for instance, deactivated the port in the switch, the other one has started sending traffic and it works. But I'm not sure if this is luck, since the rest of my network is LACP, or if it is how it is actually supposed to work. I assume Windows (in this case) simply pings the gateway I have set up on the team connection, and if the ping stops, it sends over the other one. Should be very easy to code. This will also ensure that two NICs on the same server are never active at the same time, so it wouldn't even trigger any shutdown of links by STP (I assume).

".. the NIC Team works independently of the switch; no additional configuration of network hardware is needed. If this mode is on, you can connect different network adapters to different switches to improve fault tolerance (protection against switch failure)"

https://4sysops.com/archives/nic-teaming-in-windows-server-2022/
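As a rough illustration of the active-backup behaviour described above, here is a minimal Python sketch. It is not how Windows or Linux actually implement teaming; the class name, the NIC names and the `is_healthy` callback are all hypothetical. The health check is deliberately pluggable, because whether a real team watches link state, pings a gateway or does something else is exactly the part I am only assuming.

```python
class ActiveBackupTeam:
    """Toy model of a switch-independent team: one NIC carries traffic at a time."""

    def __init__(self, nics, is_healthy):
        # nics: ordered list of NIC names, the first one starts as active
        # is_healthy: callable(nic_name) -> bool, hypothetical health probe
        self.nics = list(nics)
        self.is_healthy = is_healthy
        self.active = self.nics[0]

    def poll(self):
        """Check the active NIC and fail over to the first healthy standby."""
        if self.is_healthy(self.active):
            return self.active
        for nic in self.nics:
            if nic != self.active and self.is_healthy(nic):
                self.active = nic
                break
        # If nothing is healthy, the last active NIC is kept unchanged.
        return self.active


# Hypothetical link states, one NIC cabled to each switch.
link_up = {"nic-to-sw1": True, "nic-to-sw2": True}
team = ActiveBackupTeam(["nic-to-sw1", "nic-to-sw2"], lambda nic: link_up[nic])

first = team.poll()                # both links up, the first NIC stays active
link_up["nic-to-sw1"] = False      # sw1 goes dark
after_failure = team.poll()        # traffic moves to the NIC on sw2
link_up["nic-to-sw1"] = True       # sw1 comes back
after_recovery = team.poll()       # this sketch does not fail back automatically
```

Because only one NIC is ever marked active, a server modelled this way never forwards between its two ports, which matches the assumption that the switches would not see a loop through the server.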

-

@fireix said in Best simple network:

> each switch and the device almost like LACP

No, that would be the lagg setup on pfSense, which I would put in failover mode.

https://docs.netgate.com/pfsense/en/latest/interfaces/lagg.html

How else would pfSense see traffic on multiple interfaces, or handle the possibility of traffic on multiple interfaces that are one interface to pfSense? You could set up a bridge on pfSense, I guess, and just hope the switch shuts down one of the interfaces.

-

@johnpoz Ah, you were talking about the pfSense setup. Yes, there I agree, they must be in a bridge (I have used that setup before) or a LAG (which I use now against the stacked D-Link switches).

But on the other end, between the two unconfigured switches (maybe with the exception of the LAG setup towards pfSense) and the servers: Windows will ensure only one NIC is enabled at any time, so there should never be a situation where one server sends from both its NICs at the same time.

-

Even UniFi might be a good option, it seems.

Just a tiny bit above budget, but at least easy management.

https://eu.store.ui.com/collections/unifi-network-routing-switching/products/unifi-switch-aggregation-pro

-

- 2 x Netgear XS708E
  For 500 € used over eBay
- 3 x HPE 561T V2 Dual Port 10G RJ45 (X540)
  For 120 € each, refurbished from a server store
- 10 x CAT6A FTP/S (PIMF) 1200MHz patch cables
  To ensure the full 10 GBit/s are available

All in all 860 € plus fresh cables. No problems with optics.

If you only flash the newest firmware on the Netgear switches and don't configure them, they will act as dumb switches, so you don't have to configure anything!

Server port 1 - 192.168.2.0/24

Server port 2 - 192.168.1.0/24

No STP will be needed and all is done in pure routing.
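The two-subnet idea can be sanity-checked with Python's standard `ipaddress` module. This is only a small sketch: the two networks come from the list above, while the host addresses used in the example calls are hypothetical.

```python
import ipaddress

# Subnets from the proposal above: one per server port, one per switch.
port1_net = ipaddress.ip_network("192.168.2.0/24")
port2_net = ipaddress.ip_network("192.168.1.0/24")

# The two subnets must not overlap, otherwise routing between them is ambiguous.
nets_overlap = port1_net.overlaps(port2_net)

def subnet_for(address):
    """Return which server port's subnet an address belongs to, or None."""
    ip = ipaddress.ip_address(address)
    for name, net in (("port 1", port1_net), ("port 2", port2_net)):
        if ip in net:
            return name
    return None

# Hypothetical host addresses, for illustration only.
where_a = subnet_for("192.168.2.10")
where_b = subnet_for("192.168.1.10")
where_c = subnet_for("10.0.0.1")
```

Since each server port lives in its own subnet on its own switch, there is no Layer 2 loop to block, which is why no STP is needed in this variant.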

-

@dobby_ I have already invested in and installed SFP+ fiber cards, and for the blade servers I can't swap out a card. Also, 8 ports is about 8 ports too few :) I would also prefer modern equipment, which is an important reason too. My current D-Link stackable is just a bit newer than these, and its firmware updates ended a long time ago.

-

@Dobby_ Thought I'd be the only one who would ever use a number like 300 in an IP address.