TNSR high rx miss

-

Hi,

I need some help. I am testing TNSR with Cisco's TRex. For now I only want to measure the L3 forwarding capabilities, but I see a very high rx miss count that I can't explain. Does anyone have any idea?

```
tnsr-cmd# show int LAN-0
Interface: LAN-0
    Description: TREX-PORT-0
    Admin status: up
    Link up, link-speed 100 Gbps, full duplex
    Link MTU: 1500 bytes
    MAC address: 10:70:fd:05:d8:52
    IPv4 MTU: 0 bytes
    IPv4 Route Table: ipv4-VRF:0
    IPv4 addresses:
        172.22.26.1/24
    IPv6 MTU: 0 bytes
    IPv6 Route Table: ipv6-VRF:0
    IPv6 addresses:
        fe80::1270:fdff:fe05:d852/64
    VLAN tag rewrite: disable
    Rx-queues:
        queue-id 0 : cpu-id 8 : rx-mode polling
    counters:
      received: 167421864888 bytes, 110590448 packets, 0 errors
      transmitted: 6217498 bytes, 100282 packets, 0 errors
      protocols: 110590446 IPv4, 0 IPv6
      1 drops, 0 punts, 1370830682 rx miss, 0 rx no buffer

tnsr-cmd# show int LAN-1
Interface: LAN-1
    Description: TREX-PORT-1
    Admin status: up
    Link up, link-speed 100 Gbps, full duplex
    Link MTU: 1500 bytes
    MAC address: 10:70:fd:30:e8:40
    IPv4 MTU: 0 bytes
    IPv4 Route Table: ipv4-VRF:0
    IPv4 addresses:
        172.22.27.1/24
    IPv6 MTU: 0 bytes
    IPv6 Route Table: ipv6-VRF:0
    IPv6 addresses:
        fe80::1270:fdff:fe30:e840/64
    VLAN tag rewrite: disable
    Rx-queues:
        queue-id 0 : cpu-id 9 : rx-mode polling
    counters:
      received: 6217488 bytes, 100282 packets, 0 errors
      transmitted: 167421577308 bytes, 110590259 packets, 0 errors
      protocols: 100279 IPv4, 1 IPv6
      2 drops, 0 punts, 0 rx miss, 0 rx no buffer

nsr01 tnsr(config)# show configuration candidate
cli configuration history enable
nacm disable
nacm read-default permit
nacm write-default deny
nacm exec-default permit
nacm disable
sysctl vm nr_hugepages 3072
dataplane cpu main-core 5
dataplane cpu skip-cores 5
dataplane cpu corelist-workers 8
dataplane cpu corelist-workers 9
dataplane cpu corelist-workers 10
dataplane cpu corelist-workers 11
dataplane cpu corelist-workers 12
dataplane cpu corelist-workers 13
dataplane cpu corelist-workers 14
dataplane cpu corelist-workers 15
dataplane ethernet default-mtu 1500
dataplane dpdk dev 0000:81:00.0 network name LAN-0
dataplane dpdk dev 0000:c1:00.0 network name LAN-1
dataplane memory main-heap-size 6G
dataplane buffers buffers-per-numa 32768
dataplane buffers default-data-size 2048
dataplane statseg heap-size 256M
nat global-options nat44 max-translations-per-thread 128000
nat global-options nat44 enabled false
route table ipv4-VRF:0
    id 0
exit
interface tap cap-0
    host namespace dataplane
    instance 10
exit
interface tap cap-1
    host namespace dataplane
    instance 11
exit
interface LAN-0
    description TREX-PORT-0
    enable
    rx-mode interrupt
    ip address 172.22.26.1/24
exit
interface LAN-1
    description TREX-PORT-1
    enable
    ip address 172.22.27.1/24
exit
interface tap10
    enable
exit
interface tap11
    enable
exit
nat ipfix logging domain 1
nat ipfix logging src-port 4739
nat nat64 map parameters
    security-check enable
exit
neighbor LAN-0 172.22.26.10 10:70:fd:06:0f:07
neighbor LAN-1 172.22.27.10 10:70:fd:06:26:7f
span LAN-0
    onto tap10 hw both
exit
span LAN-1
    onto tap11 hw both
exit
interface LAN-0
exit
interface LAN-1
exit
interface tap10
exit
interface tap11
exit
route table ipv4-VRF:0
    id 0
    route 16.0.0.0/24
        next-hop 0 via 172.22.26.10 LAN-0
    exit
    route 48.0.0.0/16
        next-hop 0 via 172.22.27.10 LAN-1
    exit
exit
route dynamic manager
exit
route dynamic bgp
    disable
exit
route dynamic ospf6
exit
route dynamic ospf
exit
route dynamic rip
exit
dhcp4 server
    lease persist true
    lease lfc-interval 3600
    interface socket raw
exit
unbound server
    do-ip4
    do-tcp
    do-udp
    harden glue
    hide identity
    port outgoing range 4096
exit
snmp host disable
```

Thanks for your help.
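
To put the LAN-0 counters above in proportion, here is a small Python sketch that computes the share of offered packets that ended up as rx miss, plus the average received packet size. It assumes (my understanding of how VPP reports DPDK statistics, not something stated in the thread) that "rx miss" counts packets the NIC dropped because its rx ring was full, so received + rx miss approximates what TRex offered. The counters also accumulate over every run since they were last cleared, so this is an aggregate, not a per-run figure.

```python
# Share of offered packets missed at the NIC, using the LAN-0 counters.
# Assumption: "rx miss" = packets dropped by the NIC because the rx ring was
# full, so received + rx_miss approximates the packets offered by TRex.

LAN0_RX_BYTES = 167_421_864_888
LAN0_RX_PKTS = 110_590_448
LAN0_RX_MISS = 1_370_830_682


def miss_ratio(received_pkts: int, rx_miss: int) -> float:
    """Fraction of offered packets that were missed at the NIC."""
    offered = received_pkts + rx_miss
    return rx_miss / offered if offered else 0.0


def avg_packet_size(received_bytes: int, received_pkts: int) -> float:
    """Average size in bytes of the packets that were actually received."""
    return received_bytes / received_pkts


if __name__ == "__main__":
    print(f"miss ratio:      {miss_ratio(LAN0_RX_PKTS, LAN0_RX_MISS):.1%}")
    print(f"avg packet size: {avg_packet_size(LAN0_RX_BYTES, LAN0_RX_PKTS):.0f} bytes")
```

With the posted values this comes out at roughly 92.5% missed and about 1514 bytes per received packet, which is consistent with the 1518-byte frames (FCS included) that the imix_1518 profile name suggests.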

-

@erikdy

Does it happen all the time, or only when a certain level of traffic is tested? If the latter, what level is that? What are you testing in TRex? How?

What is the hardware of the DUT?

I would:

turn off the span captures

turn off interrupt mode

test again

I'm also unsure what you're doing with all those workers. You probably need to pay strict attention to which socket is talking to which NIC on which worker thread(s), etc.

https://docs.netgate.com/tnsr/en/latest/advanced/dataplane-cpu.html

-

Hi @derelict, thanks for your reply. I have answered your questions separately.

Does it happen all the time, or only when a certain level of traffic is tested? If the latter, what level is that?

If I use the basic TRex profiles with a low multiplier, it does not happen.

One of my setups produced 100 Gbit/s at 8 Mpps. Even with the simple profiles and only 10 Gbit/s of traffic I get decent throughput.

What are you testing in TRex?

TRex is a traffic generator that can generate more than 100 Gbit/s of traffic.

Ultimately, I want to find out how much traffic a site-to-site (S2S) VPN can handle over 100 Gbit interfaces. To begin with, I want to test the general routing capabilities.

How?

TRex replays traffic from pcap files, and various streams can be generated for this purpose.

Cisco TRex v3.02 in STF (stateful) mode.

c = 16, m = 1000, d = 10, profile = 'cap2/imix_1518.yaml'

What is the hardware of the DUT?

I have five of these:

Supermicro H12DSU-iN

2x AMD EPYC ROME 7F32 Processor, 8-Core 8C/16T

128GB (16x 8GB) DDR4-3200MHz ECC

2x 480GB Samsung SSD PM883 480GB

2x MCX516A-CDAT - ConnectX-5 Ex EN network interface card, 100GbE dual-port QSFP28, PCIe4.0 x16

QSFP58 DA cable, 100 Gbit/s

At the moment I use one TNSR box and one TRex box, connected with two QSFP56 DA cables.

I would:

turn off the span captures

turn off interrupt mode

test again

Interrupt mode was switched off; I copied an old version of the configuration by mistake. I tested with and without the span interfaces. But do you think these have such a big effect?

The platform I am using is a bit special: on all five machines, both 100GbE cards are attached to NUMA node 1. That is the reason for this custom thread configuration.

Before TRex, I used four iperf3 client/server pairs. With plain L3 forwarding I got 91 out of 91 Gbit/s; with NAT I lost about 15 to 16 Gbit/s.
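
Since both NICs are said to sit on NUMA node 1, the Python sketch below is one way to confirm that every configured worker core is actually local to each NIC. It reads standard Linux sysfs files; the PCI addresses and the worker list 8 to 15 come from the configuration posted above. Whether cores 8 to 15 belong to node 1 on this board is exactly what it checks, not something it assumes.

```python
# Check whether the TNSR worker cores are local to the NICs' NUMA node.
# Reads standard Linux sysfs files; PCI addresses and worker cores are taken
# from the posted TNSR configuration.
from pathlib import Path
from typing import List, Optional, Set

NIC_PCI_ADDRS = ["0000:81:00.0", "0000:c1:00.0"]  # LAN-0, LAN-1
WORKER_CORES = list(range(8, 16))  # dataplane cpu corelist-workers 8 .. 15


def parse_cpulist(text: str) -> Set[int]:
    """Parse a kernel cpulist string such as '8-15,24-31' into CPU ids."""
    cpus: Set[int] = set()
    for part in text.strip().split(","):
        if not part:
            continue
        if "-" in part:
            lo, hi = part.split("-")
            cpus.update(range(int(lo), int(hi) + 1))
        else:
            cpus.add(int(part))
    return cpus


def read_sysfs(path: str) -> Optional[str]:
    """Return the file contents, or None if it does not exist on this host."""
    p = Path(path)
    return p.read_text() if p.exists() else None


def remote_workers(workers: List[int], local_cpus: Set[int]) -> List[int]:
    """Workers that are NOT on the NIC's local NUMA node."""
    return [cpu for cpu in workers if cpu not in local_cpus]


if __name__ == "__main__":
    for addr in NIC_PCI_ADDRS:
        node_text = read_sysfs(f"/sys/bus/pci/devices/{addr}/numa_node")
        if node_text is None:
            print(f"{addr}: device not found")
            continue
        node = int(node_text)
        if node < 0:
            print(f"{addr}: kernel reports no NUMA affinity")
            continue
        cpulist = read_sysfs(f"/sys/devices/system/node/node{node}/cpulist")
        if cpulist is None:
            print(f"{addr}: node{node} cpulist not found")
            continue
        remote = remote_workers(WORKER_CORES, parse_cpulist(cpulist))
        print(f"{addr}: NUMA node {node}, remote workers: {remote or 'none'}")
```

Run it on the DUT itself. A numa_node value of -1 means the kernel has no affinity information for that device.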

-

@erikdy said in TNSR high rx miss:

Hi @derelict, thanks for your reply. I have answered your questions separately.

Does it happen all the time, or only when a certain level of traffic is tested? If the latter, what level is that?

If I use the basic TRex profiles with a low multiplier, it does not happen.

One of my setups produced 100 Gbit/s at 8 Mpps. Even with the simple profiles and only 10 Gbit/s of traffic I get decent throughput.

OK, but at what traffic level do you start seeing the misses?

What are you testing in TRex?

TRex is a traffic generator that can generate more than 100 Gbit/s of traffic.

Ultimately, I want to find out how much traffic a site-to-site (S2S) VPN can handle over 100 Gbit interfaces. To begin with, I want to test the general routing capabilities.

How?

TRex replays traffic from pcap files, and various streams can be generated for this purpose.

Cisco TRex v3.02 in STF (stateful) mode.

c = 16, m = 1000, d = 10, profile = 'cap2/imix_1518.yaml'

OK, so the answer to my question is imix.
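
For readers unfamiliar with TRex, the STF parameters above can be read as t-rex-64 command-line options. The Python sketch below only builds and prints that command. The mapping of c, m and d onto -c (threads per port pair), -m (rate multiplier for the profile) and -d (duration in seconds) is my reading of the TRex stateful-mode options, not something stated in the thread, and the ./t-rex-64 path assumes it is run from the TRex install directory.

```python
# Build the t-rex-64 stateful (STF) command line matching the posted parameters.
# Assumed mapping: c -> -c, m -> -m, d -> -d, profile -> -f.
from __future__ import annotations

import shlex


def trex_stf_argv(profile: str, cores: int, multiplier: float, duration_s: int) -> list[str]:
    return [
        "./t-rex-64",
        "-f", profile,           # traffic profile (YAML)
        "-c", str(cores),        # threads per dual-port pair
        "-m", str(multiplier),   # multiplies the profile's base rate
        "-d", str(duration_s),   # test duration in seconds
    ]


if __name__ == "__main__":
    argv = trex_stf_argv("cap2/imix_1518.yaml", cores=16, multiplier=1000, duration_s=10)
    print(shlex.join(argv))
```

This prints `./t-rex-64 -f cap2/imix_1518.yaml -c 16 -m 1000 -d 10`.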

What is the hardware of the DUT?

I have five of these:

Supermicro H12DSU-iN

2x AMD EPYC ROME 7F32 Processor, 8-Core 8C/16T

128GB (16x 8GB) DDR4-3200MHz ECC

2x 480GB Samsung SSD PM883 480GB

2x MCX516A-CDAT - ConnectX-5 Ex EN network interface card, 100GbE dual-port QSFP28, PCIe4.0 x16

QSFP58 DA cable, 100 Gbit/s

I doubt we have done any in-house testing on AMD processors.

At the moment I use one TNSR box and one TRex box, connected with two QSFP56 DA cables.

I would:

turn off the span captures

turn off interrupt mode

test again

Interrupt mode was switched off; I copied an old version of the configuration by mistake. I tested with and without the span interfaces. But do you think these have such a big effect?

It is known that span captures impact performance. The packets have to be copied. This is not "free."

Interrupt mode is a fairly recent addition. I was just suggesting it be tested both ways.

-

OK, but at what traffic level do you start seeing the misses?

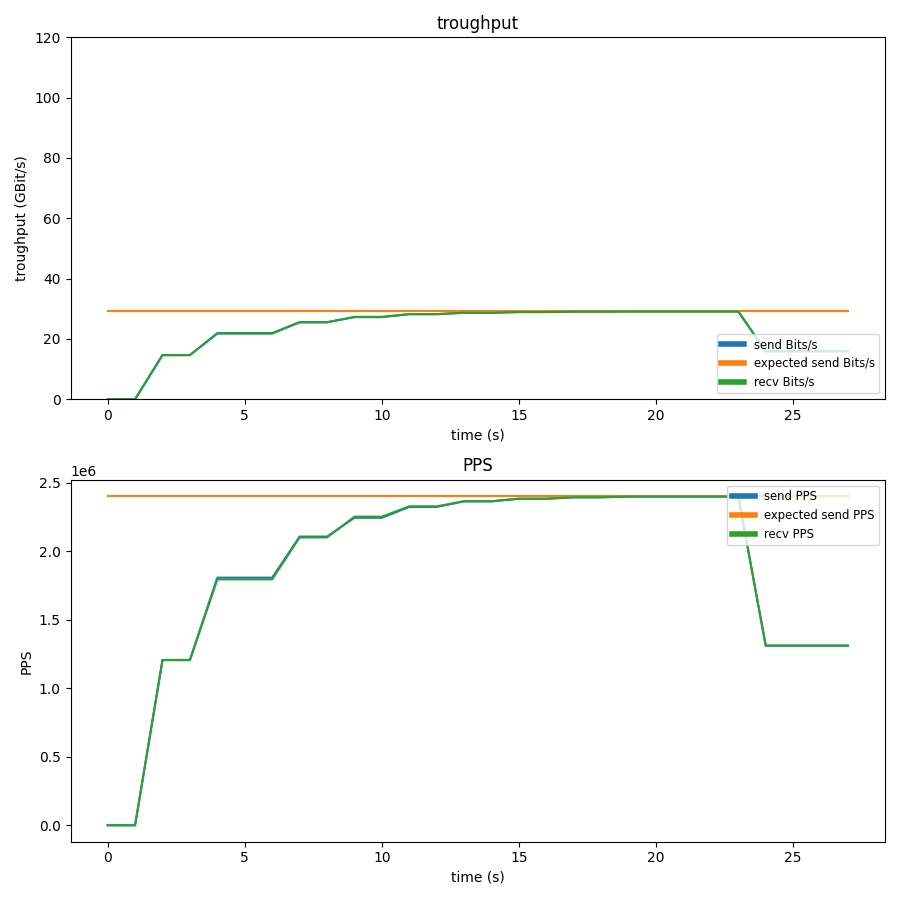

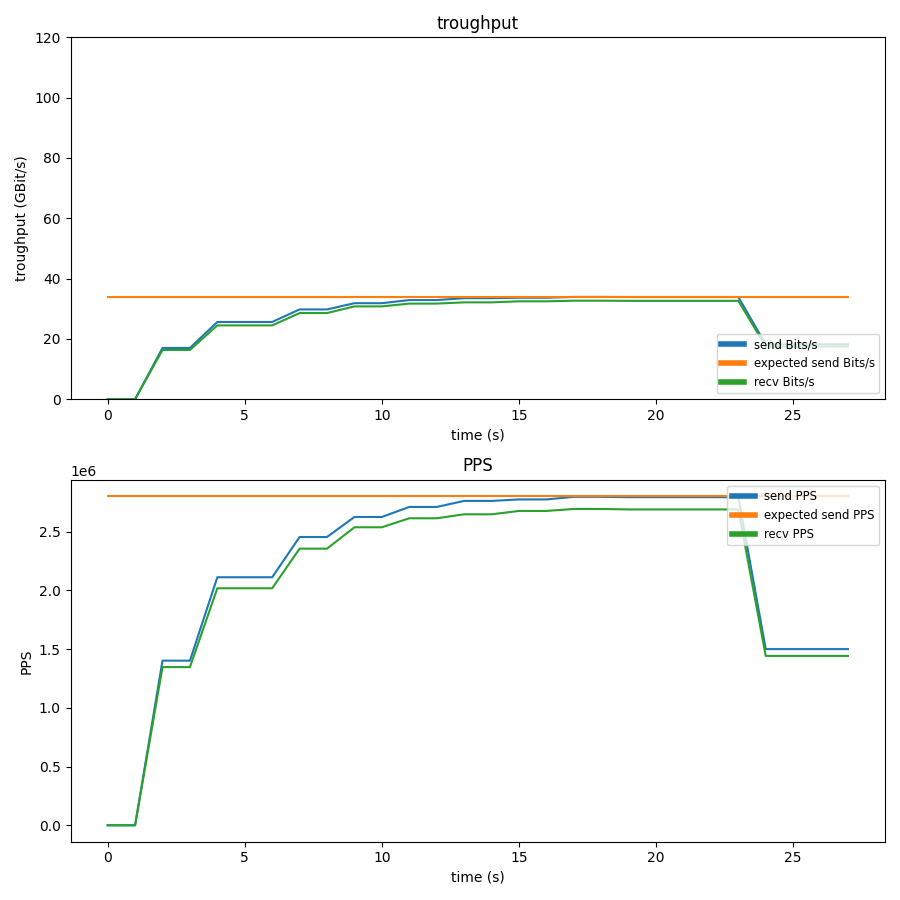

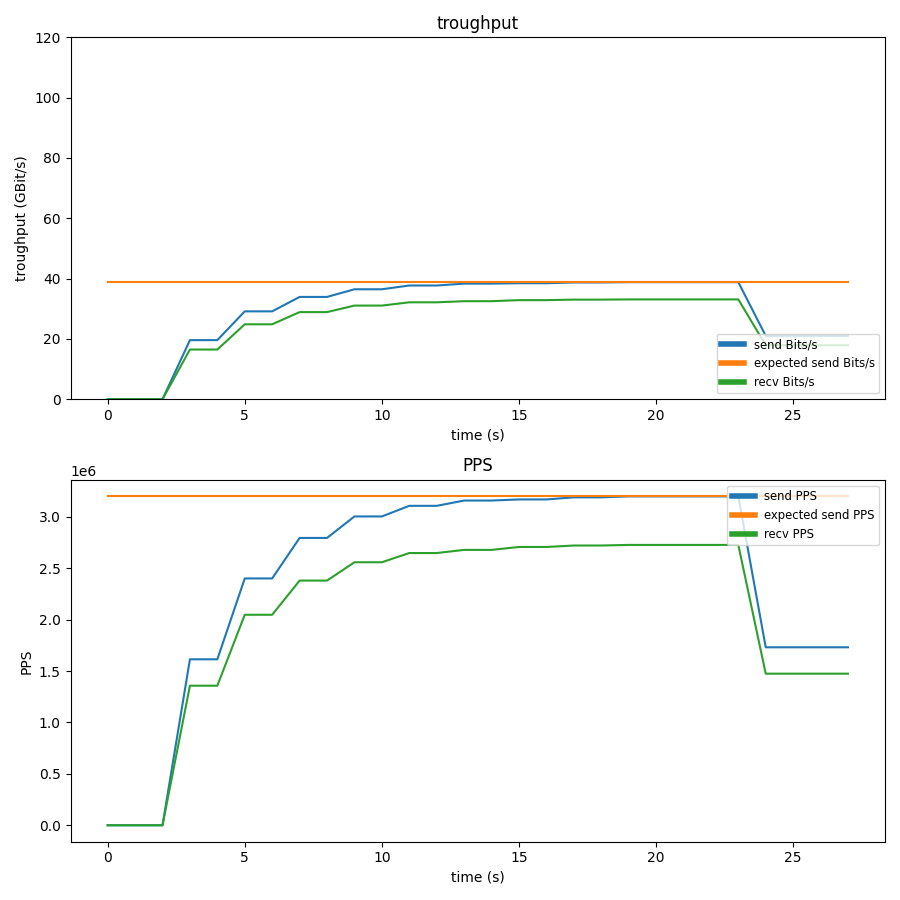

I made some runs. The problem begins above 30 Gbit/s.
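
To relate the 30 Gbit/s threshold to a packet rate, the Python sketch below computes line-rate packets per second for a given frame size. It assumes 1518-byte frames (FCS included, as the imix_1518 profile name suggests) plus the standard 20 bytes per frame of preamble, start-of-frame delimiter and minimum inter-frame gap.

```python
# Packets per second at a given link rate and Ethernet frame size.
# Assumption: frame_bytes includes the FCS; every frame also occupies
# 20 extra bytes on the wire (7-byte preamble + 1-byte SFD + 12-byte IFG).
WIRE_OVERHEAD_BYTES = 20


def line_rate_pps(link_bps: float, frame_bytes: int) -> float:
    bits_per_frame = (frame_bytes + WIRE_OVERHEAD_BYTES) * 8
    return link_bps / bits_per_frame


if __name__ == "__main__":
    for gbps in (30, 100):
        pps = line_rate_pps(gbps * 1e9, 1518)
        print(f"{gbps:>3} Gbit/s with 1518-byte frames: {pps / 1e6:.2f} Mpps")
```

That works out to approximately 2.44 Mpps at 30 Gbit/s and 8.13 Mpps at 100 Gbit/s; the latter is in line with the "100 Gbit/s at 8 Mpps" figure mentioned earlier in the thread.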

And you are right: performance is better without the span interfaces. I don't know what I did wrong.