Terrible SR-IOV Performance

-

I've seen some discussions around SR-IOV issues with pfSense in the past, but I wanted to revisit this again. Here are some details on the setup...

pfSense (in this case) is essentially running as a KVM workload, but all the instantiation of the virtual machine (i.e. the KVM XML config) is being orchestrated by KubeVirt/OpenShift. Yes, pfSense is running via Kubernetes in this case. Why? Because using this deployment strategy, I can leverage a GitOps deployment model for pfSense and run a Kubernetes job to configure pfSense at the time of boot. I tweeted some of my findings from testing HERE.

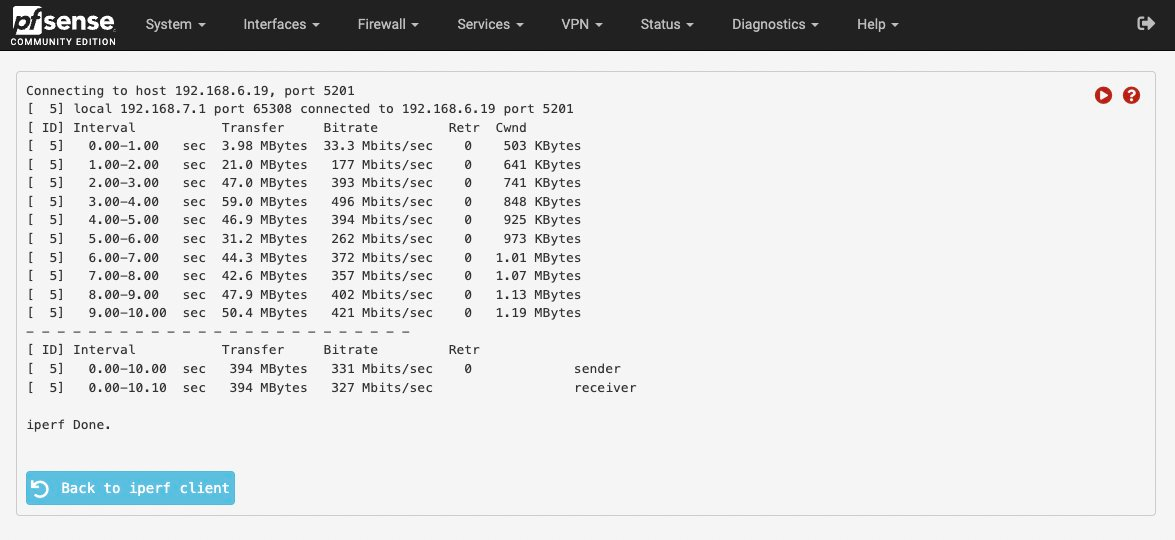

I found that pfSense running with SR-IOV-based interfaces has ridiculously poor performance. Considering that these interfaces are leveraging 25G XXV710-DA2 NICs...this is kind of terrible:

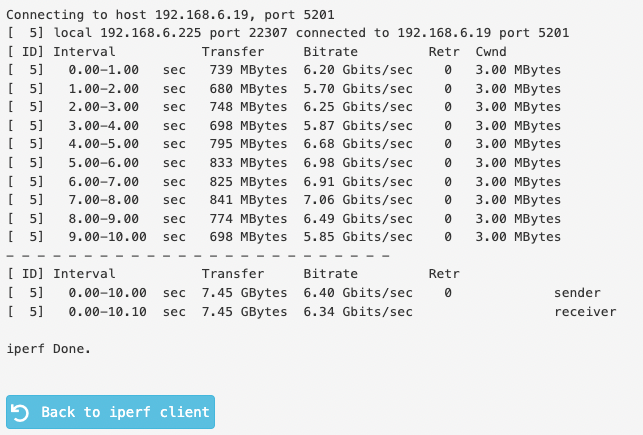

But if I remove SR-IOV in favor of a standard KVM-based Linux bridge, I start seeing much better performance. It's still not 25G, but it's more reasonable.

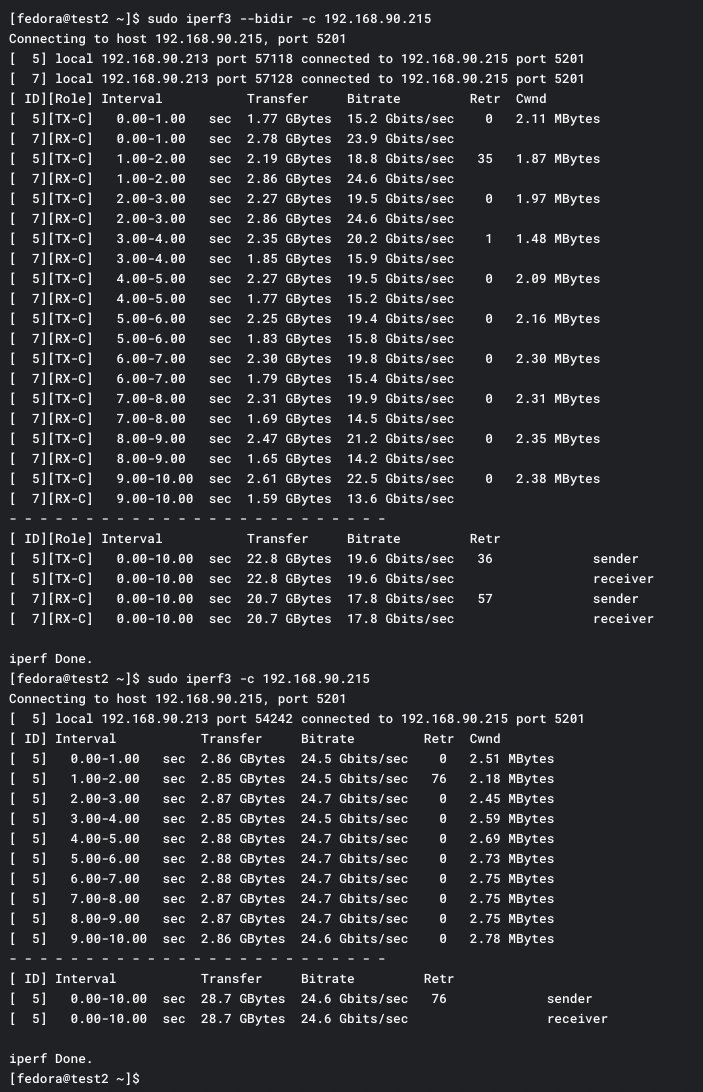

With 2 Fedora hosts using the exact same SR-IOV-based configuration (called a NetworkConfigurationPolicy + NetworkAttachmentDefinition in KubeVirt/OpenShift), I am able to saturate the 25G link running a bidirectional iperf3 test.
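For anyone who wants to compare runs the same way, here is a minimal sketch of how the iperf3 numbers could be turned into a share of the link rate. It assumes iperf3 was run as a TCP test with `-J` (JSON output) and that the report carries the usual `end.sum_sent` / `end.sum_received` totals. The function name `summarize_iperf3` and the sample report are hypothetical and only for illustration; the 7 Gbit/s figure is a placeholder, not a measured result.

```python
# Sketch only: summarize an iperf3 JSON report against a nominal link rate.
# Assumption: the report comes from a TCP run with `iperf3 -c <server> -J`.

LINK_RATE_BPS = 25e9  # nominal 25G link rate of the XXV710-DA2 ports


def summarize_iperf3(report: dict, link_rate_bps: float = LINK_RATE_BPS) -> dict:
    """Return throughput in Gbit/s and percent of link rate per direction total."""
    end = report["end"]
    summary = {}
    for key in ("sum_sent", "sum_received"):
        bps = end[key]["bits_per_second"]
        summary[key] = {
            "gbit_per_s": round(bps / 1e9, 2),
            "link_utilization_pct": round(100 * bps / link_rate_bps, 1),
        }
    return summary


# Hypothetical report with placeholder values (not real measurements).
sample_report = {
    "end": {
        "sum_sent": {"bits_per_second": 7.0e9},
        "sum_received": {"bits_per_second": 7.0e9},
    }
}

sample_summary = summarize_iperf3(sample_report)
print(sample_summary)
```

In practice the report would be loaded with `json.load()` from a saved result file instead of the inline sample, and the same function could be run against the SR-IOV, Linux bridge, and Fedora-to-Fedora results to compare them side by side.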

Should I assume that pfSense network performance is just this bad when using SR-IOV, or is there some tunable other than what I've read/followed on the Netgate wiki? I have seen complaints in the past about poor pfSense SR-IOV performance with other hypervisors, but SR-IOV is a really common use case at this point and it's incredibly useful to have for a security device. As we start to use pfSense in more virtualized deployment scenarios, it would be nice to see things like SR-IOV-based performance improved.

-

> I found that pfSense running with SR-IOV-based interfaces has ridiculously poor performance.

Perhaps it is not really made for that kind of performance and/or throughput? Have you considered giving TNSR a try?

> Considering that these interfaces are leveraging 25G XXV710-DA2 NICs...this is kind of terrible:

I have seen and heard about people getting around ~2 GBit/s in "real life" out of a 10 GBit/s NIC port, and 4 GBit/s to 4.7 GBit/s when measuring total throughput. So if you are now getting nearly 7 GBit/s with a 25 GBit/s adapter, by my count you have +1 GBit/s on top of the others using "only" 10 GBit/s hardware.

> Should I assume that pfSense network performance is just this bad when using SR-IOV, or is there some tunable other than what I've read/followed on the Netgate wiki?

Perhaps you could get somewhat more throughput here and there with one or more tunings, but this would have to be tested again and again until all the tunings work well together.

> As we start to use pfSense in more virtualized deployment scenarios, it would be nice to see things like SR-IOV-based performance improved.

pfSense uses FreeBSD as its underlying OS, and if this is a driver-related issue, there is not much they can do about it. But again, have you thought about TNSR in this case? As far as I know, it is made more for that kind of higher throughput.

-

@dobby_ I would expect TNSR to work as advertised. This initial test/demo was to see where pfSense is at in terms of SR-IOV, since it’s still Netgate’s answer to a virtualized firewall. Fair response/questions - thanks for the feedback.

-

@v1k0d3n Curious what your speed was when you actually used pfSense as a router vs. a host?

-

@johnpoz with or without SR-IOV? Maybe you're asking for both scenarios, but in the case with SR-IOV I don't even know if it's worth testing with what I'm able to tell at this point...