Suricata process dying due to hyperscan problem

-

@bmeeks said in Suricata process dying due to hyperscan problem:

@kiokoman said in Suricata process dying due to hyperscan problem:

@bmeeks

i have removed all the rules from an interface but the hyperscan error is still there after a few moments for me.

+noaslr is still doing nothing

any chance you can provide the dbg pkg of suricata?Not at the moment. I'm trying to reconstruct my package builder for the RELENG_2_7_2 branch of CE (which is the current 2.7.2 release), and that build is failing. Working with the Netgate team on that. Once I get my package builder working again, then I can build a debug package and perhaps share it.

Nothing else can happen until at least after this coming weekend as I am about to be out of town for a few days.

Well. i'm not in a hurry , i just like to solve mistery

-

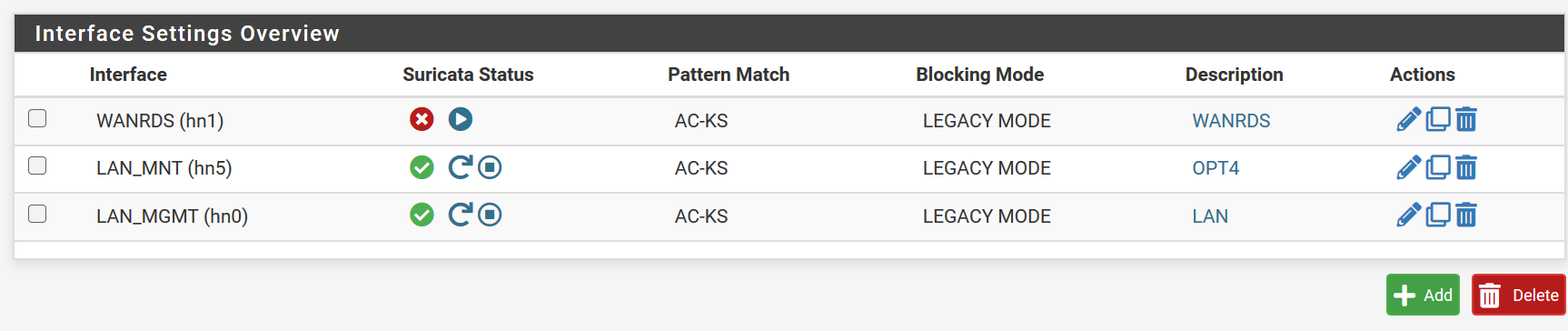

Suricata still hangs on interfaces with higher traffic, even though I set it to use AC-KS.

It is strange that the same message appears with the hyperscan error, although all interfaces are set to AC-KS:

[104160 - W#03] 2023-12-13 16:18:07 Error: spm-hs: Hyperscan returned fatal error -1.

-

@paulp same here:

[122730 - W#04] 2023-12-12 17:20:11 Error: spm-hs: Hyperscan returned fatal error -1.

-

@NRgia said in Suricata process dying due to hyperscan problem:

@Maltz said in Suricata process dying due to hyperscan problem:

@bmeeks said in Suricata process dying due to hyperscan problem:

I don't see the point in changing the default if users can just simply make the change manually and save it.

I think changing the default would be tremendously useful for people who have no way of knowing why Suricata is crashing over a month after the pfSense update that seemingly broke it. People who haven't, or don't have to expertise to, spend hours poring over system logs, find the right log entry to google, and make their way to this thread.

I beg to differ, why force everybody to use some settings as workaround, in order to track down an issue? This is not a test branch. As far as I understood from the posts here, this happens only if Suricata is in Legacy Mode. For example I use Suricata in inline mode on WAN and also on LAN with multiple VLANS and I don't encounter this issue. I'm not saying that we should not attempt to fix this, but forcing all of us to use the proposed defaults is bad practice.

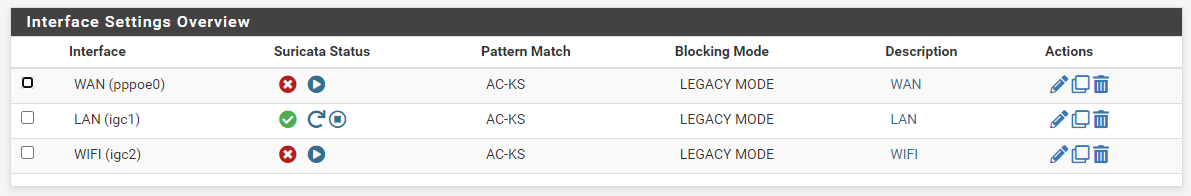

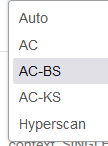

Let me rephrase: Changing the logic around the default "Auto" setting would be useful. If AC-BS is the only one that works reliably in Legacy Mode (it's the only one that works for me at any rate) then that's the one "Auto" should choose.

-

@Maltz

Exactly which is why i proposed it. As someone pointed out this isnt the dev branch this is the 'prod' branch so change the default to what is working for most instead of having people guess what the issue is, google it, find your way to the forum post and now a forum post which is already a 205 posts topic. Now the person needs to read all of this to conclude Hyperscan doesnt work. -

This issue is incredibly frustrating to pin down. It took a full day, but I just saw the Hyperscan error on one of my VMs. The ASLR change does seem to significantly improve my ability to start Suricata instances and keep them running but, it's not a viable workaround. I've switched over to AC-BS. I'll watch this for a few days and see what happens.

-

Good news! At least I hope so :)

I changed Pattern Match to AC-BS and for now I see it has been working for about 20 hours.

With the Pattern Matcher Algorithm set to Auto, AC-KS or Hyperscan, Suricata stopped after a few hours on the interfaces that had higher traffic. -

@bmeeks said in Suricata process dying due to hyperscan problem:

best workaround for you guys is to select AC-CS (the normal default when Hyperscan is not present

I don't seem to have AC-CS as an option on the routers I've checked?

-

@SteveITS

Ive read AC-KS is performing well enough. -

@SteveITS I assumed that was a typo. AC-BS is the only one that works for me.

-

[101504 - Suricata-Main] 2023-12-15 16:56:12 Notice: threads: Threads created -> RX: 1 W: 8 FM: 1 FR: 1 Engine started.

[153671 - RX#01-vmx2] 2023-12-15 16:56:18 Info: checksum: No packets with invalid checksum, assuming checksum offloading is NOT used

[153740 - W#05] 2023-12-15 17:24:54 Error: spm-hs: Hyperscan returned fatal error -1.15/12/2023 -- 17:24:54 - <Debug> -- sid 2200024: e 0x3919a4bcde90 Callback returned false 15/12/2023 -- 17:24:54 - <Debug> -- Returning: 0 ... << 15/12/2023 -- 17:24:54 - <Debug> -- [packet 0x3919c77ff200][no tunnel] no drop 15/12/2023 -- 17:24:54 - <Debug> -- flow 0x39195496b480 det_ctx->varlist 0x0 15/12/2023 -- 17:24:54 - <Debug> -- p->ts.tv_sec 1702657438 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- no match 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- Returning ... << 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- packet 0 15/12/2023 -- 17:24:54 - <Debug> -- sid 2200020: e 0x3919a4bcddd0 Callback returned false 15/12/2023 -- 17:24:54 - <Debug> -- Returning: 0 ... << 15/12/2023 -- 17:24:54 - <Debug> -- flow 0x39195493c7c0 det_ctx->varlist 0x0 15/12/2023 -- 17:24:54 - <Debug> -- inspecting signature id 2200025 15/12/2023 -- 17:24:54 - <Debug> -- fb 0x3919554fe600 fb->head 0x0 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- p 0x3919c6aec600 pkt 0x3919c6aec850 ether type 0800 15/12/2023 -- 17:24:54 - <Debug> -- pkt 0x3919c6aec85e len 454 15/12/2023 -- 17:24:54 - <Debug> -- flow 0x391954943980 det_ctx->varlist 0x0 15/12/2023 -- 17:24:54 - <Debug> -- no match 15/12/2023 -- 17:24:54 - <Debug> -- running match functions, sm 0x39195b115470 15/12/2023 -- 17:24:54 - <Debug> -- sid 2200020: e 0x3919a4bcddd0 Callback returned false 15/12/2023 -- 17:24:54 - <Debug> -- inspecting signature id 2200021 15/12/2023 -- 17:24:54 - <Debug> -- ssn 0x3919bc2d59c0, stream 0x3919bc2d59d0, p 0x3919c441ea00, p->payload_len 39 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- Returning: 0 ... << 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- IPV4 89.37.71.254->192.168.9.89 PROTO: 17 OFFSET: 0 RF: 0 DF: 0 MF: 0 ID: 40415 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- running match functions, sm 0x39195b115560 15/12/2023 -- 17:24:54 - <Debug> -- no match 15/12/2023 -- 17:24:54 - <Debug> -- UDP sp: 4090 -> dp: 60363 - HLEN: 8 LEN: 426 15/12/2023 -- 17:24:54 - <Debug> -- sid 2200110: e 0x3919a4bcec40 Callback returned false 15/12/2023 -- 17:24:54 - <Debug> -- inspecting signature id 2200111 15/12/2023 -- 17:24:54 - <Debug> -- flow 0x39195493cf40 det_ctx->varlist 0x0 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- flow 0x39195496c380 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- running match functions, sm 0x39195b115a30 15/12/2023 -- 17:24:54 - <Debug> -- ports 4090->60363 ports -1 -1 -1 -1 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- running match functions, sm 0x39195b1155a0 15/12/2023 -- 17:24:54 - <Debug> -- inspecting signature id 2200021 15/12/2023 -- 17:24:54 - <Debug> -- flow 0x39195496f940 det_ctx->varlist 0x0 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- Returning: 0 ... << 15/12/2023 -- 17:24:54 - <Debug> -- Returning: 0 ... << 15/12/2023 -- 17:24:54 - <Debug> -- running match functions, sm 0x39195b115560 15/12/2023 -- 17:24:54 - <Debug> -- ports 4090->60363 ports 6081 -1 -1 -1 15/12/2023 -- 17:24:54 - <Debug> -- no match 15/12/2023 -- 17:24:54 - <Debug> -- ports 4090->60363 ports 4789 -1 -1 -1 15/12/2023 -- 17:24:54 - <Debug> -- sid 2200005: e 0x3919a4bcdb30 Callback returned false 15/12/2023 -- 17:24:54 - <Debug> -- Returning: 0 ... << 15/12/2023 -- 17:24:54 - <Debug> -- flow 0x3919549700c0 det_ctx->varlist 0x0 15/12/2023 -- 17:24:54 - <Debug> -- no match 15/12/2023 -- 17:24:54 - <Debug> -- sid 2200025: e 0x3919a4bcdec0 Callback returned false 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- inspecting signature id 2200111 15/12/2023 -- 17:24:54 - <Debug> -- Returning: 0 ... << 15/12/2023 -- 17:24:54 - <Debug> -- no match 15/12/2023 -- 17:24:54 - <Debug> -- Returning: 0 ... << 15/12/2023 -- 17:24:54 - <Debug> -- flow 0x39195496b480 det_ctx->varlist 0x0 15/12/2023 -- 17:24:54 - <Debug> -- Returning ... << 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- sid 2200021: e 0x3919a4bcde00 Callback returned false 15/12/2023 -- 17:24:54 - <Debug> -- packet 0 -- flow 0x39195496c380 15/12/2023 -- 17:24:54 - <Debug> -- inspecting signature id 2200026 15/12/2023 -- 17:24:54 - <Debug> -- packet 0 has flow? yes 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- packet 0 is TCP. Direction TOSERVER 15/12/2023 -- 17:24:54 - <Debug> -- inspecting signature id 2200006 15/12/2023 -- 17:24:54 - <Debug> -- p->pcap_cnt 0 direction toserver pkt_src wire/pcap 15/12/2023 -- 17:24:54 - <Debug> -- time we got is 1702657494 sec, 604115 usec 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- Returning ... << 15/12/2023 -- 17:24:54 - <Debug> -- Returning: 0 ... << 15/12/2023 -- 17:24:54 - <Debug> -- Returning: 0 ... << 15/12/2023 -- 17:24:54 - <Debug> -- no match 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- stream->seg_tree RB_MIN 0x3919bb7d63c0 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- sid 2200021: e 0x3919a4bcde00 Callback returned false 15/12/2023 -- 17:24:54 - <Debug> -- flow 0x39195493cf40 det_ctx->varlist 0x0 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- inspecting signature id 2200022 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- p->pcap_cnt 0 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- stream_offset 0 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- 0 -> seg 0x3919bb7d63c0 seq 2065244386 abs 0 size 318 abs 318 (0) ack:fully ack'd 15/12/2023 -- 17:24:54 - <Debug> -- allocated a new packet only using alloc... 15/12/2023 -- 17:24:54 - <Debug> -- size 318, cnt 1 15/12/2023 -- 17:24:54 - <Debug> -- flow 0x39195493c7c0 det_ctx->varlist 0x0 15/12/2023 -- 17:24:54 - <Debug> -- inspecting signature id 2200022 15/12/2023 -- 17:24:54 - <Debug> -- no match 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- stream_offset 0 15/12/2023 -- 17:24:54 - <Debug> -- running match functions, sm 0x39195b1155b0 15/12/2023 -- 17:24:54 - <Debug> -- running match functions, sm 0x39195b115480 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- Returning: 0 ... << 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- no match 15/12/2023 -- 17:24:54 - <Debug> -- start: pcap_cnt 0, policy 0 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- end 15/12/2023 -- 17:24:54 - <Debug> -- running match functions, sm 0x39195b115570 15/12/2023 -- 17:24:54 - <Debug> -- running match functions, sm 0x39195b115a30 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- sid 2200111: e 0x3919a4bcec70 Callback returned false 15/12/2023 -- 17:24:54 - <Debug> -- Returning: 0 ... << 15/12/2023 -- 17:24:54 - <Debug> -- size 0, cnt 0 15/12/2023 -- 17:24:54 - <Debug> -- p->ts.tv_sec 1702657438 15/12/2023 -- 17:24:54 - <Debug> -- Returning: 0 ... << 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- Midstream not enabled, so won't pick up a session 15/12/2023 -- 17:24:54 - <Debug> -- no match 15/12/2023 -- 17:24:54 - <Debug> -- size 318, packet 0 15/12/2023 -- 17:24:54 - <Debug> -- no match 15/12/2023 -- 17:24:54 - <Debug> -- app progress 0 15/12/2023 -- 17:24:54 - <Debug> -- sid 2200006: e 0x3919a4bcdb60 Callback returned false 15/12/2023 -- 17:24:54 - <Debug> -- sid 2200111: e 0x3919a4bcec70 Callback returned false 15/12/2023 -- 17:24:54 - <Debug> -- flow 0x391954943980 det_ctx->varlist 0x0 15/12/2023 -- 17:24:54 - <Debug> -- Returning: 0 ... << 15/12/2023 -- 17:24:54 - <Debug> -- inspecting signature id 2200112 15/12/2023 -- 17:24:54 - <Debug> -- sid 2200022: e 0x3919a4bcde30 Callback returned false 15/12/2023 -- 17:24:54 - <Debug> -- flow 0x3919549700c0 det_ctx->varlist 0x0 15/12/2023 -- 17:24:54 - <Debug> -- last_ack 2065244704 (abs 318), base_seq 2065244386 15/12/2023 -- 17:24:54 - <Debug> -- inspecting signature id 2200008 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- flow 0x39195496f940 det_ctx->varlist 0x0 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- p 0x3919c6b16c00 pkt 0x3919c6b16e50 ether type 0800 15/12/2023 -- 17:24:54 - <Debug> -- running match functions, sm 0x39195b115570 15/12/2023 -- 17:24:54 - <Debug> -- packet 0: extra packets 0 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- packet 0 calling Detect 15/12/2023 -- 17:24:54 - <Debug> -- Returning: 0 ... << 15/12/2023 -- 17:24:54 - <Debug> -- inspecting signature id 2200112 15/12/2023 -- 17:24:54 - <Debug> -- flow 0x39195493cf40 det_ctx->varlist 0x0 15/12/2023 -- 17:24:54 - <Debug> -- inspecting signature id 2200023 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- running match functions, sm 0x39195b115490 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- p->pcap_cnt 0 direction toserver pkt_src wire/pcap 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- running match functions, sm 0x39195b115a40 15/12/2023 -- 17:24:54 - <Debug> -- getting one blob 15/12/2023 -- 17:24:54 - <Debug> -- no match 15/12/2023 -- 17:24:54 - <Debug> -- sid 2200022: e 0x3919a4bcde30 Callback returned false 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- flow 0x39195493c7c0 det_ctx->varlist 0x0 15/12/2023 -- 17:24:54 - <Debug> -- gap_ahead false mydata_len 318 15/12/2023 -- 17:24:54 - <Debug> -- inspecting signature id 2200023 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- Returning: 0 ... << 15/12/2023 -- 17:24:54 - <Debug> -- Returning: 0 ... << 15/12/2023 -- 17:24:54 - <Debug> -- no match 15/12/2023 -- 17:24:54 - <Debug> -- sid 2200008: e 0x3919a4bcdb90 Callback returned false 15/12/2023 -- 17:24:54 - <Debug> -- running match functions, sm 0x39195b115a40 15/12/2023 -- 17:24:54 - <Debug> -- pkt 0x3919c6b16e5e len 106 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- IPV4 89.37.71.254->192.168.9.89 PROTO: 17 OFFSET: 0 RF: 0 DF: 0 MF: 0 ID: 40419 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- running match functions, sm 0x39195b115580 15/12/2023 -- 17:24:54 - <Debug> -- running match functions, sm 0x39195b115580 15/12/2023 -- 17:24:54 - <Debug> -- UDP sp: 4090 -> dp: 60363 - HLEN: 8 LEN: 78 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- flow 0x3919549700c0 det_ctx->varlist 0x0 15/12/2023 -- 17:24:54 - <Debug> -- flag STREAM_TOSERVER set 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- p->flowflags 0x21 15/12/2023 -- 17:24:54 - <Debug> -- packet doesn't have established flag set (proto 6) 15/12/2023 -- 17:24:54 - <Debug> -- Returning: 0 ... << 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- Returning: 0 ... << 15/12/2023 -- 17:24:54 - <Debug> -- testing against "ip-only" signatures 15/12/2023 -- 17:24:54 - <Debug> -- ssn->state established 15/12/2023 -- 17:24:54 - <Debug> -- ports 4090->60363 ports -1 -1 -1 -1 15/12/2023 -- 17:24:54 - <Debug> -- stream 0x3919bc2d5a60 data in buffer 0x3919bc2d5a98 of len 318 and offset 0 15/12/2023 -- 17:24:54 - <Debug> -- ports 4090->60363 ports 6081 -1 -1 -1 15/12/2023 -- 17:24:54 - <Debug> -- GAP?2 15/12/2023 -- 17:24:54 - <Debug> -- parser 15/12/2023 -- 17:24:54 - <Debug> -- no match 15/12/2023 -- 17:24:54 - <Debug> -- Returning: 0 ... << 15/12/2023 -- 17:24:54 - <Debug> -- no match 15/12/2023 -- 17:24:54 - <Debug> -- Returning: 0 ... << 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- no match 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- sid 2200023: e 0x3919a4bcde60 Callback returned false 15/12/2023 -- 17:24:54 - <Debug> -- inspecting signature id 2200009 15/12/2023 -- 17:24:54 - <Debug> -- sid 2200112: e 0x3919a4bceca0 Callback returned false 15/12/2023 -- 17:24:54 - <Debug> -- no match 15/12/2023 -- 17:24:54 - <Debug> -- sid 2200112: e 0x3919a4bceca0 Callback returned false 15/12/2023 -- 17:24:54 - <Debug> -- sid 2200026: e 0x3919a4bcdef0 Callback returned false 15/12/2023 -- 17:24:54 - <Debug> -- flow 0x39195496f940 det_ctx->varlist 0x0 15/12/2023 -- 17:24:54 - <Debug> -- no match 15/12/2023 -- 17:24:54 - <Debug> -- inspecting signature id 2200114 15/12/2023 -- 17:24:54 - <Debug> -- sid 2200023: e 0x3919a4bcde60 Callback returned false 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- flow 0x39195496b480 det_ctx->varlist 0x0 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- inspecting signature id 2200027 15/12/2023 -- 17:24:54 - <Debug> -- data_len 318 flags 05 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- Stream initializer (len 318) 15/12/2023 -- 17:24:54 - <Debug> -- running match functions, sm 0x39195b115a50 15/12/2023 -- 17:24:54 - <Debug> -- running match functions, sm 0x39195b1154a0 15/12/2023 -- 17:24:54 - <Debug> -- flow 0x39195493cf40 det_ctx->varlist 0x0 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- ports 4090->60363 ports 4789 -1 -1 -1 15/12/2023 -- 17:24:54 - <Debug> -- Returning ... << 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- Returning: 0 ... << 15/12/2023 -- 17:24:54 - <Debug> -- flow 0x391954943980 det_ctx->varlist 0x0 15/12/2023 -- 17:24:54 - <Debug> -- flow 0x39195493c7c0 det_ctx->varlist 0x0 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- inspecting signature id 2200024 15/12/2023 -- 17:24:54 - <Debug> -- Returning: 0 ... << 15/12/2023 -- 17:24:54 - <Debug> -- no match 15/12/2023 -- 17:24:54 - <Debug> -- no match 15/12/2023 -- 17:24:54 - <Debug> -- sid 2200114: e 0x3919a4bcecd0 Callback returned false 15/12/2023 -- 17:24:54 - <Debug> -- inspecting signature id 2200024 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- Returning: 0 ... << 15/12/2023 -- 17:24:54 - <Debug> -- inspecting signature id 2200114 15/12/2023 -- 17:24:54 - <Debug> -- flow 0x39195496f940 det_ctx->varlist 0x0 15/12/2023 -- 17:24:54 - <Debug> -- Returning: 0 ... << 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- time we got is 1702657494 sec, 605547 usec 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- inspecting signature id 2200115 15/12/2023 -- 17:24:54 - <Debug> -- sid 2200009: e 0x3919a4bcdbc0 Callback returned false 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- Returning ... << 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- running match functions, sm 0x39195b115590 15/12/2023 -- 17:24:54 - <Debug> -- running match functions, sm 0x39195b115a60 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- flow 0x3919549700c0 det_ctx->varlist 0x0 15/12/2023 -- 17:24:54 - <Debug> -- allocated a new packet only using alloc... 15/12/2023 -- 17:24:54 - <Debug> -- inspecting signature id 2200010 15/12/2023 -- 17:24:54 - <Debug> -- p->ts.tv_sec 1702657438 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- buflen 318 for toserver direction 15/12/2023 -- 17:24:54 - <Debug> -- Returning: 0 ... << 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- running match functions, sm 0x39195b115590 15/12/2023 -- 17:24:54 - <Debug> -- running match functions, sm 0x39195b1155c0 15/12/2023 -- 17:24:54 - <Debug> -- Returning: 0 ... << 15/12/2023 -- 17:24:54 - <Debug> -- no match 15/12/2023 -- 17:24:54 - <Debug> -- no match 15/12/2023 -- 17:24:54 - <Debug> -- searchlen 17 buflen 318 15/12/2023 -- 17:24:54 - <Debug> -- sid 2200115: e 0x3919a4bced00 Callback returned false 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- p 0x3919c6b6a800 pkt 0x3919c6b6aa50 ether type 0800 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- pkt 0x3919c6b6aa5e len 1480 15/12/2023 -- 17:24:54 - <Debug> -- IPV4 3.160.212.64->192.168.11.161 PROTO: 6 OFFSET: 0 RF: 0 DF: 0 MF: 0 ID: 44798 15/12/2023 -- 17:24:54 - <Debug> -- flow 0x39195496f940 det_ctx->varlist 0x0 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- Returning: 0 ... << 15/12/2023 -- 17:24:54 - <Debug> -- TCP sp: 443 -> dp: 55288 - HLEN: 20 LEN: 1460 15/12/2023 -- 17:24:54 - <Debug> -- no match 15/12/2023 -- 17:24:54 - <Debug> -- running match functions, sm 0x39195b115a50 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- inspecting signature id 2200116 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- Returning: 0 ... << 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- Returning: 0 ... << 15/12/2023 -- 17:24:54 - <Debug> -- running match functions, sm 0x39195b115a70 15/12/2023 -- 17:24:54 - <Debug> -- Hyperscan Match 0: id=48 @ 8 (pat id=67) 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- tcp toserver 0x3919a3e38060, tcp toclient 0x3919a3e4a520: going to use 0x3919a3e38060 15/12/2023 -- 17:24:54 - <Debug> -- Returning: 0 ... << 15/12/2023 -- 17:24:54 - <Debug> -- tcp port 5900 -> 54479:5900 15/12/2023 -- 17:24:54 - <Debug> -- Returning: 0 ... << 15/12/2023 -- 17:24:54 - <Debug> -- no match 15/12/2023 -- 17:24:54 - <Debug> -- running match functions, sm 0x39195b1154b0 15/12/2023 -- 17:24:54 - <Debug> -- sid 2200027: e 0x3919a4bcdf20 Callback returned false 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- sid 2200024: e 0x3919a4bcde90 Callback returned false 15/12/2023 -- 17:24:54 - <Debug> -- flow 0x39195493c7c0 det_ctx->varlist 0x0 15/12/2023 -- 17:24:54 - <Debug> -- no match 15/12/2023 -- 17:24:54 - <Debug> -- time we got is 1702657494 sec, 605983 usec 15/12/2023 -- 17:24:54 - <Debug> -- TCP list 0x3919a3e38060, port 5900, direction toserver, sghport 0x3919555b79a0, sgh 0x3919a39ff8a0 15/12/2023 -- 17:24:54 - <Debug> -- Returning: 0 ... << 15/12/2023 -- 17:24:54 - <Debug> -- Returning pointer 0x3919a39ff8a0 of type SigGroupHead ... << 15/12/2023 -- 17:24:54 - <Debug> -- Returning: 0 ... << 15/12/2023 -- 17:24:54 - <Debug> -- Adding 1 sids 15/12/2023 -- 17:24:54 - <Debug> -- no match 15/12/2023 -- 17:24:54 - <Debug> -- inspecting signature id 2200025 15/12/2023 -- 17:24:54 - <Debug> -- sid 2200114: e 0x3919a4bcecd0 Callback returned false 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- sid 2200024: e 0x3919a4bcde90 Callback returned false 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- flow 0x39195493cf40 det_ctx->varlist 0x0 15/12/2023 -- 17:24:54 - <Debug> -- s->co->offset (0) s->cd->depth (8) 15/12/2023 -- 17:24:54 - <Debug> -- inspecting signature id 2200025 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- sid 2200116: e 0x3919a4bced30 Callback returned false 15/12/2023 -- 17:24:54 - <Debug> -- flow 0x39195496f940 det_ctx->varlist 0x0 15/12/2023 -- 17:24:54 - <Debug> -- flow 0x391954943980 det_ctx->varlist 0x0 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- inspecting signature id 2200115 15/12/2023 -- 17:24:54 - <Debug> -- running match functions, sm 0x39195b1155a0 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- Returning ... << 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- inspecting signature id 2200117 15/12/2023 -- 17:24:54 - <Debug> -- running match functions, sm 0x39195b115a60 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- flow 0x39195496b480 det_ctx->varlist 0x0 15/12/2023 -- 17:24:54 - <Debug> -- inspecting signature id 220002815/12/2023 -- 17:24:54 - <Debug> -- Entering ... >>

15/12/2023 -- 17:24:54 - <Error> -- Hyperscan returned fatal error -1.15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- no match 15/12/2023 -- 17:24:54 - <Debug> -- requesting disabling filestore for flow 15/12/2023 -- 17:24:54 - <Debug> -- requesting disabling magic for flow 15/12/2023 -- 17:24:54 - <Debug> -- running match functions, sm 0x39195b1155a0 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- sid 2200010: e 0x3919a4bcdbf0 Callback returned false 15/12/2023 -- 17:24:54 - <Debug> -- running match functions, sm 0x39195b115a80 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- Returning: 0 ... << 15/12/2023 -- 17:24:54 - <Debug> -- Returning: 0 ... << 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- requesting disabling md5 for flow 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- Returning: 0 ... << 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- flow 0x3919549700c0 det_ctx->varlist 0x0 15/12/2023 -- 17:24:54 - <Debug> -- no match 15/12/2023 -- 17:24:54 - <Debug> -- no match 15/12/2023 -- 17:24:54 - <Debug> -- sid 2200025: e 0x3919a4bcdec0 Callback returned false 15/12/2023 -- 17:24:54 - <Debug> -- no match 15/12/2023 -- 17:24:54 - <Debug> -- requesting disabling sha1 for flow 15/12/2023 -- 17:24:54 - <Debug> -- allocated a new packet only using alloc... 15/12/2023 -- 17:24:54 - <Debug> -- Returning: 0 ... << 15/12/2023 -- 17:24:54 - <Debug> -- flow 0x39195493cf40 det_ctx->varlist 0x0 15/12/2023 -- 17:24:54 - <Debug> -- inspecting signature id 2200011 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- sid 2200115: e 0x3919a4bced00 Callback returned false 15/12/2023 -- 17:24:54 - <Debug> -- flow 0x391954943980 det_ctx->varlist 0x0 15/12/2023 -- 17:24:54 - <Debug> -- no match 15/12/2023 -- 17:24:54 - <Debug> -- inspecting signature id 2200116 15/12/2023 -- 17:24:54 - <Debug> -- p->ts.tv_sec 1702657438 15/12/2023 -- 17:24:54 - <Debug> -- inspecting signature id 2200026 15/12/2023 -- 17:24:54 - <Debug> -- running match functions, sm 0x39195b1155d0 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- p 0x3919c6cc5000 pkt 0x3919c6cc5250 ether type 0800 15/12/2023 -- 17:24:54 - <Debug> -- requesting disabling sha256 for flow 15/12/2023 -- 17:24:54 - <Debug> -- requesting disabling filesize for flow 15/12/2023 -- 17:24:54 - <Debug> -- sid 2200117: e 0x3919a4bced60 Callback returned false 15/12/2023 -- 17:24:54 - <Debug> -- sid 2200025: e 0x3919a4bcdec0 Callback returned false 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- flow 0x39195493c7c0 det_ctx->varlist 0x0 15/12/2023 -- 17:24:54 - <Debug> -- pkt 0x3919c6cc525e len 1135 15/12/2023 -- 17:24:54 - <Debug> -- running match functions, sm 0x39195b115a70 15/12/2023 -- 17:24:54 - <Debug> -- IPV4 3.160.212.64->192.168.11.161 PROTO: 6 OFFSET: 0 RF: 0 DF: 0 MF: 0 ID: 44799 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- Returning: 0 ... << 15/12/2023 -- 17:24:54 - <Debug> -- running match functions, sm 0x39195b1154c0 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- running match functions, sm 0x39195b1155b0 15/12/2023 -- 17:24:54 - <Debug> -- Returning: 0 ... << 15/12/2023 -- 17:24:54 - <Debug> -- flow 0x39195496f940 det_ctx->varlist 0x0 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- inspecting signature id 2200026 15/12/2023 -- 17:24:54 - <Debug> -- no match 15/12/2023 -- 17:24:54 - <Debug> -- no match 15/12/2023 -- 17:24:54 - <Debug> -- TCP sp: 443 -> dp: 55288 - HLEN: 20 LEN: 1115 15/12/2023 -- 17:24:54 - <Debug> -- sid 2200028: e 0x3919a4bcdf50 Callback returned false 15/12/2023 -- 17:24:54 - <Debug> -- f->file_flags 0555 set_file_flags 0555 g_file_flow_mask 0000 15/12/2023 -- 17:24:54 - <Debug> -- sid 2200116: e 0x3919a4bced30 Callback returned false 15/12/2023 -- 17:24:54 - <Debug> -- sgh non_pf ptr 0x3919a7a2e400 cnt 291 (syn 0x3919a7a2ee00/306, other 0x3919a7a2e400/291) 15/12/2023 -- 17:24:54 - <Debug> -- inspecting signature id 2200118 15/12/2023 -- 17:24:54 - <Debug> -- flow 0x39195496b480 det_ctx->varlist 0x0 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- flow 0x391954943980 det_ctx->varlist 0x0 15/12/2023 -- 17:24:54 - <Debug> -- Returning: 0 ... << 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- Returning: 0 ... << 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> 15/12/2023 -- 17:24:54 - <Debug> -- no match 15/12/2023 -- 17:24:54 - <Debug> -- Returning: 0 ... << 15/12/2023 -- 17:24:54 - <Debug> -- inspecting signature id 2200030 15/12/2023 -- 17:24:54 - <Debug> -- Entering ... >> -

AC-BS on two 6100 in CARP/HA makes primary partially hang (after boot when suricata starts) and secondary takes over the interface that suricata was about to start on primary which results in traffic not working at all as primary still thinks it is master for the same interface. Hyperscan coredumps immediately when suricata starts.

-

@btspce interesting…not seeing that on our 6100 pair.

only a boot not a stop/start? (Not sure I restarted again after Suricata install)

only a boot not a stop/start? (Not sure I restarted again after Suricata install) -

@SteveITS The webgui hangs after install and during the start of suricata or after boot of the 6100 when suricata starts. CPU goes to 80-100% and after about 10 seconds webgui hangs. No more updates. Killing the suricata process and restart php-fpm restores access to webgui where I can see that both primary and secondary firewall is now master for the same interface and no traffic passes.

No issues when running 23.05.1

-

i never mentioned it here but i'm running on a CARP environment, are we all running on carp or there is people affected without carp?

-

@kiokoman

Not running CARP here. -

Same problems after upgrading to 23.09.1

don't run carp -

@lukasz-s,

I'm encountering issues with starting Suricata on one of my PFsense installations, and I'm seeking assistance and sharing my observations with the community.

I currently have two similar setups with High Availability (HA) and CARP configurations, both running as virtual machines in Proxmox. However, one of them is working perfectly on PFsense 2.7.2, while the other encountered issues after updating to the same version.

Working System (referred to as "System A"):

- 8 Cores (HOST) without NUMA.

- 16GB of RAM.

- Virtio trunk interface with 8 queues.

- Two PF VMs running on two nodes within a cluster.

- Proxmox hardware with 2 x Xeon(R) Gold 6230 CPUs and 2 dual-port 100GB Mellanox cards.

Non-working System (referred to as "System B"):

- 6 Cores (HOST) with NUMA.

- 12GB of RAM.

- Dual-port Mellanox 25Gb/s NICs passed through to the PF VM and configured as LAGG.

- Two PF VMs running on two nodes within a cluster.

- Proxmox hardware with 2 x Intel(R) Xeon(R) CPU E5-2620.

On November 14th, I upgraded Suricata from version 6.x to 7.0.2 on one of the nodes in System B, while still running PFsense 2.7.0. This upgrade caused the GUI to crash, and I had to restart it from the console. I encountered a PHP error in the log, which I unfortunately don't have access to anymore. After this incident, I was unable to start Suricata on the interface.

Due to time constraints, I reverted to a snapshot to get it running again. During this period, I also tested Suricata installations on other systems for comparison, both with and without HA. Surprisingly, I couldn't reproduce the problem on any of these test systems, all running PFsense 2.7.2. This led me to believe that the issue might have been fixed.

Subsequently, I upgraded System A to PFsense 2.7.2 without any issues with Suricata or other components. However, when I upgraded the backup node of System B, the upgrade completed successfully, but Suricata failed to start once again.

Upon examining the Suricata log file, I found no errors; the last line indicated that it had started. However, the system.log displayed the following entries:

- "php-fpm[27588]: Starting Suricata on WAN_GC(lagg0.10) per user request..."

- "php[13124]: [Suricata] Updating rules configuration for: WAN_GC ..."

- "php[13124]: [Suricata] Enabling any flowbit-required rules for: WAN_GC..."

- "php[13124]: [Suricata] Building new sid-msg.map file for WAN_GC..."

- "php[13124]: [Suricata] Suricata START for v10(lagg0.10)..."

- "kernel: pid 16716 (suricata), jid 0, uid 0: exited on signal 11 (core dumped)"

It appears that Suricata crashes immediately upon startup.

Here are the steps I've taken and my findings:

- Uninstalling the Suricata package with the "retain config" option unchecked and reinstalling it did not resolve the issue.

- Disabling all rules allowed the interface to start.

- Enabling a single rule also allowed it to start, but enabling a different single rule had the same effect. Enabling both prevented Suricata from starting.

- Increasing values in "WAN - Flow" and "Stream" did not make a difference.

- Trying various settings in the system tunable found in forums had no effect.

- Disabling "Block offenders" allowed Suricata to start.

- Discovering the issue with hyperscan: Setting "Pattern Matcher Algorithm" to "AC" instead of "Auto" allowed Suricata to start and work with all rules active.

- Disabling NUMA in Proxmox did not help.

Both System A and B have very similar configurations, including mostly the same packages, with the main difference being the network interface cards. System B uses a dual 25G Mellanox card passed through, while System A uses a virtio NIC. However, I also attempted to start Suricata on a virtio card in System B, which was not in use, but it also refused to start. Additionally, System A has a newer CPU. System B employs LAGG with multiple VLANs, while System A has its LAGG managed by Proxmox and only sees a single virtio card with a VLAN trunk. System A has 29 VLAN interfaces, while System B has only 12.

Both systems were running Suricata PF pkg 7.0.2_2 and suricata-7.0.2_5, with all other packages up to date.

I have since reverted System B to a previous state, preventing me from conducting further tests. Nevertheless, I hope this information proves useful to the developers in diagnosing and resolving the issue.

Thank you.

-

upgraded Suricata from version 6.x to 7.0.2 on one of the nodes in System B, while still running PFsense 2.7.0. This upgrade caused the GUI to crash

It’s mostly expected to have problems if upgrading packages before pfSense versions…need to change your update branch to Previous first. Otherwise it may pull in later libraries etc.

-

Thanks. I don't remember exactly what happened back then but the newer version of Suricata was available in the package list, but no new PF-update was listed. I then believed that it belonged to my current PF version. I reverted back to a snapshot, and all was good after then.

The problem I have now, is not related to above, since this time PF was updated to 2.7.2, and Suricata was updated automatically along with it.