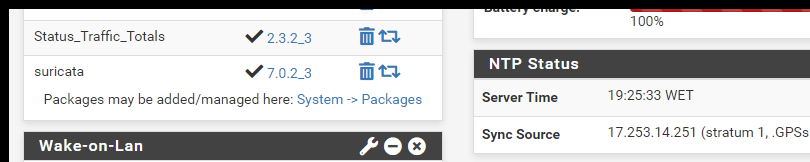

7.0.2_3 update "broke" IPS (netamap) on LAN interface (?)

-

Hi,

I'm not using Suricata but it seems related to this:

https://forum.netgate.com/topic/185935/warning-for-suricata-users-upstream-bug-can-be-triggered-if-you-alter-the-new-mid-stream-policy-default

-

Hi,

Thanks for the tip, suspiciously similar to what we are experiencing.

However, neither UDP nor ICMP works; the interface is completely dead. @bmeeks wrote in that post: "ICMP and UDP should still work,"

For example, ICMP:

I know this because I pinged the interface (ping -t) to see when it comes back, and in the 2 minutes it is available I can immediately drop the faulty Suricata.

---edit:

it's not true that it's completely dead, sorry. I didn't measure it, but it's dead for about 5-6 minutes, then it comes back for 1-2 minutes, and so on.

@DaddyGo said in 7.0.2_3 update "broke" IPS (netamap) on LAN interface (?):

Hi,

Thanks for the tip, suspiciously similar to what we are experiencing.

However, neither UDP nor ICMP works; the interface is completely dead. @bmeeks wrote in that post: "ICMP and UDP should still work,"

For example, ICMP:

I know this because I pinged the interface (ping -t) to see when it comes back, and in the 2 minutes it is available I can immediately drop the faulty Suricata.

---edit:

it's not true that it's completely dead, sorry. I didn't measure it, but it's dead for about 5-6 minutes, then it comes back for 1-2 minutes, and so on.

Here is the upstream bug report: https://redmine.openinfosecfoundation.org/issues/6726.

OPNsense users were experiencing it worse due to the default settings in their Suricata package. More info about that is in some new posts to a previously closed Suricata netmap ticket here: https://redmine.openinfosecfoundation.org/issues/5744#note-81.

As I understand the bug, flow hashes are not calculated correctly in every situation, and the resulting mismatch in the flow IDs used internally by the Suricata engine causes some traffic to be dropped.
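
To make the flow-hash idea above more concrete, here is a toy model in Python. It is not Suricata's actual hashing code: the `flow_hash` function, the made-up device ids, and the use of SHA-256 are all assumptions for illustration. It only shows how mixing a capture-device id into the key can give the two directions of one connection different hashes, and how leaving the device out of the key avoids that.

```python
import hashlib

def flow_hash(src, sport, dst, dport, proto, dev_id, use_for_tracking=True):
    """Toy flow hash: direction-independent 5-tuple, optionally mixed with a device id."""
    # Sort the endpoints so both directions of a flow produce the same key.
    a, b = sorted([(src, sport), (dst, dport)])
    # Hypothetical stand-in for the capture device's contribution to the key.
    dev = dev_id if use_for_tracking else 0
    key = f"{a}|{b}|{proto}|{dev}".encode()
    return hashlib.sha256(key).hexdigest()[:8]

# One TCP flow whose two directions are seen on different devices (ids 1 and 2 are made up).
out = flow_hash("10.0.0.5", 51000, "93.184.216.34", 443, 6, dev_id=1)
back = flow_hash("93.184.216.34", 443, "10.0.0.5", 51000, 6, dev_id=2)
print(out == back)  # False: the device id splits one flow into two

out = flow_hash("10.0.0.5", 51000, "93.184.216.34", 443, 6, dev_id=1, use_for_tracking=False)
back = flow_hash("93.184.216.34", 443, "10.0.0.5", 51000, 6, dev_id=2, use_for_tracking=False)
print(out == back)  # True: the device is no longer part of the key
```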

-

@DaddyGo:

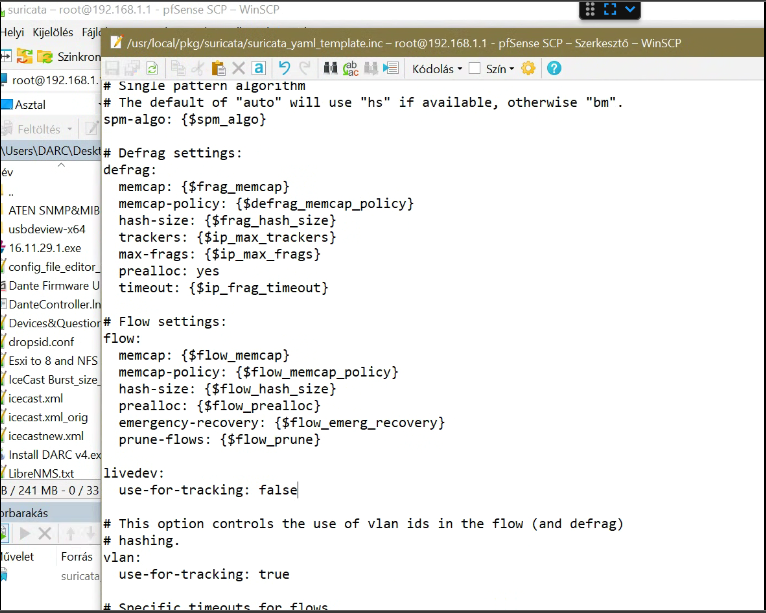

If you want, give this modification a try and see if it helps the traffic stall problem.

Navigate to the file `/usr/local/pkg/suricata/suricata_yaml_template.inc` and open it for editing. You can use the built-in pfSense editor under DIAGNOSTICS > EDIT FILE for this.

Scroll down in that file and find this section of text:

    # Flow settings:
    flow:
      memcap: {$flow_memcap}
      memcap-policy: {$flow_memcap_policy}
      hash-size: {$flow_hash_size}
      prealloc: {$flow_prealloc}
      emergency-recovery: {$flow_emerg_recovery}
      prune-flows: {$flow_prune}

Add this text immediately under the section, taking care to preserve line breaks and indents exactly as shown:

    livedev:
      use-for-tracking: false

When completed, the new section of text in the file should look like this:

    # Flow settings:
    flow:
      memcap: {$flow_memcap}
      memcap-policy: {$flow_memcap_policy}
      hash-size: {$flow_hash_size}
      prealloc: {$flow_prealloc}
      emergency-recovery: {$flow_emerg_recovery}
      prune-flows: {$flow_prune}
    livedev:
      use-for-tracking: false

Save the change to the file, then return to the Suricata GUI and open each configured Suricata interface for editing.

Simply click Save on the page and that will regenerate the `suricata.yaml` file for the interface using the new setting from the template file.

See if this allows Suricata to work properly in netmap mode. Please let me know if this helps. It is likely a tweak I need to make as a new default in the package if it works.
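
For anyone who would rather script the template edit described above than do it by hand, here is a minimal Python sketch. It works on the template text only. The helper name `add_livedev_block`, the two-space indent, and the choice to key on the `prune-flows:` line are assumptions for illustration, so check them against your own copy of the file before relying on it.

```python
LIVEDEV_BLOCK = "livedev:\n  use-for-tracking: false\n"

def add_livedev_block(template: str) -> str:
    """Return the template text with the livedev block placed right after the flow section."""
    if "use-for-tracking:" in template:
        return template  # already present, leave the text alone
    lines = template.splitlines(keepends=True)
    for i, line in enumerate(lines):
        # Assumption: prune-flows is the last key of the flow section.
        if line.strip().startswith("prune-flows:"):
            if not line.endswith("\n"):
                lines[i] = line + "\n"
            lines.insert(i + 1, LIVEDEV_BLOCK)
            return "".join(lines)
    raise ValueError("flow section not found; template layout differs")

# Shortened sample of the flow section, for demonstration only.
sample = (
    "# Flow settings:\n"
    "flow:\n"
    "  memcap: {$flow_memcap}\n"
    "  prune-flows: {$flow_prune}\n"
)
print(add_livedev_block(sample))
```

Running it a second time on its own output changes nothing, which makes it safe to repeat after a package reinstall restores the template. You still need to click Save on each Suricata interface afterwards so that `suricata.yaml` is regenerated.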

-

depending on your Suricata packet capture settings, you may just need to increase netmap thread buffer sizes. this can be done via System / Advanced / System Tunables.

any jumbo pathways (i.e. packets or frames) anywhere on relevant infrastructure (i.e. the pfSense host itself, or any directly-connected routing or switching devices)? we've used `dev.netmap.buf_size="9280"` in environments with jumbo paths, but you might be able to get Suricata to come up with only "4096" if using normal/default MTUs. reboot after adding the tunable (and with Suricata reenabled so you can confirm everything comes up).

you may also need to do some additional system or Suricata tuning. again, that depends on environment and Suricata pcap settings. you could disable Suricata pcaps altogether (Services / Suricata / Interfaces / [interface] / Settings / Logging Settings, uncheck "Enable Packet Log") before attempting anything else, just to confirm that that's what's preventing Suricata from passing traffic.

FWIW, we've also relied on that brief window while Suricata is initializing to bail on attempted reconfigs. :)

-

@bmeeks said in 7.0.2_3 update "broke" IPS (netamap) on LAN interface (?):

Please let me know if this helps. It is likely a tweak I need to make as a new default in the package if it works.

Hello Bill, thanks for the tweak.

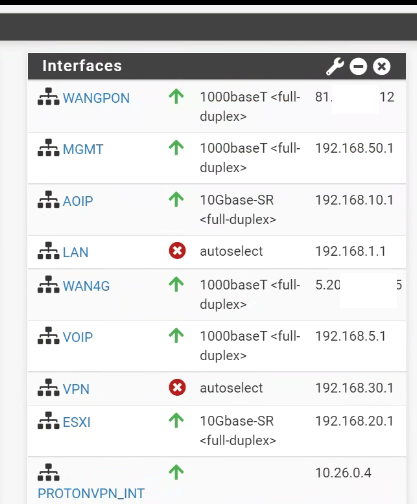

I'm in a bit of a snake-biting-its-own-tail situation right now, as I'm not on site.

I have a mgmt. interface to pfSense (w/o Suricata), but there is no internet there. And on the LAN, if I reinstall Suricata, I guess the interface will go down again when Suricata starts, because keep config was set.

So it will take me a while to set up the mgmt. network over the backup 4G internet so that I can reach pfSense on an interface where Suricata is not configured, and be sure I can still get in even if Suricata takes the LAN down.

I'm definitely going to try the tweak one of the following nights (production environment), because I need Suricata as there are 250 endpoints behind it.

I'll be back with more news soon, in the meantime thanks for your quick reply and help.

-

@cyberconsultants said in 7.0.2_3 update "broke" IPS (netamap) on LAN interface (?):

you may just need to increase netmap thread buffer sizes. this can be done via System / Advanced / System Tunables.

Hi,

Thanks, but this was an already set-up, working Suricata installation, with tuning applied, as it should be.

I've been pushing IPS/IDS stuff for a couple of years now.

-

@DaddyGo said in 7.0.2_3 update "broke" IPS (netamap) on LAN interface (?):

I'll be back with more news soon, in the meantime thanks for your quick reply and help.

I understand. Just post back here once you're able to try the additional setting tweak. The combination of setting the `livedev-use-for-tracking` to `false` AND leaving the default `midstream-policy` to `ignore` seems to have done the trick for the OPNsense folks.

-

@bmeeks said in 7.0.2_3 update "broke" IPS (netamap) on LAN interface (?):

Just post back here once you're able to try the additional setting tweak.

Hello Bill,

Unfortunately, it didn't work for me.

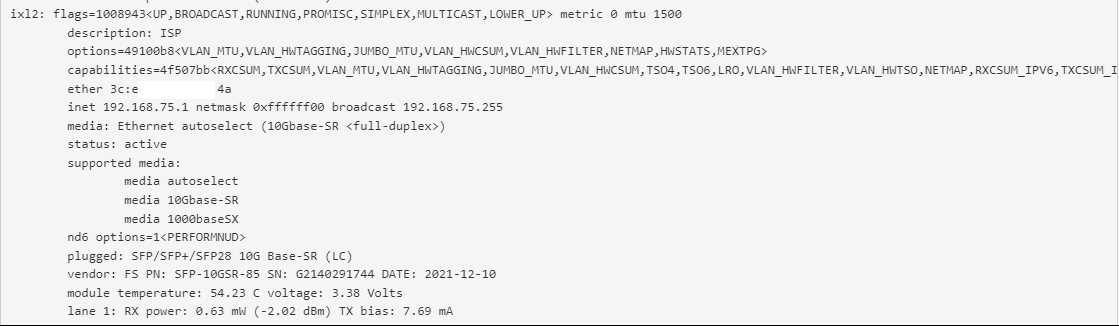

After further investigation, what I can say for sure is that this (the 7.0.2_3 update) is not a problem on systems with Intel X700 series (ixl) NICs... The test speaks for itself; here is a series of pictures:

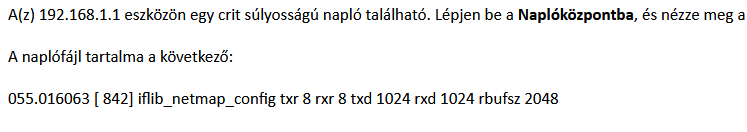

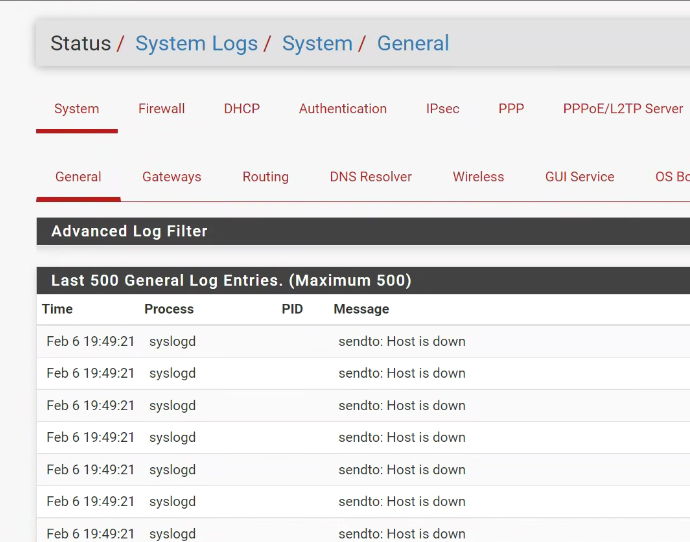

These error logs arrived immediately by email, dozens of them...

pfs / Intel 710 with 7.0.2_3:

-

@DaddyGo:

So, to be sure I am understanding correctly: systems with Intel X700 NICs work fine. So, what NIC type is in the failing systems?

Or did I misunderstand your post?

-

@bmeeks said in 7.0.2_3 update "broke" IPS (netamap) on LAN interface (?):

Or did I misunderstand your post?

You did not misunderstand. We have several systems where we use Suricata in IPS mode.

Out of curiosity, I ran the update (7.0.2_3) on a system with an Intel XL-710 BM1 controller, i.e. one using the ixl driver.

https://www.supermicro.com/manuals/other/AOC-STG-i4S.pdf

No problem here (Supermicro server), so it could be driver dependent...(?)

The problematic system uses Intel X520, or rather two of them, original Cisco N2XX-AIPCI01.

https://www.cisco.com/c/dam/en/us/products/collateral/interfaces-modules/ucs-virtual-interface-card-1225/ethernet-x520-server-adapter.pdf

UCS Server Firmware: huu-4.1(2m). This server firmware includes the slightly Cisco-tailored NIC driver; I once compared it with the original Intel one and there is no significant difference...

-

@DaddyGo:

Yes, very much sounds like a driver issue. Also be aware that a given "generic" driver in FreeBSD such as `ix` or `igb` will usually work with several slightly different firmware versions/revisions of that NIC family. But therein lies a possibility for a particular firmware version from that family to produce issues (bugs).

-

@bmeeks said in 7.0.2_3 update "broke" IPS (netamap) on LAN interface (?):

Yes, very much sounds like a driver issue.

Today I was reading a lot of old netmap-related FreeBSD forum threads, and there the X520 drivers were described as quite stable from a netmap point of view, unlike the XL710.

Now the situation seems to have reversed. :-)

This installation had a harder start at the beginning...

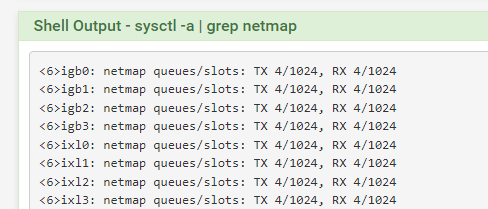

https://forum.netgate.com/topic/184932/starting-suricata-failing-netmap-on-oversized-hw-multiple-processors-cores-ramI am trying to further refine the netmap settings, this is what I use:

https://github.com/luigirizzo/netmap/issues/783this is what I am trying to achieve

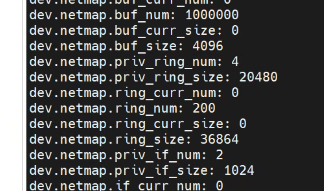

dev.netmap.buf_num=1000000

dev.netmap.ring_num=1024

dev.netmap.buf_size=2048 and 4096but I can't get the "default" dev.netmap.ring_num=200 value in sys tunables to change,...

sysctl OIDs, but stays at 200, after the tunnig, - maybe I should put in Loader Tunables too? (loader.conf.local)can't find info on this... exactly where it should be located

although Steve has written in the past that it is difficult to get information about this, especially for kernel components

https://forum.netgate.com/topic/69184/advanced-system-tunables-vs-boot-loader-conf-local-and-boot-loader-conf/4so it's in the sys tunables with 1024, but stays at 200...
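
A quick way to see which tunables actually took effect is to compare the values you asked for with what `sysctl dev.netmap` prints. Below is a small Python sketch of that comparison. The helper names `parse_sysctl` and `mismatches` are made up, and the sample text fed to it is hand-written to mirror the situation described above (ring_num stuck at 200, the other two assumed to be applied); it is not captured output.

```python
def parse_sysctl(text: str) -> dict:
    """Parse 'name: value' or 'name=value' lines as printed by sysctl."""
    values = {}
    for line in text.splitlines():
        for sep in (": ", "="):
            if sep in line:
                name, value = line.split(sep, 1)
                values[name.strip()] = value.strip()
                break
    return values

def mismatches(wanted: dict, live: dict) -> dict:
    """Map each OID whose live value differs from the wanted one to (wanted, live)."""
    return {k: (v, live.get(k)) for k, v in wanted.items() if live.get(k) != v}

wanted = {
    "dev.netmap.buf_num": "1000000",
    "dev.netmap.ring_num": "1024",
    "dev.netmap.buf_size": "4096",
}
# Hand-written sample input, not real output from the affected box.
live = parse_sysctl(
    "dev.netmap.buf_num: 1000000\n"
    "dev.netmap.ring_num: 200\n"
    "dev.netmap.buf_size: 4096\n"
)
print(mismatches(wanted, live))  # {'dev.netmap.ring_num': ('1024', '200')}
```

On the firewall itself you would paste in, or pipe in, the real output of `sysctl dev.netmap` instead of the sample string.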

-

@DaddyGo said in 7.0.2_3 update "broke" IPS (netamap) on LAN interface (?):

but I can't get the "default" dev.netmap.ring_num=200 value in sys tunables to change,...

sysctl OIDs, but stays at 200, after the tuning,

I'm not an expert in NIC drivers, but I do know that in some cases the driver itself can refuse to accept certain larger values for some parameters. There is an open Suricata Redmine Issue right now for the DPDK interface (used only on Linux) that is experiencing a problem setting a parameter to the value required. It won't go high enough because the NIC driver itself refuses to use the larger values.

-

@bmeeks said in 7.0.2_3 update "broke" IPS (netamap) on LAN interface (?):

It won't go high enough because the NIC driver itself refuses to use the larger values.

Okay, I'll play with this a bit more. I saw somewhere that under CentOS this NIC goes up to 1024, as this is the upper limit:

    [NETMAP_RING_POOL] = {
        .name = "%s_ring",
        .objminsize = sizeof(struct netmap_ring),
        .objmaxsize = 32*PAGE_SIZE,
        .nummin = 2,
        .nummax = 1024,
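
Read as a range, the `nummin` / `nummax` pair in the excerpt above means a requested ring count is only acceptable between 2 and 1024 inclusive. Here is a tiny Python sketch of that check. The `PoolLimits` class is hypothetical, and whether netmap rejects or clamps an out-of-range request is not shown in the excerpt, so the sketch only answers "in range or not".

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class PoolLimits:
    nummin: int
    nummax: int

    def accepts(self, requested: int) -> bool:
        """True if the requested object count lies inside [nummin, nummax]."""
        return self.nummin <= requested <= self.nummax

# Limits taken from the NETMAP_RING_POOL excerpt quoted above.
RING_POOL = PoolLimits(nummin=2, nummax=1024)
print(RING_POOL.accepts(1024), RING_POOL.accepts(2048))  # True False
```

By this reading, 1024 is right at the edge but still inside the allowed range, so the limit on its own would not explain a value that stays at 200.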