pfsense VM disk becomes full - please help identify the culprit?

-

Hello,

I have pfsense CE 2.7.2-RELEASE in a proxmox VM. It generally works well compared to the bare-metal install I had before. The only difference is that on bare metal I configured root-on-zfs for pfsense, but in this virtualised environment I use UFS for boot, because proxmox uses zfs and a zvol for the VM.

The problem I am facing is that over a couple of weeks, the pfsense disk fills up until services like dns stop because no more writing is possible. I can check with df and it shows 102% disk usage. When I check with du looking for the cause, it shows only 13G used. Rebooting the proxmox machine resolves the issue temporarily.

I have tried everything but am unable to identify what is consuming the space.

Can anyone help me please?

After a reboot, temporarily resolving the issue, this is what I have. Note that the df result is different, but the du result is unchanged:

    [2.7.2-RELEASE][root@pfSense.spacelab]/root: df -h /
    Filesystem                    Size    Used   Avail Capacity  Mounted on
    /dev/ufsid/659ec5d8d33f9720   120G     13G     97G    12%    /

    [2.7.2-RELEASE][root@pfSense.spacelab]/root: du -h -d 1 /
    4.0K    /.snap
    101M    /boot
    4.5K    /dev
     16M    /rescue
    4.0K    /proc
     48M    /root
     10G    /var
    4.0K    /media
     12K    /conf.default
    4.0K    /mnt
    1.6M    /tmp
    4.6M    /sbin
     17M    /lib
    4.0K    /net
     15M    /cf
    164K    /libexec
    8.0M    /etc
    1.4M    /bin
    2.0G    /usr
     20K    /home
    1.1M    /tftpboot
     13G    /

    [2.7.2-RELEASE][root@pfSense.spacelab]/root: mount
    /dev/ufsid/659ec5d8d33f9720 on / (ufs, local, noatime, soft-updates, journaled soft-updates)
    devfs on /dev (devfs)
    /dev/vtbd0p1 on /boot/efi (msdosfs, local)
    tmpfs on /var/run (tmpfs, local)

    root@pvepbs:~# cat /etc/pve/qemu-server/100.conf
    balloon: 0
    bios: ovmf
    boot: order=ide2;virtio0
    cores: 6
    cpu: host,flags=+aes
    efidisk0: local-zfs:vm-100-disk-0,efitype=4m,pre-enrolled-keys=1,size=1M
    hostpci0: 0000:01:00,pcie=1
    hostpci1: 0000:03:00,pcie=1
    ide2: none,media=cdrom
    machine: q35
    memory: 16384
    meta: creation-qemu=8.1.2,ctime=1704716297
    name: pfsense
    numa: 0
    onboot: 1
    ostype: other
    scsihw: virtio-scsi-single
    serial0: socket
    smbios1: uuid=dd5d2b62-e3a4-4da9-b796-e8c97bfb8358
    sockets: 1
    vga: qxl
    virtio0: local-zfs:vm-100-disk-1,discard=on,iothread=1,size=128G
    vmgenid: babe5976-8e38-42cb-9d4b-4f14f6e2cdfa

gstat:

    dT: 1.010s  w: 1.000s
     L(q)  ops/s    r/s   kBps   ms/r    w/s   kBps   ms/w   %busy Name
        0      6      0      0    0.0      6   1023    0.4    0.1| vtbd0
        0      0      0      0    0.0      0      0    0.0    0.0| vtbd0p1
        0      5      0      0    0.0      5   1023    0.3    0.1| vtbd0p2
        0      0      0      0    0.0      0      0    0.0    0.0| vtbd0p3
        0      5      0      0    0.0      5   1023    0.3    0.1| ufsid/659ec5d8d33f9720
        0      0      0      0    0.0      0      0    0.0    0.0| label/swap0
        0      0      0      0    0.0      0      0    0.0    0.0| cd0

systat -vmstat:

2 users Load 0.88 0.66 0.50 Feb 10 12:56:58 Mem usage: 17%Phy 2%Kmem VN PAGER SWAP PAGER Mem: REAL VIRTUAL in out in out Tot Share Tot Share Free count 28 Act 1414M 106M 521G 151M 13076M pages 3 28 All 1423M 114M 521G 241M ioflt Interrupts Proc: 186 cow 32032 total r p d s w Csw Trp Sys Int Sof Flt 958 zfod atkbd0 1 1 235 73K 1K 15K 30K 12K 1K ozfod 270 cpu0:timer %ozfod 320 cpu1:timer 3.0%Sys 2.5%Intr 1.4%User 0.0%Nice 93.1%Idle daefr 362 cpu2:timer | | | | | | | | | | | 280 prcfr 283 cpu3:timer =++ 541 totfr 279 cpu4:timer 192 dtbuf react 280 cpu5:timer Namei Name-cache Dir-cache 348426 maxvn pdwak 6559 igc0:rxq0 Calls hits % hits % 68769 numvn 319 pdpgs 91 igc0:rxq1 6778 6778 100 55062 frevn intrn 7885 igc0:rxq2 1197M wire 118 igc0:rxq3 Disks vtbd0 cd0 pass0 771M act igc0:aq 29 KB/t 89.07 0.00 0.00 767M inact 83 igc1:rxq0 tps 3 0 0 0 laund 8450 igc1:rxq1 MB/s 0.24 0.00 0.00 13G free 118 igc1:rxq2 %busy 0 0 0 730M buf 6924 igc1:rxq3 igc1:aq 34 virtio_pci 3 virtio_pci 7 ahci0 39

-

@sloopbun Did you try an fsck? https://docs.netgate.com/pfsense/en/latest/troubleshooting/filesystem-check.html

-

@SteveITS said in pfsense VM disk becomes full - please help identify the culprit?:

@sloopbun Did you try an fsck? https://docs.netgate.com/pfsense/en/latest/troubleshooting/filesystem-check.html

Thanks for the suggestion. I had not done so. I just did the reboot with disk check from the gui.

Since I had posted, my disk usage had gone from 13G to 19G.

After the reboot with disk check, it is back to the 13G. Will see how it increases in the next day.

-

Disk space in the host server isn't overcommitted, is it? For instance, in VMware one can assign 2TB to each of 2 VMs residing on a 3TB host disk and eventually someone will lose out.

Just spitballin'.

-

@provels

Since doing the reboot with filesystem check, my disk usage has jumped from 13G to 21G.

    [2.7.2-RELEASE][root@pfSense.spacelab]/root: df -hi
    Filesystem                    Size    Used   Avail Capacity iused ifree %iused  Mounted on
    /dev/ufsid/659ec5d8d33f9720   120G     22G     89G    20%     64k   16M    0%   /
    devfs                         1.0K      0B    1.0K     0%       0     0     -   /dev
    /dev/vtbd0p1                  260M    1.3M    259M     1%       2   510    0%   /boot/efi
    tmpfs                         4.0M    192K    3.8M     5%      56   14k    0%   /var/run

    [2.7.2-RELEASE][root@pfSense.spacelab]/root: du -h -d 1 /
    4.0K    /.snap
    101M    /boot
    4.5K    /dev
     16M    /rescue
    4.0K    /proc
     96M    /root
     10G    /var
    4.0K    /media
     12K    /conf.default
    4.0K    /mnt
    1.1M    /tmp
    4.6M    /sbin
     17M    /lib
    4.0K    /net
     15M    /cf
    164K    /libexec
    8.0M    /etc
    1.4M    /bin
    1.8G    /usr
     20K    /home
    1.1M    /tftpboot
     13G    /

Host storage looks normal to me:

    root@pvepbs:~# zfs list
    NAME                       USED  AVAIL  REFER  MOUNTPOINT
    rpool                     41.2G   680G   104K  /rpool
    rpool/ROOT                2.46G   680G    96K  /rpool/ROOT
    rpool/ROOT/pve-1          2.46G   680G  2.46G  /
    rpool/data                33.5G   680G    96K  /rpool/data
    rpool/data/vm-100-disk-0    80K   680G    80K  -
    rpool/data/vm-100-disk-1  33.5G   680G  33.5G  -
    rpool/pbs_datastore         96K   600G    96K  /rpool/pbs_datastore
    rpool/var-lib-vz          5.06G   680G  5.06G  /var/lib/vz

-

What pfSense packages are you using ?

Have a look, at the /var/log/ folder, and all the /var/log/ sub folders.
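A minimal sketch of that check: list the largest directories directly under a path, biggest first. The helper name `largest_subdirs` is made up for illustration, and it assumes a `du` that accepts `-d` (the FreeBSD and GNU versions both do).

```shell
#!/bin/sh
# Hypothetical helper: print the N largest directories (sizes in KiB) at
# depth 1 under the given path. The first row is the total for the path itself.
# Note: without -a, du lists directories only, not individual files.
largest_subdirs() {
    du -k -d 1 "$1" 2>/dev/null | sort -rn | head -n "${2:-10}"
}

# Example, to be run on the firewall itself:
#   largest_subdirs /var/log 10
```

If nothing under /var/log stands out, the same call on /var or / narrows down where the visible files live.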

There are packages that log 'network' activity. If you have a lot of network activity, log files can grow very fast, to several Gbytes within a day. The solution would be: stop using the tools that log a lot, or don't have them log anymore.

Your /var/ :

    10G /var

I bet these 10G are mostly in /var/log/

    [23.09.1-RELEASE][root@pfSense.bhf.tld]/root: df -hi
    Filesystem         Size    Used   Avail Capacity iused ifree %iused  Mounted on
    ......
    pfSense/var/log     94G     86M     94G     0%     116  197M    0%   /var/log
    ......

-

Hello!

I have seen something similar (?) when running a sparse guest fs on top of a thin-provisioned host fs.

I don't run zfs/zvol on proxmox, so I don't know if this applies.

https://forum.netgate.com/topic/166274/pfsense-hyper-v-vhdx-growing-like-crazy

John

-

@Gertjan said in pfsense VM disk becomes full - please help identify the culprit?:

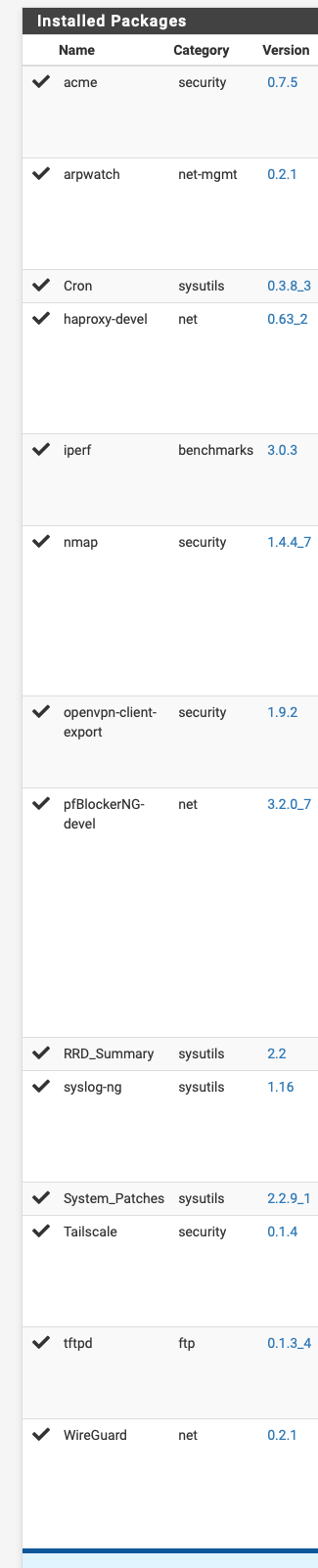

What pfSense packages are you using ?

Have a look, at the /var/log/ folder, and all the /var/log/ sub folders.

There are packages that log 'network' activity. If you have a lot of network activity, log files can grow very fast, to several Gbytes within a day. The solution would be: stop using the tools that log a lot, or don't have them log anymore.

Your /var/ :

    10G /var

I bet these 10G are mostly in /var/log/

    [23.09.1-RELEASE][root@pfSense.bhf.tld]/root: df -hi
    Filesystem         Size    Used   Avail Capacity iused ifree %iused  Mounted on
    ......
    pfSense/var/log     94G     86M     94G     0%     116  197M    0%   /var/log
    ......

Here are my packages. There is some logging, and I have deleted a bunch just to free up space, but the reported size of the /var/ directory does not balloon; only the available space reported by df shrinks.

@serbus said in pfsense VM disk becomes full - please help identify the culprit?:

Hello!

I have seen something similar (?) when running a sparse guest fs on top of a thin-provisioned host fs.

I don't run zfs/zvol on proxmox, so I don't know if this applies.

https://forum.netgate.com/topic/166274/pfsense-hyper-v-vhdx-growing-like-crazy

John

This is much more along the lines of what I have in mind. In proxmox, with zvol, there is a setting for "discard" which I have enabled.

I note that the last reply on that thread was:

@LesserBloops said in PfSense Hyper-V .vhdx growing like crazy:

I hit this when I tried using ZFS as the file system with expanding disks. It went runaway and eventually caused an outage.

I've been meaning to go back and try again with a fixed size disk instead of an expanding one, but haven't got around to it yet, and EXT seems to be fine...

There are some parameters whose effects I am not confident about, such as volmode and reservation, which I am hesitant to mess with without a recommendation to do so. I note that some parameters like logicalused and volsize represent the size of the disk that pfsense sees.

I don't think this would explain why the used space shown by df / progressively grows over time, without any file usage, but I would be happy to take advice on resolving this.

The odd thing to me is that I thought this was a fairly standard practice, as explained in the docs here: https://docs.netgate.com/pfsense/en/latest/recipes/virtualize-proxmox-ve.html

The fact that this is not a common issue points to it being a configuration issue on my behalf, right?

    root@pvepbs:~# zfs get all rpool/data/vm-100-disk-1
    NAME                      PROPERTY              VALUE                    SOURCE
    rpool/data/vm-100-disk-1  type                  volume                   -
    rpool/data/vm-100-disk-1  creation              Mon Jan 8 13:18 2024     -
    rpool/data/vm-100-disk-1  used                  33.5G                    -
    rpool/data/vm-100-disk-1  available             684G                     -
    rpool/data/vm-100-disk-1  referenced            33.5G                    -
    rpool/data/vm-100-disk-1  compressratio         3.58x                    -
    rpool/data/vm-100-disk-1  reservation           none                     default
    rpool/data/vm-100-disk-1  volsize               128G                     local
    rpool/data/vm-100-disk-1  volblocksize          16K                      default
    rpool/data/vm-100-disk-1  checksum              on                       default
    rpool/data/vm-100-disk-1  compression           on                       inherited from rpool
    rpool/data/vm-100-disk-1  readonly              off                      default
    rpool/data/vm-100-disk-1  createtxg             2282                     -
    rpool/data/vm-100-disk-1  copies                1                        default
    rpool/data/vm-100-disk-1  refreservation        none                     default
    rpool/data/vm-100-disk-1  guid                  7625546012721003177      -
    rpool/data/vm-100-disk-1  primarycache          all                      default
    rpool/data/vm-100-disk-1  secondarycache        all                      default
    rpool/data/vm-100-disk-1  usedbysnapshots       0B                       -
    rpool/data/vm-100-disk-1  usedbydataset         33.5G                    -
    rpool/data/vm-100-disk-1  usedbychildren        0B                       -
    rpool/data/vm-100-disk-1  usedbyrefreservation  0B                       -
    rpool/data/vm-100-disk-1  logbias               latency                  default
    rpool/data/vm-100-disk-1  objsetid              2013                     -
    rpool/data/vm-100-disk-1  dedup                 off                      default
    rpool/data/vm-100-disk-1  mlslabel              none                     default
    rpool/data/vm-100-disk-1  sync                  standard                 inherited from rpool
    rpool/data/vm-100-disk-1  refcompressratio      3.58x                    -
    rpool/data/vm-100-disk-1  written               33.5G                    -
    rpool/data/vm-100-disk-1  logicalused           119G                     -
    rpool/data/vm-100-disk-1  logicalreferenced     119G                     -
    rpool/data/vm-100-disk-1  volmode               default                  default
    rpool/data/vm-100-disk-1  snapshot_limit        none                     default
    rpool/data/vm-100-disk-1  snapshot_count        none                     default
    rpool/data/vm-100-disk-1  snapdev               hidden                   default
    rpool/data/vm-100-disk-1  context               none                     default
    rpool/data/vm-100-disk-1  fscontext             none                     default
    rpool/data/vm-100-disk-1  defcontext            none                     default
    rpool/data/vm-100-disk-1  rootcontext           none                     default
    rpool/data/vm-100-disk-1  redundant_metadata    all                      default
    rpool/data/vm-100-disk-1  encryption            off                      default
    rpool/data/vm-100-disk-1  keylocation           none                     default
    rpool/data/vm-100-disk-1  keyformat             none                     default
    rpool/data/vm-100-disk-1  pbkdf2iters           0                        default
    rpool/data/vm-100-disk-1  snapshots_changed     Mon Feb 12 9:32:58 2024  -

-

@sloopbun I remembered this thread https://forum.netgate.com/topic/183318/free-up-space-disk-storage-80/ with a similar report I think but it doesn't look like there was a resolution.

-

@SteveITS said in pfsense VM disk becomes full - please help identify the culprit?:

@sloopbun I remembered this thread https://forum.netgate.com/topic/183318/free-up-space-disk-storage-80/ with a similar report I think but it doesn't look like there was a resolution.

In that thread, it is suggested that the command geom part list might reveal something. It looks normal to me, but maybe someone else can spot something?

    [2.7.2-RELEASE][root@pfSense.spacelab]/root: geom part list
    Geom name: vtbd0
    modified: false
    state: OK
    fwheads: 16
    fwsectors: 63
    last: 268435415
    first: 40
    entries: 128
    scheme: GPT
    Providers:
    1. Name: vtbd0p1
       Mediasize: 272629760 (260M)
       Sectorsize: 512
       Stripesize: 0
       Stripeoffset: 20480
       Mode: r1w1e1
       efimedia: HD(1,GPT,616156c8-afd5-11ee-9ccd-e9881622351f,0x28,0x82000)
       rawuuid: 616156c8-afd5-11ee-9ccd-e9881622351f
       rawtype: c12a7328-f81f-11d2-ba4b-00a0c93ec93b
       label: (null)
       length: 272629760
       offset: 20480
       type: efi
       index: 1
       end: 532519
       start: 40
    2. Name: vtbd0p2
       Mediasize: 132871356416 (124G)
       Sectorsize: 512
       Stripesize: 0
       Stripeoffset: 272650240
       Mode: r1w1e2
       efimedia: HD(2,GPT,6161bcfd-afd5-11ee-9ccd-e9881622351f,0x82028,0xf77e000)
       rawuuid: 6161bcfd-afd5-11ee-9ccd-e9881622351f
       rawtype: 516e7cb6-6ecf-11d6-8ff8-00022d09712b
       label: (null)
       length: 132871356416
       offset: 272650240
       type: freebsd-ufs
       index: 2
       end: 260046887
       start: 532520
    3. Name: vtbd0p3
       Mediasize: 4294926336 (4.0G)
       Sectorsize: 512
       Stripesize: 0
       Stripeoffset: 133144006656
       Mode: r1w1e1
       efimedia: HD(3,GPT,616220f2-afd5-11ee-9ccd-e9881622351f,0xf800028,0x7fffb0)
       rawuuid: 616220f2-afd5-11ee-9ccd-e9881622351f
       rawtype: 516e7cb5-6ecf-11d6-8ff8-00022d09712b
       label: (null)
       length: 4294926336
       offset: 133144006656
       type: freebsd-swap
       index: 3
       end: 268435415
       start: 260046888
    Consumers:
    1. Name: vtbd0
       Mediasize: 137438953472 (128G)
       Sectorsize: 512
       Mode: r3w3e7

    [2.7.2-RELEASE][root@pfSense.spacelab]/root: gpart show -p
    =>       40  268435376    vtbd0  GPT  (128G)
             40     532480  vtbd0p1  efi  (260M)
         532520  259514368  vtbd0p2  freebsd-ufs  (124G)
      260046888    8388528  vtbd0p3  freebsd-swap  (4.0G)

After deleting some old logs and such, du shows just 4.8G of disk usage but df shows 26G used...

    [2.7.2-RELEASE][root@pfSense.spacelab]/root: du -h -d1 /
    4.0K    /.snap
    101M    /boot
    4.5K    /dev
     16M    /rescue
    4.0K    /proc
     96M    /root
    2.1G    /var
    4.0K    /media
     12K    /conf.default
    4.0K    /mnt
     13M    /tmp
    4.6M    /sbin
     17M    /lib
    4.0K    /net
     15M    /cf
    164K    /libexec
    8.0M    /etc
    1.4M    /bin
    1.8G    /usr
     20K    /home
    1.1M    /tftpboot
    4.8G    /

    [2.7.2-RELEASE][root@pfSense.spacelab]/root: df -hi
    Filesystem                    Size    Used   Avail Capacity iused ifree %iused  Mounted on
    /dev/ufsid/659ec5d8d33f9720   120G     26G     85G    23%     55k   16M    0%   /
    devfs                         1.0K      0B    1.0K     0%       0     0     -   /dev
    /dev/vtbd0p1                  260M    1.3M    259M     1%       2   510    0%   /boot/efi
    tmpfs                         4.0M    192K    3.8M     5%      56   14k    0%   /var/run

-

I am not 100% sure, but I don't think I had this problem when I had pfsense on zfs, on top of the zvol in proxmox. I believe this kind of zfs on zfs is not recommended because it leads to write amplification, but it would be an improvement for me if another solution cannot be found.

I could go back to that. Or I could go back to bare-metal - but I am using this proxmox machine for backing up my other proxmox machine, so I would prefer not to at this stage. There is of course the other downside of losing proxmox managed backups and snapshots, but that is not a deal breaker.

-

@sloopbun said in pfsense VM disk becomes full - please help identify the culprit?:

Since I had posted, my disk usage had gone from 13G to 19G.

Where are you checking this from, within pfsense or via the hypervisor/proxmox?

-

@Popolou said in pfsense VM disk becomes full - please help identify the culprit?:

@sloopbun said in pfsense VM disk becomes full - please help identify the culprit?:

Since I had posted, my disk usage had gone from 13G to 19G.

Where are you checking this from, within pfsense or via the hypervisor/proxmox?

From within pfsense. For example, below is the progression over a few hours today, but I do not see any change in the zvol reported on the host, proxmox.

    root@pvepbs:~# date
    Tue Feb 13 08:45:13 AM CET 2024
    root@pvepbs:~# zfs list rpool/data/vm-100-disk-1
    NAME                       USED  AVAIL  REFER  MOUNTPOINT
    rpool/data/vm-100-disk-1  33.4G   684G  33.4G  -

    root@pvepbs:~# date
    Tue Feb 13 03:14:46 PM CET 2024
    root@pvepbs:~# zfs list rpool/data/vm-100-disk-1
    NAME                       USED  AVAIL  REFER  MOUNTPOINT
    rpool/data/vm-100-disk-1  33.2G   684G  33.2G  -

    [2.7.2-RELEASE][root@pfSense.spacelab]/root: date
    Tue Feb 13 08:45:13 CET 2024
    [2.7.2-RELEASE][root@pfSense.spacelab]/root: df -hi /
    Filesystem                    Size    Used   Avail Capacity iused ifree %iused  Mounted on
    /dev/ufsid/659ec5d8d33f9720   120G     26G     85G    23%     55k   16M    0%   /

    [2.7.2-RELEASE][root@pfSense.spacelab]/root: date
    Tue Feb 13 15:07:25 CET 2024
    [2.7.2-RELEASE][root@pfSense.spacelab]/root: df -hi /
    Filesystem                    Size    Used   Avail Capacity iused ifree %iused  Mounted on
    /dev/ufsid/659ec5d8d33f9720   120G     32G     79G    29%     55k   16M    0%   /

    [2.7.2-RELEASE][root@pfSense.spacelab]/root: du -h -d 1 /
    4.0K    /.snap
    101M    /boot
    4.5K    /dev
     16M    /rescue
    4.0K    /proc
     96M    /root
    2.2G    /var
    4.0K    /media
     12K    /conf.default
    4.0K    /mnt
    9.8M    /tmp
    4.6M    /sbin
     17M    /lib
    4.0K    /net
     15M    /cf
    164K    /libexec
    8.0M    /etc
    1.4M    /bin
    1.8G    /usr
     20K    /home
    1.1M    /tftpboot
    4.8G    /

-

@sloopbun said in pfsense VM disk becomes full - please help identify the culprit?:

I believe this kind of zfs on zfs is not recommended

Hello!

It is not recommended.

Comparing the output from df and du can be problematic, such as when you mount over the top of a folder that already has files in it, or a file has been deleted but is still open.
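The deleted-but-still-open case can be reproduced in a scratch directory. The following is only an illustrative sketch (the function name `demo_open_unlinked` is made up): it writes a 2 MiB file, keeps it open on a file descriptor, removes it, and prints what du reports before and after. du stops counting the file as soon as it is unlinked, while the filesystem keeps the blocks allocated until the descriptor is closed, which is the part df would still count.

```shell
#!/bin/sh
# Sketch: a file that is deleted while still open keeps its blocks allocated.
demo_open_unlinked() {
    dir=$(mktemp -d) || return 1
    # 2 MiB of random data (random so filesystem compression cannot shrink it)
    dd if=/dev/urandom of="$dir/big" bs=1024 count=2048 2>/dev/null
    sync
    exec 3<"$dir/big"                            # keep the file open on fd 3
    before=$(du -sk "$dir" | awk '{print $1}')
    rm "$dir/big"                                # unlink; fd 3 still pins the blocks
    after=$(du -sk "$dir" | awk '{print $1}')
    exec 3<&-                                    # closing the fd releases the space
    rmdir "$dir"
    echo "$before $after"
}

demo_open_unlinked   # prints du's KiB count before and after the rm
```

On a real system the process holding the descriptor would be a long-running daemon rather than the shell, so the space only comes back when that process exits or is restarted.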

You may need to try something like lsof or fstat to dig deeper.

John

-

@serbus Thanks for the suggestion. Any recommended incantations of fstat and lsof? I thought I might find unlinked files with lsof, but lsof +L1 / did not show any 0 in the NLINK column.

Similarly, I am not sure what I am looking for with fstat...
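One possible use of fstat, sketched under assumptions: if inode numbers of interest are already known (for example from an fsck report), the fstat listing can be filtered down to the processes holding those inodes open. The helper name `fstat_by_inode` is hypothetical, and it assumes the default FreeBSD fstat column layout in which MOUNT is column 5 and INUM is column 6.

```shell
#!/bin/sh
# Hypothetical helper: read fstat output on stdin and keep the header plus any
# row whose INUM column (assumed to be field 6) matches one of the arguments.
fstat_by_inode() {
    awk -v want="$*" '
        BEGIN { n = split(want, a, " "); for (i = 1; i <= n; i++) keep[a[i]] = 1 }
        NR == 1 { print; next }      # pass the header line through
        ($6 in keep) { print }
    '
}

# Example, to be run on the firewall itself (inode numbers are placeholders):
#   fstat -f / | fstat_by_inode 12345 67890
```

The CMD and PID columns of any matching row identify the process to inspect or restart.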

    [2.7.2-RELEASE][root@pfSense.spacelab]/root: fstat | awk '{print $5}' | sort | uniq -c | sort -nr
     466 /
     276 /dev
     155 local
      94 internet
      83 pipe
      44
      39 /var/run
      35 internet6
      14 -
      12 netgraph
       3 route
       3 pseudo-terminal
       1 MOUNT

-

If I run fsck five times from single-user mode, as recommended in the docs, it shows no errors, even on the first run.

But when I run fsck from the regular pfsense terminal, I have a lot of unreferenced files, though the free space is reported correctly because this is just after a fresh reboot.

That is weird, right?
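As a sanity check on the second run below, its summary line can be converted into sizes and compared with df. This sketch assumes the counts are in fragments of 4 KiB, which is the FreeBSD newfs default; the summary is at least consistent with 8 fragments per block (3766575 × 8 + 4026 = 30136626), but the actual fragment size should be confirmed, for example with dumpfs.

```shell
#!/bin/sh
# Numbers copied from the fsck summary line of the second run.
frag_kib=4            # ASSUMPTION: 4 KiB fragments (newfs default), not confirmed
used_frags=1280056
free_frags=30136626

# Convert KiB to GiB with two decimals.
to_gib() { awk -v k="$1" 'BEGIN { printf "%.2f", k / 1048576 }'; }

used_gib=$(to_gib $(( used_frags * frag_kib )))
total_gib=$(to_gib $(( (used_frags + free_frags) * frag_kib )))
echo "used=${used_gib} GiB total=${total_gib} GiB"
```

Under that assumption this gives about 4.88 GiB used out of 119.85 GiB, in line with the 4.9G and 120G that df reports right after the reboot.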

fsck:

    [2.7.2-RELEASE][root@pfSense.spacelab]/root: fsck -y /dev/ufsid/659ec5d8d33f9720
    ** /dev/ufsid/659ec5d8d33f9720 (NO WRITE)
    ** SU+J Recovering /dev/ufsid/659ec5d8d33f9720
    USE JOURNAL? no
    Skipping journal, falling through to full fsck
    ** Last Mounted on /
    ** Root file system
    ** Phase 1 - Check Blocks and Sizes
    INCORRECT BLOCK COUNT I=400686 (273856 should be 258944) CORRECT? no
    INCORRECT BLOCK COUNT I=641028 (8 should be 0) CORRECT? no
    INCORRECT BLOCK COUNT I=7371851 (383040 should be 382784) CORRECT? no
    INCORRECT BLOCK COUNT I=9615548 (896 should be 640) CORRECT? no
    INCORRECT BLOCK COUNT I=9615593 (144 should be 128) CORRECT? no
    ** Phase 2 - Check Pathnames
    ** Phase 3 - Check Connectivity
    ** Phase 4 - Check Reference Counts
    UNREF FILE I=400686 OWNER=root MODE=100600 SIZE=140112184 MTIME=Feb 13 19:53 2024 CLEAR? no
    UNREF FILE I=15225275 OWNER=root MODE=100666 SIZE=0 MTIME=Feb 13 19:44 2024 CLEAR? no
    ** Phase 5 - Check Cyl groups
    FREE BLK COUNT(S) WRONG IN SUPERBLK SALVAGE? no
    SUMMARY INFORMATION BAD SALVAGE? no
    BLK(S) MISSING IN BIT MAPS SALVAGE? no
    54742 files, 1317012 used, 30100372 free (4028 frags, 3762043 blocks, 0.0% fragmentation)

    [2.7.2-RELEASE][root@pfSense.spacelab]/root: fsck -y /
    ** /dev/ufsid/659ec5d8d33f9720 (NO WRITE)
    ** SU+J Recovering /dev/ufsid/659ec5d8d33f9720
    USE JOURNAL? no
    Skipping journal, falling through to full fsck
    ** Last Mounted on /
    ** Root file system
    ** Phase 1 - Check Blocks and Sizes
    INCORRECT BLOCK COUNT I=400686 (3136 should be 8) CORRECT? no
    INCORRECT BLOCK COUNT I=480777 (8 should be 0) CORRECT? no
    INCORRECT BLOCK COUNT I=480791 (8 should be 0) CORRECT? no
    INCORRECT BLOCK COUNT I=480799 (8 should be 0) CORRECT? no
    INCORRECT BLOCK COUNT I=480800 (8 should be 0) CORRECT? no
    INCORRECT BLOCK COUNT I=1041768 (8 should be 0) CORRECT? no
    INCORRECT BLOCK COUNT I=1041770 (2560 should be 768) CORRECT? no
    INCORRECT BLOCK COUNT I=1041771 (8 should be 0) CORRECT? no
    INCORRECT BLOCK COUNT I=2243678 (64 should be 8) CORRECT? no
    INCORRECT BLOCK COUNT I=3044945 (8 should be 0) CORRECT? no
    INCORRECT BLOCK COUNT I=3285252 (8 should be 0) CORRECT? no
    INCORRECT BLOCK COUNT I=3285261 (8 should be 0) CORRECT? no
    INCORRECT BLOCK COUNT I=3285294 (8 should be 0) CORRECT? no
    INCORRECT BLOCK COUNT I=3285301 (8 should be 0) CORRECT? no
    INCORRECT BLOCK COUNT I=3285324 (8 should be 0) CORRECT? no
    INCORRECT BLOCK COUNT I=6330120 (8 should be 0) CORRECT? no
    INCORRECT BLOCK COUNT I=6330142 (8 should be 0) CORRECT? no
    INCORRECT BLOCK COUNT I=7131638 (48 should be 0) CORRECT? no
    INCORRECT BLOCK COUNT I=7131644 (960 should be 768) CORRECT? no
    INCORRECT BLOCK COUNT I=7131645 (16 should be 0) CORRECT? no
    INCORRECT BLOCK COUNT I=7131718 (960 should be 768) CORRECT? no
    INCORRECT BLOCK COUNT I=7131722 (960 should be 768) CORRECT? no
    INCORRECT BLOCK COUNT I=7131734 (960 should be 0) CORRECT? no
    INCORRECT BLOCK COUNT I=7131742 (960 should be 768) CORRECT? no
    INCORRECT BLOCK COUNT I=7131751 (960 should be 768) CORRECT? no
    INCORRECT BLOCK COUNT I=7371851 (380224 should be 380096) CORRECT? no
    INCORRECT BLOCK COUNT I=9615548 (624 should be 544) CORRECT? no
    INCORRECT BLOCK COUNT I=9615562 (48 should be 24) CORRECT? no
    INCORRECT BLOCK COUNT I=9695856 (8 should be 0) CORRECT? no
    INCORRECT BLOCK COUNT I=9855876 (2304 should be 0) CORRECT? no
    INCORRECT BLOCK COUNT I=12100439 (8 should be 0) CORRECT? no
    INCORRECT BLOCK COUNT I=13542017 (8 should be 0) CORRECT? no
    INCORRECT BLOCK COUNT I=13542018 (8 should be 0) CORRECT? no
    INCORRECT BLOCK COUNT I=14102562 (8 should be 0) CORRECT? no
    INCORRECT BLOCK COUNT I=14102571 (48 should be 0) CORRECT? no
    INCORRECT BLOCK COUNT I=14102821 (8 should be 0) CORRECT? no
    INCORRECT BLOCK COUNT I=14102834 (8 should be 0) CORRECT? no
    INCORRECT BLOCK COUNT I=15225288 (8 should be 0) CORRECT? no
    INCORRECT BLOCK COUNT I=15225289 (8 should be 0) CORRECT? no
    INCORRECT BLOCK COUNT I=15225290 (48 should be 0) CORRECT? no
    INCORRECT BLOCK COUNT I=15225291 (8 should be 0) CORRECT? no
    ** Phase 2 - Check Pathnames
    SETTING DIRTY FLAG IN READ_ONLY MODE
    UNEXPECTED SOFT UPDATE INCONSISTENCY
    SETTING DIRTY FLAG IN READ_ONLY MODE
    UNEXPECTED SOFT UPDATE INCONSISTENCY
    SETTING DIRTY FLAG IN READ_ONLY MODE
    UNEXPECTED SOFT UPDATE INCONSISTENCY
    SETTING DIRTY FLAG IN READ_ONLY MODE
    UNEXPECTED SOFT UPDATE INCONSISTENCY
    ** Phase 3 - Check Connectivity
    UNREF DIR I=7291717 OWNER=root MODE=40755 SIZE=512 MTIME=Feb 13 19:44 2024 RECONNECT? no
    UNREF DIR I=7291716 OWNER=root MODE=40755 SIZE=512 MTIME=Feb 13 19:44 2024 RECONNECT? no
    UNREF DIR I=7051269 OWNER=root MODE=40755 SIZE=512 MTIME=Feb 13 19:44 2024 RECONNECT? no
    UNREF DIR I=480792 OWNER=root MODE=40755 SIZE=512 MTIME=Feb 13 19:45 2024 RECONNECT? no
    UNREF DIR I=480801 OWNER=root MODE=40755 SIZE=512 MTIME=Feb 13 19:45 2024 RECONNECT? no
    ** Phase 4 - Check Reference Counts
    UNREF FILE I=320567 OWNER=root MODE=100600 SIZE=0 MTIME=Feb 13 19:45 2024 RECONNECT? no CLEAR? no
    UNREF FILE I=320569 OWNER=root MODE=100600 SIZE=0 MTIME=Feb 13 19:45 2024 RECONNECT? no CLEAR? no
    UNREF FILE I=320570 OWNER=root MODE=100600 SIZE=0 MTIME=Feb 13 19:45 2024 RECONNECT? no CLEAR? no
    UNREF FILE I=320571 OWNER=root MODE=100600 SIZE=0 MTIME=Feb 13 19:45 2024 RECONNECT? no CLEAR? no
    UNREF FILE I=320572 OWNER=root MODE=100600 SIZE=888 MTIME=Feb 13 19:45 2024 RECONNECT? no CLEAR? no
    UNREF FILE I=320577 OWNER=root MODE=100600 SIZE=319 MTIME=Feb 13 19:45 2024 RECONNECT? no CLEAR? no
    UNREF FILE I=320579 OWNER=root MODE=100600 SIZE=503 MTIME=Feb 13 19:45 2024 RECONNECT? no CLEAR? no
    UNREF FILE I=320580 OWNER=root MODE=100600 SIZE=262 MTIME=Feb 13 19:45 2024 RECONNECT? no CLEAR? no
    ZERO LENGTH DIR I=480777 OWNER=root MODE=40755 SIZE=0 MTIME=Feb 13 19:45 2024 CLEAR? no
    ZERO LENGTH DIR I=480782 OWNER=root MODE=40755 SIZE=0 MTIME=Feb 13 19:45 2024 CLEAR? no
    ZERO LENGTH DIR I=480783 OWNER=root MODE=40755 SIZE=0 MTIME=Feb 13 19:45 2024 CLEAR? no
    ZERO LENGTH DIR I=480784 OWNER=root MODE=40755 SIZE=0 MTIME=Feb 13 19:45 2024 CLEAR? no
    UNREF FILE I=480785 OWNER=root MODE=100644 SIZE=0 MTIME=Feb 13 19:45 2024 RECONNECT? no CLEAR? no
    UNREF FILE I=480786 OWNER=root MODE=100644 SIZE=0 MTIME=Feb 13 19:45 2024 RECONNECT? no CLEAR? no
    UNREF FILE I=480787 OWNER=root MODE=100644 SIZE=0 MTIME=Feb 13 19:45 2024 RECONNECT? no CLEAR? no
    UNREF FILE I=480788 OWNER=root MODE=100644 SIZE=0 MTIME=Feb 13 19:45 2024 RECONNECT? no CLEAR? no
    UNREF FILE I=480789 OWNER=root MODE=100644 SIZE=0 MTIME=Feb 13 19:45 2024 RECONNECT? no CLEAR? no
    UNREF FILE I=480790 OWNER=root MODE=100644 SIZE=0 MTIME=Feb 13 19:45 2024 RECONNECT? no CLEAR? no
    ZERO LENGTH DIR I=480791 OWNER=root MODE=40755 SIZE=0 MTIME=Feb 13 19:45 2024 CLEAR? no
    UNREF DIR I=480792 OWNER=root MODE=40755 SIZE=512 MTIME=Feb 13 19:45 2024 CLEAR? no
    ZERO LENGTH DIR I=480799 OWNER=root MODE=40755 SIZE=0 MTIME=Feb 13 19:45 2024 CLEAR? no
    ZERO LENGTH DIR I=480800 OWNER=root MODE=40755 SIZE=0 MTIME=Feb 13 19:45 2024 CLEAR? no
    UNREF DIR I=480801 OWNER=root MODE=40755 SIZE=512 MTIME=Feb 13 19:45 2024 CLEAR? no
    UNREF FILE I=961546 OWNER=root MODE=140666 SIZE=0 MTIME=Feb 13 19:45 2024 RECONNECT? no CLEAR? no
    UNREF FILE I=1041770 OWNER=root MODE=100644 SIZE=1253376 MTIME=Feb 9 19:29 2024 RECONNECT? no CLEAR? no
    UNREF FILE I=1041777 OWNER=root MODE=100644 SIZE=212992 MTIME=Dec 6 22:23 2023 RECONNECT? no CLEAR? no
    UNREF FILE I=1041802 OWNER=root MODE=100644 SIZE=0 MTIME=Feb 13 19:45 2024 RECONNECT? no CLEAR? no
    UNREF FILE I=1041810 OWNER=root MODE=100644 SIZE=0 MTIME=Feb 13 19:45 2024 RECONNECT? no CLEAR? no
    UNREF FILE I=2564108 OWNER=root MODE=100644 SIZE=0 MTIME=Feb 13 19:45 2024 RECONNECT? no CLEAR? no
    UNREF FILE I=2564118 OWNER=root MODE=100644 SIZE=0 MTIME=Feb 13 19:45 2024 RECONNECT? no CLEAR? no
    UNREF FILE I=2564120 OWNER=root MODE=140755 SIZE=0 MTIME=Feb 13 19:40 2024 RECONNECT? no CLEAR? no
    UNREF FILE I=2564121 OWNER=root MODE=100600 SIZE=16384 MTIME=Feb 13 19:45 2024 RECONNECT? no CLEAR? no
    UNREF FILE I=2564122 OWNER=root MODE=140755 SIZE=0 MTIME=Feb 13 19:45 2024 RECONNECT? no CLEAR? no
    LINK COUNT DIR I=6330112 OWNER=root MODE=40755 SIZE=512 MTIME=Feb 13 19:45 2024 COUNT 6 SHOULD BE 5 ADJUST? no
    UNREF FILE I=6330120 OWNER=root MODE=100644 SIZE=0 MTIME=Feb 13 19:45 2024 RECONNECT? no CLEAR? no
    UNREF FILE I=6330140 OWNER=root MODE=100644 SIZE=221 MTIME=Feb 13 19:45 2024 RECONNECT? no CLEAR? no
    UNREF FILE I=6330142 OWNER=root MODE=100644 SIZE=0 MTIME=Feb 13 19:45 2024 RECONNECT? no CLEAR? no
    UNREF FILE I=6810887 OWNER=root MODE=140666 SIZE=0 MTIME=Feb 13 19:45 2024 RECONNECT? no CLEAR? no
    UNREF DIR I=7051269 OWNER=root MODE=40755 SIZE=512 MTIME=Feb 13 19:44 2024 CLEAR? no
    UNREF FILE I=7131644 OWNER=root MODE=100644 SIZE=457710 MTIME=Feb 13 19:45 2024 RECONNECT? no CLEAR? no
    UNREF FILE I=7131656 OWNER=root MODE=100644 SIZE=0 MTIME=Feb 13 19:45 2024 RECONNECT? no CLEAR? no
    UNREF FILE I=7131662 OWNER=root MODE=100644 SIZE=0 MTIME=Feb 13 19:45 2024 RECONNECT? no CLEAR? no
    UNREF FILE I=7131681 OWNER=root MODE=100644 SIZE=0 MTIME=Feb 13 19:45 2024 RECONNECT? no CLEAR? no
    LINK COUNT FILE I=7131688 OWNER=root MODE=100755 SIZE=348096 MTIME=Dec 8 18:07 2023 COUNT 4 SHOULD BE 3 ADJUST? no
    UNREF FILE I=7131705 OWNER=root MODE=100644 SIZE=0 MTIME=Feb 13 19:45 2024 RECONNECT? no CLEAR? no
    UNREF FILE I=7131710 OWNER=root MODE=100644 SIZE=0 MTIME=Feb 13 19:45 2024 RECONNECT? no CLEAR? no
    UNREF FILE I=7131715 OWNER=root MODE=100644 SIZE=0 MTIME=Feb 13 19:45 2024 RECONNECT? no CLEAR? no
    UNREF FILE I=7131718 OWNER=root MODE=100644 SIZE=457244 MTIME=Feb 13 19:45 2024 RECONNECT? no CLEAR? no
    UNREF FILE I=7131722 OWNER=root MODE=100644 SIZE=457710 MTIME=Feb 13 19:45 2024 RECONNECT? no CLEAR? no
    UNREF FILE I=7131734 OWNER=root MODE=100644 SIZE=457710 MTIME=Feb 13 19:45 2024 RECONNECT? no CLEAR? no
    UNREF FILE I=7131742 OWNER=root MODE=100644 SIZE=457244 MTIME=Feb 13 19:45 2024 RECONNECT? no CLEAR? no
    UNREF FILE I=7131747 OWNER=root MODE=100644 SIZE=0 MTIME=Feb 13 19:45 2024 RECONNECT? no CLEAR? no
    UNREF FILE I=7131751 OWNER=root MODE=100644 SIZE=457710 MTIME=Feb 13 19:45 2024 RECONNECT? no CLEAR? no
    UNREF DIR I=7291716 OWNER=root MODE=40755 SIZE=512 MTIME=Feb 13 19:44 2024 CLEAR? no
    UNREF DIR I=7291717 OWNER=root MODE=40755 SIZE=512 MTIME=Feb 13 19:44 2024 CLEAR? no
    LINK COUNT FILE I=9615549 OWNER=root MODE=100600 SIZE=11300 MTIME=Feb 12 22:11 2024 COUNT 2 SHOULD BE 1 ADJUST? no
    LINK COUNT FILE I=9615560 OWNER=root MODE=100600 SIZE=11448 MTIME=Feb 13 12:49 2024 COUNT 2 SHOULD BE 1 ADJUST? no
    UNREF FILE I=9615576 OWNER=root MODE=100600 SIZE=0 MTIME=Feb 13 19:45 2024 RECONNECT? no CLEAR? no
    LINK COUNT FILE I=9615577 OWNER=root MODE=100600 SIZE=11293 MTIME=Feb 13 01:48 2024 COUNT 2 SHOULD BE 1 ADJUST? no
    LINK COUNT FILE I=9615578 OWNER=root MODE=100600 SIZE=11263 MTIME=Feb 13 09:11 2024 COUNT 2 SHOULD BE 1 ADJUST? no
    LINK COUNT FILE I=9615587 OWNER=root MODE=100600 SIZE=11258 MTIME=Feb 13 16:18 2024 COUNT 2 SHOULD BE 1 ADJUST? no
    LINK COUNT FILE I=9615588 OWNER=root MODE=100600 SIZE=11317 MTIME=Feb 13 05:42 2024 COUNT 2 SHOULD BE 1 ADJUST? no
    UNREF FILE I=9615618 OWNER=root MODE=100600 SIZE=0 MTIME=Feb 13 19:45 2024 RECONNECT? no CLEAR? no
    UNREF FILE I=9695987 OWNER=root MODE=100644 SIZE=2220 MTIME=Feb 13 19:45 2024 RECONNECT? no CLEAR? no
    LINK COUNT DIR I=12099330 OWNER=root MODE=40755 SIZE=2560 MTIME=Feb 13 19:45 2024 COUNT 37 SHOULD BE 33 ADJUST? no
    UNREF FILE I=12180487 OWNER=root MODE=100644 SIZE=568 MTIME=Feb 13 19:45 2024 RECONNECT? no CLEAR? no
    ZERO LENGTH DIR I=12180505 OWNER=root MODE=40755 SIZE=0 MTIME=Feb 13 19:45 2024 CLEAR? no
    ZERO LENGTH DIR I=12180506 OWNER=root MODE=40755 SIZE=0 MTIME=Feb 13 19:45 2024 CLEAR? no
    ZERO LENGTH DIR I=12180507 OWNER=root MODE=40755 SIZE=0 MTIME=Feb 13 19:45 2024 CLEAR? no
    UNREF FILE I=12180508 OWNER=root MODE=100644 SIZE=0 MTIME=Feb 13 19:45 2024 RECONNECT? no CLEAR? no
    UNREF FILE I=12180509 OWNER=root MODE=100644 SIZE=0 MTIME=Feb 13 19:45 2024 RECONNECT? no CLEAR? no
    UNREF FILE I=12180510 OWNER=root MODE=100644 SIZE=0 MTIME=Feb 13 19:45 2024 RECONNECT? no CLEAR? no
    UNREF FILE I=12180511 OWNER=root MODE=100644 SIZE=0 MTIME=Feb 13 19:45 2024 RECONNECT? no CLEAR? no
    UNREF FILE I=12180512 OWNER=root MODE=100644 SIZE=0 MTIME=Feb 13 19:45 2024 RECONNECT? no CLEAR? no
    UNREF FILE I=12180513 OWNER=root MODE=100644 SIZE=0 MTIME=Feb 13 19:45 2024 RECONNECT? no CLEAR? no
    UNREF FILE I=15225275 OWNER=root MODE=100666 SIZE=0 MTIME=Feb 13 19:44 2024 CLEAR? no
    UNREF FILE I=15225276 OWNER=root MODE=100666 SIZE=0 MTIME=Feb 13 19:45 2024 RECONNECT? no CLEAR? no
    UNREF FILE I=15225278 OWNER=root MODE=100644 SIZE=0 MTIME=Feb 13 19:45 2024 RECONNECT? no CLEAR? no
    UNREF FILE I=15225299 OWNER=root MODE=100666 SIZE=0 MTIME=Feb 13 19:45 2024 RECONNECT? no CLEAR? no
    UNREF FILE I=15225300 OWNER=root MODE=100644 SIZE=1776 MTIME=Feb 13 19:45 2024 RECONNECT? no CLEAR? no
    UNREF FILE I=15225301 OWNER=root MODE=100644 SIZE=156665 MTIME=Feb 13 19:45 2024 RECONNECT? no CLEAR? no
    ** Phase 5 - Check Cyl groups
    FREE BLK COUNT(S) WRONG IN SUPERBLK SALVAGE? no
    SUMMARY INFORMATION BAD SALVAGE? no
    BLK(S) MISSING IN BIT MAPS SALVAGE? no
    ALLOCATED FILE 15225275 MARKED FREE
    54697 files, 1280056 used, 30136626 free (4026 frags, 3766575 blocks, 0.0% fragmentation)

    [2.7.2-RELEASE][root@pfSense.spacelab]/root: df -hi /
    Filesystem                    Size    Used   Avail Capacity iused ifree %iused  Mounted on
    /dev/ufsid/659ec5d8d33f9720   120G    4.9G    105G     4%     55k   16M    0%   /

-

-

@serbus Thanks. Open or zombie processes/files are a likely candidate, though I cannot find any. In that link there are a couple of commands for linux that make sense to me, but they either do not turn up any results or do not work on freebsd. Any idea what their equivalent might be?

The ones I am referring to are:

    lsof | grep -i deleted

which returns nothing, and:

    [2.7.2-RELEASE][root@pfSense.spacelab]/: mount -o bind / /mnt
    mount: /: mount option <bind> is unknown: Invalid argument
    [2.7.2-RELEASE][root@pfSense.spacelab]/:

which I think has a freebsd equivalent of:

    mount -t nullfs / /mnt

Though once mounted, /mnt appears to be identical to /. No extra files in /mnt/var or anything...

Was there any other avenue of exploration you saw that might help?

Apart from the zombie processes/files, the other likely candidate in my mind is trim/discard/clean-up. What do you think about turning on trim for UFS in pfsense?

-

To add an unhelpful update. This problem was still occurring a few days ago, so I restarted as usual to start the ticking time bomb again.

I now have almost four days of uptime and available disk is in line with disk usage.

I swear I did not change anything or try to fix anything since this thread went quiet. In fact I have been convincing myself to go back to bare metal. Super weird and I don't trust it...

    [2.7.2-RELEASE][root@pfSense.spacelab]/root: uptime
     9:19AM  up 3 days, 22:56, 2 users, load averages: 0.33, 0.22, 0.18

    [2.7.2-RELEASE][root@pfSense.spacelab]/root: df -h /
    Filesystem                    Size    Used   Avail Capacity  Mounted on
    /dev/ufsid/659ec5d8d33f9720   120G    5.8G    104G     5%    /

    [2.7.2-RELEASE][root@pfSense.spacelab]/root: du -h -d 1 /
    4.0K    /.snap
    101M    /boot
    4.5K    /dev
     16M    /rescue
    4.0K    /proc
     96M    /root
    3.1G    /var
    4.0K    /media
     12K    /conf.default
    4.0K    /mnt
    9.9M    /tmp
    4.6M    /sbin
     17M    /lib
    4.0K    /net
     16M    /cf
    164K    /libexec
    8.0M    /etc
    1.4M    /bin
    1.8G    /usr
     20K    /home
    1.1M    /tftpboot
    5.8G    /

-

@sloopbun Did you ever get this resolved? I'm about to commit to a proxmox vm install myself.