Suricata v7.0.7_5 abruptly stops

-

@anishkgt What lists are you using in pfBlocker? We have never had a problem running those together at clients, but we don't use, for instance, the UT1 list, which is gigantic, and we don't just enable all the Suricata rules either.

I don't think Netgate has an exchange program. And I think the 6100 is the first model with 8 GB of RAM, but I didn't look into that.

-

@SteveITS Forgot to mention: I've been test-running the NextDNS service, which seems to do what pfBlockerNG does. So, as of now, I have stopped pfBlockerNG and was only running Suricata.

This is what I have installed in the Package Manager:

- aws-wizard

- bandwidthd

- ipsec-profile-wizard

- ntopng

- pfBlockerNG

- Service_Watchdog

- System_Patches

- WireGuard

Of these, ntopng and pfBlockerNG are not running. I don't see much CPU (49%) or memory (13%) usage.

-

@anishkgt said in Suricata v7.0.7_5 abruptly stops:

@SteveITS Forgot to mention: I've been test-running the NextDNS service, which seems to do what pfBlockerNG does. So, as of now, I have stopped pfBlockerNG and was only running Suricata.

This is what I have installed in the Package Manager:

- aws-wizard

- bandwidthd

- ipsec-profile-wizard

- ntopng

- pfBlockerNG

- Service_Watchdog

- System_Patches

- WireGuard

Of these, ntopng and pfBlockerNG are not running. I don't see much CPU (49%) or memory (13%) usage.

What is Service Watchdog monitoring? It should NEVER be configured to monitor and restart the IDS/IPS packages (Suricata or Snort). It does not understand how to properly monitor those package binaries for correct operation, nor does it know how to correctly restart individual failed instances.

Your problem is most likely a result of insufficient free RAM. Large rulesets combined with certain configuration options in Suricata can lead to huge RAM usage. This usage increases even more during rule updates.

-

@bmeeks Service Watchdog monitors the KEA_DHCP server and the DNS Resolver. So would something around 8 GB be worth it? Since Netgate does not offer an exchange, I might as well go with Protectli. I was hoping Netgate would allow that; that way I could contribute to pfSense development.

-

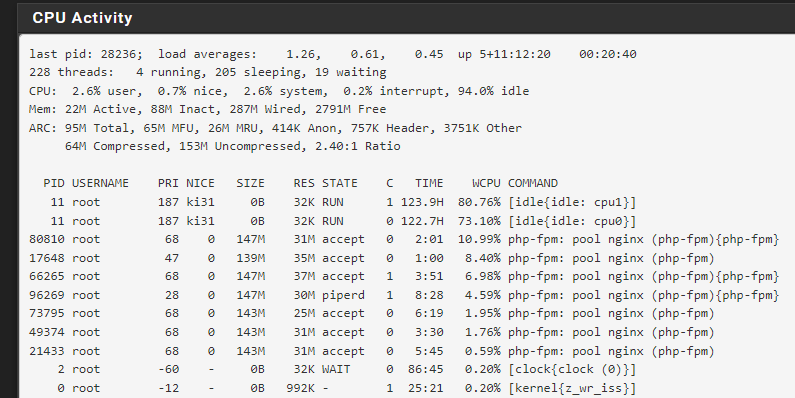

@anishkgt I think you should find out what is using RAM to find out the cause, and what your setup requires. Check Diagnostics/Activity or run "top" at a command line.

To clarify my comment above, many of our clients have 2100s and we've never had a memory problem with Suricata.

Do you have a 2100 Max? An SSD is recommended to run ntopng because of the disk writing.

https://www.netgate.com/supported-pfsense-plus-packages

-

@anishkgt The Max has an SSD.

That shows 2791 MB free. I guess you'll have to watch it for when the out of memory crash happens.

-

@SteveITS I don't get any notification, so I can't really monitor the Suricata service. How can I monitor it? I mean, how can it be checked against the time the service stops?

-

@anishkgt said in Suricata v7.0.7_5 abruptly stops:

@SteveITS I don't get any notification, so I can't really monitor the Suricata service. How can I monitor it? I mean, how can it be checked against the time the service stops?

Look for any other events in the pfSense system log that occur around the same time as the out-of-memory process killer log entry.

The entry that says:

Dec 4 22:43:37 kernel pid 51709 (php-fpm), jid 0, uid 0, was killed: failed to reclaim memory
Dec 4 22:43:37 kernel pid 26454 (suricata), jid 0, uid 0, was killed: failed to reclaim memory

Post the messages from a few minutes either side of the time when the above message is logged. That may offer a clue as to what other processes were trying to do something that triggered the out-of-memory condition.
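If the system log is long, pulling out the entries around each OOM kill can be scripted. A minimal Python sketch, assuming the log lines have been copied into a list of strings in the format shown above (`Mon D HH:MM:SS program message`) and all come from the same year, which you pass in because the log omits it. `parse_line` and `context_around_oom` are hypothetical helper names, not part of pfSense.

```python
import re
from datetime import datetime, timedelta

# A BSD-syslog style line as it appears in this thread, e.g.
# "Dec 4 22:43:37 kernel pid 26454 (suricata), jid 0, uid 0, was killed: ..."
LINE_RE = re.compile(r"^([A-Z][a-z]{2})\s+(\d{1,2})\s+(\d{2}:\d{2}:\d{2})\s+(.*)$")
OOM_MARKER = "was killed: failed to reclaim memory"


def parse_line(line, year):
    """Return (timestamp, message), or None if the line is not a log entry."""
    match = LINE_RE.match(line.strip())
    if match is None:
        return None
    month, day, clock, message = match.groups()
    # The log has no year, so the caller supplies one (assumption).
    stamp = datetime.strptime(f"{year} {month} {day} {clock}", "%Y %b %d %H:%M:%S")
    return stamp, message


def context_around_oom(lines, year, minutes=5):
    """Map each OOM-kill time to every entry within +/- `minutes` of it."""
    entries = [e for e in (parse_line(raw, year) for raw in lines) if e is not None]
    entries.sort(key=lambda e: e[0])  # pasted logs are newest first; go oldest first
    window = timedelta(minutes=minutes)
    context = {}
    for stamp, message in entries:
        if OOM_MARKER in message and stamp not in context:
            context[stamp] = [(t, m) for t, m in entries if abs(t - stamp) <= window]
    return context
```

Because the year is supplied by hand, a window that crosses New Year's Eve would be mis-sorted; for a few days of December logs like the ones in this thread that does not matter.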

-

@bmeeks Here is what I can see in the system logs. I have not started the Suricata service since it stopped.

Dec 14 00:01:42 kernel mvneta1: promiscuous mode disabled
Dec 14 00:01:42 kernel pid 15084 (unbound), jid 0, uid 59, was killed: failed to reclaim memory
Dec 14 00:01:42 kernel pid 91364 (suricata), jid 0, uid 0, was killed: failed to reclaim memory
Dec 13 20:56:28 kernel mvneta1: promiscuous mode enabled
Dec 4 22:43:37 kernel mvneta1: promiscuous mode disabled
Dec 4 22:43:37 kernel pid 51709 (php-fpm), jid 0, uid 0, was killed: failed to reclaim memory
Dec 4 22:43:37 kernel pid 26454 (suricata), jid 0, uid 0, was killed: failed to reclaim memory
Dec 4 18:15:21 kernel mvneta1: promiscuous mode enabled
Dec 4 00:01:48 kernel mvneta1: promiscuous mode disabled
Dec 4 00:01:48 kernel pid 12763 (php-fpm), jid 0, uid 0, was killed: failed to reclaim memory
Dec 4 00:01:48 kernel pid 4569 (suricata), jid 0, uid 0, was killed: failed to reclaim memory
Dec 3 20:54:12 kernel mvneta1: promiscuous mode enabled
Dec 3 11:02:53 kernel mvneta1: promiscuous mode disabled
Dec 3 11:02:53 kernel pid 31700 (php-fpm), jid 0, uid 0, was killed: failed to reclaim memory
Dec 3 11:02:51 kernel pid 73039 (suricata), jid 0, uid 0, was killed: failed to reclaim memory
Dec 3 09:33:53 kernel mvneta1: promiscuous mode enabled
Dec 3 00:01:50 kernel mvneta1: promiscuous mode disabled
Dec 3 00:01:50 kernel pid 90934 (php-fpm), jid 0, uid 0, was killed: failed to reclaim memory
Dec 3 00:01:50 kernel pid 75984 (suricata), jid 0, uid 0, was killed: failed to reclaim memory

I can see that the DNS Resolver, which I assume is "unbound", was also killed. I remember it being an issue earlier when Suricata stopped. What could be the root cause?

-

@anishkgt said in Suricata v7.0.7_5 abruptly stops:

@bmeeks Here is what I can see in the system logs. I have not started the Suricata service since it stopped.

Dec 14 00:01:42 kernel mvneta1: promiscuous mode disabled
Dec 14 00:01:42 kernel pid 15084 (unbound), jid 0, uid 59, was killed: failed to reclaim memory
Dec 14 00:01:42 kernel pid 91364 (suricata), jid 0, uid 0, was killed: failed to reclaim memory
Dec 13 20:56:28 kernel mvneta1: promiscuous mode enabled
Dec 4 22:43:37 kernel mvneta1: promiscuous mode disabled
Dec 4 22:43:37 kernel pid 51709 (php-fpm), jid 0, uid 0, was killed: failed to reclaim memory
Dec 4 22:43:37 kernel pid 26454 (suricata), jid 0, uid 0, was killed: failed to reclaim memory
Dec 4 18:15:21 kernel mvneta1: promiscuous mode enabled
Dec 4 00:01:48 kernel mvneta1: promiscuous mode disabled
Dec 4 00:01:48 kernel pid 12763 (php-fpm), jid 0, uid 0, was killed: failed to reclaim memory
Dec 4 00:01:48 kernel pid 4569 (suricata), jid 0, uid 0, was killed: failed to reclaim memory
Dec 3 20:54:12 kernel mvneta1: promiscuous mode enabled
Dec 3 11:02:53 kernel mvneta1: promiscuous mode disabled
Dec 3 11:02:53 kernel pid 31700 (php-fpm), jid 0, uid 0, was killed: failed to reclaim memory
Dec 3 11:02:51 kernel pid 73039 (suricata), jid 0, uid 0, was killed: failed to reclaim memory
Dec 3 09:33:53 kernel mvneta1: promiscuous mode enabled
Dec 3 00:01:50 kernel mvneta1: promiscuous mode disabled
Dec 3 00:01:50 kernel pid 90934 (php-fpm), jid 0, uid 0, was killed: failed to reclaim memory
Dec 3 00:01:50 kernel pid 75984 (suricata), jid 0, uid 0, was killed: failed to reclaim memory

I can see that the DNS Resolver, which I assume is "unbound", was also killed. I remember it being an issue earlier when Suricata stopped. What could be the root cause?

Your firewall kernel is running out of free memory space for critical processes. This line is the clue:

Dec 4 00:01:48 kernel pid 4569 (suricata), jid 0, uid 0, was killed: failed to reclaim memory

and these:

Dec 14 00:01:42 kernel pid 15084 (unbound), jid 0, uid 59, was killed: failed to reclaim memory
Dec 3 00:01:50 kernel pid 90934 (php-fpm), jid 0, uid 0, was killed: failed to reclaim memory

Suricata and the other processes listed are being arbitrarily killed by the FreeBSD kernel's OOM (out-of-memory) reaper. I explained in an earlier post in this thread what that means and how it works: https://forum.netgate.com/topic/195456/suricata-v7-0-7_5-abruptly-stops/9?_=1734182772335.

You do not have enough RAM in an SG-2100 to run all the packages and options you have enabled. You will need to drastically trim your Suricata ruleset and/or curtail use of some pfBlockerNG DNSBL lists.

When Suricata is updating its rules, the memory usage can nearly double. The same sort of RAM consumption increase likely happens when pfBlockerNG updates its DNSBL lists and the unbound Python module is running. It is likely that the OOM reaper engages during one of these times and kills one or more of your user-space processes.

One last comment: I don't know if you are running pfSense in ZFS mode, but if you are, that can exacerbate memory problems on a box with limited RAM running optional packages, due to the ZFS ARC (Adaptive Replacement Cache). ZFS likes to use free RAM for caching.
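The "nearly double" point is easier to see with some rough arithmetic. This is only a back-of-the-envelope sketch: the 2.0 factor comes from the observation above, every MB figure you feed it (baseline, Suricata, unbound during a DNSBL update) is a hypothetical measurement you would take yourself (for example from `top`), and `reserve_mb` is an arbitrary safety margin. It models the worst case where both updates overlap, as they did in the logs posted in this thread.

```python
def peak_estimate_mb(baseline_mb, suricata_mb, unbound_mb, update_factor=2.0):
    """Rough worst case while Suricata reloads rules and unbound is mid-update.

    baseline_mb   -- everything else in use (kernel, other services), hypothetical
    suricata_mb   -- Suricata's normal resident size
    unbound_mb    -- unbound's size while a DNSBL update is running
    update_factor -- Suricata can nearly double during a rules update
    """
    return baseline_mb + suricata_mb * update_factor + unbound_mb


def has_headroom(total_mb, peak_mb, reserve_mb=256):
    """True if the estimated peak still leaves `reserve_mb` free (arbitrary margin)."""
    return total_mb - peak_mb >= reserve_mb
```

With made-up numbers such as an 800 MB baseline, a 1,200 MB Suricata and a 900 MB unbound, the estimated peak is 800 + 2 × 1,200 + 900 = 4,100 MB, which is more than a 4 GB (4,096 MB) box has, even though each process looks reasonable on its own.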

-

@bmeeks I had been testing NextDNS, and all the while pfBlockerNG was disabled but not uninstalled. Would it still update and cause the low-memory alerts?

-

@anishkgt said in Suricata v7.0.7_5 abruptly stops:

@bmeeks I had been testing NextDNS, and all the while pfBlockerNG was disabled but not uninstalled. Would it still update and cause the low-memory alerts?

I do not have an answer for every "what if this" scenario. All I can tell you absolutely is that your firewall is running out of available free RAM from time to time. And when that happens, FreeBSD will call on its OOM reaper logic to kill the largest user-space processes until it can reclaim enough RAM to satisfy the most recent system allocation request.
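A toy model of the behavior described above: repeatedly take the largest process and kill it until the requested amount has been reclaimed. This is a deliberate simplification; FreeBSD's real out-of-memory handling has its own size accounting and eligibility rules, and `simulate_oom_reaper` is a hypothetical name with hypothetical process sizes. It only illustrates why the biggest consumers on a box like this, Suricata and unbound, are the ones that keep showing up in the log.

```python
def simulate_oom_reaper(process_mb, needed_mb):
    """Toy model: kill the largest process first until enough memory is reclaimed.

    process_mb -- dict of process name -> resident size in MB (hypothetical values)
    needed_mb  -- how much memory the kernel is trying to find
    Returns the victims in the order they were killed.
    """
    victims = []
    reclaimed = 0
    # Largest consumers first, mirroring "kill the largest user-space processes".
    for name, size in sorted(process_mb.items(), key=lambda kv: kv[1], reverse=True):
        if reclaimed >= needed_mb:
            break
        victims.append(name)
        reclaimed += size
    return victims
```

For example, `simulate_oom_reaper({"suricata": 1500, "unbound": 900, "php-fpm": 120}, 2000)` returns `["suricata", "unbound"]`: killing Suricata alone frees 1,500 MB, which is not enough, so unbound goes next.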

You have too much stuff running for the 2 GB of memory available in your box. If you run a stock vanilla pfSense with NOTHING else added, it should be okay. Then slowly add things back to see where the camel's back is broken and the OOM reaper starts killing things again. I am not fully familiar with all of the inner workings of pfBlockerNG. I know it depends upon cron tasks to handle routine updates. Those may still run even with it disabled (but not uninstalled), but I'm not sure how that logic works, as I've never used the package.

-

@bmeeks said in Suricata v7.0.7_5 abruptly stops:

2 GB of memory available in your box

The 2100 is 4 GB.

You've had a tad more experience with Suricata than I, but for the OP, I expect Suricata is not the actual problem/memory hog here. Perhaps a full RAM disk or something like that? If you're using a RAM disk, try turning that off.

-

@SteveITS said in Suricata v7.0.7_5 abruptly stops:

The 2100 is 4 GB.

Oops! My mistake. Don't know why I was thinking 2 GB.

Still, something is using up the available free RAM on the box.

-

@SteveITS Thanks for the heads-up. I was shopping for Protectli models.

RAM Disk was disabled by default. I remember changing 'Firewall Maximum States' from 338,000 to 500,000 and 'Firewall Maximum Table Entries' from the default to 800,000. I will set them back to the defaults and see how it goes.

-

@anishkgt those settings are only relevant if you’re running out of space in them, in which case you’d have other problems.

The 2100s we’ve set up are usually around or under 1 GB usage. You will need to figure out what is using RAM when you run out.
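One way to catch the culprit is to record the biggest memory users periodically, so there is a snapshot from shortly before the next OOM kill. A minimal Python sketch, assuming a Python 3 interpreter is available where you run it and that the data comes from `ps -axo pid,rss,comm` (standard FreeBSD `ps` options; RSS is in kilobytes). The helper names are hypothetical; the parsing is kept separate from `snapshot()` so it can be checked against saved `ps` output.

```python
import subprocess
from datetime import datetime


def parse_ps(text):
    """Parse `ps -axo pid,rss,comm` output into (rss_kb, pid, command) tuples."""
    rows = []
    for line in text.splitlines()[1:]:  # skip the header line
        parts = line.split(None, 2)
        if len(parts) < 3 or not parts[0].isdigit() or not parts[1].isdigit():
            continue
        pid, rss, comm = parts
        rows.append((int(rss), int(pid), comm.strip()))
    return rows


def top_by_rss(text, n=5):
    """Return the n processes with the largest resident set size (KB)."""
    return sorted(parse_ps(text), reverse=True)[:n]


def snapshot(n=5):
    """Run ps on the firewall and return (timestamp, top-n list)."""
    out = subprocess.run(["ps", "-axo", "pid,rss,comm"],
                         capture_output=True, text=True, check=True).stdout
    return datetime.now().isoformat(timespec="seconds"), top_by_rss(out, n)
```

Running `snapshot()` every few minutes (from cron, for example) and appending the result to a file would show which process was growing just before the kill.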

-

@SteveITS There isn't much I could find about which service is causing this, except that a pfBlockerNG cron update is happening at the same time.

The cron update happens after these are disabled. I had removed the Service Watchdog, which eventually failed to restart the DNS Resolver (unbound).

Next Event

Dec 15 04:00:01 php 55448 [pfBlockerNG] Starting cron process.
Dec 15 02:00:01 php 37998 [pfBlockerNG] Starting cron process.
Dec 15 00:01:36 kernel mvneta1: promiscuous mode disabled
Dec 15 00:01:36 kernel pid 11897 (unbound), jid 0, uid 59, was killed: failed to reclaim memory
Dec 15 00:01:36 kernel pid 23736 (suricata), jid 0, uid 0, was killed: failed to reclaim memory
Dec 15 00:01:24 php 38071 [pfBlockerNG] No changes to Firewall rules, skipping Filter Reload
Dec 15 00:00:33 php-cgi 39142 [Suricata] The Rules update has finished.
Dec 15 00:00:33 php-cgi 39142 [Suricata] Suricata signalled with SIGUSR2 for LAN (mvneta1)...

Earlier Event

Dec 14 22:00:01 php 20743 [pfBlockerNG] Starting cron process.
Dec 14 21:58:43 kernel mvneta1: promiscuous mode disabled
Dec 14 21:58:43 kernel pid 5252 (php-fpm), jid 0, uid 0, was killed: failed to reclaim memory
Dec 14 21:58:43 kernel pid 75593 (suricata), jid 0, uid 0, was killed: failed to reclaim memory
Dec 14 21:57:50 php-fpm 5252 [Suricata] Suricata signalled with SIGUSR2 for LAN (mvneta1)...

Does this mean I would be better off with more RAM?

-

@anishkgt which lists are you using in pfBlocker? Filling 4 GB implies a lot, or big ones. That may be a question for the pfBlocker forum…

If you’re using the UT1 adult list, for example, that is gigantic. There may be better solutions, like Cloudflare Family DNS.

-

@anishkgt said in Suricata v7.0.7_5 abruptly stops:

Dec 15 00:01:24 php 38071 [pfBlockerNG] No changes to Firewall rules, skipping Filter Reload
Dec 15 00:00:33 php-cgi 39142 [Suricata] The Rules update has finished.

The order of these two log entries tells me that the Suricata rules update had just completed, then the pfBlockerNG update cron task kicked off about a minute later. So, at this point Suricata was happy and running again with its updated rules.

Next, the two following log entries indicate to me that the pfBlockerNG cron task exhausted system RAM, so the OOM Reaper process kicked off and killed the two largest users of contiguous RAM: Suricata and unbound (the DNS Resolver). My suspicion is that a large DNSBL list was being updated by the pfBlockerNG update job. That involves the Python module of unbound, causing that process to balloon in its memory footprint. Thus it would become a target of the OOM Reaper process, as would Suricata, because both would likely be the largest consumers of RAM at that point.

Dec 15 00:01:36 kernel pid 11897 (unbound), jid 0, uid 59, was killed: failed to reclaim memory
Dec 15 00:01:36 kernel pid 23736 (suricata), jid 0, uid 0, was killed: failed to reclaim memory

Like @SteveITS mentioned, your choice of pfBlockerNG lists can matter a lot. Some of the available choices are frankly just too large, and other options such as using Cloudflare might be a better solution. I don't know the specifics of which lists you are using, but it's clear from the system logging that whatever you have chosen is "too much data" for the 4 GB of RAM in your firewall.