boot failure upgrading to 25.03.b.20250306.0140 i

-

@netblues I also updated Plus to the 25.03 beta, with no issues. Dirty upgrade too.

-

Hmm, interesting. I tried using a Q35 machine in Proxmox and it booted fine. That in itself didn't change the PCI device type either.

I think it must be changing the presented PCIe controller, since it seems to fail entirely to detect any NICs or the drive controller. I still couldn't make Proxmox do it though, even with a bunch of custom command hackery.

-

@stephenw10 actually pcie isn't supported in i440fx, you have to change this to pci

In older kvm implementations, creating a new freebsd13.1 would create a q35 based virtual machine.

Now if you create a new freebsd14 VM, i440fx is selected. Proxmox isn't a Red Hat derivative either, as you know.

In any case, there are no issues with new installations. Upgrades will hurt "randomly" since this isn't really pfSense related. It's upstream FreeBSD.

-

@netblues said in boot failure upgrading to 25.03.b.20250306.0140 i:

actually pcie isn't supported in i440fx,

Hmm, interesting. I wonder if my change didn't really apply somehow then.

-

Were you using an odd mix like q35+BIOS instead of q35+UEFI?

FreeBSD 15.x recently changed some things in probing of older ISA style devices, which I wouldn't expect to impact things here, but it's possible some quirk in that combination triggered an issue.

I use q35+OVMF/UEFI on nearly all my Proxmox VMs with all virtio devices (Proxmox 8.3.5) and everything runs perfectly.

I still have a few that are using i440fx+BIOS and all virtio devices and they're good, too.

-

Hmm, yes I probably did end up with Q35+BIOS.

Do you see the PCI devices shown as `(modern)` in the boot logs?

-

I don't see any mention of `modern` in my logs; they still show as `VirtIO (legacy)` even on recently created VMs.

In the `info qtree` of all the VMs it doesn't seem to express a preference either way: `disable-legacy = "off"` and `disable-modern = false`.

Same on every device.
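For anyone wanting to check this across many devices, here is a minimal Python sketch that pulls the two properties out of `info qtree` text. The sample excerpt, the device IDs (`net0`, `virtio0`), and the function names are illustrative assumptions, and the real output is much longer and may be laid out differently depending on the QEMU version.

```python
import re

# Illustrative excerpt only, NOT captured from a real VM. Device ids and
# indentation are assumptions about what `info qtree` typically prints.
SAMPLE_QTREE = """\
bus: pci.0
  type PCI
  dev: virtio-net-pci, id "net0"
    disable-legacy = "off"
    disable-modern = false
  dev: virtio-blk-pci, id "virtio0"
    disable-legacy = "off"
    disable-modern = false
"""

DEV_RE = re.compile(r'^\s*dev:\s*([\w.-]+)(?:,\s*id\s*"([^"]*)")?')
PROP_RE = re.compile(r'^\s*(disable-legacy|disable-modern)\s*=\s*"?([\w-]+)"?')


def virtio_modes(qtree_text):
    """Return {device label: {property: value}} for virtio devices only."""
    result = {}
    current = None
    for line in qtree_text.splitlines():
        dev = DEV_RE.match(line)
        if dev:
            name, dev_id = dev.group(1), dev.group(2)
            if name.startswith("virtio"):
                current = f"{name} ({dev_id})" if dev_id else name
                result[current] = {}
            else:
                # A non-virtio device ends the current virtio context.
                current = None
            continue
        prop = PROP_RE.match(line)
        if prop and current:
            result[current][prop.group(1)] = prop.group(2)
    return result


def describe(props):
    """Summarise what the two properties imply for one device."""
    legacy_disabled = props.get("disable-legacy") in ("on", "true")
    modern_disabled = props.get("disable-modern") in ("on", "true")
    if legacy_disabled and modern_disabled:
        return "both disabled"
    if legacy_disabled:
        return "modern only"
    if modern_disabled:
        return "legacy only"
    return "no forced preference"


for label, props in virtio_modes(SAMPLE_QTREE).items():
    print(label, "->", describe(props))
```

With the values quoted above, every device falls into the "no forced preference" case, which matches the observation that the VM config doesn't push the guest either way.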

-

@jimp said in boot failure upgrading to 25.03.b.20250306.0140 i:

Were you using an odd mix like q35+BIOS instead of q35+UEFI?

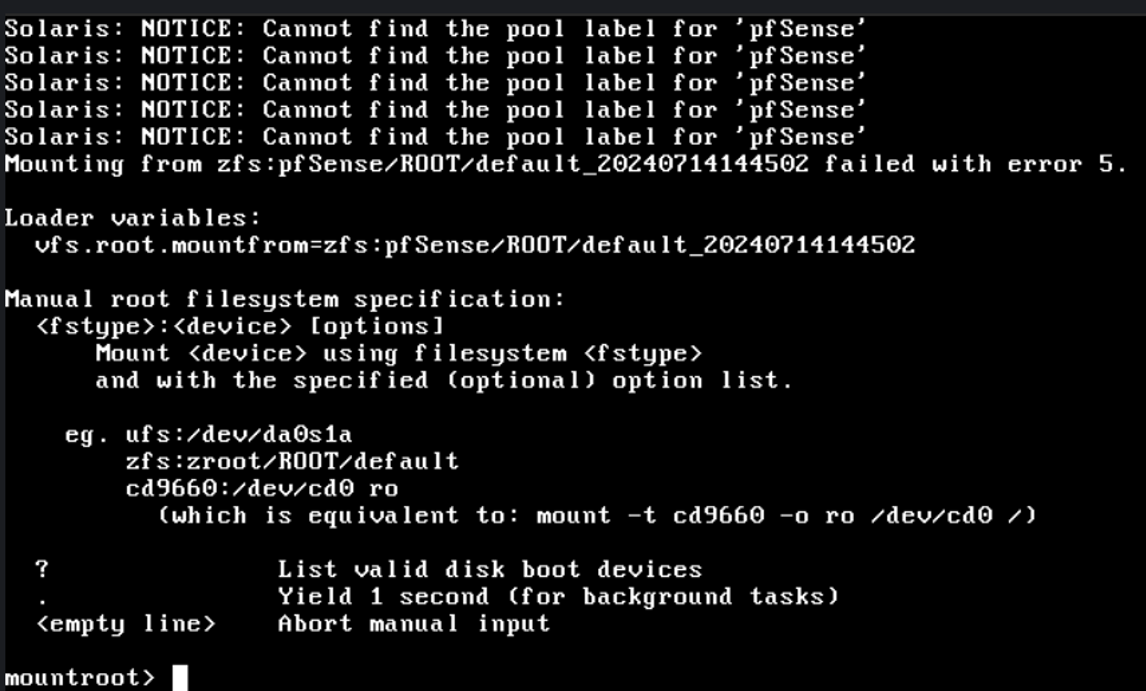

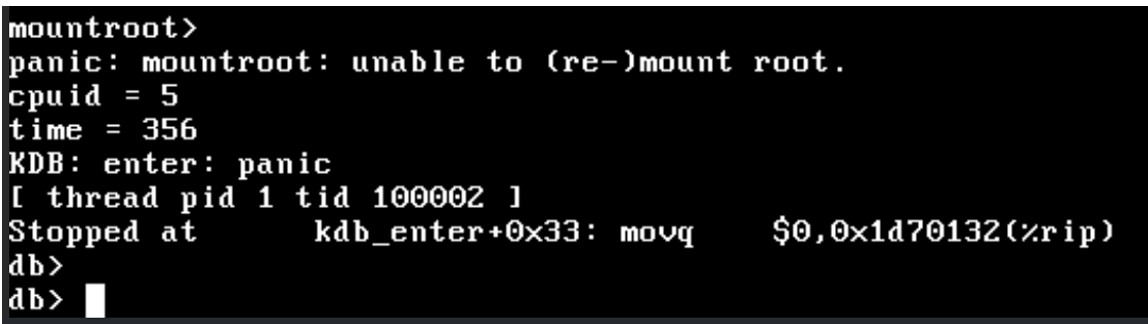

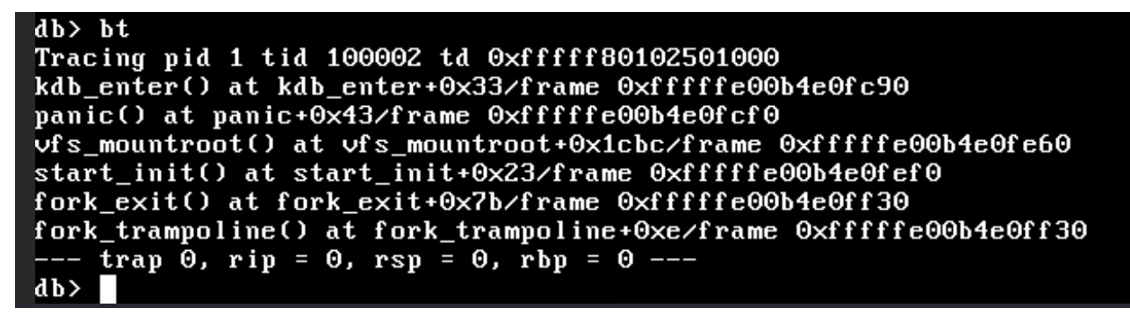

Everything was/is q35 + BIOS, but the issue was that after booting it couldn't load the disk volume.

Just changing the driver from virtio to SCSI or SATA would allow it to start up normally, until the network cards failed to be detected at a later stage. This can't be a UEFI thing IMHO.

-

@netblues said in boot failure upgrading to 25.03.b.20250306.0140 i:

@jimp said in boot failure upgrading to 25.03.b.20250306.0140 i:

Were you using an odd mix like q35+BIOS instead of q35+UEFI?

Everything was/is q35 + BIOS, but the issue was that after booting it couldn't load the disk volume.

Just changing the driver from virtio to SCSI or SATA would allow it to start up normally, until the network cards failed to be detected at a later stage. This can't be a UEFI thing IMHO.

Declaring that without trying it seems hasty. There isn't much of a compelling reason to run q35+BIOS so it's probably a much less tested combination comparatively.

It absolutely can make a difference in how the system probes devices and boots.

-

Tried, but it will take more than changing BIOS settings. Most probably a reinstall.

-

I set up a fresh VM as q35+BIOS and it worked fine and showed legacy virtio devices by default.

I forced modern devices with `qm set 157 --args "-global virtio-net-pci.disable-legacy=on -global virtio-blk-pci.disable-legacy=on"` and it still booted fine and worked on the network. `dmesg` output shows modern for the disk/block device and network devices.

This is on a fresh 2.8.0 VM but it should be using the same base OS version as 25.03 builds.
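If you want to repeat that for other VMs or device types, a small Python sketch like the one below can build the same command string. The helper names are made up for illustration; only the `qm set <vmid> --args` form and the two `-global ...disable-legacy=on` options come from the command above, and the script only prints the command rather than running it.

```python
import shlex


def legacy_off_args(device_types):
    """Build one `-global <type>.disable-legacy=on` option per device type."""
    return " ".join(f"-global {dev}.disable-legacy=on" for dev in device_types)


def qm_set_command(vmid, device_types):
    """Return the full `qm set` command as a single shell-safe string."""
    parts = ["qm", "set", str(vmid), "--args",
             shlex.quote(legacy_off_args(device_types))]
    return " ".join(parts)


# VM id 157 and the two device types are the ones used in this post.
print(qm_set_command(157, ["virtio-net-pci", "virtio-blk-pci"]))
```

Listing the device types explicitly keeps the change limited to NICs and block devices, which is the combination reported as working here.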

That's all on Proxmox though so it's possible KVM is doing something differently, maybe it's a newer version of KVM/qemu or something else changed.

-

Yeah, it feels like whatever is upstream of those PCI devices fails to be detected and hence anything downstream isn't seen at all. I did try setting `-global virtio-pci.disable-legacy=on`, which should be valid, but it fails to boot entirely.

-

I tried that and hit the same failure, hence my limiting it to NICs and disk devices, which did work.

One thing I did read when looking into this is that it may make a difference if the root device is PCI vs PCIe but I didn't look into how to check/change that on Proxmox yet.

Though at least I did confirm that the modern NICs and block devices do work on their own, so the original stated issue is somewhat of a red herring and the workaround may have allowed it to function, but not for the reason that was thought.
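To make that kind of confirmation repeatable, here is a rough Python sketch that scans saved `dmesg` text and reports which `virtio_pci` units attached as modern or legacy. The sample lines are hand-written illustrations, not real log output, and the assumed banner shape (`<VirtIO PCI (modern) ...>`) may differ between FreeBSD versions.

```python
import re

# Hand-written sample lines for illustration only; the exact banner text
# printed by the virtio_pci driver is an assumption.
SAMPLE_DMESG = """\
pci0: <ACPI PCI bus> on pcib0
virtio_pci0: <VirtIO PCI (modern) Network adapter> at device 18.0 on pci0
virtio_pci1: <VirtIO PCI (legacy) Block adapter> at device 10.0 on pci0
"""

LINE_RE = re.compile(
    r"^(virtio_pci\d+): <VirtIO.*?\((modern|legacy)\)\s*([^>]*)>"
)


def virtio_transport_modes(dmesg_text):
    """Map each virtio_pci unit to (mode, device kind) found in dmesg text."""
    modes = {}
    for line in dmesg_text.splitlines():
        match = LINE_RE.match(line)
        if match:
            unit, mode, kind = match.groups()
            modes[unit] = (mode, kind.strip())
    return modes


for unit, (mode, kind) in virtio_transport_modes(SAMPLE_DMESG).items():
    print(f"{unit}: {kind} attached as {mode}")
```

On a real system you would feed it the actual boot log text instead of the sample; lines that don't match the assumed pattern are simply skipped.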

-

Yup, that's exactly what I did.

Good to get confirmation at least.

-

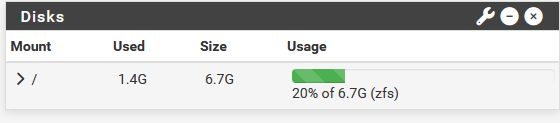

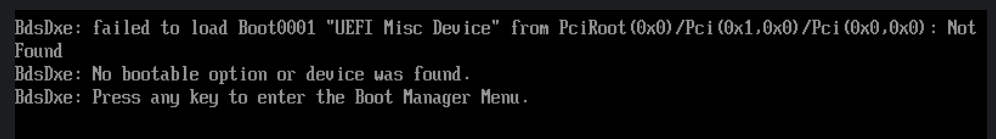

Good morning. I am currently running 25.03-BETA (amd64), built on Tue Feb 4, and whenever I try to upgrade to the latest I get this failure. I am not using any virtual machines; it is a Minisforum MS-01 with onboard NICs and an NVMe drive.

Any pointers on what I could look for?

-

A fresh install cleared it up.
