Trouble with configuring Jumbo frames :(

-

I will have a detailed look at your mail later (at daytime)

The problem was that my config did contain a line which was probably generated in the past (automatically)

<shellcmd>ifconfig lagg1 mtu 9000</shellcmd>

Lagg1 did exist in the past but not any more !!!

I manually changed that line to

<shellcmd>ifconfig lagg0 mtu 9000</shellcmd>

and uploaded / reloaded that config.

That seems to have fixed the issue (not the reason that the config was wrong!!)
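A stale line like this can be spotted before uploading the config. The following is a minimal sketch, assuming the config is available as text and that you pass in the interface names that really exist; the function name `stale_mtu_shellcmds` and the sample text are hypothetical, and only the `<shellcmd>ifconfig ... mtu ...</shellcmd>` form quoted above is matched.

```python
import re

# Matches only the form seen in this thread: <shellcmd>ifconfig IFACE mtu N</shellcmd>
SHELLCMD_RE = re.compile(
    r"<shellcmd>\s*ifconfig\s+(\S+)\s+mtu\s+(\d+)\s*</shellcmd>"
)


def stale_mtu_shellcmds(config_text, existing_interfaces):
    """Return (interface, mtu) pairs that reference a non-existent interface."""
    stale = []
    for name, mtu in SHELLCMD_RE.findall(config_text):
        if name not in existing_interfaces:
            stale.append((name, int(mtu)))
    return stale


# Hypothetical sample: one stale line (lagg1) and one valid line (lagg0).
sample = """
<shellcmd>ifconfig lagg1 mtu 9000</shellcmd>
<shellcmd>ifconfig lagg0 mtu 9000</shellcmd>
"""

# The set of existing interfaces is supplied by the caller (an assumption here).
print(stale_mtu_shellcmds(sample, {"lagg0", "mce0", "mce1"}))
```

This only flags the mismatch; it does not explain why the old interface name was left in the config.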

After the change I could assign 9000 to the related interfaces.

No idea if the issue will return. Below the output of ifconfig.

also see pfSense https://redmine.pfsense.org/issues/3922

Before the change

ifconfig lagg0

lagg0: flags=1008943<UP,BROADCAST,RUNNING,PROMISC,SIMPLEX,MULTICAST,LOWER_UP> metric 0 mtu 1500

options=ffef07bb<RXCSUM,TXCSUM,VLAN_MTU,VLAN_HWTAGGING,JUMBO_MTU,VLAN_HWCSUM,TSO4,TSO6,LRO,VLAN_HWFILTER,NV,VLAN_HWTSO,LINKSTATE,RXCSUM_IPV6,TXCSUM_IPV6,HWSTATS,TXRTLMT,HWRXTSTMP,MEXTPG,TXTLS4,TXTLS6,VXLAN_HWCSUM,VXLAN_HWTSO,TXTLS_RTLMT>

ether e8:eb:d3:2a:79:eb

hwaddr 00:00:00:00:00:00

inet6 fe80::eaeb:d3ff:fe2a:79eb%lagg0 prefixlen 64 scopeid 0xc

laggproto lacp lagghash l2,l3,l4

laggport: mce0 flags=1c<ACTIVE,COLLECTING,DISTRIBUTING>

laggport: mce1 flags=1c<ACTIVE,COLLECTING,DISTRIBUTING>

groups: lagg

media: Ethernet autoselect

status: active

nd6 options=21<PERFORMNUD,AUTO_LINKLOCAL>

After the change

[24.11-RELEASE][admin@pfSense.lan]/root: ifconfig lagg0

lagg0: flags=1008943<UP,BROADCAST,RUNNING,PROMISC,SIMPLEX,MULTICAST,LOWER_UP> metric 0 mtu 9000

options=ffef07bb<RXCSUM,TXCSUM,VLAN_MTU,VLAN_HWTAGGING,JUMBO_MTU,VLAN_HWCSUM,TSO4,TSO6,LRO,VLAN_HWFILTER,NV,VLAN_HWTSO,LINKSTATE,RXCSUM_IPV6,TXCSUM_IPV6,HWSTATS,TXRTLMT,HWRXTSTMP,MEXTPG,TXTLS4,TXTLS6,VXLAN_HWCSUM,VXLAN_HWTSO,TXTLS_RTLMT>

ether e8:eb:d3:2a:79:eb

hwaddr 00:00:00:00:00:00

inet6 fe80::eaeb:d3ff:fe2a:79eb%lagg0 prefixlen 64 scopeid 0xc

laggproto lacp lagghash l2,l3,l4

laggport: mce0 flags=1c<ACTIVE,COLLECTING,DISTRIBUTING>

laggport: mce1 flags=1c<ACTIVE,COLLECTING,DISTRIBUTING>

groups: lagg

media: Ethernet autoselect

status: active

nd6 options=21<PERFORMNUD,AUTO_LINKLOCAL>

-

I did configure jumbo frames by editing the config file. It is behaving strangely.

After reloading the config, lagg0 is changed into lagg1 and the MTU from 9014 to 9000 !!!???

But in the meantime I had changed the vlan settings to 9000 :( and it works.

Actual results below. Issues are:

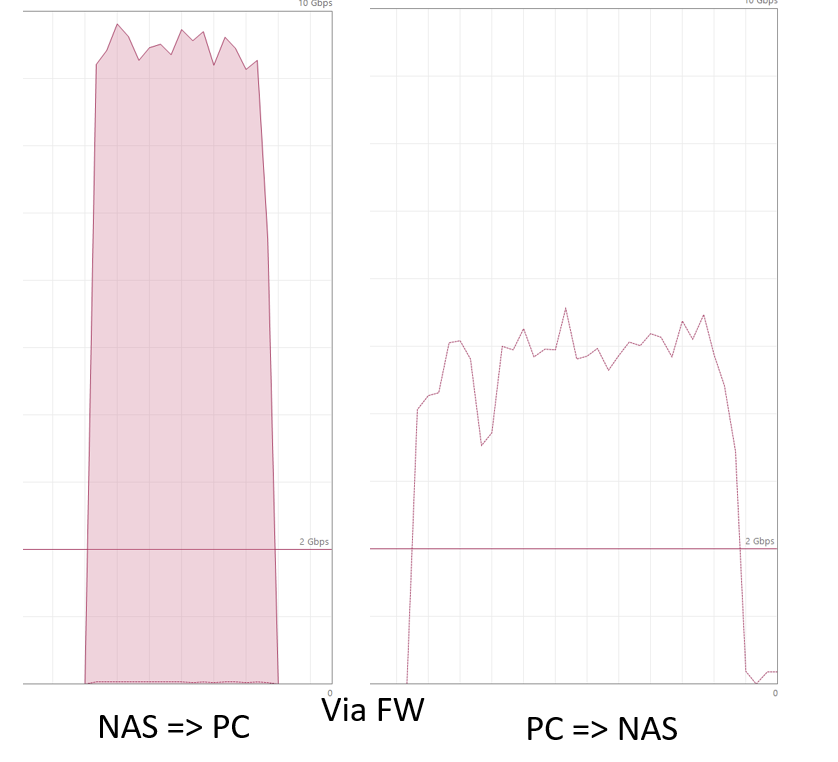

- data from NAS to PC is OK

- data from PC to NAS can be / should be better :(

- losing frames when copying NAS to PC for a yet unknown reason

- PC is using a smaller frame size, not optimal IMHO see wireshark traces

-

@louis2 and that is pretty meaningless without the baseline.. where you're just using 1500..

And the length there in your pcap that is over your frame size would indicate you're capturing on the sender device that has offload set, where the OS sends larger data and the nic sizes it correctly..

That part about missing previous segment doesn't actually mean anything was lost, other than not seen in your capture. Start your capture earlier, before you start sending the data you're interested in.

The difference could also just mean that the sender pc is just not capable of sending any faster - could be a disk limitation?

Which is why I would take the disks out of the equation and use something that just sends traffic on the wire, like iperf. Was that an actual copy of data to/from disk?

As to 9014 vs 9000 - this could just be how nic/os displays the same thing - one counting the header, and one not..

For example my nas shows it as 9000, while my PC shows it as 9014.. But if you look at the interface it shows 9000
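The 9000 versus 9014 point can be checked with simple arithmetic. This is a small sketch, assuming standard Ethernet framing (14-byte header, optional 4-byte 802.1Q tag, 4-byte FCS); the function name `frame_sizes` is made up for illustration, and which of these figures a given driver displays is vendor specific.

```python
ETH_HEADER = 14  # destination MAC (6) + source MAC (6) + EtherType (2)
VLAN_TAG = 4     # 802.1Q tag, present on tagged (trunk) links
FCS = 4          # frame check sequence at the end of the frame


def frame_sizes(mtu, vlan_tagged=False):
    """Return the sizes a tool might show for the same L3 MTU."""
    header = ETH_HEADER + (VLAN_TAG if vlan_tagged else 0)
    return {
        "mtu": mtu,                                 # L3 payload of the frame
        "with_header": mtu + header,                # what some NIC drivers show
        "with_header_and_fcs": mtu + header + FCS,  # full frame on the wire
    }


for mtu in (1500, 9000):
    print(frame_sizes(mtu))
```

With these assumptions an MTU of 9000 corresponds to a 9014-byte frame when the header is counted, and 1500 corresponds to 1514.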

So you're routing this traffic over pfsense, and you're hairpinning it over a lagg with vlans.. So you really have no control over what physical path the traffic will actually take.. And you're worried about efficiency?

-

John as written I do not know all answers. Know that!

- however it is for sure that the NAS seems to prefer 9014 at trunk level above 9000 (I tested); The NIC in PC, NAS and pfSense are all ConnectX4 Lx. And the default frame values for that nic are higher than 1500 and 9000.

- I do know exactly the route of the lagg

- I do not know why windows 'send-frames' are not the expected 9000 nor that I know how to change that

- the disks at both sides, PC and NAS (truenas scale), are NVMe drives. It is for sure that the NAS can send at 10GbE and the PC can write at 10GbE (see NAS to PC)

- I do not know why not in the opposite. However the smaller frame could be one of the reasons. That is the reason that I searched, but not found a way to change that.

- MTU is not always the same MTU, and not the same as L2-MTU. An MTU of 9000 probably does not imply a net transport capacity of 9000; probably 'some' bytes fewer, needed for overhead. And on L2 the frame needs to be bigger, again due to overhead. I assume that this is not new to you. But it means that it is not always clear what is meant by MTU.

- pfSense hardly has, not to say does not have, an L2 interface GUI. I think there are bugs in pfSense related to L2 handling (as related to laggs). See my experiences.

- the trace was started before the lost frames did occur and it does occur multiple times,

- I assume the length as displayed by WireShark is the L2 MTU-size. The traces are made on the PC. I think that I am going to make traces on the NAS side using pfSense to check what happens there.
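The "some bytes less" remark about MTU versus net payload can be made concrete. A minimal sketch, assuming IPv4 and TCP headers without options (20 bytes each) and an 8-byte ICMP header; the function names are hypothetical. TCP timestamps or IPv6 would reduce the payload further.

```python
IPV4_HEADER = 20  # IPv4 header without options
TCP_HEADER = 20   # TCP header without options
ICMP_HEADER = 8   # ICMP echo header


def tcp_mss(mtu):
    """Largest TCP payload that fits in one packet of the given MTU."""
    return mtu - IPV4_HEADER - TCP_HEADER


def max_ping_payload(mtu):
    """Largest ICMP echo payload that fits without fragmentation."""
    return mtu - IPV4_HEADER - ICMP_HEADER


for mtu in (1500, 9000):
    print(mtu, tcp_mss(mtu), max_ping_payload(mtu))
```

Under these assumptions an MTU of 9000 carries at most 8960 bytes of TCP data per packet, and a non-fragmenting ping with an 8972-byte payload is a common way to check that every hop really accepts 9000.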

-

@louis2 said in Trouble with configuring Jumbo frames :(:

I do know exactly the route of the lagg

Not what I meant - when you throw a bunch of vlans on a lagg - you really have no control over what physical wire the traffic will flow over..

you could be up down on the same wire (hairpin), or you could be up wire 1 and down wire 2..

No the len is not the mtu size. How would you think that, it is well over your 9k jumbo

And where is the baseline of 1500?

-

John, I will do a baseline 1500 test (promised!) , however I plan to do that after understanding ^completely^ what happens in the actual (jumbo) setup.

Which is not so easy ..

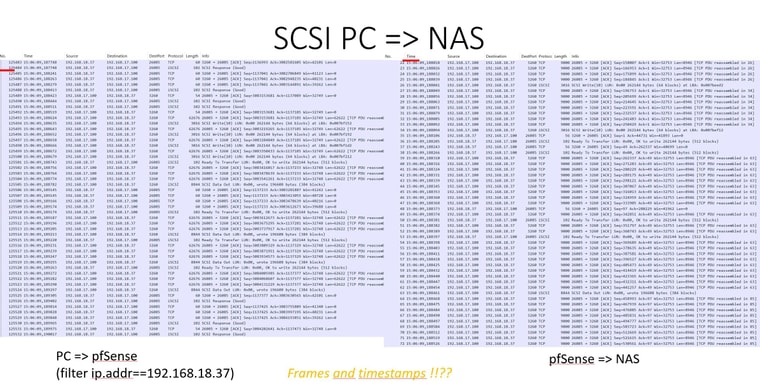

I did also make a wireshark trace of the traffic between NAS and pfSense and that seems not so 1:1 as I did expect. So one of the things I plan to do is to trace at the two sides of the firewall at the same time and compare them.

I also would like to understand what is causing the difference in transfer speed between NAS => PC and PC => NAS and why the PC is using significant smaller frames (and how to change that).

More issues like:

- why am I losing packets !? And is that at both sides of the FW?

(using wireshark at the PC, and probably also the pfSense capture, is affecting / slowing down the transfer speed)

- and the very doubtful way to set up the frame/MTU size at pfSense

-

I did make a parallel trace of the frames at both sides (NAS side and PC side) of the firewall. The NAS trace using pfSense, the PC trace using wireshark.

You can see that the frames at both sides are nowhere near the same. I am not yet sure what to think about that and how to improve that.

Hereby I share the captures (as picture)

-

John,

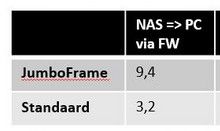

I am still trying to improve the windows behavior, but here is the speed test I did from NAS to PC.

With my actual PC NIC (ConnectX4) settings (jumbo 9000, send and receive buffers 2048 and RoCE on 4096, the rest default / on as far as possible) the figures are as follows:

- NAS => PC using jumbo frames via pfSense 9.3 Gbit/s

- NAS => PC using 1500 frames via pfSense 6.8 Gbit/s

- NAS => PC using 1500 frames same vlan 8.8 Gbit/s

So the conclusion seems to be:

- moderate gain for a same lan connection

- big gain with the firewall involved
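One possible factor behind a larger gain on the routed path is the number of packets a router has to handle per second. This is only a back-of-the-envelope sketch, not a diagnosis: it assumes untagged frames at full 10 Gbit/s line rate with 38 bytes of per-frame overhead on the wire (14 header, 4 FCS, 8 preamble, 12 inter-frame gap), and the function name is hypothetical.

```python
LINE_RATE_BPS = 10_000_000_000  # 10 Gbit/s link
WIRE_OVERHEAD = 14 + 4 + 8 + 12  # header + FCS + preamble + inter-frame gap


def packets_per_second(mtu, line_rate_bps=LINE_RATE_BPS):
    """Full-size frames per second that fit on the link at line rate."""
    bits_per_frame = (mtu + WIRE_OVERHEAD) * 8
    return line_rate_bps / bits_per_frame


pps_standard = packets_per_second(1500)
pps_jumbo = packets_per_second(9000)
print(f"1500: {pps_standard:,.0f} pps")
print(f"9000: {pps_jumbo:,.0f} pps")
print(f"ratio: {pps_standard / pps_jumbo:.2f}")
```

Under these assumptions a device forwarding full-size 1500-byte frames at line rate handles roughly six times as many packets per second as with 9000-byte frames. Whether that is what limits this particular firewall is not shown by the numbers above.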

Note here that the setup of my firewall is significantly above average !!

Intel(R) Core(TM) i5-6600K CPU @ 3.50GHz

Current: 3602 MHz, Max: 3500 MHz

4 CPUs : 1 package(s) x 4 core(s)

AES-NI CPU Crypto: Yes (active)

IPsec-MB Crypto: Yes (inactive)

QAT Crypto: No

-

@louis2 and can your PC even write at 850MBps (your 6.8Gbps), let alone your nas at 1100MBps?

Did you do this test over your lagg - where for all you know your traffic is hairpinning when your nas sends via route, and clearly not when on the same network?

From your traces you're offloading on your pc, and not on pfsense. That is why you see the large size on your pc side and 9000 on your pfsense side. Where was the test from PC to nas with 1500 and jumbo?

Do a sniff where your pc traffic comes into pfsense. You're sure not going to see 62k size.
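Why a capture on the sending PC shows sizes far above the MTU while the wire does not can be illustrated by splitting one large offloaded segment into MSS-sized pieces. A minimal sketch, assuming IPv4/TCP without options (40 bytes of headers) and taking 62000 bytes as a hypothetical round figure for the "62k" mentioned above; the function name is made up.

```python
def wire_segments(offload_bytes, mtu, headers=40):
    """Split one large TCP segment into the payload sizes sent on the wire."""
    mss = mtu - headers  # TCP payload per packet, no IP/TCP options assumed
    full, rest = divmod(offload_bytes, mss)
    return [mss] * full + ([rest] if rest else [])


# Hypothetical 62000-byte segment handed to the NIC by the OS.
jumbo = wire_segments(62000, 9000)
standard = wire_segments(62000, 1500)
print(len(jumbo), "packets at MTU 9000, largest payload", max(jumbo))
print(len(standard), "packets at MTU 1500, largest payload", max(standard))
```

A capture taken on the sender before the NIC does this split sees the single large segment; a capture taken on the receiving side of the wire sees the individual packets.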

I would take the lagg out of the equation and use 2 different physical wires for your 2 networks for your 1500 vs jumbo tests.

For the difference between 6.8 and 9.3 to matter, your devices would need to be able to sustain read/write at those speeds to and from their disks.. At 9.3 you're talking about sustained read/write over 1100MBps
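The disk figures quoted here follow directly from the link speeds. A short sketch of the conversion, assuming decimal units (1 Gbit = 1000 Mbit, 8 bits per byte) and ignoring protocol overhead; the function name is hypothetical.

```python
def gbps_to_mbytes_per_s(gbps):
    """Convert Gbit/s to MByte/s using decimal units and 8 bits per byte."""
    return gbps * 1000 / 8


for rate in (6.8, 8.8, 9.3):
    print(f"{rate} Gbit/s is about {gbps_to_mbytes_per_s(rate):.0f} MB/s")
```

So 6.8 Gbit/s needs about 850 MB/s of sustained disk throughput and 9.3 Gbit/s needs more than 1100 MB/s, which is why a disk-free tool such as iperf is the cleaner baseline.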

-

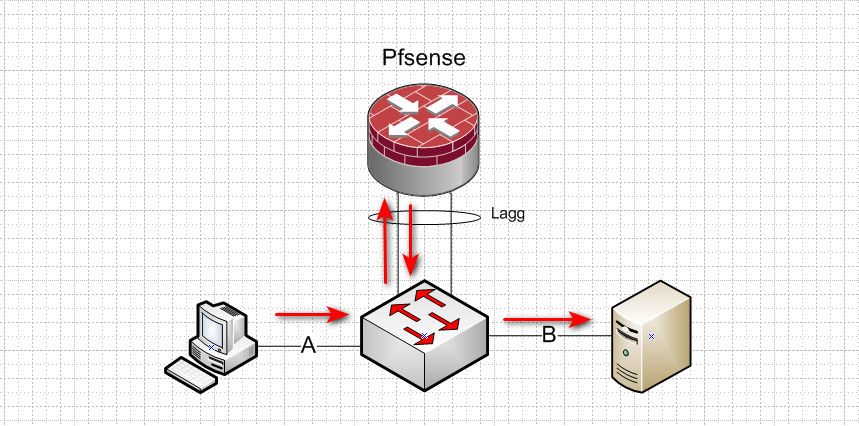

John, I will make a small drawing tomorrow. However, my test setup is quite simple:

My PC and NAS are equipped with NVMe SSDs

The NIC has two (fiber) ports.

port-1 is connected via the normal route vlan => pfsense => NAS

or in more detail

PC => 10G-switch

=> 10G lagg to pfSense

=> back to 10G-switch

=> to the NAS on the 10G-switch

=> 10G lagg to pfSense

=> back to 10G switch

=> 10G-switch to PC

Port-2 is connected to a small 10G-switch

small 10G switch to 10G-switch

to the NAS

back to the small 10G switch

back to the PC

All on the same GreenZone Vlan

For the test I did enable NIC port-1 for the tests via pfsense

and for the other test I enable port-2

For the 1500 test I changed the 9000 mtu in the following way

pfSense

- trunk on pfSense still 9000

- mtu for greenzone 9000 => 1500

NAS

- trunk still 9000 (I think I did test with both 9000 and 1500)

- greenzone mtu back to 1500

PC

- NIC MTU to 1514 (default value)

-

To be sure

- one capture was made using wireshark on the PC and

- in parallel I used the capture on pfsense to capture the traffic towards the NAS

-

Here the setup and results from my jumbo frame test.

The differences between jumbo and standard are significantly bigger than before. I do not know why.

The behavior when sending data from PC to NAS is by far not as smooth and stable as from NAS to PC. I tried to find the best MTU setting for PC and NAS (on trunk level!!). I can not change that on pfSense.

Especially the PC profits strongly from an MTU a bit bigger than 9000.

The application frames at the NAS and pfSense were 1500 and 9000 all the time; I can not control that on the PC.

As before I do not understand everything, especially as related to the behavior of the Windows 11 PC.

-

you are still on a lagg - you have no idea if that hairpins or not..

take the lagg out of the equation on both your nas and your pfsense. Are disks involved in this test? Take them out - use just iperf.

Using different uplinks for each network (a or b) prevents hairpin.

When you lagg you don't actually know what physical interface is being used.. You could end up with this

Lags are great when you have lots of different devices talking to lots of other devices, and you want redundancy if one of the connections fails, or more bandwidth in the total pipe that multiple clients talking to multiple other devices can share. But when you throw a bunch of vlans on a lag, and you have intervlan traffic that routes over the same lag, you are not going to be sure it doesn't hairpin. Which, depending, might not matter so much if say your lagg connection was 10ge and your devices' connections were only 1ge.. But when you want to make sure that you're not sharing the same physical connection for both directions of the traffic for optimal speed, lagg is not the way to go, because you have no actual control over which path traffic will take.

Same goes for just 1 single connection, ie a trunk port that has multiple vlans on it - and you have intervlan traffic - you're going to hairpin.
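The "no control over the physical path" point comes from how a lagg picks a member port: each sending device hashes some header fields of the flow and uses the result to choose a wire. The sketch below is purely illustrative. It uses CRC32 as a stand-in hash, which is an assumption and NOT the algorithm used by FreeBSD's lagg or by the switch, and the addresses, ports and function name are hypothetical.

```python
import zlib


def pick_port(device, src_ip, dst_ip, src_port, dst_port, n_ports=2):
    """Illustrative flow hash: NOT the real lagg or switch algorithm."""
    key = f"{device}|{src_ip}|{dst_ip}|{src_port}|{dst_port}".encode()
    return zlib.crc32(key) % n_ports


# Hypothetical addresses and ports for one routed PC -> NAS flow.
flow = ("192.168.10.20", "192.168.20.30", 50000, 445)

# Leg 1: the switch picks a lagg member to reach pfSense.
up = pick_port("switch", *flow)
# Leg 2: pfSense picks a lagg member to send the routed packet back.
down = pick_port("pfsense", *flow)

print("up wire:", up, "down wire:", down, "hairpin:", up == down)
```

The point of the sketch is only that each end decides independently and deterministically per flow, so whether both legs share one wire depends on the hash inputs rather than on anything the operator sets per transfer.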

-

John I really really do not understand your hairpin / lagg issue !!!

In case the pc and the nas are on a different vlan, the traffic will pass pfSense.

And that implies an upstream and a downstream, both through the lagg. And IMHO it is not so interesting which physical path of the lagg they take.

So you are correct the uplink and the down stream could take the same physical path. But each physical path is 10G-up and 10G down. And since stream-1 is almost 100% up and the other stream is almost 100% down they are not eating each others bandwidth away. So in the worst case scenario the lagg capacity equals the capacity of one 10G-connection.

The NAS connection is 10G just as all other connections. So the maximum traffic the NAS can generate is 10 Gbit.

Being the sum to all other vlans. And if those are other vlans they will all pass pfSense. So what !!??

In case the traffic stays on the same vlan, the traffic will not pass pfSense at all, so not passing the lagg at all.

For info, at this moment I use the L2/L3/L4 hash algorithm at the pfSense side. At the switch side (SX3008F) I use source and destination IP. I could reserve one path for the NAS vlan and the other path for all other vlans, but if that would be an improvement ... I doubt.

So what is the problem !!!!!! ??????

Louis

-

@louis2 These are the only 2 machines talking to each other at the same time? Then it isn't a problem, but your acks are going to go on the same wire as well now.. So you would never be able to see full throughput, even if the loss is that small.

You're talking about an optimization with jumbo, but then are not caring about your overall bandwidth being reduced.

What if you have machines C and D talking to each other on a completely different vlans - but they share the same wire now. Or could be.

If you're happy with your setup.. Have at it.

All of that aside - you still haven't shown that your disks can read/write at the extra throughput jumbo could bring.. If the disks can not write/read even bandwidth X (standard 1500).. Does it make any sense to complex up the network with jumbo to gain that extra speed jumbo could provide?

There is no freaking way jumbo gives you this sort of boost

You have something else going on there.. If you are only seeing 3.2 on 1500, and 9.4 on jumbo.
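A rough upper bound on what jumbo frames can gain from reduced header overhead alone supports that doubt. This sketch assumes untagged Ethernet with 38 bytes of per-frame wire overhead and IPv4/TCP headers without options; the function name is hypothetical, and it deliberately ignores per-packet CPU cost, offloads and disk speed.

```python
WIRE_OVERHEAD = 14 + 4 + 8 + 12  # header + FCS + preamble + inter-frame gap
IP_TCP_HEADERS = 20 + 20         # IPv4 + TCP, no options assumed


def wire_efficiency(mtu):
    """Fraction of the bits on the wire that are TCP payload."""
    return (mtu - IP_TCP_HEADERS) / (mtu + WIRE_OVERHEAD)


eff_standard = wire_efficiency(1500)
eff_jumbo = wire_efficiency(9000)
print(f"1500: {eff_standard:.4f}, max goodput {10 * eff_standard:.2f} Gbit/s")
print(f"9000: {eff_jumbo:.4f}, max goodput {10 * eff_jumbo:.2f} Gbit/s")
print(f"gain from framing alone: {(eff_jumbo / eff_standard - 1) * 100:.1f} %")
```

Framing overhead by itself accounts for only a few percent, so a jump from 3.2 to 9.4 has to come from somewhere else in the path.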