DNS resolver exiting when loading pfblocker 25.03.b.20250409.2208

-

Yeah, let me see if I can get any equivalent data from an ice NIC system.

-

@stephenw10 said in DNS resolver exiting when loading pfblocker 25.03.b.20250409.2208:

Yeah, let me see if I can get any equivalent data from an ice NIC system.

Still plagued by this and really wondering about the new ice driver. If these timestamps are accurate then the kernel shows these link 'failures' to be less than 50µs. I would have thought the fault mask alone would be more generous than that:

    2025-06-04 22:22:00.694984+01:00 check_reload_status 678 Linkup starting ice0.1003
    2025-06-04 22:22:00.690120+01:00 check_reload_status 678 Linkup starting ice0
    2025-06-04 22:22:00.686014+01:00 kernel - ice0.1003: link state changed to UP
    2025-06-04 22:22:00.685997+01:00 kernel - ice0: link state changed to UP
    2025-06-04 22:22:00.685977+01:00 kernel - ice0: Link is up, 10 Gbps Full Duplex, Requested FEC: None, Negotiated FEC: None, Autoneg: False, Flow Control: None
    2025-06-04 22:22:00.685955+01:00 kernel - ice0.1003: link state changed to DOWN
    2025-06-04 22:22:00.685927+01:00 kernel - ice0: link state changed to DOWN
    2025-06-04 22:22:00.601736+01:00 check_reload_status 678 Linkup starting ice0.1003
    2025-06-04 22:22:00.596914+01:00 check_reload_status 678 Linkup starting ice0

I've ordered another DAC as most of mine are ipolex, so I'm going to try a different brand, just in case I have both 1 bad cable plus poor compatibility with an entire DAC brand. Not likely but worth a try I guess.
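For anyone who wants to check the timing from the log rather than by eye, here is a minimal Python sketch. It only uses the three ice0 kernel lines quoted above; the helper names (`parse`, `gap_us`) are mine, and it assumes the syslog timestamps reflect when the kernel logged the message, which is not necessarily when the hardware event happened.

```python
from datetime import datetime, timedelta

# The three kernel lines for ice0 copied from the log above, oldest first.
LOG = """\
2025-06-04 22:22:00.685927+01:00 kernel - ice0: link state changed to DOWN
2025-06-04 22:22:00.685977+01:00 kernel - ice0: Link is up, 10 Gbps Full Duplex, Requested FEC: None, Negotiated FEC: None, Autoneg: False, Flow Control: None
2025-06-04 22:22:00.685997+01:00 kernel - ice0: link state changed to UP
"""

def parse(line):
    """Split one log line into (timestamp, message)."""
    date, time, message = line.split(" ", 2)
    return datetime.fromisoformat(f"{date} {time}"), message

def gap_us(earlier, later):
    """Gap between two timestamps in whole microseconds."""
    return (later - earlier) // timedelta(microseconds=1)

events = [parse(line) for line in LOG.splitlines()]
down = next(ts for ts, msg in events if "changed to DOWN" in msg)
link_up = next(ts for ts, msg in events if "Link is up" in msg)
up = next(ts for ts, msg in events if "changed to UP" in msg)

down_to_linkup = gap_us(down, link_up)
down_to_up = gap_us(down, up)
print(f"DOWN -> 'Link is up': {down_to_linkup} us")
print(f"DOWN -> UP:           {down_to_up} us")
```

On those lines it works out to 50 µs from DOWN to the "Link is up" message and 70 µs from DOWN to UP, so either way the whole "outage" is well under a tenth of a millisecond.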

I also wondered why the above shows Autoneg: False when auto negotiation is enabled and does correctly negotiate to 10 GbE full-duplex. If I fix the speed at 10 GbE (i.e. Autoselect Off on pfSense) it shows the same result.

    [25.03-BETA]/root: ifconfig -mvvvv ice0
    ice0: flags=1008843<UP,BROADCAST,RUNNING,SIMPLEX,MULTICAST,LOWER_UP> metric 0 mtu 9000
        description: LAN
        options=4e100bb<RXCSUM,TXCSUM,VLAN_MTU,VLAN_HWTAGGING,JUMBO_MTU,VLAN_HWCSUM,VLAN_HWFILTER,RXCSUM_IPV6,TXCSUM_IPV6,HWSTATS,MEXTPG>
        capabilities=4f507bb<RXCSUM,TXCSUM,VLAN_MTU,VLAN_HWTAGGING,JUMBO_MTU,VLAN_HWCSUM,TSO4,TSO6,LRO,VLAN_HWFILTER,VLAN_HWTSO,NETMAP,RXCSUM_IPV6,TXCSUM_IPV6,HWSTATS,MEXTPG>
        ether 3c:xx:xx:xx:xx:dc
        inet 10.0.1.1 netmask 0xffffff00 broadcast 10.0.1.255
        inet6 fe80::xxxx:xxxx:xxxx:ebdc%ice0 prefixlen 64 scopeid 0x6
        inet6 fe80::1:1%ice0 prefixlen 64 scopeid 0x6
        inet6 2a02:xxx:xxxxx:1:xxxx:xxxx:xxxx:ebdc prefixlen 64
        media: Ethernet autoselect (10Gbase-Twinax <full-duplex>)
        status: active
        supported media:
            media autoselect
            media 10Gbase-Twinax
        nd6 options=21<PERFORMNUD,AUTO_LINKLOCAL>
        drivername: ice0
        plugged: SFP/SFP+/SFP28 Unknown (Copper pigtail)
        vendor: ipolex PN: SFP-H10GB-CU1M SN: WTS11J72204 DATE: 2019-07-24

    /root: dmesg | grep ice0
    ice0: <Intel(R) Ethernet Connection E823-L for SFP - 1.43.2-k> mem 0xf0000000-0xf7ffffff,0xfa010000-0xfa01ffff at device 0.0 numa-domain 0 on pci11
    ice0: Loading the iflib ice driver
    ice0: The DDP package was successfully loaded: ICE OS Default Package version 1.3.41.0, track id 0xc0000001.
    ice0: fw 5.5.17 api 1.7 nvm 2.28 etid 80011e36 netlist 0.1.7000-1.25.0.f083a9d5 oem 1.3200.0
    ice0: Using 8 Tx and Rx queues
    ice0: Reserving 8 MSI-X interrupts for iRDMA
    ice0: Using MSI-X interrupts with 17 vectors
    ice0: Using 1024 TX descriptors and 1024 RX descriptors
    ice0: Ethernet address: 3c:xx:xx:xx:xx:dc
    ice0: ice_add_rss_cfg on VSI 0 could not configure every requested hash type
    ice0: Firmware LLDP agent disabled
    ice0: link state changed to UP
    ice0: Link is up, 10 Gbps Full Duplex, Requested FEC: None, Negotiated FEC: None, Autoneg: False, Flow Control: None
    ice0: netmap queues/slots: TX 8/1024, RX 8/1024
    ice0: Module is not present.
    ice0: Possible Solution 1: Check that the module is inserted correctly.
    ice0: Possible Solution 2: If the problem persists, use a cable/module that is found in the supported modules and cables list for this device.
    ice0: link state changed to DOWN
    ice0: link state changed to UP
    ice0: Link is up, 10 Gbps Full Duplex, Requested FEC: None, Negotiated FEC: None, Autoneg: False, Flow Control: None
    vlan0: changing name to 'ice0.1003'
    ice0: Media change is not supported.
    ice0: Media change is not supported.
    ice0: Media change is not supported.
    nd6_dad_timer: called with non-tentative address fe80:d::1:1(ice0.1003)
    ice0: link state changed to DOWN
    ice0.1003: link state changed to DOWN
    ice0: Link is up, 10 Gbps Full Duplex, Requested FEC: None, Negotiated FEC: None, Autoneg: False, Flow Control: None
    ice0: link state changed to UP
    ice0.1003: link state changed to UP
    ice0: Media change is not supported.
    ice0: Media change is not supported.
    [25.03-BETA]/root:

-

@RobbieTT said in DNS resolver exiting when loading pfblocker 25.03.b.20250409.2208:

I also wondered why the above shows Autoneg: False when auto negotiation is enabled and does correctly negotiate

Hmm, that is odd.

Yes, I agree: the timing doesn't look like a real link event, which does point to a driver issue. So far I haven't found any of our devices throwing the same logs. I'll keep looking.

-

@RobbieTT said in DNS resolver exiting when loading pfblocker 25.03.b.20250409.2208:

If these timestamps are accurate then the kernel shows these link 'failures' to be less than 50µs

Even the basic physics of Ethernet (especially 10GBASE-SR/LR) would react more slowly than that. It's about 1 second to go down and 1 second to come up, not microseconds.

-

@w0w said in DNS resolver exiting when loading pfblocker 25.03.b.20250409.2208:

Even the basic physics...

Begad, even the laws of physics are against me!

I need to tend to my infinite improbability drive.

-

@RobbieTT said in DNS resolver exiting when loading pfblocker 25.03.b.20250409.2208:

Begad, even the laws of physics are against me!

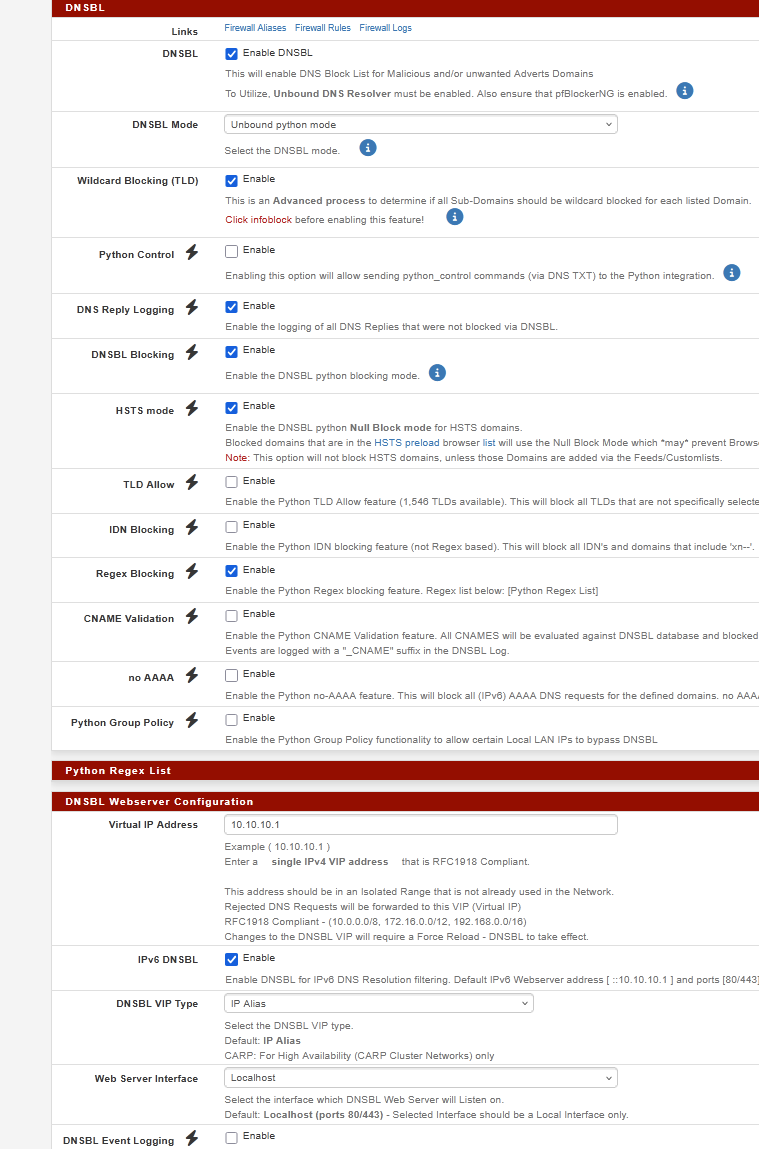

What about the power-saving settings for the CPU and the network card? For the CPU, you can check and adjust it to maximum performance in the advanced settings of pfSense for testing purposes. As for the network card, it should be possible to set dev.ice.X.power_save=0 in the system tunables, where X is the port number. However, I'm not sure if this actually works. In theory, this should eliminate glitches with interrupts on the bus if they are, for example, related to power-saving features.

-

A good thought but no NIC involved for these particular links (SFP28), they are direct to the CPU.

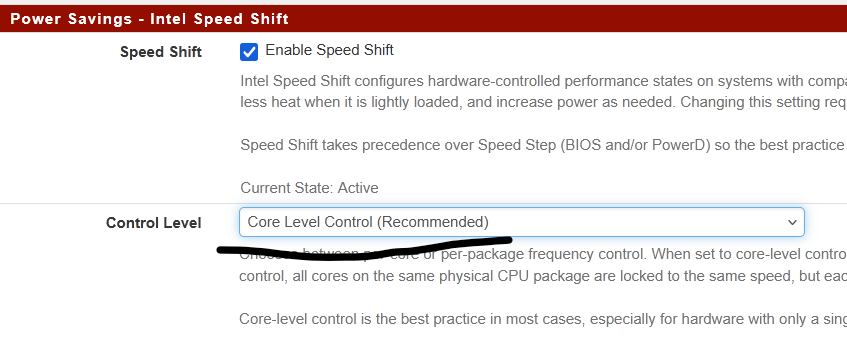

No PowerD in use either - core level Speed Shift capable and enabled in pfSense.

-

Hmm, a change in the default eee setting or similar might present like this. Though I don't see anything likely in the driver history, especially since 24.11.

-

@RobbieTT

I noticed a difference in how SpeedShift behaved between versions 24.11 and 25.03. Maybe it’s just a coincidence and the reason lies elsewhere, but it could also be due to changes in the default SpeedShift settings between the versions. It could also be some kernel changes affecting it.

You can list most of the settings by issuing this command:

    sysctl -a | grep -E 'hwp|epp|dev.cpu.0.(freq|cx)'

Ideally, do it on both systems and then you can compare them.
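If you save the output from each version to a file, comparing them by hand gets tedious, so here is a rough Python sketch of one way to do it. The function names are my own, and the two sample dumps at the bottom are invented values purely to exercise the code, not readings from any real system. It assumes the usual one-line `name: value` sysctl format and ignores anything that doesn't match it.

```python
def parse_sysctl(text):
    """Turn 'name: value' lines from sysctl output into a dict."""
    values = {}
    for line in text.splitlines():
        name, sep, value = line.partition(": ")
        if sep:  # skip lines that are not in 'name: value' form
            values[name.strip()] = value.strip()
    return values

def diff_sysctl(old_text, new_text):
    """Return {name: (old, new)} for every OID whose value differs.

    An OID present on only one side shows up with None for the other.
    """
    old, new = parse_sysctl(old_text), parse_sysctl(new_text)
    return {
        name: (old.get(name), new.get(name))
        for name in sorted(old.keys() | new.keys())
        if old.get(name) != new.get(name)
    }

# Made-up sample dumps for illustration only.
OLD = "machdep.hwpstate_pkg_ctrl: 0\ndev.cpu.0.cx_lowest: C1\n"
NEW = "machdep.hwpstate_pkg_ctrl: 1\ndev.cpu.0.cx_lowest: C1\n"

if __name__ == "__main__":
    for name, (before, after) in diff_sysctl(OLD, NEW).items():
        print(f"{name}: {before} -> {after}")
```

In practice you would read the two saved files instead of the `OLD` and `NEW` strings.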

-

On my system I've found that machdep.hwpstate_pkg_ctrl is 0 on 24.11 and 1 on 25.03. I think this is it.

I don't remember if I changed this setting or not; probably not, but I'm not sure.

-

That default hasn't changed as far as I know. I really wouldn't expect it to make any difference to a driver from switching that though.

-

@stephenw10

Yep, it is possible that I have changed it.

And regarding this "new" ice behaviour, I don't know, did some kernel options change in between?

-

Seems like it must be something like that. I can't see any driver changes that could present like this directly.

Though perhaps it could be something in the SFP module since I've nothing on our 8300 test boxes that also use ice(4) NICs.

-

@w0w said in DNS resolver exiting when loading pfblocker 25.03.b.20250409.2208:

@stephenw10

Yep, it is possible that I have changed it.

On mine:

    machdep.hwpstate_pkg_ctrl: 0

So no difference between versions on my systems.

-

@stephenw10 said in DNS resolver exiting when loading pfblocker 25.03.b.20250409.2208:

Seems like it must be something like that. I can't see any driver changes that could present like this directly.

Though perhaps it could be something in the SFP module since I've nothing on our 8300 test boxes that also use ice(4) NICs.

Replaced this ipolex SFP+ DAC:

    drivername: ice0
    plugged: SFP/SFP+/SFP28 Unknown (Copper pigtail)
    vendor: ipolex PN: SFP-H10GB-CU1M SN: WTS11J72204 DATE: 2019-07-24

With a freshly purchased 10Gtek one:

    drivername: ice0
    plugged: SFP/SFP+/SFP28 Unknown (Copper pigtail)
    vendor: OEM PN: CAB-10GSFP-P1M SN: CSC241010630178 DATE: 2024-10-22

It didn't change anything and the issue remains. I wasn't really expecting a difference as I had been through my stock of SFP+ DACs but best to be sure I guess.

So far the only way to stop the issue is to revert to 24.11 and below. The problem only manifests itself with 25.03b.

-

kern.ipc.tls.enable: 1 on 25.03

I doubt that this option has any real effect, but for now it's the only difference I can see in the kernel. At the very least, it can be used by both the ice driver and the kernel itself.

-

@stephenw10 said in DNS resolver exiting when loading pfblocker 25.03.b.20250409.2208:

Seems like it must be something like that. I can't see any driver changes that could present like this directly.

Do we still need to add ice_ddp_load="YES" to the loader.conf.local file, or are we done with that tuneable?

I don't have it added but I presumed all is well given that the system shows that it is loaded:

    ice0: <Intel(R) Ethernet Connection E823-L for SFP - 1.43.2-k> mem 0xf0000000-0xf7ffffff,0xfa010000-0xfa01ffff at device 0.0 numa-domain 0 on pci11
    ice0: Loading the iflib ice driver
    ice0: The DDP package was successfully loaded: ICE OS Default Package version 1.3.41.0, track id 0xc0000001.
    ice0: fw 5.5.17 api 1.7 nvm 2.28 etid 80011e36 netlist 0.1.7000-1.25.0.f083a9d5 oem 1.3200.0

-

I'm not sure if this unbound error is relevant, but it appears around the time of these events:

    php-fpm 16215 /rc.newwanipv6: The command '/usr/local/sbin/unbound -c /var/unbound/unbound.conf' returned exit code '1', the output was '[1749405403] unbound[41019:0] error: bind: address already in use [1749405403] unbound[41019:0] fatal error: could not open ports'

Looking at sockets:

    IPv4 System Socket Information
    USER  COMMAND  PID    FD  PROTO  LOCAL  FOREIGN
    root  php-fpm  16215  4   udp4   *:*    *:*

    IPv6 System Socket Information
    USER  COMMAND  PID    FD  PROTO  LOCAL  FOREIGN
    root  php-fpm  16215  5   udp6   *:*    *:*

It's not an area I am familiar with.

-

No, that's ugly but shouldn't be an issue. It tries to start Unbound too rapidly while it's already running. That should not stop it.
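For anyone not familiar with that error, this is the general mechanism rather than anything specific to Unbound or pfSense: a second socket asking for an address that another socket already holds gets "address already in use". A small Python sketch that reproduces it on the loopback interface (the function name is just for illustration, and it assumes neither socket sets a reuse option such as SO_REUSEPORT):

```python
import errno
import socket

def second_bind_errno():
    """Bind one UDP socket, then try to bind a second to the same address.

    Returns the errno raised by the second bind, or None if it
    unexpectedly succeeds.
    """
    first = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    second = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        first.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
        address = first.getsockname()
        try:
            second.bind(address)      # same address and port as the first socket
        except OSError as exc:
            return exc.errno
        return None
    finally:
        first.close()
        second.close()

if __name__ == "__main__":
    err = second_bind_errno()
    print(errno.errorcode.get(err, err))
```

The first socket carries on working; only the late arrival fails, which matches the running instance being unaffected.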

-

Not grabbing the bunting just yet but the short-lived 0606 beta booted ok and without all the noise about the interface allegedly having issues. It did have 1 cycle of reacting to a non-existent interface issue when running but the improvement at boot was quite a change.

Now running the 0610 beta and still no issues at boot and no false interface issues for 30 hours+.

Did a bug get caught and shot?