[solved] 25.03.b.20250610.1659 re-enabling limiters leads to syslog kernel messages "update_fs ..."

-

I recently re-enabled limiters on some interfaces after running without them for a long time, and I noticed these messages in the syslog. Do they indicate a problem, or are they just informational (although they don't really tell me anything)?

2025-06-18 14:40:17.190574+02:00 kernel - update_fs fs 9 for sch 9 not 11 still unlinked
2025-06-18 14:40:17.190543+02:00 kernel - update_fs fs 9 for sch 9 not 65547 still unlinked
2025-06-18 14:40:17.190512+02:00 kernel - update_fs fs 9 for sch 9 not 10 still unlinked
2025-06-18 14:40:17.190481+02:00 kernel - update_fs fs 9 for sch 9 not 65546 still unlinked
2025-06-18 14:40:17.190451+02:00 kernel - update_fs fs 9 for sch 9 not 6 still unlinked
2025-06-18 14:40:17.190419+02:00 kernel - update_fs fs 9 for sch 9 not 65542 still unlinked
2025-06-18 14:40:17.190389+02:00 kernel - update_fs fs 9 for sch 9 not 5 still unlinked
2025-06-18 14:40:17.190357+02:00 kernel - update_fs fs 9 for sch 9 not 65541 still unlinked
2025-06-18 14:40:17.190327+02:00 kernel - update_fs fs 9 for sch 9 not 2 still unlinked
2025-06-18 14:40:17.190293+02:00 kernel - update_fs fs 9 for sch 9 not 65538 still unlinked
2025-06-18 14:40:17.190250+02:00 kernel - update_fs fs 9 for sch 9 not 1 still unlinked
2025-06-18 14:40:17.190150+02:00 kernel - update_fs fs 9 for sch 9 not 65537 still unlinked
2025-06-18 14:38:29.857170+02:00 kernel - update_fs fs 9 for sch 9 not 11 still unlinked
2025-06-18 14:38:29.857132+02:00 kernel - update_fs fs 9 for sch 9 not 65547 still unlinked
2025-06-18 14:38:29.857089+02:00 kernel - update_fs fs 9 for sch 9 not 10 still unlinked
2025-06-18 14:38:29.857048+02:00 kernel - update_fs fs 9 for sch 9 not 65546 still unlinked
2025-06-18 14:38:29.857010+02:00 kernel - update_fs fs 9 for sch 9 not 6 still unlinked
2025-06-18 14:38:29.856971+02:00 kernel - update_fs fs 9 for sch 9 not 65542 still unlinked
2025-06-18 14:38:29.856934+02:00 kernel - update_fs fs 9 for sch 9 not 5 still unlinked
2025-06-18 14:38:29.856895+02:00 kernel - update_fs fs 9 for sch 9 not 65541 still unlinked
2025-06-18 14:38:29.856853+02:00 kernel - update_fs fs 9 for sch 9 not 2 still unlinked
2025-06-18 14:38:29.856812+02:00 kernel - update_fs fs 9 for sch 9 not 65538 still unlinked
2025-06-18 14:38:29.856755+02:00 kernel - update_fs fs 9 for sch 9 not 1 still unlinked
2025-06-18 14:38:29.856643+02:00 kernel - update_fs fs 9 for sch 9 not 65537 still unlinked

Looking at Diagnostics / Limiter Info I can't find a scheduler 9, which might explain why the kernel is moaning?
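For anyone wading through a long run of these messages, here is a minimal sketch that collapses them into one line per flowset. It assumes (my reading of the message text, not something confirmed in this thread) that `fs` is the flowset/queue number, `sch` is the scheduler that flowset refers to, and the number after `not` is a scheduler it was compared against. The function name `summarize_update_fs` is made up for illustration.

```python
import re
from collections import defaultdict

# Matches the message body quoted above, e.g.
# "update_fs fs 9 for sch 9 not 11 still unlinked"
UPDATE_FS = re.compile(r"update_fs fs (\d+) for sch (\d+) not (\d+) still unlinked")


def summarize_update_fs(log_text):
    """Map (flowset, wanted scheduler) -> set of schedulers it was compared against.

    The field meanings are an assumption based on the wording of the message.
    """
    seen = defaultdict(set)
    for fs, wanted, other in UPDATE_FS.findall(log_text):
        seen[(int(fs), int(wanted))].add(int(other))
    return dict(seen)


# Three of the lines from the syslog excerpt above.
sample = """\
2025-06-18 14:40:17.190574+02:00 kernel - update_fs fs 9 for sch 9 not 11 still unlinked
2025-06-18 14:40:17.190543+02:00 kernel - update_fs fs 9 for sch 9 not 65547 still unlinked
2025-06-18 14:40:17.190512+02:00 kernel - update_fs fs 9 for sch 9 not 10 still unlinked
"""

for (fs, wanted), others in summarize_update_fs(sample).items():
    print(f"flowset {fs} wants scheduler {wanted}; compared against {sorted(others)}")
```

If every message names the same `fs`/`sch` pair, as here, that points at a single leftover flowset rather than many separate problems.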

-

There is a queue belonging to a scheduler 9 though (marked HERE below). It looks like it wasn't automatically deleted when the limiter was deleted, which seems like a bug.

How can I manually remove it, now that the limiter it was configured under no longer exists?

Limiter Information

Limiters:
00001: 600.000 Mbit/s 0 ms burst 0
q131073 2000 sl. 0 flows (1 buckets) sched 65537 weight 0 lmax 0 pri 0 droptail
sched 65537 type FIFO flags 0x0 0 buckets 0 active
00002: 600.000 Mbit/s 0 ms burst 0
q131074 2000 sl. 0 flows (1 buckets) sched 65538 weight 0 lmax 0 pri 0 droptail
sched 65538 type FIFO flags 0x0 0 buckets 0 active
00005: 400.000 Mbit/s 0 ms burst 0
q131077 2000 sl. 0 flows (1 buckets) sched 65541 weight 0 lmax 0 pri 0 droptail
sched 65541 type FIFO flags 0x0 0 buckets 0 active
00006: 100.000 Mbit/s 0 ms burst 0
q131078 2000 sl. 0 flows (1 buckets) sched 65542 weight 0 lmax 0 pri 0 droptail
sched 65542 type FIFO flags 0x0 0 buckets 0 active
00010: 600.000 Mbit/s 0 ms burst 0
q131082 2000 sl. 0 flows (1 buckets) sched 65546 weight 0 lmax 0 pri 0 droptail
sched 65546 type FIFO flags 0x0 0 buckets 0 active
00011: 600.000 Mbit/s 0 ms burst 0
q131083 2000 sl. 0 flows (1 buckets) sched 65547 weight 0 lmax 0 pri 0 droptail
sched 65547 type FIFO flags 0x0 0 buckets 0 active

Schedulers:
00001: 600.000 Mbit/s 0 ms burst 0
q65537 50 sl. 0 flows (1 buckets) sched 1 weight 0 lmax 0 pri 0 droptail
sched 1 type FQ_CODEL flags 0x0 0 buckets 1 active
FQ_CODEL target 5ms interval 100ms quantum 1514 limit 10240 flows 1024 ECN
Children flowsets: 1
BKT Prot ___Source IP/port____ ____Dest. IP/port____ Tot_pkt/bytes Pkt/Byte Drp
0 ip 0.0.0.0/0 0.0.0.0/0 4 5744 0 0 0
00002: 600.000 Mbit/s 0 ms burst 0
q65538 50 sl. 0 flows (1 buckets) sched 2 weight 0 lmax 0 pri 0 droptail
sched 2 type FQ_CODEL flags 0x0 0 buckets 1 active
FQ_CODEL target 5ms interval 100ms quantum 1514 limit 10240 flows 1024 ECN
Children flowsets: 2
0 ip 0.0.0.0/0 0.0.0.0/0 2 248 0 0 0
00005: 400.000 Mbit/s 0 ms burst 0
q65541 50 sl. 0 flows (1 buckets) sched 5 weight 0 lmax 0 pri 0 droptail
sched 5 type FQ_CODEL flags 0x0 0 buckets 0 active
FQ_CODEL target 5ms interval 100ms quantum 1514 limit 10240 flows 1024 ECN
Children flowsets: 3
00006: 100.000 Mbit/s 0 ms burst 0
q65542 50 sl. 0 flows (1 buckets) sched 6 weight 0 lmax 0 pri 0 droptail
sched 6 type FQ_CODEL flags 0x0 0 buckets 0 active
FQ_CODEL target 5ms interval 100ms quantum 1514 limit 10240 flows 1024 ECN
Children flowsets: 4
00010: 600.000 Mbit/s 0 ms burst 0
q65546 50 sl. 0 flows (1 buckets) sched 10 weight 0 lmax 0 pri 0 droptail
sched 10 type FQ_CODEL flags 0x0 0 buckets 0 active
FQ_CODEL target 5ms interval 100ms quantum 1514 limit 10240 flows 1024 ECN
Children flowsets: 5 10
00011: 600.000 Mbit/s 0 ms burst 0
q65547 50 sl. 0 flows (1 buckets) sched 11 weight 0 lmax 0 pri 0 droptail
sched 11 type FQ_CODEL flags 0x0 0 buckets 0 active
FQ_CODEL target 5ms interval 100ms quantum 1514 limit 10240 flows 1024 ECN
Children flowsets: 6 11

Queues:
q00001 50 sl. 0 flows (1 buckets) sched 1 weight 0 lmax 0 pri 0 droptail
q00002 50 sl. 0 flows (1 buckets) sched 2 weight 0 lmax 0 pri 0 droptail
q00003 50 sl. 0 flows (1 buckets) sched 5 weight 0 lmax 0 pri 0 droptail
q00004 50 sl. 0 flows (1 buckets) sched 6 weight 0 lmax 0 pri 0 droptail
q00005 50 sl. 0 flows (1 buckets) sched 10 weight 0 lmax 0 pri 0 droptail
q00006 50 sl. 0 flows (1 buckets) sched 11 weight 0 lmax 0 pri 0 droptail
q00009 50 sl. 0 flows (1 buckets) sched 9 weight 0 lmax 0 pri 0 droptail <<=== HERE
q00010 50 sl. 0 flows (1 buckets) sched 10 weight 0 lmax 0 pri 0 droptail
q00011 50 sl. 0 flows (1 buckets) sched 11 weight 0 lmax 0 pri 0 droptail

-

I raised redmine #16275 on this.

... and to manually remove unconnected queues one can use

/sbin/dnctl queue list

followed by

/sbin/dnctl queue delete n

where n is the queue number.
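To work out which queue number to delete without eyeballing the listing, something like the following sketch could help. It assumes you have saved the queue listing and the scheduler listing as text (for example copied from Diagnostics / Limiter Info) and that the lines look like the ones posted above. The function names are hypothetical, and the regular expressions have only been checked against the output format shown in this thread.

```python
import re


def scheduler_ids(sched_listing):
    """Scheduler numbers taken from lines like 'sched 1 type FQ_CODEL flags 0x0 ...'."""
    return {int(n) for n in re.findall(r"\bsched\s+(\d+)\s+type\b", sched_listing)}


def orphaned_queues(queue_listing, sched_listing):
    """Return (queue, scheduler) pairs whose scheduler is missing from the scheduler listing."""
    known = scheduler_ids(sched_listing)
    # Queue lines look like: "q00009 50 sl. 0 flows (1 buckets) sched 9 weight 0 ..."
    pairs = re.findall(
        r"\bq(\d+)\s+\d+\s+sl\.[^\n]*?\bsched\s+(\d+)\s+weight", queue_listing
    )
    return [(int(q), int(s)) for q, s in pairs if int(s) not in known]


# Abbreviated sample in the format posted earlier in the thread.
scheds = """\
sched 1 type FQ_CODEL flags 0x0 0 buckets 1 active
sched 10 type FQ_CODEL flags 0x0 0 buckets 0 active
"""
queues = """\
q00001 50 sl. 0 flows (1 buckets) sched 1 weight 0 lmax 0 pri 0 droptail
q00009 50 sl. 0 flows (1 buckets) sched 9 weight 0 lmax 0 pri 0 droptail
q00010 50 sl. 0 flows (1 buckets) sched 10 weight 0 lmax 0 pri 0 droptail
"""

print(orphaned_queues(queues, scheds))  # [(9, 9)]
```

Each pair is (queue number, missing scheduler number); the first value is the n to pass to the delete command. Double-check against the full listing before deleting anything, since this only does text matching.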

-

Do you have Nexus/MIM enabled?

-

@stephenw10 Nope

-

@stephenw10 additionally, but also relating to Limiters, I'd like to make you aware of this possible regression as noted for 2.8.0: https://forum.netgate.com/topic/197859/2-8-0-limiter-rule-not-honored-on-lan-download-with-multiple-limiters-queues

I saw the same issue in 25.03 the other day, but today, after recreating the limiters yesterday, I get a different result. It is still failing though, just slightly differently, and I need to do some more testing before I can comfortably raise a bug on the issue.

-

@pst

I'm having odd issues with limiters too, and I have not really chased them down yet due to other (recently resolved) issues. My thread is here, and I'm going to look back into it:

https://forum.netgate.com/topic/197395/25-03-beta-bufferbloat-fq-codel-issues?_=1750539387871

-

@RobbieTT good, the more evidence we can gather the better. I don't think it's related to the new if_pppoe though as I don't use that (and neither would the 2.8.0 user in the thread I referenced above).

I plan to do some comparison tests between near default setups of 2.7.2, 2.8.0 and the current 25.03 in the next few days.

-

To summarize the results from the testing I've done so far on 25.03 (June 10 beta):

Without the floating rule for buffer bloat prevention, limiters on LAN are working, both with policy routing and default.

With a floating rule for buffer bloat prevention configured:

- for LANs with policy routing, both UL and DL LAN limiters are disregarded

- for LANs without policy routing, LAN DL limit is disregarded, the LAN UL limit is adhered to

-

How is your floating rule defined?

Are you using if_pppoe?

-

@stephenw10 said in [solved] 25.03.b.20250610.1659 re-enabling limiters leads to syslog kernel messages "update_fs ...":

How is your floating rule defined?

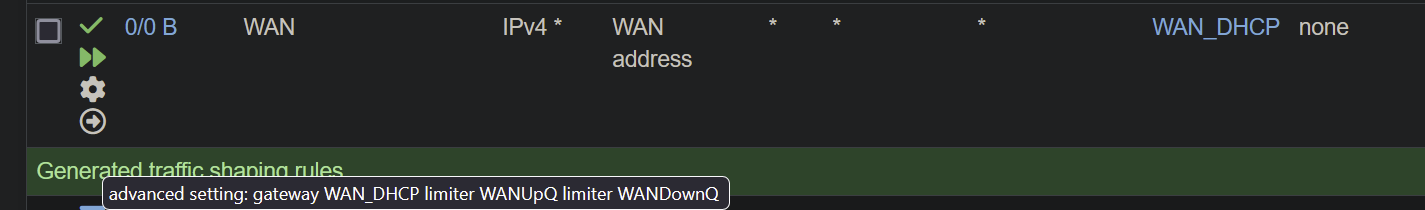

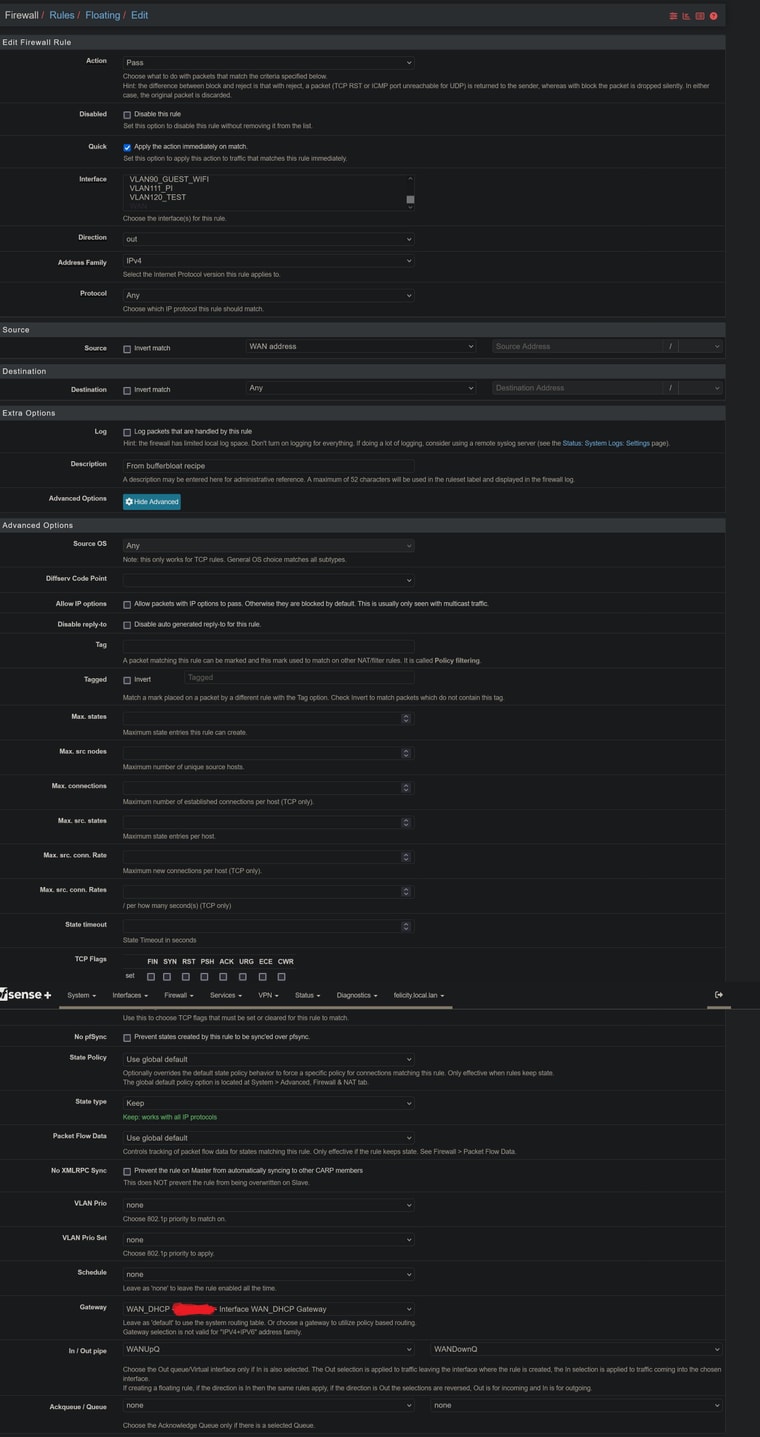

as per the latest Netgate recipe

(ignore the fact the rule's got no states/bytes as it's been deactivated for a few days)

Are you using if_pppoe?

No.

IMHO, the problem resembles, on the surface at least, one from long ago: redmines 13026 and 14039

-

So pass - quick - outbound on WAN only?

Are you using pppoe at all? Or dhcp WAN?

-

@stephenw10 said in [solved] 25.03.b.20250610.1659 re-enabling limiters leads to syslog kernel messages "update_fs ...":

So pass - quick - outbound on WAN only?

yes, here's the rule:

[25.03-BETA][admin@felicity.local.lan]/root: pfctl -sr | grep -i buffer

pass out quick on igb0 route-to (igb0 xxx.xxx.xxx.xx1) inet from xxx.xxx.xxx.xx3 to any flags S/SA keep state (if-bound) label "USER_RULE: From bufferbloat recipe" label "id:1750159398" label "gw:WAN_DHCP" ridentifier 1750159398 dnqueue(2, 1)
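When comparing several rules against the Limiter Info queue list, it can help to pull the interface and the two `dnqueue` numbers out of the `pfctl -sr` output mechanically. This is only a sketch: the helper name `dnqueue_pairs` is made up, it does plain text matching on rule lines shaped like the one above, and it makes no claim about which of the two numbers applies to which direction.

```python
import re


def dnqueue_pairs(rules_text):
    """Return (interface, first queue, second queue) for each rule line with dnqueue(a, b)."""
    found = []
    for line in rules_text.splitlines():
        dn = re.search(r"\bdnqueue\((\d+),\s*(\d+)\)", line)
        if not dn:
            continue
        # The first "on <name>" in a pf rule line is the interface clause.
        iface = re.search(r"\bon\s+(\S+)", line)
        found.append(
            (iface.group(1) if iface else None, int(dn.group(1)), int(dn.group(2)))
        )
    return found


# The rule quoted above, with the addresses masked as in the post.
rule = (
    'pass out quick on igb0 route-to (igb0 xxx.xxx.xxx.xx1) inet from xxx.xxx.xxx.xx3 '
    'to any flags S/SA keep state (if-bound) label "USER_RULE: From bufferbloat recipe" '
    'label "id:1750159398" label "gw:WAN_DHCP" ridentifier 1750159398 dnqueue(2, 1)'
)

print(dnqueue_pairs(rule))  # [('igb0', 2, 1)]
```

Here the rule references queues 2 and 1, which in the listing posted earlier hang off schedulers 2 and 1.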

Are you using pppoe at all? Or dhcp WAN?

no pppoe, only dhcp v4/v6 on WAN

I should mention that I disabled IPv6 during the testing so that it would not interfere. Redmine #16201 mentions that the IPv6 rule needs to look slightly different because there is no NAT involved, so the source will not be the WAN address but rather the client's LAN address (so I skipped IPv6 for now).