VMware ESXi 6.7 increased latency with NIC Passthrough

-

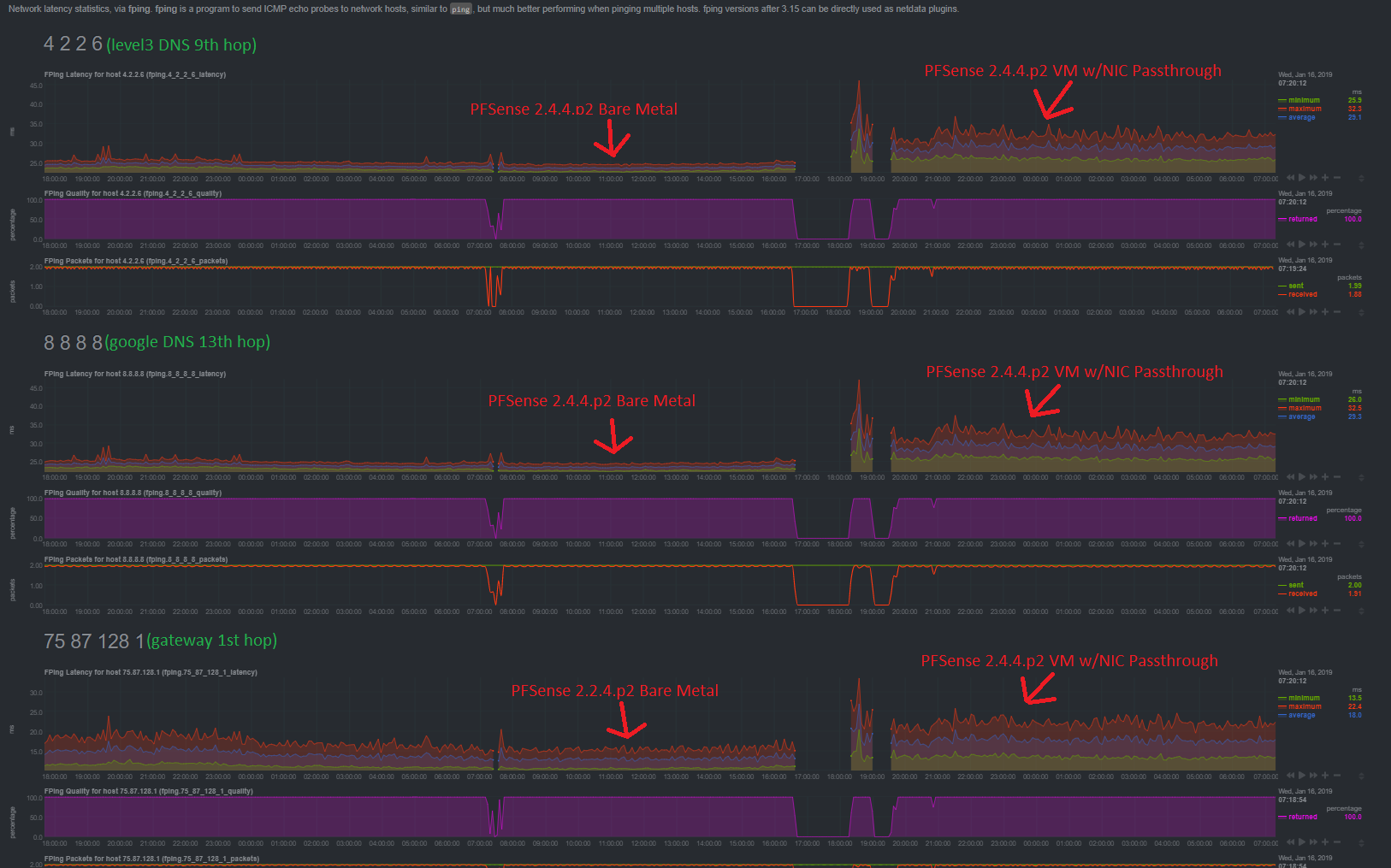

Greetings, I've noticed what I think is a surprising increase in latency when virtualizing pfSense on VMware ESXi 6.7. The hardware is identical for the bare metal deployment and the virtualized deployment. Does anyone else see this much of an increase in latency when virtualizing with NIC passthrough?

Specs:

16GB DDR3 RAM

Intel Celeron J3455 Processor (Quad Core, 1.5 GHz)

Intel I340-T4 Quad Port Gigabit NIC

I've attached an fping/Netdata graph showing the latency increase, both to the gateway and carried through to other traffic leaving the firewall. I realize that virtualization is not free; however, I expected NIC passthrough to eliminate most of the latency that virtualization adds, so I was surprised to see an increase this large.

In all cases shown on the graph, the pfSense box uses the same routes and the same WAN IP address in both the bare metal and VM deployments. The only change on the graphs is the switch from a bare metal 2.4.4p2 install to a virtualized 2.4.4p2 install that uses NIC passthrough for the LAN and WAN interfaces.
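To put a number on a before/after comparison like this one, the median round-trip time from each run can be compared directly. The sketch below assumes the per-host summary format that `fping -C N` prints (`host : t1 t2 - t3`, with `-` marking a lost probe); the function names are hypothetical and the sample strings in the usage lines are made up for illustration, not taken from the graph above.

```python
import statistics


def parse_fping_summary(line: str) -> tuple[str, list[float]]:
    """Parse one 'host : t1 t2 - t3' summary line (assumed fping -C format).

    Splits on the last colon so IPv6 host names survive, and skips
    lost probes ('-'). Returns the host and its RTT samples in ms.
    """
    host, _, samples = line.rpartition(":")
    rtts = [float(tok) for tok in samples.split() if tok != "-"]
    return host.strip(), rtts


def median_delta(bare_metal: str, virtualized: str) -> float:
    """Median RTT of the virtualized run minus the bare metal run, in ms."""
    _, bm = parse_fping_summary(bare_metal)
    _, vm = parse_fping_summary(virtualized)
    # statistics.median raises StatisticsError if every probe was lost.
    return statistics.median(vm) - statistics.median(bm)


# Illustrative, made-up samples for one gateway:
print(median_delta("gw : 0.4 0.5 0.6", "gw : 1.4 - 1.6 1.5"))  # 1.0
```

Using the median rather than the mean keeps a few outlier probes from dominating the comparison.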

-

Just a follow-up here in case anyone in the future runs into issues like this.

I was able to resolve this by changing two settings. First, I set the ESXi host power management policy to High Performance; it defaulted to Balanced. This stopped the CPU clock throttling, which matters here because my CPU is a very low-power 10 W SoC. I did not notice an increase in power usage, and the change helped stabilize the responsiveness of the ESXi host on this low-powered system.

Second, I edited the pfSense VM, went to its advanced options, and set Latency Sensitivity to High. By default, latency sensitivity is set to Normal. With a High setting, the VM's CPU and memory must be reserved before it can power on, so make sure your host has enough resources to support this.
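The reservation requirement is easy to size ahead of time. The sketch below reserves every vCPU at the host's base clock and all configured memory, then emits the corresponding settings. The host figures come from the specs above (4 cores at 1.5 GHz, 16 GB); the VM sizing is hypothetical, and the `sched.*` key names are assumptions based on what commonly appears in ESXi `.vmx` files, so setting these through the Host Client is the safer route. The capacity check is deliberately naive: it ignores ESXi's own memory overhead and any other VMs' reservations.

```python
from dataclasses import dataclass


@dataclass
class Host:
    cores: int
    core_mhz: int  # base clock per core
    memory_mb: int


@dataclass
class VmSize:
    vcpus: int
    memory_mb: int


def full_reservation(vm: VmSize, host: Host) -> dict[str, str]:
    """Return hypothetical .vmx settings for a High latency sensitivity VM.

    Reserves each vCPU at the host's base clock and all configured memory.
    Raises ValueError if the naive capacity check fails.
    """
    if vm.vcpus > host.cores:
        raise ValueError("not enough physical cores to reserve")
    if vm.memory_mb > host.memory_mb:
        raise ValueError("not enough memory to reserve")
    return {
        # Assumed key names; verify against your own VM's .vmx file.
        "sched.cpu.latencySensitivity": "high",
        "sched.cpu.min": str(vm.vcpus * host.core_mhz),  # MHz
        "sched.mem.min": str(vm.memory_mb),  # MB
    }


j3455 = Host(cores=4, core_mhz=1500, memory_mb=16384)
pfsense = VmSize(vcpus=2, memory_mb=4096)  # hypothetical sizing
for key, value in full_reservation(pfsense, j3455).items():
    print(f'{key} = "{value}"')
# sched.cpu.latencySensitivity = "high"
# sched.cpu.min = "3000"
# sched.mem.min = "4096"
```

On a 4-core host, a 2-vCPU latency-sensitive VM leaves half of the cores for ESXi and any other guests.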

Changing these two settings resolved the latency differences I was seeing. I now get identical latency readings between bare metal and a virtualized pfSense VM with NIC passthrough.

I tested this method with 2.4.4p2, 2.4.4p3, and current 2.5 development images. All had the same successful outcome: latency dropped to that of a bare metal system.