pfSense on ESXi 6.7 - slow throughput

-

I'm setting up a home lab and plan to use (and learn) pfSense as the firewall.

However, I'm struggling with network throughput WAN<->LAN. It seems to top out at around 60-80 Mbit/s no matter what I do.

Internet access is 500 Mbit/s full duplex. The hardware is a Dell T7500 workstation (8 CPUs x Intel(R) Xeon(R) CPU E5620 @ 2.40GHz, 72 GB RAM).

Two Intel NICs (dual-port PCIe card) and one onboard Broadcom NIC.

The two Intel NICs are set up with EtherChannel to a Cisco switch using vSwitch0. The Broadcom NIC is attached to a WAN vSwitch and connected directly to the ISP equipment.

pfSense has been given 2 cores and 4 GB RAM, running off an SSD.

I'm using vmxnet3 and the latest pfSense development build (2.4.5-DEVELOPMENT (amd64)), with open-vm-tools installed from the package manager.

Things I have tried:

- Using e1000e NICs (no difference in speed)

- Created a VM and put it on the WAN vSwitch (speedtest.net and test.telenor.net both give 500/500, hence I'd say ESXi and the network are OK)

- Toggled on/off "Disable hardware TCP segmentation offload"

- Toggled on/off "Disable hardware large receive offload" (with both this and the TSO option above set to ON, speed dropped to 2-3 Mbit/s)

- Toggled on/off "Disable hardware checksum offload" (no noticeable difference)

- Tried to download a test file directly via SSH on pfSense (no noticeable speed difference)

- Fresh install (no noticeable speed difference with Suricata on or off)

- Started off with the latest production build (2.4.4) (no difference in speed compared to 2.4.5)

Any thoughts?

-

@helger said in pfSense on ESXi 6.7 - slow throughput:

However I'm struggling with network throughput, WAN<->LAN. Seems to top out at around 60-80mbit no matter what I do.

How exactly are you testing this?

-

@johnpoz https://speedtest.net, https://test.telenor.net, wget ftp://ftp.uninett.no/debian-iso/9.8.0/amd64/iso-dvd/debian-9.8.0-amd64-DVD-3.iso

These are the 3 methods I've been using, all 3 give about the same result.

Doing the same on a VM connected to the WAN vSwitch on the same ESXi host gives full speed.

-Helge

-

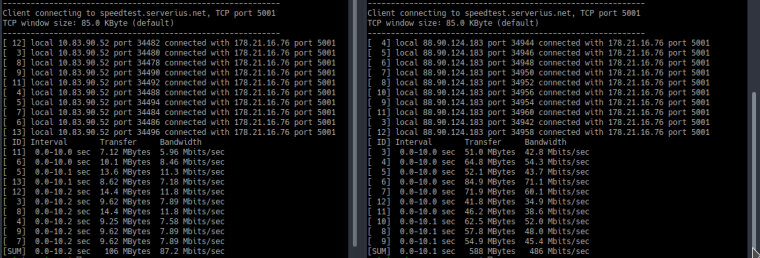

I ran an iperf test as well. The window on the left is my workstation behind pfSense; the one on the right is an Ubuntu server running on the same ESXi host as pfSense, attached directly to the WAN side.

-

So you're connecting to something out on the internet?

Dude, test from your WAN to your LAN and from your LAN to your WAN through pfSense. And how many connections are you using, like 10? Just use 1; you should see pretty close to the full speed of your local network.
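A minimal Python sketch of the single-connection local test suggested above, assuming iperf3 is installed on the client and an iperf3 server (`iperf3 -s`) is running on a host on the other side of pfSense. It runs one TCP stream (`-P 1`) with JSON output (`-J`) and reads the receiver-side total from `end.sum_received.bits_per_second`. The function names are hypothetical helpers, not part of iperf3.

```python
import json
import subprocess


def single_stream_mbps(iperf3_json: str) -> float:
    """Extract receiver-side TCP throughput (Mbit/s) from `iperf3 -J` output."""
    result = json.loads(iperf3_json)
    # For TCP tests iperf3 puts the totals under end.sum_sent / end.sum_received.
    bits_per_second = result["end"]["sum_received"]["bits_per_second"]
    return bits_per_second / 1e6


def run_single_stream(server: str, seconds: int = 10) -> float:
    """Run one TCP stream (-P 1) to `server` and return the rate in Mbit/s."""
    completed = subprocess.run(
        ["iperf3", "-c", server, "-P", "1", "-t", str(seconds), "-J"],
        capture_output=True,
        text=True,
        check=True,  # raise if iperf3 exits with an error
    )
    return single_stream_mbps(completed.stdout)
```

Usage: `run_single_stream("192.0.2.10")`, where the address is a placeholder for your own iperf3 server across pfSense. Running it LAN-to-WAN and then the other way round keeps the test local, so your Internet connection and any public server are out of the picture.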

-

@johnpoz Internet services, yes. The iperf test above is against a public iperf server.

There seems to be something in the pfSense setup that is holding things back since, as you can see, there is a major difference in speed between going directly to the iperf server and going via pfSense.

Single-thread test:

From a LAN PC:

iperf -c speedtest.serverius.net -P 1

Client connecting to speedtest.serverius.net, TCP port 5001

TCP window size: 85.0 KByte (default)

[ 3] local 10.83.90.52 port 35472 connected with 178.21.16.76 port 5001

[ ID] Interval Transfer Bandwidth

[ 3] 0.0-10.1 sec 110 MBytes 91.1 Mbits/sec

From a VM directly attached to the WAN interface/vSwitch:

iperf -c speedtest.serverius.net -P 1

Client connecting to speedtest.serverius.net, TCP port 5001

TCP window size: 85.0 KByte (default)

[ 3] local 88.88.101.151 port 44368 connected with 178.21.16.76 port 5001

[ ID] Interval Transfer Bandwidth

[ 3] 0.0-10.0 sec 397 MBytes 332 Mbits/sec

With 10 threads:

From a LAN PC:

iperf -c speedtest.serverius.net -P 10

Client connecting to speedtest.serverius.net, TCP port 5001

TCP window size: 85.0 KByte (default)

[ 13] local 10.83.90.52 port 35514 connected with 178.21.16.76 port 5001

[ 11] local 10.83.90.52 port 35508 connected with 178.21.16.76 port 5001

[ 5] local 10.83.90.52 port 35512 connected with 178.21.16.76 port 5001

[ 6] local 10.83.90.52 port 35496 connected with 178.21.16.76 port 5001

[ 7] local 10.83.90.52 port 35500 connected with 178.21.16.76 port 5001

[ 3] local 10.83.90.52 port 35510 connected with 178.21.16.76 port 5001

[ 9] local 10.83.90.52 port 35498 connected with 178.21.16.76 port 5001

[ 12] local 10.83.90.52 port 35502 connected with 178.21.16.76 port 5001

[ 4] local 10.83.90.52 port 35506 connected with 178.21.16.76 port 5001

[ 8] local 10.83.90.52 port 35504 connected with 178.21.16.76 port 5001

[ ID] Interval Transfer Bandwidth

[ 6] 0.0-10.0 sec 15.1 MBytes 12.7 Mbits/sec

[ 3] 0.0-10.0 sec 9.75 MBytes 8.17 Mbits/sec

[ 7] 0.0-10.1 sec 10.5 MBytes 8.74 Mbits/sec

[ 9] 0.0-10.1 sec 15.1 MBytes 12.6 Mbits/sec

[ 12] 0.0-10.1 sec 7.75 MBytes 6.43 Mbits/sec

[ 4] 0.0-10.2 sec 9.88 MBytes 8.13 Mbits/sec

[ 5] 0.0-10.2 sec 9.88 MBytes 8.11 Mbits/sec

[ 11] 0.0-10.3 sec 15.4 MBytes 12.6 Mbits/sec

[ 13] 0.0-10.3 sec 10.1 MBytes 8.21 Mbits/sec

[ 8] 0.0-10.3 sec 10.0 MBytes 8.11 Mbits/sec

[SUM] 0.0-10.3 sec 114 MBytes 92.0 Mbits/sec

From a VM directly attached to the WAN interface/vSwitch:

iperf -c speedtest.serverius.net -P 10

Client connecting to speedtest.serverius.net, TCP port 5001

TCP window size: 85.0 KByte (default)

[ 5] local 88.88.101.151 port 44372 connected with 178.21.16.76 port 5001

[ 3] local 88.88.101.151 port 44376 connected with 178.21.16.76 port 5001

[ 6] local 88.88.101.151 port 44374 connected with 178.21.16.76 port 5001

[ 7] local 88.88.101.151 port 44380 connected with 178.21.16.76 port 5001

[ 8] local 88.88.101.151 port 44382 connected with 178.21.16.76 port 5001

[ 10] local 88.88.101.151 port 44386 connected with 178.21.16.76 port 5001

[ 9] local 88.88.101.151 port 44384 connected with 178.21.16.76 port 5001

[ 4] local 88.88.101.151 port 44378 connected with 178.21.16.76 port 5001

[ 11] local 88.88.101.151 port 44388 connected with 178.21.16.76 port 5001

[ 12] local 88.88.101.151 port 44390 connected with 178.21.16.76 port 5001

[ ID] Interval Transfer Bandwidth

[ 3] 0.0-10.0 sec 61.4 MBytes 51.5 Mbits/sec

[ 6] 0.0-10.0 sec 66.0 MBytes 55.4 Mbits/sec

[ 12] 0.0-10.0 sec 39.6 MBytes 33.2 Mbits/sec

[ 10] 0.0-10.0 sec 51.0 MBytes 42.7 Mbits/sec

[ 9] 0.0-10.0 sec 47.0 MBytes 39.3 Mbits/sec

[ 4] 0.0-10.0 sec 57.2 MBytes 47.9 Mbits/sec

[ 11] 0.0-10.0 sec 71.2 MBytes 59.5 Mbits/sec

[ 7] 0.0-10.1 sec 50.9 MBytes 42.3 Mbits/sec

[ 8] 0.0-10.1 sec 45.1 MBytes 37.5 Mbits/sec

[ 5] 0.0-10.1 sec 100 MBytes 83.5 Mbits/sec

[SUM] 0.0-10.1 sec 590 MBytes 490 Mbits/sec

So, as you can see, performance is significantly better for a regular VM on the ESXi host's WAN interface/vSwitch than going through pfSense.

So I can't imagine it being anything other than some setting or incompatibility issue in the pfSense setup.

-

Another test: I set up an iperf server on the VM connected directly to the WAN and ran the iperf client on the LAN host.

Same speed achieved.

1 thread:

iperf -c 88.88.101.151 -P 1

Client connecting to 88.88.101.151, TCP port 5001

TCP window size: 85.0 KByte (default)

[ 3] local 10.83.90.52 port 54354 connected with 88.88.101.151 port 5001

[ ID] Interval Transfer Bandwidth

[ 3] 0.0-10.1 sec 112 MBytes 92.4 Mbits/sec

5 threads:

iperf -c 88.88.101.151 -P 5

Client connecting to 88.88.101.151, TCP port 5001

TCP window size: 85.0 KByte (default)

[ 5] local 10.83.90.52 port 54380 connected with 88.88.101.151 port 5001

[ 7] local 10.83.90.52 port 54384 connected with 88.88.101.151 port 5001

[ 6] local 10.83.90.52 port 54382 connected with 88.88.101.151 port 5001

[ 4] local 10.83.90.52 port 54378 connected with 88.88.101.151 port 5001

[ 3] local 10.83.90.52 port 54376 connected with 88.88.101.151 port 5001

[ ID] Interval Transfer Bandwidth

[ 6] 0.0-10.0 sec 54.2 MBytes 45.4 Mbits/sec

[ 3] 0.0-10.0 sec 14.6 MBytes 12.2 Mbits/sec

[ 7] 0.0-10.1 sec 15.0 MBytes 12.5 Mbits/sec

[ 5] 0.0-10.2 sec 13.9 MBytes 11.4 Mbits/sec

[ 4] 0.0-10.2 sec 14.8 MBytes 12.1 Mbits/sec

[SUM] 0.0-10.2 sec 112 MBytes 92.2 Mbits/sec

Connecting to the WAN VM from a host out on the internet:

iperf -c 88.88.101.151 -P 5

Client connecting to 88.88.101.151, TCP port 5001

TCP window size: 85.0 KByte (default)

[ 3] local 185.125.169.246 port 41574 connected with 88.88.101.151 port 5001

[ 4] local 185.125.169.246 port 41576 connected with 88.88.101.151 port 5001

[ 5] local 185.125.169.246 port 41578 connected with 88.88.101.151 port 5001

[ 6] local 185.125.169.246 port 41580 connected with 88.88.101.151 port 5001

[ 7] local 185.125.169.246 port 41582 connected with 88.88.101.151 port 5001

[ ID] Interval Transfer Bandwidth

[ 6] 0.0-10.0 sec 102 MBytes 85.9 Mbits/sec

[ 4] 0.0-10.0 sec 122 MBytes 102 Mbits/sec

[ 3] 0.0-10.1 sec 131 MBytes 109 Mbits/sec

[ 7] 0.0-10.1 sec 118 MBytes 98.6 Mbits/sec

[ 5] 0.0-10.1 sec 114 MBytes 94.5 Mbits/sec

[SUM] 0.0-10.1 sec 588 MBytes 487 Mbits/sec

-

@helger said in pfSense on ESXi 6.7 - slow throughput:

[ ID] Interval Transfer Bandwidth

[ 3] 0.0-10.1 sec 112 MBytes 92.4 Mbits/sec

You've got something wrong for sure... But stop testing with multiple connections. From WAN to LAN or LAN to WAN you should see pretty freaking close to full speed. Until you have that working, forget testing anything else.
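To check the point about multiple connections, here is a rough Python sketch that pulls the per-stream and `[SUM]` rates out of iperf2 client output like the runs in this thread. The regular expression is an assumption based on the result lines shown here, not a documented iperf2 output format, and `parse_iperf2` is a hypothetical helper.

```python
import re

# Matches iperf2 result lines such as
#   [ 6] 0.0-10.0 sec 54.2 MBytes 45.4 Mbits/sec
#   [SUM] 0.0-10.2 sec 112 MBytes 92.2 Mbits/sec
# Connection lines ("[ 5] local ...") and the "[ ID]" header do not match.
RESULT_LINE = re.compile(
    r"^\[\s*(?P<id>\d+|SUM)\]\s+"
    r"[\d.]+-\s*[\d.]+\s+sec\s+"  # interval, e.g. 0.0-10.0 sec
    r"[\d.]+\s+[KMG]?Bytes\s+"  # transfer, e.g. 54.2 MBytes
    r"(?P<rate>[\d.]+)\s+(?P<unit>[KMG])bits/sec"
)
UNIT_TO_MBIT = {"K": 1e-3, "M": 1.0, "G": 1e3}


def parse_iperf2(text):
    """Return (list of per-stream Mbit/s, reported [SUM] in Mbit/s or None)."""
    streams, total = [], None
    for line in text.splitlines():
        m = RESULT_LINE.match(line.strip())
        if not m:
            continue
        mbps = float(m["rate"]) * UNIT_TO_MBIT[m["unit"]]
        if m["id"] == "SUM":
            total = mbps
        else:
            streams.append(mbps)
    return streams, total
```

Fed the five-stream LAN-to-WAN-VM run from this thread, it returns five streams adding up to about 93.6 Mbit/s and a reported SUM of 92.2 Mbit/s (the SUM line covers the full 0.0-10.2 s interval, so it need not equal the naive sum). Either way it matches the single-stream 92.4 Mbit/s, which points to a fixed ceiling in the path rather than a per-connection limit.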

-

@johnpoz Sure, I can test with single threads. It's just that with multiple threads I'm sure I get the max the pipe can do, which isn't always the case with a single thread. But OK, single from now on.

So, to the issue at hand: any thoughts on what settings or things I could check?

-

Never mind, I'll go hide in shame now... I didn't realize one NIC had "decided" to turn off autonegotiation and set itself to 100 Mbit/s half duplex (100HD)... Jeez...
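One rough way to spot a link like that from the ESXi side is to look at the Speed and Duplex columns of `esxcli network nic list`. The Python sketch below parses that table, assuming it has a dashed separator line under the header and columns named `Name`, `Link Status` (the code falls back to `Link` in case an older release names it differently), `Speed` (in Mbit/s) and `Duplex`. Check these assumptions against your host's real output; `parse_esxcli_nic_list`, `suspicious_links` and `expected_speed` are hypothetical names.

```python
def parse_esxcli_nic_list(text):
    """Parse an `esxcli network nic list`-style table into a list of dicts.

    Column boundaries are taken from the dashed separator line, because some
    column names (e.g. "PCI Device") and descriptions contain spaces.
    """
    lines = [line for line in text.splitlines() if line.strip()]
    header, dashes, rows = lines[0], lines[1], lines[2:]
    # Each run of '-' marks one column; record the offset where it starts.
    starts = [
        i for i, ch in enumerate(dashes)
        if ch == "-" and (i == 0 or dashes[i - 1] == " ")
    ]
    bounds = list(zip(starts, starts[1:] + [None]))  # last column runs to EOL
    names = [header[a:b].strip() for a, b in bounds]
    return [
        {name: row[a:b].strip() for name, (a, b) in zip(names, bounds)}
        for row in rows
    ]


def suspicious_links(nics, expected_speed=1000):
    """Yield (name, speed, duplex) for links that are up but not full duplex
    at the expected speed (assumed gigabit by default)."""
    for nic in nics:
        if nic.get("Link Status", nic.get("Link")) != "Up":
            continue
        speed, duplex = nic.get("Speed", ""), nic.get("Duplex", "")
        if not speed.isdigit() or int(speed) < expected_speed or duplex != "Full":
            yield nic["Name"], speed, duplex
```

With this, a vmnic reported as Up at 100 / Half would be flagged, which is the kind of mismatch described above; the same speed and duplex information is also visible per physical adapter in the vSphere client.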

-

So what speed are you getting now when testing locally? Single thread?

Here, I just fired up an Ubuntu VM on my Win10 box for testing, using Hyper-V. It's an OLD box (Dell XPS 8300) running a pfSense VM, with the WAN connected via a vSwitch to my physical network... on a crap Realtek NIC ;)

So the path is:

iperf3 client on Ubuntu VM - vSwitch - pfSense VM - vSwitch - real NIC --- switch --- NIC on NAS --- iperf3 server running in Docker.

And I'm hitting pretty freaking close to full speed, 840ish. The physical box does 940 to the same iperf3 server, but I'm OK with that performance ;)
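Why 940 rather than 1000: a back-of-the-envelope Python sketch of the best-case TCP payload rate over one Ethernet link. It assumes standard 1500-byte MTU, IPv4, no VLAN tag, and the TCP timestamps option (12 bytes, a common default), and it ignores ACK traffic and retransmissions. Each frame then carries 1448 bytes of payload but occupies 1538 bytes on the wire (headers, FCS, preamble and inter-frame gap).

```python
# Per-frame overhead on the wire for standard Ethernet (bytes).
PREAMBLE_SFD = 8
ETH_HEADER = 14
FCS = 4
INTERFRAME_GAP = 12
IPV4_HEADER = 20
TCP_HEADER = 20
TCP_TIMESTAMPS = 12  # assumption: TCP timestamps option enabled


def tcp_goodput_ceiling(link_mbps, mtu=1500, timestamps=True):
    """Upper bound on TCP payload throughput (Mbit/s) over one Ethernet link,
    ignoring ACKs, retransmits and VLAN tags."""
    payload = mtu - IPV4_HEADER - TCP_HEADER - (TCP_TIMESTAMPS if timestamps else 0)
    on_wire = mtu + ETH_HEADER + FCS + PREAMBLE_SFD + INTERFRAME_GAP
    return link_mbps * payload / on_wire
```

For a 1 Gbit/s link this gives roughly 941 Mbit/s, consistent with the 940 from the physical box, and for a 100 Mbit/s link roughly 94 Mbit/s. That is why results stuck in the low 90s, like the iperf runs earlier in this thread, suggest a link negotiated at 100 Mbit/s somewhere in the path.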

-

@johnpoz Getting full gigabit speed now. I tested between firewall zones inside the ESXi host, and pfSense pushes just shy of 3 Gbit/s.

Guess that's quite fair for a dual core. With Suricata turned on I got just above 1 Gbit/s.

Physical workstation to a VM, traversing zones (client<->server):

[ ID] Interval Transfer Bandwidth

[ 3] 0.0-10.0 sec 1.06 GBytes 913 Mbits/sec

I'm happy!

-

@helger said in pfSense on ESXi 6.7 - slow throughput:

Getting the full gbit speed, tried between firewall zones, but inside the ESXi host. pfSense pushing just shy of 3Gbit.

Guess thats quite fair with a dual core.

With suricata turned on I got just above 1Gbit.

Physical workstation to a VM traversing zones (client<->server)

[ ID] Interval Transfer Bandwidth

Hello all,

I have the same problem with 6.7 U2...

Regards

-

There was NO problem...

"Nevermind, I'll go hide in shame now.... Didn't realize 1 NIC had "decided" to turn off autoneg and set itself in 100HD ... Jeezes.."

If you are seeing 90-ish Mbit/s when you think you should be seeing gig... it's more than likely the same issue!

-

Hello,

I didn't quite understand: did you turn off autonegotiation on the ESXi WAN or in pfSense? I will try it.

Thank you