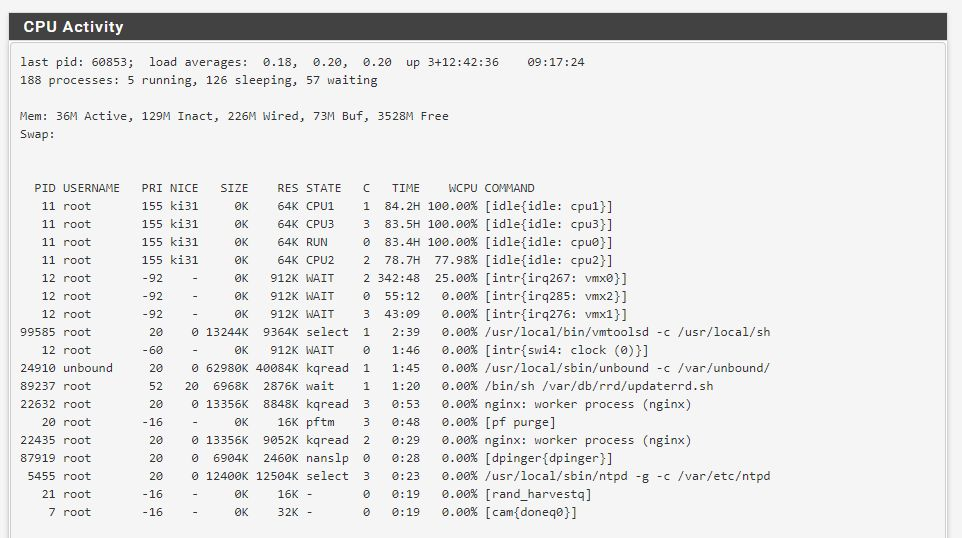

Loving pfSense in my vSphere environment, but...high CPU...

-

Surely someone at pfSense must know the solution to this issue...

A simple Google search suggests I’m certainly not the only person suffering from this issue!

-

Seeing the same thing here on 2.4.4-p2. When pushing a lot of traffic I'm seeing a high level of interrupts on both vmx interfaces on my pfSense VM. I've got dedicated NIC ports on my Intel 4-port I340 card for WAN and LAN, and then separate physical ports for management and one other VM. Definitely don't see this interrupt issue on non-virtualised pfSense running on this hardware.

For reference, this is running on an HP T620 thin client (with a 4-port Intel I340 network card) with 16 GB of RAM, a 4-core AMD GX-420CA CPU and a 128 M2 SSD for local storage. Ran pfSense natively on here before and it didn't even break a sweat. Definitely some sort of oddity using the vmx interfaces.

thanks

Ad. -

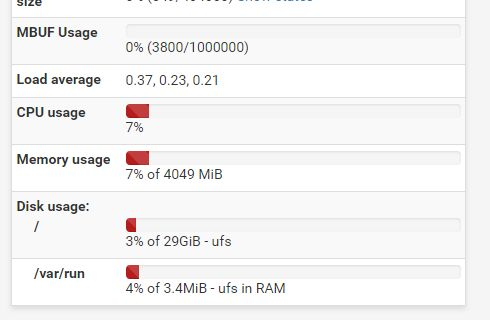

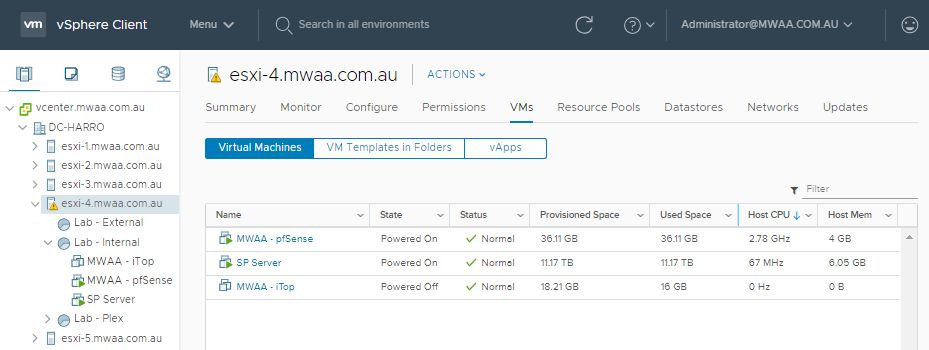

No idea. I've been running pfSense under ESXi since the 4.x days. Currently running on 6.7.0.11675023 on Dell Powervault NX3200. No CPU issues. pfSense VM consumes around 137 MHz according to VMware, about 4% according to pfSense dashboard.

-

I have the exact same issue. Anyone have any ideas? I have tried disabling Hardware Checksum Offloading, but nothing changes.

-

@areynot My environment seems to be working fine now - not sure what the issue was, I'm afraid.

-

@david_harrison actually so is mine... everything seems ok now... what I did notice is that CPU usage goes up when assigning more than 1 cpu to the pfSense VM, but for my case 1 is more than enough

-

my issue wasn't so much that pfSense was using a lot of CPU...

I have multiple VMs running on the host, and really, when you added that up, pfSense was lucky to be getting what it was.

Turn off all your other VMs on the host and see what pfSense is actually using on the host.

-

Seems like classic CPU overcommitment to me. If you have "high CPU", or think you have, check your VMware stats and find out how many physical/logical AND virtual CPUs are in use. The virtual to phys/logical ratio should be around 1/2.5 according to best practices from VMware itself. So if you run 1/3 or higher with CPUs that are crunching hard, you may see "high load" or "high CPU" in pfSense that is mostly pfSense not getting CPU ticks when it needs them and having to wait for its share of CPU time.

Also, it's recommended you only give VMs the number of CPUs they actually need, not more "just in case", as these "idling" vCPUs in VMs also get their ticks even when idle, "stealing" them from VMs that are in dire need of CPU ticks - so if 1 or 2 vCPUs are good, go with that and add more later if needed, instead of going all in and throwing 4 or 6 vCores at it :)
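As a rough illustration of the ratio check described above, here is a minimal Python sketch. It assumes the "1/2.5" figure means roughly 2.5 vCPUs per physical/logical CPU and that "1/3 or higher" is where trouble may start; the function names and the example VM sizes are made up for illustration and are not from VMware documentation.

```python
def overcommit_ratio(total_vcpus: int, logical_cpus: int) -> float:
    """Return how many vCPUs are allocated per physical/logical host CPU."""
    if logical_cpus <= 0:
        raise ValueError("logical_cpus must be positive")
    return total_vcpus / logical_cpus


def assess(total_vcpus: int, logical_cpus: int,
           guideline: float = 2.5, warn: float = 3.0) -> str:
    """Classify a host using the thresholds quoted in the post (assumed reading)."""
    ratio = overcommit_ratio(total_vcpus, logical_cpus)
    if ratio <= guideline:
        return "ok"
    if ratio < warn:
        return "borderline"
    return "overcommitted"


# Hypothetical host: 4 logical CPUs, four VMs with 2 + 4 + 4 + 2 vCPUs.
vm_vcpus = [2, 4, 4, 2]
print(overcommit_ratio(sum(vm_vcpus), 4), assess(sum(vm_vcpus), 4))
```

With these made-up numbers the host sits at 3 vCPUs per logical CPU, so trimming a VM or two back to what it really needs would bring it under the guideline.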

-

Discussing this issue elsewhere, I got this response. Basically, in my case the HP T620 thin client can't do hardware passthrough on VMware, which means you end up with a high level of interrupts when running pfSense hard inside ESXi. It's just a lot of work for the virtual NICs without passthrough in ESXi, which the T620 does not support... I guess that may be the case with other CPUs, hence the high CPU usage. The example below is for this device but will be applicable to any CPU which does not have SR-IOV.

see below

a) While the GX420CA is a decent little chip (used in the Arista 10/40 GbE switches), it's not great for virtualization. The Jaguar/Puma cores are only so-so (around 2400 Passmarks, or around the performance of an Intel Core i5-540M), and that single channel RAM controller means that any memory copying operations simply, well, suck.

b) If you want to run pfSense under a hypervisor, get something that can do SR-IOV / PCIe passthrough, so the hypervisor won't have to handle packets and flip them into the VMs - that's always computationally expensive, and running it as vmxnet3 simply makes it worse (since the e1000e emulation in VMware is self-throttling, while vmxnet3 implies pushing it as hard as the hardware can handle). The GX420CA in the t620 Plus does not have SR-IOV support, so no PCIe passthrough is possible AFAIK. Technically the RX427BB APU in the t730 thin clients can do it (as can certain Core i5/i7s from, say, Haswell and up), but the problem is like this:

- You need something that can support PCIe Access Control Services (the ACSCtl flag in lspci -vv), since you'll need that so SR-IOV can work with the PCIe passthrough sanity check (you don't really want SR-IOV to start passing stuff to device groups that don't make sense). That's dependent on hardware capability and BIOS/UEFI implementations. Even if your CPU can do, say, VT-d or AMD-Vi, it doesn't mean that ACS is baked in. If it's not baked in, well, you'll need a Linux kernel patch to get around the lack of ACS. Also, with no ACS implementation, well, you won't get Alternative Routing-ID Interpretation (ARI), so you can only pass up to 7 SR-IOV Virtual Functions. So as you can guess, hackery like this is not supported by VMware ESXi - this is strictly Linux. Neither the t620 Plus nor the t730 has VMDirectPath or passthrough support in ESXi. Not sure about the DFI DT122-BE (the cheap noisy industrial computer equivalent to the t730), but I am going to say...very unlikely.

- Your network card must be able to hand out SR-IOV Virtual Functions to the hypervisor so the hypervisor can dish them out to the guest VMs - that's supported in most Mellanox, Intel and Solarflare cards.

- Your network card must have driver support in FreeBSD to work with virtual functions as a VM guest. That's fairly problematic - as far as I can tell, only Intel 82599/i350s and newer can work with them. Solarflares and Mellanoxes both don't seem to work well with VFs.

So yeah, you are much better off running pfSense on bare metal on the t620 Plus, and if you really need the hypervisor capability, use bhyve to run VMs on it instead.
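For anyone wanting to check the ACS point from the quoted reply on a Linux box, here is a small Python sketch that scans saved `lspci -vv` output for devices that expose an ACSCtl line. The helper name and the sample text are made up for illustration; real output has many more fields, and capabilities are generally only listed when lspci is run as root.

```python
import re

# A device header starts at column 0, e.g. "00:1c.0 PCI bridge: ..."
# (optionally prefixed by a 4-digit PCI domain such as "0000:").
DEVICE_LINE = re.compile(
    r"^((?:[0-9a-f]{4}:)?[0-9a-f]{2}:[0-9a-f]{2}\.[0-7])\s+\S"
)


def devices_with_acsctl(lspci_output: str) -> list[str]:
    """Return PCI addresses of devices whose capability list has an ACSCtl line."""
    found: list[str] = []
    current = None
    for line in lspci_output.splitlines():
        header = DEVICE_LINE.match(line)
        if header:
            current = header.group(1)
        elif current and "ACSCtl:" in line and current not in found:
            found.append(current)
    return found


# Made-up, heavily shortened sample in the shape of `lspci -vv` output.
SAMPLE = """\
00:1c.0 PCI bridge: Example root port
\tCapabilities: [140 v1] Access Control Services
\t\tACSCap:\tSrcValid+ TransBlk+ ReqRedir+ CmpltRedir+ UpstreamFwd+
\t\tACSCtl:\tSrcValid- TransBlk- ReqRedir- CmpltRedir- UpstreamFwd-
01:00.0 Ethernet controller: Example NIC
\tCapabilities: [160 v1] Single Root I/O Virtualization (SR-IOV)
"""

print(devices_with_acsctl(SAMPLE))
```

To try it against a real machine, something like `sudo lspci -vv > lspci.txt` and then passing the file contents to `devices_with_acsctl` should work. An empty result only tells you that no ACSCtl line was found, not why.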

-

Hi,

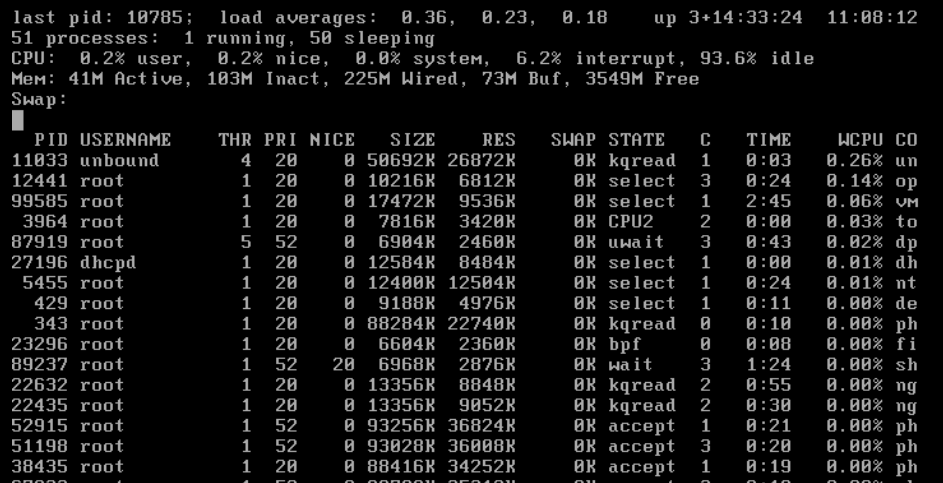

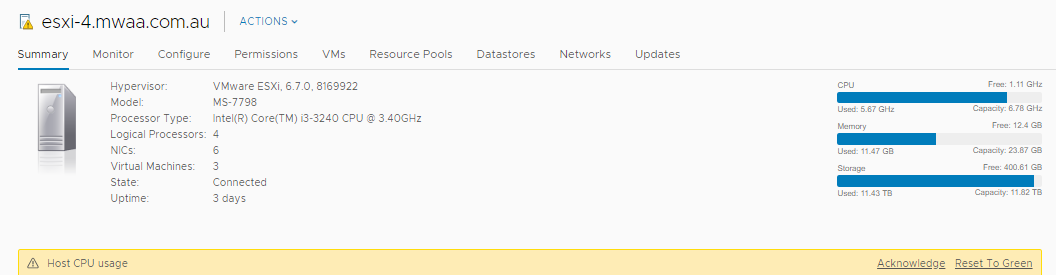

I'm passing through Intel PT1000 and I350 NICs and getting high CPU load when iperfing (1 Gbit) the pfSense host directly, and also when iperfing hosts behind pfSense using it as a router (debian<--pfsense-->debian). pfSense shows 10% CPU load while the ESXi host has a very high CPU load (80% on an i3 7100).

Also tried vmxnet3 paravirt NICs, but the load is only going down a bit - and shouldn't PCI passthrough move all the IRQ stuff to the pfSense VM anyway?

However, when doing the same iperf tests on the same machine with a bare metal pfSense install, I'm only getting 10% CPU load on the machine.

I then installed the latest development snapshots of 2.5 and the host CPU load on ESXi goes down significantly.

Had the same high host CPU load when using KVM on CentOS 7 or Fedora 30 (also with virtio NICs or PCI passthrough of the NICs). I tested this on various machines (Supermicro X11 boards, Fujitsu D3644 boards etc.).

Guess it's some kind of problem once pfSense / FreeBSD is being virtualized. When I run a virtualized Linux or RouterOS I'm not getting these host CPU load issues when iperfing.

Seems 2.5 or FreeBSD 12 solves the problems so far... Will try to continue the tests on a naked FreeBSD 11 vs 12 setup...

-

Ok -

Naked FreeBSD 11 on esxi 6.7u2 with i350 t4 in pci passthrough -> iperf 1Gbit = 80% CPU load on a 3.9 Ghz i3 7100 cpu

Naked FreeBSD 12 on esxi 6.7u2 with i350 t4 in pci passthrough -> iperf 1Gbit = 6% CPU load on a 3.9 Ghz i3 7100 cpu

Edit - seems like the host cpu ramps up to 80% on freebsd 12 and 11 when i run 1 Gbit through the vms...

the vms show only 12% cpu load inside (htop).

Guess it's just how FreeBSD handles packets... a Debian VM shows 12% host load and 8% VM load when I'm iperfing...