Should performance differ so much LEGACY/INLINE IDS?

-

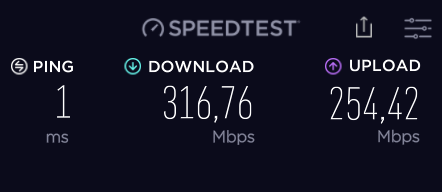

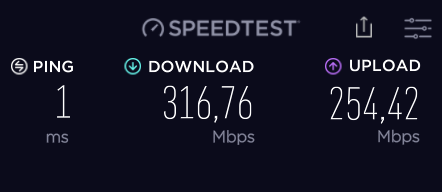

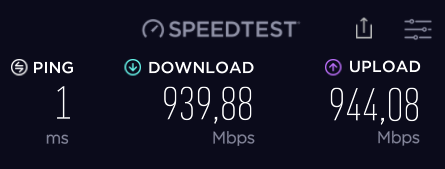

This is inline mode on WAN and legacy on LAN:

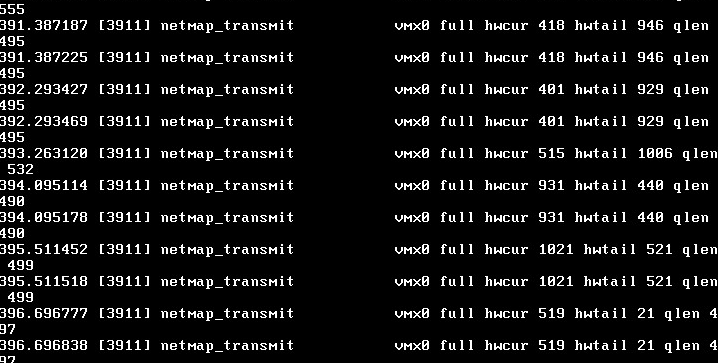

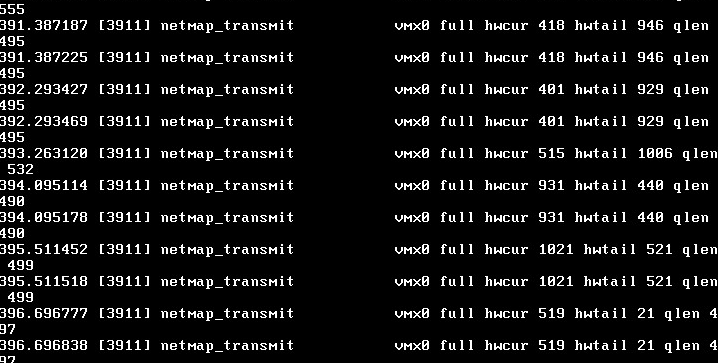

Followed by netmap issues due to inline mode being used:

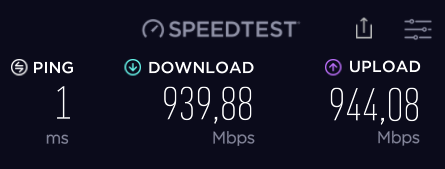

Changing WAN to legacy mode:

And it's back to full wire speed. Expect a 60% performance hit in inline mode.

-

If `cat /var/log/system.log | grep netmap` shows errors similar to the one above, then netmap is trying to put a packet into a buffer that is already full. The error indicates that the packet has been dropped as a result. As with the "bad pkt" error, this is not the end of the world if it happens periodically. However, if you see this filling your log, then it's an indication that Suricata (or whatever application is using netmap) cannot process the packets fast enough. In other words: you need to get a faster CPU or disable some of your rules. You can buy yourself a tiny bit of burst by modifying buffers, but all you are doing is buying a few seconds of breathing room if you are saturating your link.

https://forum.netgate.com/topic/138613/configuring-pfsense-netmap-for-suricata-inline-ips-mode-on-em-igb-interfaces
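To tell "happens periodically" apart from "filling your log", one option is to count netmap lines per minute. The following is only a minimal sketch: the function names, the default threshold of 100 lines per minute, and the assumed syslog-style timestamp prefix (`Mon DD HH:MM:SS`) are illustrative assumptions, not anything defined by pfSense, Suricata, or netmap.

```python
import re
from collections import Counter

# Assumption: each log line starts with a syslog-style "Mon DD HH:MM:SS " prefix.
# Adjust the pattern if your system.log uses a different timestamp format.
TIMESTAMP = re.compile(r"^(\w{3}\s+\d+\s+\d{2}:\d{2}):\d{2}\s")


def netmap_lines_per_minute(lines):
    """Count log lines mentioning 'netmap', grouped by minute."""
    counts = Counter()
    for line in lines:
        if "netmap" not in line:
            continue
        match = TIMESTAMP.match(line)
        if match:
            # group(1) is the timestamp truncated to the minute
            counts[match.group(1)] += 1
    return counts


def is_flooding(counts, threshold=100):
    """True if any single minute has at least `threshold` netmap lines.

    The threshold is an arbitrary illustrative value, not a documented limit.
    """
    return any(n >= threshold for n in counts.values())
```

To try it, read the log with `open("/var/log/system.log", errors="replace")` and pass the file object to `netmap_lines_per_minute`. A handful of hits scattered over hours matches the "periodic" case; hundreds per minute under load suggests the application cannot keep up.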

-

@kiokoman said in Should performance differ so much LEGACY/INLINE IDS?:

If `cat /var/log/system.log | grep netmap` shows errors similar to the one above, then netmap is trying to put a packet into a buffer that is already full. The error indicates that the packet has been dropped as a result. As with the "bad pkt" error, this is not the end of the world if it happens periodically. However, if you see this filling your log, then it's an indication that Suricata (or whatever application is using netmap) cannot process the packets fast enough. In other words: you need to get a faster CPU or disable some of your rules. You can buy yourself a tiny bit of burst by modifying buffers, but all you are doing is buying a few seconds of breathing room if you are saturating your link.

https://forum.netgate.com/topic/138613/configuring-pfsense-netmap-for-suricata-inline-ips-mode-on-em-igb-interfaces

I am, but I'm only getting 1/3 of wire speed on a 16-core Xeon setup that can easily saturate the wire.

And if I keep going, it will crash.

-

or disable some of your rules

In any case, netmap is a bit of a disappointment.

-

@Cool_Corona said in Should performance differ so much LEGACY/INLINE IDS?:

This is inline mode on WAN and legacy on LAN:

Followed by netmap issues due to inline mode being used:

Changing WAN to legacy mode:

And it's back to full wire speed. Expect a 60% performance hit in inline mode.

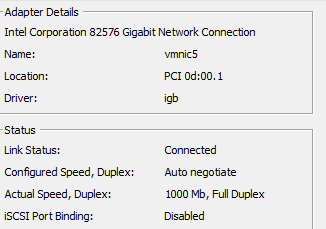

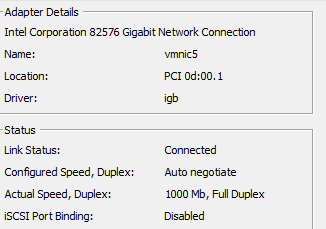

Your logs indicate you are using the vmx NIC driver. That driver has issues in FreeBSD with netmap. Here is a link to one of the current ones: https://bugs.freebsd.org/bugzilla/show_bug.cgi?id=247647.

I've preached this what seems like a thousand times, but some of you guys just can't seem to get it in your head. You can't use just any and all NICs with the netmap device in FreeBSD. Inline IPS mode in Suricata and Snort uses the netmap device, so inline IPS mode performance and reliability are very much dependent on the exact NIC hardware you have. Legacy Mode uses libpcap, and it is pretty much 100% NIC agnostic. Not so with inline IPS mode.

So yeah, when you use a NIC driver that is not plain vanilla Intel (and older Intel chips at that), then you can expect netmap-related difficulties with inline IPS mode.

And Inline IPS Mode is not going to perform as well as Legacy Mode. It just can't and never will. If you want true line speed on Gigabit and faster links, then Inline IPS Mode is not for you. The whole reason for inline IPS mode is to prevent the packet leakage that naturally occurs with Legacy Mode. That and inline IPS mode allows more granular control of security by selectively dropping individual packets instead of blocking all packets to and from a host.

-

Hi Bill. I am aware of that but a 60% performance penalty??

This is the driver used on ESXi. Shouldn't netmap support an igb driver nowadays??

-

Hate to bring this up again.. But are you not the one that said you only run this to see who is knocking.. Which you can just see in the logs anyway?

Just confused as to why you're putting yourself through the pain of any sort of performance hit, even if it was 1%, for just another way to see the same data you would see in the default firewall log..

There is no point to running an IPS unless it's going to actually provide a function that you need.. With most traffic being https, and you not even running services you're hosting to the public? I don't see how the added complexity, or any sort of hit to performance, is justified..

Maybe that is just me ;)

-

It's a test rig... for something much bigger. That's why.

-

@Cool_Corona said in Should performance differ so much LEGACY/INLINE IDS?:

Hi Bill. I am aware of that but a 60% performance penalty??

This is the driver used on ESXi. Shouldn't netmap support an igb driver nowadays??

The physical driver is meaningless within an ESXi VM unless you configured hardware pass-through. Since the logs show you using the vmx driver, then it appears pass-through is not enabled. Go look up "emulated netmap adapter" to understand why these virtual NIC drivers don't perform well under netmap. A 60% penalty is not surprising with emulated adapters. And within a VM that is doubled because the vmx adapter itself is already an emulated copy of hardware (but not the real thing). So you have, in effect, an emulation running on top of another emulation.

When you use a Virtual Machine, it is using virtualized hardware. You can actually select from several different virtual hardware NICs to use with a virtual machine in ESXi. The actual physical NIC in the ESXi host does not matter as no VM uses that hardware directly unless you enable pass-through to the VM. And pass-through is a one-on-one thing, so if you pass a NIC through to a given VM, then that NIC can't be used by any other VMs nor any virtual switch.
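As a rough illustration of the point above, the driver the guest actually sees can be read from the interface name, since FreeBSD names interfaces as driver name plus unit number (`vmx0`, `igb1`). The sketch below is hypothetical: the two driver sets only reflect what is mentioned in this thread (`em` and `igb` from the title of the linked guide, `vmx` as the driver reported to have problems) and are not an authoritative netmap compatibility list.

```python
# Assumption: driver sets taken only from this thread, not a full compatibility list.
MENTIONED_AS_SUPPORTED = {"em", "igb"}   # from the linked guide's title
MENTIONED_AS_PROBLEMATIC = {"vmx"}       # virtual NIC driver discussed in this thread


def driver_of(ifname):
    """Strip the trailing unit number from a FreeBSD interface name.

    Does not handle VLAN-style names such as 'igb0.10'.
    """
    return ifname.rstrip("0123456789")


def classify(ifname):
    """Return a hypothetical label for how the interface's driver fares in this thread."""
    driver = driver_of(ifname)
    if driver in MENTIONED_AS_PROBLEMATIC:
        return "problematic"
    if driver in MENTIONED_AS_SUPPORTED:
        return "supported"
    return "unknown"
```

Inside an ESXi VM without pass-through, the WAN interface would show up as something like `vmx0` and be classified as `problematic`, regardless of which physical NIC the host has.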

-
