[UnSolved] Possible BUG : Wireguard routing weirdly

-

TLDR: A clean reinstall after a factory reset solved the issue.

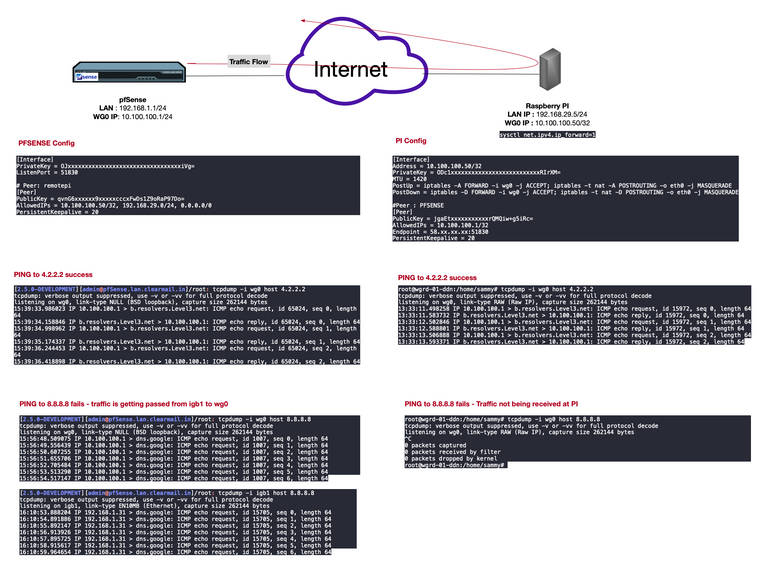

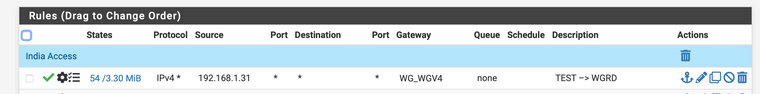

And the bug is back!

I have the following setup:

Intel(R) Core(TM) i5-5250U 4 CPUs: 1 package(s) x 2 core(s) x 2 hardware threads

AES-NI

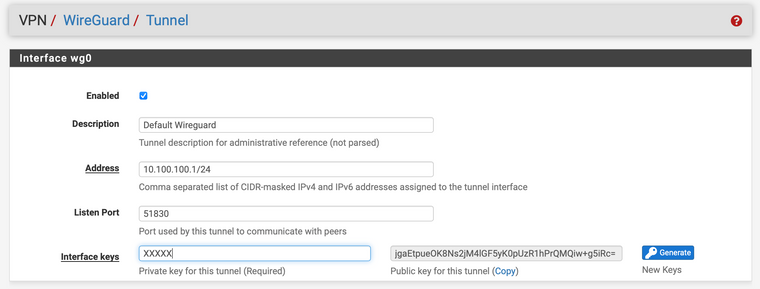

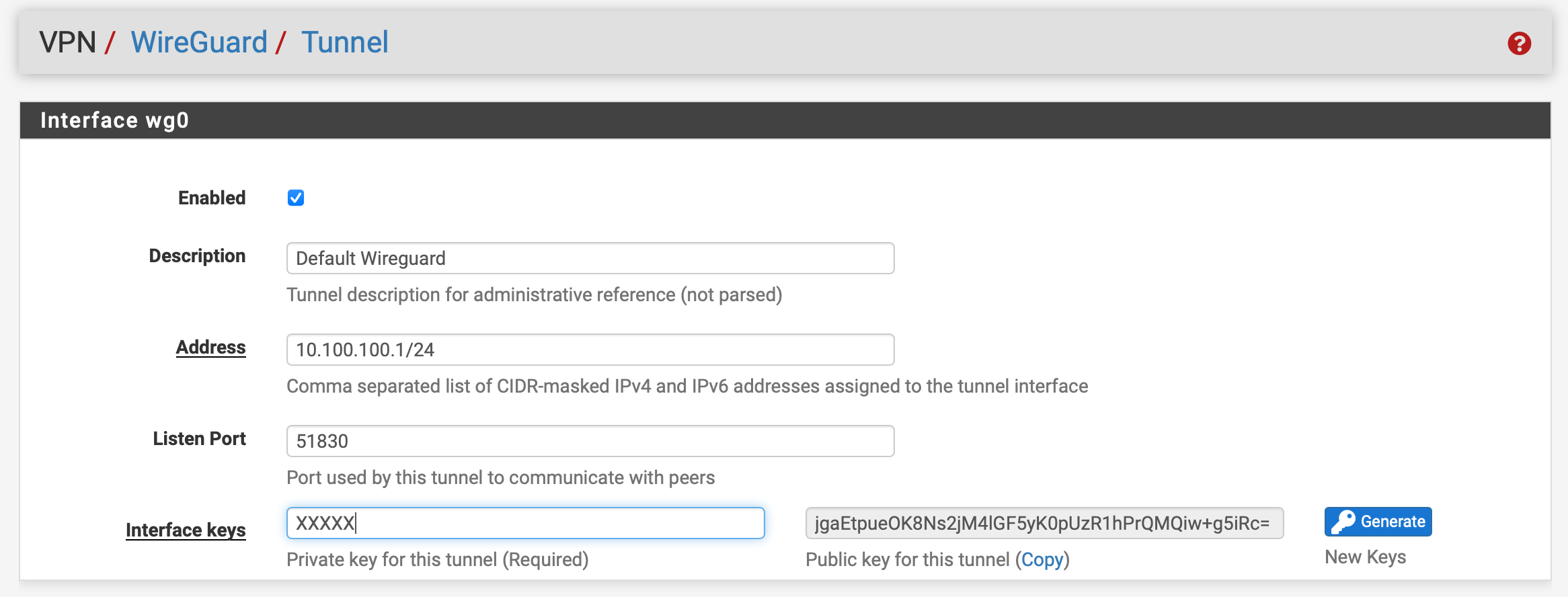

Version: 2.5.0.a.20210127.2350

Local pfSense: 10.100.100.1/32 and 192.168.1.0/24

Remote PI: 10.100.100.50/32 and 192.168.29.0/24

I am trying to get some devices on my local LAN to route via the remote PI's internet connection. Here's what works:

- The tunnel is up, peer is connected

- firewall rules for the interface are set to any any allow

- Outbound NAT is set

- The remote PI is set to forward traffic and NAT rules are in place in Remote PI

With this I am able to

- Reach the Remote PI LAN from my pfSense LAN and vice versa.

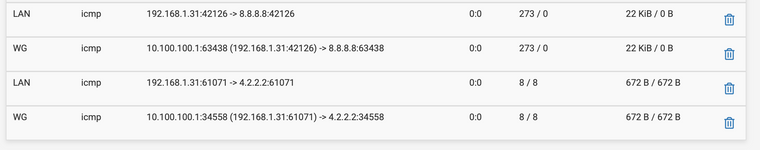

- Reach 4.2.2.2 from my pfSense LAN by using the internet at Remote PI (see logs)

I cannot reach 8.8.8.8 and some other IPs (LinkedIn etc.) through this. I checked and double-checked everything and couldn't find any issue. The attached logs show that a packet destined for 8.8.8.8 leaves via the tunnel interface but never arrives at the PI (whereas a packet for 4.2.2.2 does arrive). I can reach those IPs from the remote PI itself, so it does not look like the remote ISP is blocking anything.

I have tried to

- Disable hardware checksum offload (is ticked)

- Different MTU values from 1380 to 1420

- Set a keep-alive timer of 20 sec

- Double-checked there's no rule blocking 8.8.8.8 either locally or on the remote PI

Weird things I noticed

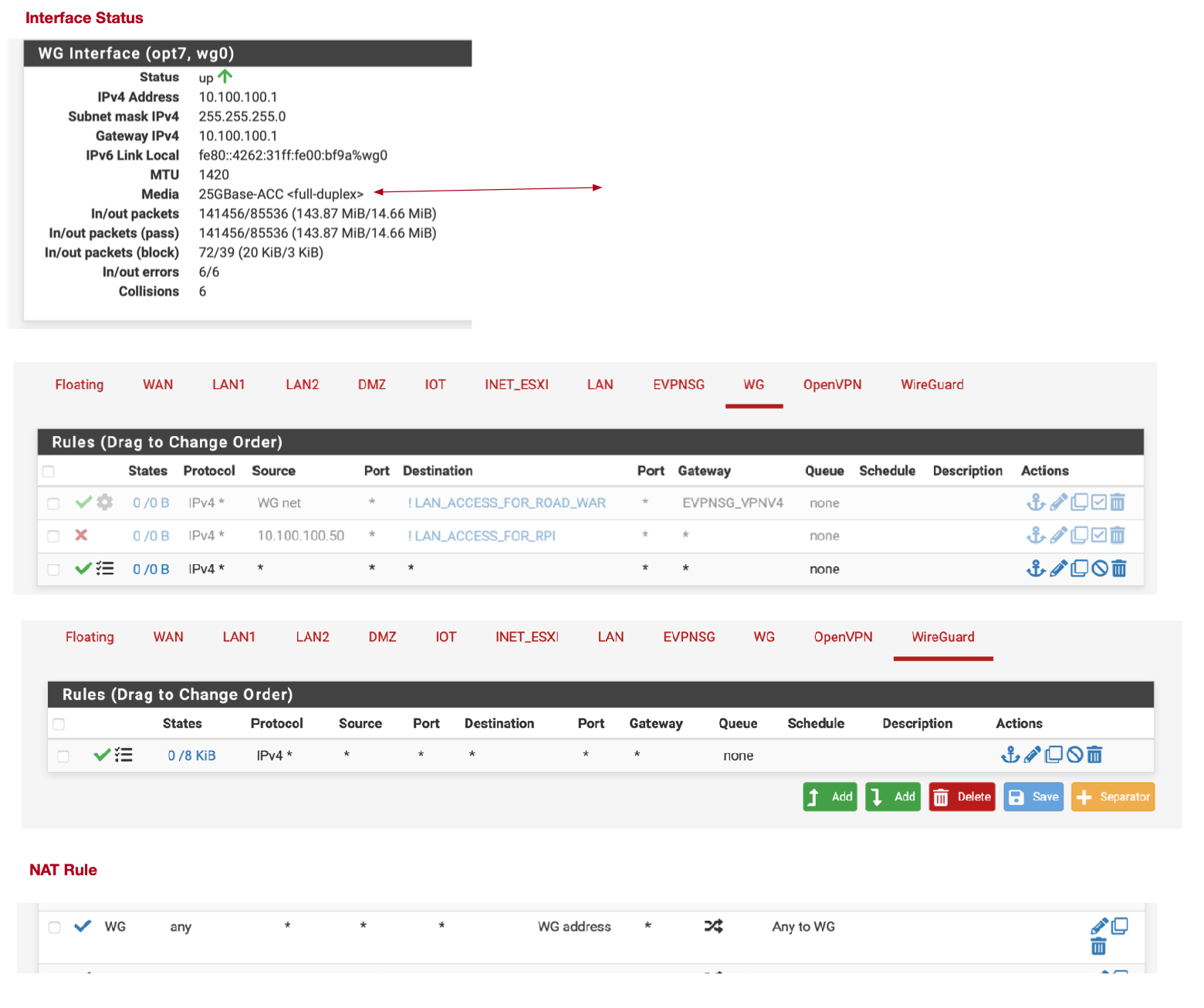

- The WG interface shows 25Gbase ACC??

I hope someone can help! Please click the image to see a high resolution version


-

Not that it's relevant here but the first thing you should do is fix your outbound NAT rule. Never use a source of "Any" on those. Use a specific source, such as your LAN subnet. If you use "Any" then the interface can NAT traffic from itself, which can be problematic.

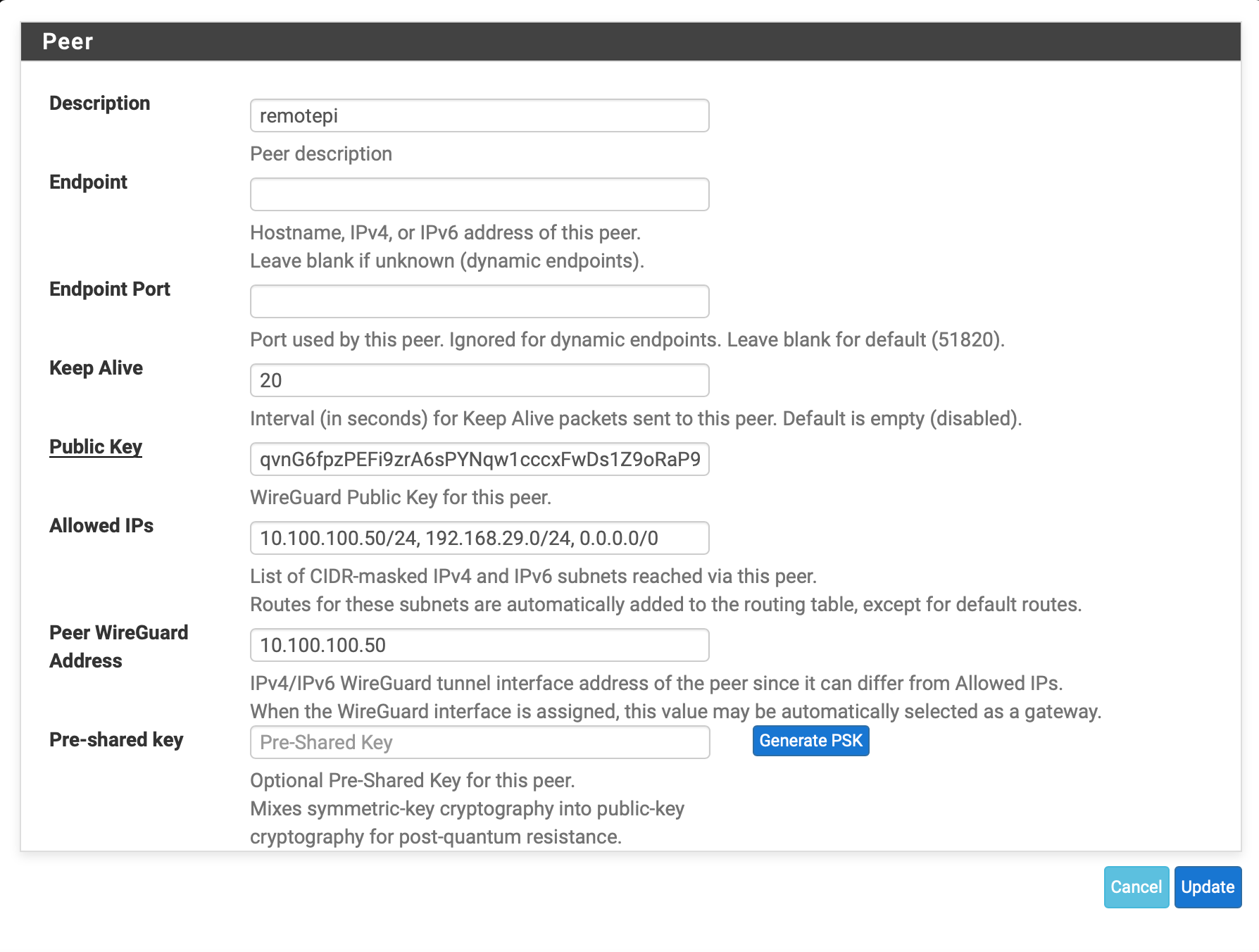

Also, I see you don't have the endpoint defined on the peer. For that to pass traffic the PI end must first send traffic to the pfSense end. If it doesn't, it has no way to initiate since it doesn't know where the PI end is. That will result in the exact behavior you see. You can check this by looking at the output of wg at a command prompt. When the firewall knows where the remote side is, it will print the endpoint in that output even when it isn't in the configuration file.

If you made a change on the pfSense end between those tests, that could explain the difference in behavior.

You could setup something on the Pi which periodically sends a ping back to the pfSense end to keep the tunnel alive. I don't know if the internal keep alive in WireGuard is enough for that.
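If you want to script the endpoint check, here is a minimal sketch that reads the text printed by wg (or wg show) and reports which peers currently have an endpoint. It assumes the usual "peer:" / "endpoint:" line layout. The function name, the sample text and the keys in it are made up for illustration and do not come from this setup.

```python
from typing import Dict, Optional


def peers_with_endpoints(wg_output: str) -> Dict[str, Optional[str]]:
    """Map each peer's public key to its endpoint, or None if none is printed."""
    peers: Dict[str, Optional[str]] = {}
    current: Optional[str] = None
    for raw in wg_output.splitlines():
        line = raw.strip()
        if line.startswith("interface:"):
            current = None  # a new interface section starts; no peer yet
        elif line.startswith("peer:"):
            current = line.split(":", 1)[1].strip()
            peers[current] = None
        elif line.startswith("endpoint:") and current is not None:
            peers[current] = line.split(":", 1)[1].strip()
    return peers


# Hypothetical sample, shaped like typical wg output (not from this thread).
SAMPLE = """\
interface: wg0
  public key: LOCAL_PUBLIC_KEY=
  listening port: 51820

peer: PEER_A_PUBLIC_KEY=
  endpoint: 203.0.113.7:58451
  allowed ips: 192.168.29.0/24, 10.100.100.50/32

peer: PEER_B_PUBLIC_KEY=
  allowed ips: 10.100.100.60/32
"""

for key, endpoint in peers_with_endpoints(SAMPLE).items():
    print(key, "->", endpoint or "no endpoint known yet")
```

A peer that maps to None has not been heard from yet, so the firewall cannot initiate traffic to it.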

-

@jimp said in Possible BUG : Wireguard routing weirdly:

Also, I see you don't have the endpoint defined on the peer. For that to pass traffic the PI end must first send traffic to the pfSense end. If it doesn't, it has no way to initiate since it doesn't know where the PI end is. That will result in the exact behavior you see. You can check this by looking at the output of wg at a command prompt. When the firewall knows where the remote side is, it will print the endpoint in that output even when it isn't in the configuration file.

Thank you for replying. I have fixed the NAT rule. The remote PI is behind CGNAT (kind of like a dynamic IP), so I am unable to define the peer IP. To initiate the tunnel I log in to the remote PI (TeamViewer) and ping the tunnel IP on pfSense (10.100.100.1), which brings up the tunnel. After that the PI continuously pings the pfSense LAN to keep the tunnel up.

Between the tests, I have validated that the tunnel is up. Even as I am typing this, my pings to 4.2.2.2 go through and those to 8.8.8.8 do not (and some other IPs too). In the screenshot (earlier post), you can see the pings are correctly NATed to 10.100.100.1 (WG interface IP) and are sent to 10.100.100.50 (remote PI tunnel interface) but never received there.

I'm using a test PC, 192.168.1.31, to route traffic to the WG interface.

-

If you see it exit one side but not enter the other, the next thing to check is the outer tunnel traffic.

Capture on the external interfaces (e.g. WAN) and see if the packets show up there as you ping, and if they make it from one side to the other. If the outer packets leave pfSense and arrive on the Pi, the problem is likely on the Pi side.

Also I can't find the link at the moment but there was recently an issue with WireGuard and certain keys that could cause traffic to be dropped. May not hurt to make a fresh set of public/private keys on both sides to see if the behavior changes.

-

I am unsure if I can capture the ping on the WAN because the ping would be encapsulated inside the Wireguard tunnel. I do see lots of traffic going back and forth between the RemotePI and pfsense if I listen on WAN.

I'll regenerate the keys tomorrow and see if that helps. Thank you for that suggestion.

Meanwhile, if anyone else has any other suggestions, please do let me know.

P.S - I upgraded to the latest build and same behaviour.

-

If you can stop all traffic that would be crossing WireGuard so that only your test pings would show, that would help narrow down what you see on WAN. Otherwise it would be impossible to know for sure if there is a lot of traffic.

-

So I tried pinging with a 1000 byte packet. For destinations that I can ping, I see a 1072 byte packet in the WAN capture. For destinations that I cannot ping, these packets are missing from the WAN capture. My conclusion is that the packets are disappearing inside pfSense. BTW, did you see the interface speed of WG0 in my screenshot? It's defaulting to a very odd-looking one; perhaps that may be the cause?
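For anyone checking the same thing, the 1072 figure is consistent with WireGuard's framing. This is a rough sketch of the arithmetic, assuming the "1000 byte packet" means 1000 bytes of ICMP data over IPv4 and that the capture reports the UDP payload length. The constant and function names are mine.

```python
IPV4_HEADER = 20     # bytes, assuming no IP options
ICMP_HEADER = 8      # echo request header
WG_DATA_HEADER = 16  # type/reserved (4) + receiver index (4) + counter (8)
WG_AUTH_TAG = 16     # Poly1305 authentication tag
WG_PAD_BLOCK = 16    # inner packet is zero-padded to a multiple of 16 bytes


def wg_udp_payload_for_ping(ping_data_bytes: int) -> int:
    """Expected UDP payload size of the WireGuard packet carrying one ping."""
    inner = IPV4_HEADER + ICMP_HEADER + ping_data_bytes
    # Round the inner packet up to the next multiple of the padding block.
    padded = -(-inner // WG_PAD_BLOCK) * WG_PAD_BLOCK
    return WG_DATA_HEADER + padded + WG_AUTH_TAG


print(wg_udp_payload_for_ping(1000))  # 1072
```

So a 1072 byte UDP payload on WAN is a handy fingerprint for one of the 1000 byte test pings, even though the contents are encrypted.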

-

That speed is fine/normal for WireGuard. It's irrelevant.

If the packets are on the wg0 interface but don't leave WAN the only explanation I can think of is that WireGuard can't find the peer to route them. Not sure what might be happening there or why it's only certain addresses, though.

I'm not aware of anyone else having anything like this happen.

-

@jimp

I guess the only option left is a factory reset with manual config. I have tried a factory reset and reinstated the config file, with the same results. Will keep you posted.

-

@jimp

A clean reinstall after a factory reset solved the issue. I did not have to specify any MTU on the interface. This closes the issue. Thank you for the time and the attention.

-

@jimp I have hit this again. After the clean install and rebuild, everything was working fine until I noticed today that some apps that use the WG tunnel do not work. I started checking and the same issue is back: I can't reach certain IPs. The packets are leaving the WG tunnel interface but not leaving the WAN interface.

Is there something you suggest, or can I provide more logs to see if this is a bug?

P.S - You were right, it does look like WireGuard cannot find a peer to route the packet. As soon as I add the offending IPs to the remote peer's allowed IPs, the traffic starts to flow. So that eliminates everything else as the root cause.

peer: qvsssssxxxxxxxxxxxxxxx=

endpoint: 4.xx.xx.xx:58451

allowed ips: 8.8.8.8/32, 192.168.29.0/24, 10.100.100.50/32, 0.0.0.0/0

-

Guys, anyone for this? Should I raise a bug report?

-

Still not clear it's a bug here, and not a config issue, since nobody else can seem to reproduce it but you.

-

Ok I'll wait on it. I don't have a spare/lab setup to reproduce the bug - but if someone is keen enough to test this here are the steps to trigger this (at least in my setup).

- Set up a new WireGuard tunnel wg0 to the remote destination. In allowed IPs, allow REMOTE_LAN, the WireGuard tunnel IP and 0.0.0.0/0. Everything else is default.

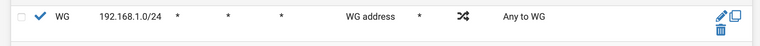

- Set up a WireGuard interface [WG] with a policy to allow source WG net to any destination, any port, any protocol

- No rules for WIREGUARD group - Leave as is

- Gateway set to WAN_DHCP

- Create a LAN rule to route a PFSENSE_LAN_IP and set the gateway to WG_WGv4 GW (Note: not all LAN traffic is routed via the tunnel, only selected traffic)

- Now trace / ping from the machine (PFSENSE_LAN_IP) to a few websites and see if you can reach them. I particularly had issues with 8.8.8.8, LinkedIn.com and a few others.
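For reference, this is how I understand the peer selection is supposed to behave with those allowed IPs: WireGuard picks the peer whose allowed IPs contain the destination, preferring the most specific prefix. The sketch below only models that documented behaviour with Python's ipaddress module; it is not how the kernel module implements it, and the "remote-pi" label is just a placeholder name.

```python
import ipaddress
from typing import Dict, List, Optional


def select_peer(dst: str, allowed_ips: Dict[str, List[str]]) -> Optional[str]:
    """Return the peer whose allowed IPs best (longest prefix) match dst."""
    addr = ipaddress.ip_address(dst)
    best_peer: Optional[str] = None
    best_len = -1
    for peer, prefixes in allowed_ips.items():
        for prefix in prefixes:
            net = ipaddress.ip_network(prefix)
            if addr.version != net.version:
                continue  # never match IPv4 against IPv6 or vice versa
            if addr in net and net.prefixlen > best_len:
                best_peer, best_len = peer, net.prefixlen
    return best_peer


# Allowed IPs as in the steps above; "remote-pi" is a hypothetical label.
ALLOWED = {"remote-pi": ["192.168.29.0/24", "10.100.100.50/32", "0.0.0.0/0"]}

for dst in ("8.8.8.8", "4.2.2.2", "192.168.29.10"):
    print(dst, "->", select_peer(dst, ALLOWED))
```

With 0.0.0.0/0 in the list, both 8.8.8.8 and 4.2.2.2 should resolve to the same peer, which is why needing an explicit 8.8.8.8/32 entry looks wrong to me.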

-

-

@arrmo Quite possible: different symptoms with a common underlying cause. Will have to wait and see. I asked for help on reddit and so far no one else seems to have come across this issue. A few days ago I did try setting up rules under the WIREGUARD group to see if that would make a difference to the lost packets, and it did not :(.

-

@ab5g OK, NP - let's see how it goes. As long as that group rule is in place, most traffic gets through (still some odd sites). But with it off, much more trouble. And I have tried adding all sorts of pass rules in LAN and WG (interface), none of them seem to be working. Dang it!

Thanks!

-

@arrmo ping jimp here with your issue details. One more voice will help maybe.

-

@ab5g said in [UnSolved] Possible BUG : Wireguard routing weirdly:

ping jimp here with your issue details

ping? Meaning IM? Thinking the comments above are a ping of sorts, no?

Thanks!

-

@AB5G BTW, are you finding that a pass-all rule on the WireGuard group does help any, or not at all? I find it helps, but it's not a fix-all. Still some issues.

I checked the firewall logs and nothing there is noted as blocked, so it's fun to debug. Any suggestions? Enable logging on the default rules? Or try tcpdump? Anything to help resolve this.

Thanks!
