HAProxy is running, but backend is down in stats and cannot access server

-

Re: HAProxy is running, but backend is down in stats and cannot access server

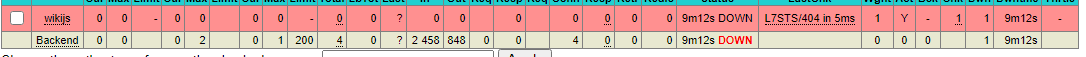

Hello @PiBa, I am running into a similar issue. This is what I see in the pfSense UI under stats:

    L7STS/404 : HTTP Status Check returned code <404>

The contents of my haproxy.cfg file are as follows:

    global
        maxconn 1000
        stats socket /tmp/haproxy.socket level admin expose-fd listeners
        gid 80
        nbproc 1
        nbthread 1
        hard-stop-after 15m
        chroot /tmp/haproxy_chroot
        daemon
        tune.ssl.default-dh-param 2048
        log-send-hostname TGpfSense-proxy
        server-state-file /tmp/haproxy_server_state

    listen HAProxyLocalStats
        bind 127.0.0.1:2200 name localstats
        mode http
        stats enable
        stats admin if TRUE
        stats show-legends
        stats uri /haproxy/haproxy_stats.php?haproxystats=1
        timeout client 5000
        timeout connect 5000
        timeout server 5000

    frontend shared-frontend-merged
        bind 1.2.3.4:443 name 1.2.3.4:443 ssl crt-list /var/etc/haproxy/shared-frontend.crt_list
        mode http
        log global
        option http-keep-alive
        option forwardfor
        acl https ssl_fc
        http-request set-header X-Forwarded-Proto http if !https
        http-request set-header X-Forwarded-Proto https if https
        timeout client 30000
        acl aclcrt_shared-frontend var(txn.txnhost) -m reg -i ^([^\.]*)\.domain\.com(:([0-9]){1,5})?$
        acl ACL1 var(txn.txnhost) -m str -i wiki.domain.com
        http-request set-var(txn.txnhost) hdr(host)
        use_backend wiki.domain.com_ipv4 if ACL1

    frontend http-to-https
        bind 1.2.3.4:80 name 1.2.3.4:80
        mode http
        log global
        option http-keep-alive
        timeout client 30000
        http-request redirect scheme https

    backend wiki.domain.com_ipv4
        mode http
        id 10100
        log global
        timeout connect 30000
        timeout server 30000
        retries 3
        source ipv4@ usesrc clientip
        option httpchk OPTIONS /
        server wikijs 10.10.10.30:3000 id 10101 check inter 1000

haproxy -vv result:

    HA-Proxy version 1.8.27-493ce0b 2020/11/06
    Copyright 2000-2020 Willy Tarreau <willy@haproxy.org>

    Build options :
      TARGET  = freebsd
      CPU     = generic
      CC      = cc
      CFLAGS  = -O2 -pipe -fstack-protector-strong -fno-strict-aliasing -fno-strict-aliasing -Wdeclaration-after-statement -fwrapv -Wno-address-of-packed-member -Wno-null-dereference -Wno-unused-label -DFREEBSD_PORTS
      OPTIONS = USE_GETADDRINFO=1 USE_ZLIB=1 USE_CPU_AFFINITY=1 USE_ACCEPT4=1 USE_REGPARM=1 USE_OPENSSL=1 USE_LUA=1 USE_STATIC_PCRE=1 USE_PCRE_JIT=1

Kindly advise how to address this issue. I would like to avoid running another system for this purpose when such a feature is available in pfSense.

Note: Public IP and domain names have been modified in the .cfg file for this post.

-

@tgill

The webserver replies with a '404' response. Why? Perhaps you need to supply a 'Host: www' header with the request? The health-check section of the backend configuration page hints at how that can be done.

-

@piba

Thank you for checking out this post. I was able to resolve the issue by adding `http-check expect status 404` under advanced settings. TBH, I didn't quite understand how this line would make a difference, because while accessing the site through standard NAT rules I didn't see any 404 errors, unless the requested page was sending a 404 response before redirecting to a valid page. Please feel free to share a deeper explanation if you have one.

Thank you once again for your time.
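On why this line can matter: the health check is a separate request that HAProxy itself sends to the server (`OPTIONS /` in the config above), independent of browser traffic, and by default only 2xx and 3xx replies to it count as healthy. A rough sketch of where the workaround presumably ends up in the generated backend, assuming pfSense writes the advanced setting verbatim into the backend section (the other lines are copied from the config above, trimmed for brevity):

```haproxy
backend wiki.domain.com_ipv4
    mode http
    option httpchk OPTIONS /
    # Workaround: accept a 404 reply to the health check as "up".
    # Without this line, only 2xx/3xx replies mark the server healthy.
    http-check expect status 404
    server wikijs 10.10.10.30:3000 id 10101 check inter 1000
```

The trade-off is that the server is then reported as up only while it keeps answering the check with exactly 404, so a change that makes the check URL start working would flip the backend to down.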

-

@tgill

Well, I can't be sure, and using "http-check expect status 404" can be a decent workaround, though I don't think it should be required here. (For a '401 authentication required' I think it's acceptable ;) as haproxy doesn't know the credentials to log in.)

For the 404, I expect that the webserver 'needs' a Host header. Do you know if the webserver is configured to use 'virtual-hosts'? If you visit the webserver locally by its IP, does it respond with the proper page? What happens if you use curl to request the page?

-

> Do you know if the webserver is configured to use 'virtual-hosts'?

I believe the built-in NGINX server may be the cause of that.

> If you visit the webserver locally by its IP, does it respond with the proper page?

Yes, I do get routed to the correct page, and when I ran the cURL command I saw that it was redirecting to the sign-in page, but with a 302 status code. Please see the command output below.

    curl 10.10.10.30:10080
    <html><body>You are being <a href="http://10.10.10.30:10080/users/sign_in">redirected</a>.</body></html>

    curl -I 10.10.10.30:10080
    HTTP/1.1 302 Found
    Server: nginx
    Date: Sat, 17 Apr 2021 05:57:58 GMT
    Content-Type: text/html; charset=utf-8
    Connection: keep-alive
    Cache-Control: no-cache
    Location: http://10.10.10.30:10080/users/sign_in
    Set-Cookie: experimentation_subject_id=eyJfcmFpbHMiOnsibWVzc2FnZSI6IklqWmhPVFV5WmpKbUxXRTNPVFV0TkdVNVppMWhZMlpqTFRSalpqRmhaV1JqTmpaak1TST0iLCJleHAiOm51bGwsInB1ciI6ImNvb2tpZS5leHBlcmltZW50YXRpb25fc3ViamVjdF9pZCJ9fQ%3D%3D--1e52d67fefb6115c51916e512ee89e72134ea6ab; path=/; expires=Wed, 17 Apr 2041 05:57:58 GMT; HttpOnly
    X-Content-Type-Options: nosniff
    X-Download-Options: noopen
    X-Frame-Options: DENY
    X-Gitlab-Feature-Category: projects
    X-Permitted-Cross-Domain-Policies: none
    X-Request-Id: 01F3F6GGKSQNK1DQZBJV8FJZNA
    X-Runtime: 0.090303
    X-Ua-Compatible: IE=edge
    X-Xss-Protection: 1; mode=block
    Strict-Transport-Security: max-age=31536000
    Referrer-Policy: strict-origin-when-cross-origin

I realize that my setup may not be an ideal one, but I think this workaround will have to do since this is a home-lab environment with GitLab running in a Docker container. I just wanted to get some understanding of what might have been happening in the background. I really appreciate your time @PiBa.

-

@tgill

Okay, a 302 is not a 404 though, so curl's request must differ from the one haproxy's health check sends. Perhaps just change the check method from OPTIONS to GET and see if that makes a difference? Curl uses GET by default.

-

HAProxy is testing over HTTP/1.0 while your curl is using HTTP/1.1. That may very well be the difference between the two tests and the two results.

You can try something like `HTTP/1.1\r\nHost:\ hostname.domain.lan` in the "Http check version" box in your backend's configuration.
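For reference, a sketch of what this suggestion would presumably produce in the generated haproxy.cfg. It rests on two assumptions: that pfSense appends the box's contents to the `option httpchk` line as the version argument, and that `wiki.domain.com` (taken from the ACL in the config above) is the right value to substitute for the placeholder hostname:

```haproxy
backend wiki.domain.com_ipv4
    mode http
    # Health check sent as HTTP/1.1 with a Host header. The "\ " escapes
    # the space so the whole string stays a single config argument.
    option httpchk OPTIONS / HTTP/1.1\r\nHost:\ wiki.domain.com
    server wikijs 10.10.10.30:3000 id 10101 check inter 1000
```

If the server selects the site by Host header, the check should then reach the intended site, which may make the `http-check expect status 404` workaround unnecessary.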