Captive portal Idle timeout and Hard timeout not working

-

Maybe there is an easy way to check whether that cron task wakes up every minute.

But I'm not aware of one. So, let's go the easy way.

This:

@shood said in Captive portal Idle timeout and Hard timeout not working:

/etc/rc.prunecaptiveportal cpzone

is a file. A text file.

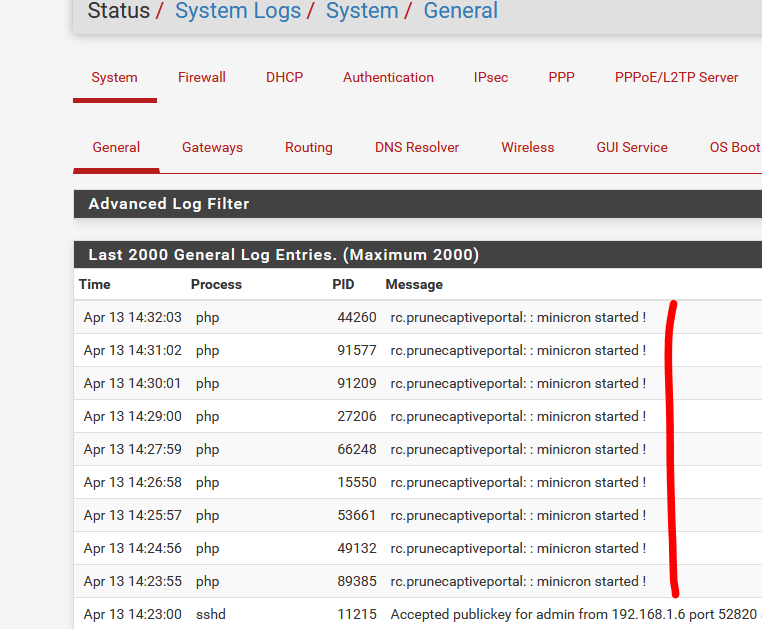

On the (now) empty line 40, put this:

    log_error("{$cpzone} : minicron started !");

Now, within a minute at most, check the system log.

Do you see it?

Edit: Hmm, I had a phone call. It lasted 9 minutes...

By the way: if you look at the rest of /etc/rc.prunecaptiveportal, you'll find that it ends up calling the function captiveportal_prune_old() in /etc/inc/captiveportal.inc.

One of the first things it checks is the hard timeout and the soft timeout. These soft and hard timeouts work for me.

I couldn't come up with a reason why they shouldn't work. Version 2.5.0 didn't even change that part of the code.

Maybe try a "Disconnect all users" and a good old reboot?

-

@gertjan Thanks for the answer.

I added the log message and it worked fine.

I see "minicron started" in the system log.

The function captiveportal_prune_old() is also present near the end of /etc/inc/captiveportal.inc. I also tried disconnecting all users and rebooting the system,

but the hard timeout and idle timeout still don't work!

On the Captive Portal status page I see that all users are still connected. Under System Logs > Authentication > Captive Portal Auth, I only see "ACCEPT" messages:

    Apr 13 16:23:20 logportalauth 86745 Zone: cpzone - ACCEPT

I haven't seen any session timeout or disconnect messages.

-

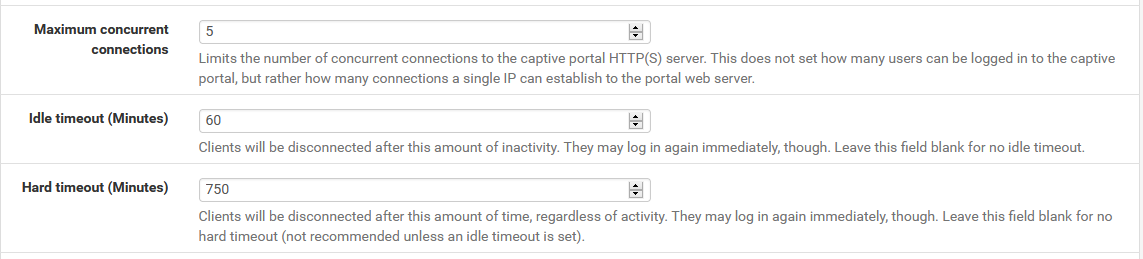

@shood How did you set your idle and hard timeouts? Could you post a screenshot?

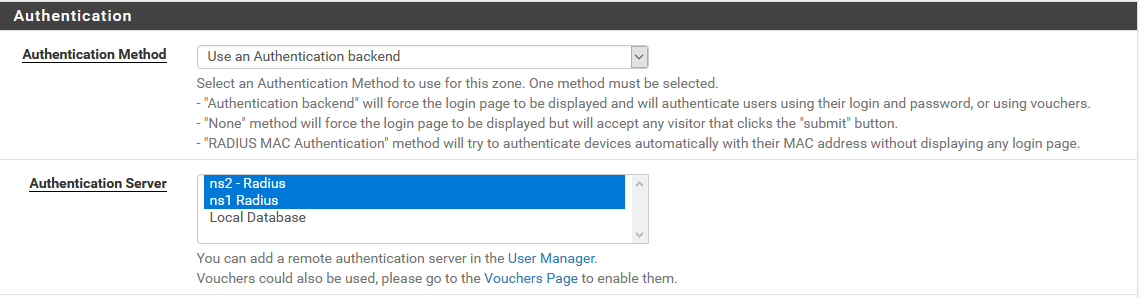

You already said you're not using FreeRADIUS. How are you performing authentication? Local logins? No authentication?

-

@free4 Thanks for the reply.

I use an external RADIUS server for authentication.

-

@shood said in Captive portal Idle timeout and Hard timeout not working:

I use an external RADIUS server for authentication.

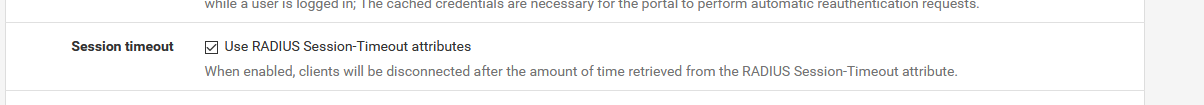

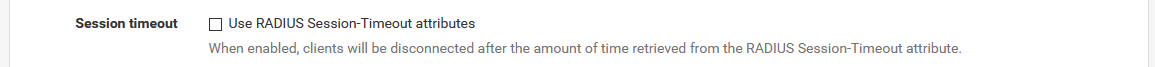

Then, what do you have for this setting?

If it's checked, I would suspect that pfSense's own timeout settings are not used.

So, I propose a test ^^

In /etc/inc/captiveportal.inc (in the function captiveportal_prune_old()):

Lines 854-859:

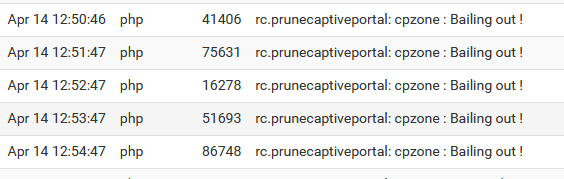

    /* Is there any job to do? If we are in High Availability sync, are we in backup mode ? */
    if ((!$timeout && !$idletimeout && !$trafficquota && !isset($cpcfg['reauthenticate']) &&
        !isset($cpcfg['radiussession_timeout']) && !isset($cpcfg['radiustraffic_quota']) &&
        !isset($vcpcfg['enable']) && !isset($cpcfg['radacct_enable'])) ||
        captiveportal_ha_is_node_in_backup_mode($cpzone)) {
        return;
    }

Add a log line:

    /* Is there any job to do? If we are in High Availability sync, are we in backup mode ? */
    if ((!$timeout && !$idletimeout && !$trafficquota && !isset($cpcfg['reauthenticate']) &&
        !isset($cpcfg['radiussession_timeout']) && !isset($cpcfg['radiustraffic_quota']) &&
        !isset($vcpcfg['enable']) && !isset($cpcfg['radacct_enable'])) ||
        captiveportal_ha_is_node_in_backup_mode($cpzone)) {
        log_error("{$cpzone} : Bailing out !");
        return;
    }

If you see "Bailing out !" in the log, then pfSense's auto-prune isn't used at all: everything is under RADIUS control.
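The guard above has two halves joined by `||`: a "nothing to do" test on the timeout, voucher and RADIUS options, and the HA backup-mode check. As a minimal sketch (a hypothetical stand-alone function, not pfSense code), the condition can be modelled with plain parameters to show that the HA half alone is enough to trigger the bail-out, whatever timeouts are configured. Assumption: in the real function `$timeout`, `$idletimeout` and `$trafficquota` are derived from the zone configuration; here they are simply passed in.

```php
<?php
// Hypothetical model of the early-exit guard in captiveportal_prune_old().
// The real function derives $timeout, $idletimeout and $trafficquota from
// the zone configuration; here they are plain parameters for testing.
function prune_would_bail(
    int $timeout,
    int $idletimeout,
    int $trafficquota,
    array $cpcfg,
    array $vcpcfg,
    bool $inBackupMode
): bool {
    // "Nothing to do": no local timeouts or quota, and none of the
    // reauthentication, RADIUS, voucher or accounting options are set.
    $nothingToDo = !$timeout && !$idletimeout && !$trafficquota
        && !isset($cpcfg['reauthenticate'])
        && !isset($cpcfg['radiussession_timeout'])
        && !isset($cpcfg['radiustraffic_quota'])
        && !isset($vcpcfg['enable'])
        && !isset($cpcfg['radacct_enable']);

    // The HA check is OR-ed in, so a node considered "backup" bails out
    // even when timeouts are configured.
    return $nothingToDo || $inBackupMode;
}

// Hard and idle timeouts set (arbitrary values), node seen as backup.
var_dump(prune_would_bail(60, 10, 0, [], [], true));  // bool(true)
// Same settings, node not in backup mode.
var_dump(prune_would_bail(60, 10, 0, [], [], false)); // bool(false)
```

So "Bailing out !" in the log does not by itself prove that RADIUS is in control: with timeouts set, it can only come from the backup-mode check.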

Take note of the comment found on line 879 (880):

    /* hard timeout or session_timeout from radius if enabled */

So, most probably: check your RADIUS setup and see which timeout values are used, as the local ones (pfSense settings) are not used.

Or uncheck the "Use RADIUS Session-Timeout attributes" option.

-

@gertjan said in Captive portal Idle timeout and Hard timeout not working:

the "Bailing out !"

The RADIUS Session-Timeout option is unchecked.

I added the log line and I do see "Bailing out !" in the log!

Strange! On the old version everything was OK.

-

@shood said in Captive portal Idle timeout and Hard timeout not working:

I added the log line and I do see "Bailing out !" in the log!

So:

@gertjan said in Captive portal Idle timeout and Hard timeout not working:

If you see "Bailing out !" in the log, then pfSense's auto-prune isn't used at all: everything is under RADIUS control.

Next step:

As said, run your RADIUS server in detailed logging mode; you'll see the transactions.

You can check whether variables set in the database tables it uses, such as timeout settings, are actually used or not. That's just my opinion, but: pfSense has been written with the FreeBSD FreeRADIUS 'syntax' in mind. Some minor renaming of 'default' variables could produce exactly what you're seeing now.

-

@gertjan

Hi again, after debugging I've found the problem:

I have two pfSense boxes (BoxA and BoxB) in a load-balancing setup: some interfaces are master and some are slave (BACKUP). Because the CARP interfaces are load-balanced and some of them are in BACKUP state, captiveportal_ha_is_node_in_backup_mode returns the wrong value, true.

In /etc/inc/pfsense-utils.inc, captiveportal_ha_is_node_in_backup_mode($cpzone):

    /*
     * Return true if the CARP status of at least one interface of a captive portal zone is in backup mode
     * This function returns false if CARP is not enabled on any interface of the captive portal zone
     */
    function captiveportal_ha_is_node_in_backup_mode($cpzone) {
        global $config;

        $cpinterfaces = explode(",", $config['captiveportal'][$cpzone]['interface']);

        if (is_array($config['virtualip']['vip'])) {
            foreach ($cpinterfaces as $interface) {
                foreach ($config['virtualip']['vip'] as $vip) {
                    if (($vip['interface'] == $interface) && ($vip['mode'] == "carp")) {
                        if (get_carp_interface_status("_vip{$vip['uniqid']}") != "MASTER") {
                            log_error("Error captiveportal in backup mode " . $interface . " state " . get_carp_interface_status("_vip{$vip['uniqid']}"));
                            return true;
                        }
                    }
                }
            }
        }
        return false;
    }

The function captiveportal_ha_is_node_in_backup_mode($cpzone) returned true because, in my case, at least one interface is in backup mode. That's why the prune was terminated too early and no user was disconnected due to a timeout.
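To see why a load-balanced pair trips this check, here is a minimal, self-contained PHP sketch of the function's loop. Assumptions: `get_carp_interface_status()` is replaced by a hypothetical `$carpStatus` array keyed by VIP uniqid, and the interface names and uniqids are made up; it models the logic shown above, it is not the pfSense function itself.

```php
<?php
// Self-contained model of captiveportal_ha_is_node_in_backup_mode().
// Assumption: $carpStatus stands in for get_carp_interface_status(),
// keyed by VIP uniqid; interface names and uniqids below are made up.
function zone_in_backup_mode(array $cpInterfaces, array $vips, array $carpStatus): bool
{
    foreach ($cpInterfaces as $interface) {
        foreach ($vips as $vip) {
            if ($vip['interface'] === $interface && $vip['mode'] === 'carp') {
                // A single non-MASTER CARP VIP on any zone interface is enough.
                if (($carpStatus[$vip['uniqid']] ?? '') !== 'MASTER') {
                    return true;
                }
            }
        }
    }
    // No CARP VIP on the zone's interfaces, or all of them are MASTER.
    return false;
}

$cpInterfaces = explode(',', 'lan,opt1');
$vips = [
    ['interface' => 'lan',  'mode' => 'carp', 'uniqid' => 'a1'],
    ['interface' => 'opt1', 'mode' => 'carp', 'uniqid' => 'b2'],
];

// "Load-balanced" split: the box is MASTER on lan but BACKUP on opt1.
var_dump(zone_in_backup_mode($cpInterfaces, $vips, ['a1' => 'MASTER', 'b2' => 'BACKUP'])); // bool(true)
// Every VIP MASTER, as after the reboot of BoxA.
var_dump(zone_in_backup_mode($cpInterfaces, $vips, ['a1' => 'MASTER', 'b2' => 'MASTER'])); // bool(false)
```

With a mixed MASTER/BACKUP split, neither box has all of the zone's VIPs as MASTER, so in this model both report "backup" and neither prunes.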

I noticed that when I restarted BoxA and all interfaces were MASTER, the hard timeout worked fine. I solved the problem by commenting out the call to captiveportal_ha_is_node_in_backup_mode in /etc/inc/captiveportal.inc:

    /* Is there any job to do? If we are in High Availability sync, are we in backup mode ? */
    if ((!$timeout && !$idletimeout && !$trafficquota && !isset($cpcfg['reauthenticate']) &&
        !isset($cpcfg['radiussession_timeout']) && !isset($cpcfg['radiustraffic_quota']) &&
        !isset($vcpcfg['enable']) && !isset($cpcfg['radacct_enable'])) || 0 ) {
        # if carp interfaces are load_balanced and some of the IFs are in BACKUP state
        # captiveportal_ha_is_node_in_backup_mode returns wrong value true
        # captiveportal_ha_is_node_in_backup_mode($cpzone)) {
        log_error("captiveportal_prune_old run skipped due to captiveportal_ha_is_node_in_backup_mode");
        return;
    }

The same problem occurs with the function captiveportal_send_server_accounting: I had to comment out the backup-mode check to see the accounting records on my RADIUS server.

    function captiveportal_send_server_accounting($type = 'on', $ruleno = null, $username = null, $clientip = null, $clientmac = null, $sessionid = null, $start_time = null, $stop_time = null, $term_cause = null) {
        global $cpzone, $config;
        $cpcfg = $config['captiveportal'][$cpzone];
        $acctcfg = auth_get_authserver($cpcfg['radacct_server']);

        if (!isset($cpcfg['radacct_enable']) || empty($acctcfg) || 0 ) {
            # captiveportal_ha_is_node_in_backup_mode($cpzone)) {
            return null;

Another solution might be to reverse the return value of the backup-mode function, from true to false?
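Simply reversing the return value is not equivalent to fixing the mixed-state case. A minimal sketch with the two return statements swapped (again a hypothetical stand-alone function, with a made-up `$carpStatus` array standing in for `get_carp_interface_status()`) shows that the check would then report "backup" on a standalone box without CARP and on a node whose VIPs are all MASTER, so pruning, and the accounting guarded by the same check, would be skipped exactly where they should run.

```php
<?php
// Hypothetical "reversed" variant of the backup-mode check: the two return
// values are swapped. $carpStatus stands in for get_carp_interface_status().
function zone_in_backup_mode_reversed(array $cpInterfaces, array $vips, array $carpStatus): bool
{
    foreach ($cpInterfaces as $interface) {
        foreach ($vips as $vip) {
            if ($vip['interface'] === $interface && $vip['mode'] === 'carp'
                && ($carpStatus[$vip['uniqid']] ?? '') !== 'MASTER') {
                return false; // original: return true
            }
        }
    }
    return true; // original: return false
}

$vips = [['interface' => 'lan', 'mode' => 'carp', 'uniqid' => 'a1']];

// Standalone box with no CARP VIPs: pruning would now always be skipped.
var_dump(zone_in_backup_mode_reversed(['lan'], [], []));                    // bool(true)
// Primary with every VIP MASTER: pruning would also be skipped.
var_dump(zone_in_backup_mode_reversed(['lan'], $vips, ['a1' => 'MASTER'])); // bool(true)
// Node whose VIP is BACKUP: pruning would now run there instead.
var_dump(zone_in_backup_mode_reversed(['lan'], $vips, ['a1' => 'BACKUP'])); // bool(false)
```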

I didn't have this problem before version 2.5.0. Anyway, I have already upgraded the box to the new version 2.5.1.

-

@shood pfSense only supports primary-secondary configurations (to provide "High Availability in case of a disaster").

Active-active configurations (two masters load-balancing between them) are not supported by pfSense. At all.

There is also no plan to support this kind of feature in the future.

"High Availability" support =/= "clustering support". That's why you are running into issues...

The purpose of captiveportal_ha_is_node_in_backup_mode is, as the name implies, to detect whether the pfSense appliance is master or backup. When it is backup, the captive portal (as well as many other pfSense features: VPN, etc.) is put on standby.

It is not possible to enable an active-active configuration just by commenting out calls to this function, as you may run into issues because the appliances sync with each other (example: "when rebooting or becoming master, the primary will try to retrieve users from the secondary"). You may need to completely or partially disable the sync between the captive portals.

-

@free4

It was working fine, without any issues, in previous releases.

Anyway, thanks for the information.

-
