OpenVPN 2.5.1 MSS problem, RDP freezes

-

Hi,

I ran into the same problem on two different installations, so I decided to create this topic; maybe someone else is facing something similar. The issue appeared after upgrading from 2.4.5p1 to 2.5.1. In both environments I had to roll back to 2.4.5p1.

On 2.5.1 the OpenVPN connection is established correctly, RDP connects smoothly, and everything looks fine... for a few (up to 10) seconds. Then RDP freezes and reconnects; after reconnecting and a few seconds of normal operation it hangs again. The OpenVPN connection itself is stable.

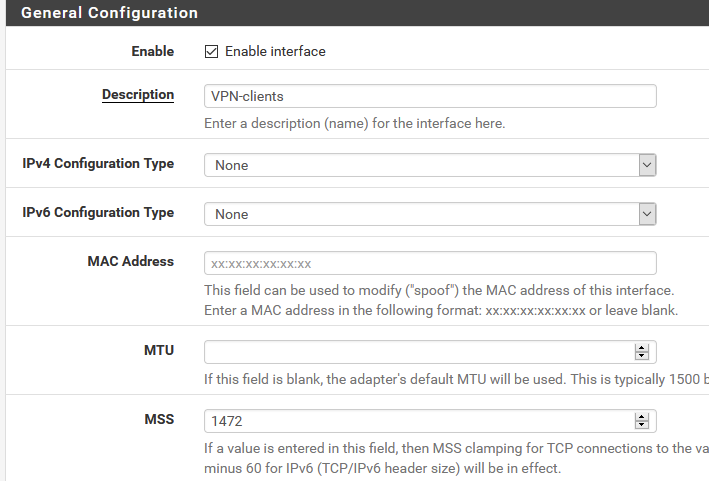

Based on this I started digging into MTU, MSS and fragmentation. I tested many combinations of "mssfix", "fragment", "tun-mtu", "link-mtu" and other similar OpenVPN options. Nothing helped. Then I looked closer at MSS and found these topics and bugs: 1 2 3 4

After setting the MSS on the OpenVPN interface to 1420, the issue with RDP vanished. I compared /tmp/rules.debug from 2.4.5p1 and 2.5.1; both look similar, but in my opinion it is not working correctly in 2.5.1 and needs additional manual configuration on the VPN interface.
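For reference, the 1420 value lines up with the per-interface scrub rules in the 2.5.1 output further down (max-mss 1380 for inet, 1360 for inet6) if the firewall subtracts the IP and TCP header sizes from the entered value. A minimal sketch of that arithmetic, assuming plain headers without options; the function name is only illustrative:

```python
# Header sizes in bytes, assuming no IP or TCP options.
IPV4_HEADER = 20
IPV6_HEADER = 40
TCP_HEADER = 20


def clamp_values(interface_mss: int) -> dict:
    """Return the max-mss values expected in the scrub rules.

    Assumption: the value entered on the interface has the IP and TCP
    header overhead subtracted before it is written to the rule set.
    """
    return {
        "inet": interface_mss - IPV4_HEADER - TCP_HEADER,
        "inet6": interface_mss - IPV6_HEADER - TCP_HEADER,
    }


print(clamp_values(1420))  # {'inet': 1380, 'inet6': 1360}
```

So the clamp that actually reaches TCP on the OpenVPN interface is lower than the 1398 used in the vpn_networks rules.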

I'd appreciate feedback from someone more familiar with pf and how '/tmp/rules.debug' is interpreted.
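To make the comparison between the two versions easier, the max-mss clamps can be pulled out of the grep output with a few lines of Python. This is only a sketch: the regular expression and the function name are my own, and the sample lines are copied from the outputs in this post.

```python
import re

# Matches scrub rules that carry a max-mss option and captures
# the match criteria together with the clamp value.
SCRUB_MSS = re.compile(r"^scrub\s+(?P<match>.*?)\s+max-mss\s+(?P<mss>\d+)")


def mss_clamps(rules_text: str) -> list:
    """Return (match criteria, max-mss) pairs for every scrub rule with a clamp."""
    clamps = []
    for line in rules_text.splitlines():
        m = SCRUB_MSS.match(line.strip())
        if m:
            clamps.append((m.group("match"), int(m.group("mss"))))
    return clamps


sample = """\
scrub from any to <vpn_networks> max-mss 1398
scrub on $WAN inet all fragment reassemble
scrub on $VPN_OpenVPN inet all max-mss 1380 fragment reassemble
"""

for match, mss in mss_clamps(sample):
    print(f"{mss:>5}  {match}")
```

Running it over the saved `grep scrub /tmp/rules.debug` output from each version gives a short list that is easier to compare than the raw rule sets.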

@viktor_g Maybe you will be able to look at it; it could be connected with: IPv6 PPPoE MSS incorrect

From my point of view the statements below aren't working in 2.5.1 for OpenVPN, but I cannot prove that. :)

    scrub from any to <vpn_networks> max-mss 1398
    scrub from <vpn_networks> to any max-mss 1398

Regards.

2.4.5p1

    [2.4.5-RELEASE][admin@01]/root: grep scrub /tmp/rules.debug
    scrub from any to <vpn_networks> max-mss 1398
    scrub from <vpn_networks> to any max-mss 1398
    scrub on $WAN all fragment reassemble
    scrub on $LAN all fragment reassemble
    scrub on $V100_10_0_100_0 all fragment reassemble
    scrub on $V102_10_0_102_0 all fragment reassemble
    scrub on $V104_10_0_104_0 all fragment reassemble
    scrub on $VPN_OpenVPN all fragment reassemble
    [2.4.5-RELEASE][admin@01]/root: grep vpn_networks /tmp/rules.debug
    table <vpn_networks> { 10.0.16.0/24 10.0.16.0/24 10.150.40.10/32 10.202.91.0/24 10.245.254.0/24 }
    scrub from any to <vpn_networks> max-mss 1398
    scrub from <vpn_networks> to any max-mss 1398
    [2.4.5-RELEASE][admin@01]/root:

2.5.1

    [2.5.1-RELEASE][admin@02]/root: grep scrub /tmp/rules.debug
    scrub from any to <vpn_networks> max-mss 1398
    scrub from <vpn_networks> to any max-mss 1398
    scrub on $WAN inet all fragment reassemble
    scrub on $WAN inet6 all fragment reassemble
    scrub on $LAN inet all fragment reassemble
    scrub on $LAN inet6 all fragment reassemble
    scrub on $V100_10_0_100_0 inet all fragment reassemble
    scrub on $V100_10_0_100_0 inet6 all fragment reassemble
    scrub on $V102_10_0_102_0 inet all fragment reassemble
    scrub on $V102_10_0_102_0 inet6 all fragment reassemble
    scrub on $V104_10_0_104_0 inet all fragment reassemble
    scrub on $V104_10_0_104_0 inet6 all fragment reassemble
    scrub on $VPN_1 inet all fragment reassemble
    scrub on $VPN_1 inet6 all fragment reassemble
    scrub on $VPN_OpenVPN inet all max-mss 1380 fragment reassemble
    scrub on $VPN_OpenVPN inet6 all max-mss 1360 fragment reassemble
    [2.5.1-RELEASE][admin@02]/root: grep vpn_networks /tmp/rules.debug
    table <vpn_networks> { 10.0.16.0/24 10.0.16.0/24 10.150.40.10/32 10.202.91.0/24 10.245.254.0/24 }
    scrub from any to <vpn_networks> max-mss 1398
    scrub from <vpn_networks> to any max-mss 1398
    [2.5.1-RELEASE][admin@02]/root:

-

@zabi I'm having a similar issue:

https://forum.netgate.com/topic/163851/single-wan-openvpn-issue-can-connect-intermittent-access-to-network-2-5-1?_=1621430224292

I believe it is related to yours; yesterday morning the OpenVPN client reconnected many times within a minute or so. You used RDP as the example and fixed the issue for it, but I guess it is the same issue with every protocol, whatever the service on the other side.

I have to roll back to 2.4.5, but I need to be on-site to do it just in case, and then see whether it becomes stable again.

-

@iampowerslave If you haven't rolled back yet, maybe try my workaround: set the MSS on the OpenVPN interface, if you have it configured this way. For me it helped and reassured me that the problem is somewhere around MTU and MSS. In one environment it has been working for two weeks; in the second one, with 100+ users, I'm still afraid to perform the upgrade. :)

-

@zabi Hi!

Thanks for that, but I'm not at the site and won't get crazy changing stuff. And when I get a chance to be at the site I'm going to just replace the VM HDD with a 2.4.5 version.

Weird thing: today it behaved as expected.