kernel panic since 2.5.2.r.20210615.1851

-

That part of the crash report doesn't tell us a whole lot, can you post the rest of it, or at least the backtrace?

-

@jimp It offered me two files to download. I have retained them. Which do you need? Is it the ddb.txt from textdump.tar.0?

-

The `textdump.tar.<n>` file has all of the necessary data inside.

-

Following up on this, we're still not able to reproduce a panic here but if you can get us the backtrace we can try to locate the cause and fix it.

-

@jimp Ok I built a new box and made sure it gets load, issue can be replicated there, too.

I have collected the data but I cannot DM you. Where shall I send it?

-

The `textdump.tar.X` file wouldn't contain anything sensitive, you can post it here. Or open it in something like 7-zip and post the `ddb.txt` and the panic info from the end of `msgbuf.txt` at least.

-

@jimp Sorry about the delay.

So to recap, the pfctl -ss CPU issue was resolved with this update. At least I thought it was, because it stopped falling over early on. But I now see it is still falling over where it handled the workload fine in 2.4.5-p1. Since the fix it can just handle a bit more before it falls over. It also seems to OOM itself now and then crash.

I can make it fall over by having a 1GB RAM KVM instance, set for 100k states, receive UDP traffic like NTP or DNS. Once it reaches 40-50k states the pfctl -ss thing makes a comeback and RAM utilization increases exponentially, no longer in proportion with the number of states, and it eventually falls over. This OOM situation seems new.

Here are the files you asked for.

-

I opened https://redmine.pfsense.org/issues/12069 for this and we'll look into it shortly.

I can't reproduce a problem here, though my ability to generate large volumes of states is limited. I tried hitting a VM with 512MB RAM and 200k state table and though I could sort of see an inconsistent slowdown with high numbers of states I haven't been able to make it panic.

It is possible that it legitimately did run out of RAM to contain the state table in your scenario. 100k states would consume about 100MB or so of kernel memory, which is still limited on a VM with 1GB RAM. Check the values in `sysctl vm | grep kmem`. Kernel memory is not the same as RAM; only a portion of available RAM can be used directly by the kernel.

-

@jimp Thank you so much.

For mild stress testing of pfSense I usually use free tier loader.io against haproxy-devel or simply use dnsperf against unbound. If I run it repeatedly I can conjure up enough states to make an impact :)

Bump up ulimit of client machine, then

resperf-report -d queryfile-example-10million-201202 -C 20000 -s <ip of pfsense>

Doing this repeatedly in short succession I can get to:

State table size

50% (50013/100000)

At this point system load and memory utilization in top are uncharacteristically high vs. 2.4.5-p1 and things start falling to pieces.

Running `sysctl vm | grep kmem` at that time:

vm.uma_kmem_total: 528101376
vm.uma_kmem_limit: 977170432
vm.kmem_map_free: 449069056
vm.kmem_map_size: 528101376
vm.kmem_size_scale: 1
vm.kmem_size_max: 1319413950874
vm.kmem_size_min: 0
vm.kmem_zmax: 65536
vm.kmem_size: 977170432

Query file for dnsperf for anyone interested is over here
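For anyone who wants to put those counters in perspective, here is a minimal Python sketch that parses the `sysctl vm | grep kmem` output above and reports how much of the kernel memory limit is in use. The function name `parse_sysctl` and the choice of `vm.kmem_map_size` / `vm.kmem_size` as "used" and "limit" are my assumptions for illustration. The per-state figure is only a rough upper bound, because kernel memory holds far more than the pf state table.

```python
# Sketch: summarize kernel memory usage from pasted `sysctl vm | grep kmem` output.
# Sample values are the ones posted above; names below are illustrative only.

SAMPLE = """
vm.uma_kmem_total: 528101376
vm.uma_kmem_limit: 977170432
vm.kmem_map_free: 449069056
vm.kmem_map_size: 528101376
vm.kmem_size_scale: 1
vm.kmem_size_max: 1319413950874
vm.kmem_size_min: 0
vm.kmem_zmax: 65536
vm.kmem_size: 977170432
"""


def parse_sysctl(text):
    """Return {name: int_value} from 'name: value' pairs.

    Names and values simply alternate, so this works whether the pairs
    are separated by newlines or pasted on a single line.
    """
    tokens = text.split()
    return {name.rstrip(":"): int(value)
            for name, value in zip(tokens[0::2], tokens[1::2])}


kmem = parse_sysctl(SAMPLE)
used = kmem["vm.kmem_map_size"]    # assumed: kernel memory currently mapped
limit = kmem["vm.kmem_size"]       # assumed: ceiling for kernel memory
free = kmem["vm.kmem_map_free"]
states = 50013                     # state count reported at the same moment

MIB = 2 ** 20
print(f"kernel memory used: {used / MIB:.0f} MiB of {limit / MIB:.0f} MiB "
      f"({used / limit:.0%})")
print(f"kernel memory free: {free / MIB:.0f} MiB")
# Upper bound only: not all kernel memory belongs to pf states.
print(f"kernel bytes per state (upper bound): {used / states:.0f}")
```

With the numbers above, a bit over half of the kernel memory limit is already in use at roughly 50k states, which is much more than the estimate of about 100MB per 100k states would suggest.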

-

Using a combination of that dnsperf test, some nmap scans, and setting the firewall to conservative states I was able to get a test system over 100k states, but I haven't been able to make it panic in pfctl yet. I did run it out of RAM and pfctl was killed, and it did lock up after I stopped testing (might be due to heat), so it was definitely getting hit hard.

I was able to panic a VM but not in pfctl, it was in ZFS and likely due to the low RAM and all the disk writes from unbound logging all the failed DNS queries.

When I had it up around 50k states, `pfctl -ss` took about 4.5 minutes to finish. After that I never did see it finish until the RAM ran out and the process was killed. So there is definitely a problem, it's just not as easy to trigger as it seems.

I'm still making adjustments and running some tests here yet. I'll update the Redmine issue with my results soon.

-

I was finally able to make it panic on a VM with less RAM. Though it got up to 200k states and stayed there for a while.

`pfctl -ss` was inconsistently slow. Sometimes it returns results in a few seconds, other times it takes 30s-5m. Very odd. The system as a whole would become unresponsive near the end, both over the network and on the console.

I added notes to https://redmine.pfsense.org/issues/12069 with my findings.
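To make the inconsistent timing easier to compare between snapshots, a small sketch along these lines could record how long several consecutive runs take. It assumes Python 3 is available on the test box and that it runs as root. The helper name `time_command` and the 600-second timeout are arbitrary choices for illustration, not anything used in the testing described here.

```python
import subprocess
import time


def time_command(cmd, runs=3, timeout=600):
    """Run `cmd` `runs` times and return the wall-clock seconds of each run.

    A run that exceeds `timeout` seconds is stopped and recorded as None,
    so a hung command does not block the whole measurement.
    """
    durations = []
    for _ in range(runs):
        start = time.monotonic()
        try:
            # Output is discarded; only the elapsed time matters here.
            subprocess.run(cmd, stdout=subprocess.DEVNULL,
                           stderr=subprocess.DEVNULL,
                           timeout=timeout, check=False)
            durations.append(time.monotonic() - start)
        except subprocess.TimeoutExpired:
            durations.append(None)
    return durations
```

On the firewall it would be called as `time_command(["pfctl", "-ss"], runs=5)`. Comparing the resulting list at different state counts should show whether the slowdown tracks the size of the state table or varies from run to run.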

-

Hi. I upgraded my test vm today to the latest version:

2.5.2-RC (amd64)

built on Thu Jun 17 17:10:26 EDT 2021

FreeBSD 12.2-STABLE

All seems well and I am glad that Netgate is actively squashing bugs :). Kernel panic is pretty serious so please let me know if there is anything I can do to test or help. I am running this in a virtual machine so let's squash those bugs! :)

-

There is a new snapshot up now (`2.5.2.r.20210629.1350`) which should be much better here. Update and give it a try.

We ended up rolling back all those pf changes since they need some work yet, so it's closer to what was in 21.05 and 2.5.1 (but with multi-wan fixed, of course).

-

@jimp <3 Love you all. In the beer

sense, not the romantic

sense, not the romantic  .

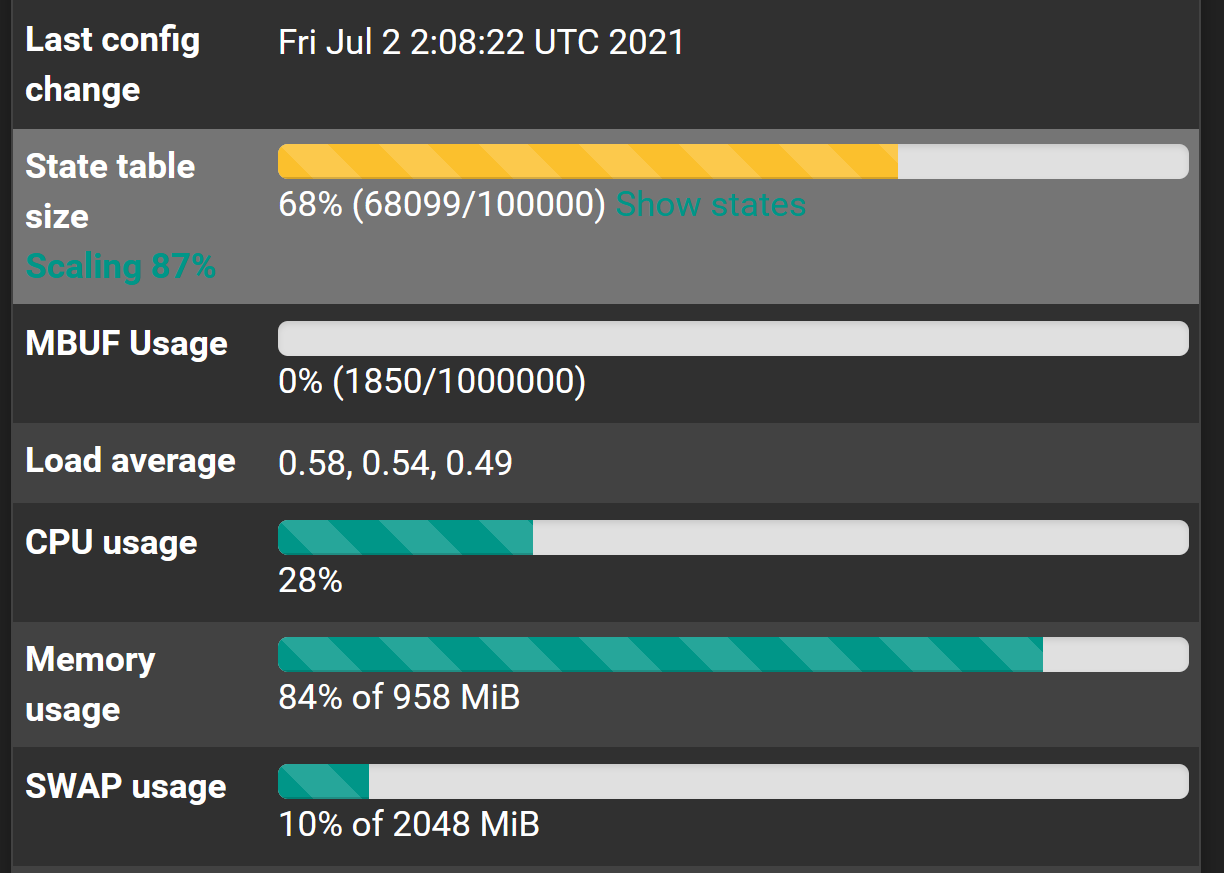

.Turned on my magic traffic faucet and hit a tiny VPS with it:

At first glance it seems we are back to previous levels of performance. Strange memory leak is gone (no SWAP is being used)

I will go overboard and send ridiculous traffic over the weekend to make it scale states and put it through hell. Will report back.

-

Zero packets dropped, no panic yet even in the face of wanton abuse :)

-

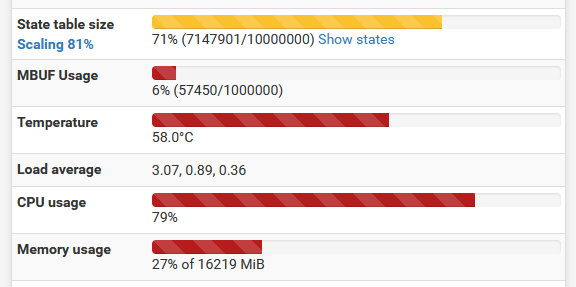

You call that wanton abuse? :-)

(Not on my equipment, but from our test lab)