Configuring pfSense/netmap for Suricata Inline IPS mode on em/igb interfaces

-

The following instructions apply to cards using the igb or em drivers. If your interface is not named eg "em0" or "igb0" or similar, these instructions may be of limited use to you.

You can get output requested below from ssh, console access, or in the pfSense ui under Diagnostics->Command Prompt

All example commands will show "igb0" -- substitute your netmap interface eg "igb4" or "em1" where necessary.

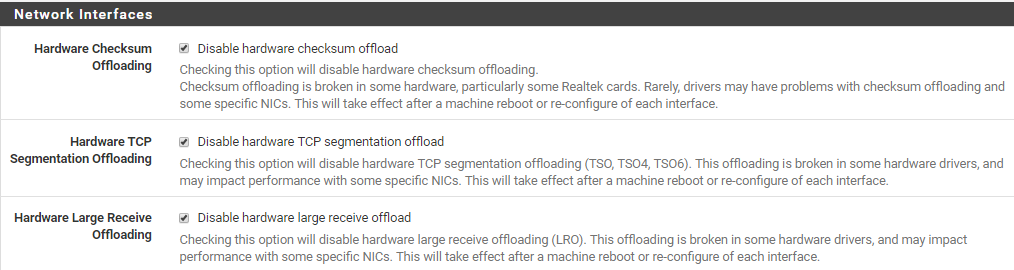

1. Disable all hardware offloading in the ui (System / Advanced / Networking)

Notice: you must reboot.2. Verify that all hardware offloading is disabled (also note your mtu) by running

ifconfig igb0Partial example output:

igb0: flags=28943<UP,BROADCAST,RUNNING,PROMISC,SIMPLEX,MULTICAST,PPROMISC> metric 0 mtu 1500 options=1000b8<VLAN_MTU,VLAN_HWTAGGING,JUMBO_MTU,VLAN_HWCSUM,NETMAP>Options which should not appear in the output:

TXCSUM RXCSUM TSO4 TSO6 LRO TXCSUM6 RXCSUM6If you have all hardware offloading disabled in the ui (System / Advanced / Networking), but you still any of those options in the output, then you will need to make manual adjustments to your config.xml. Backup your configuration first.

You will need to add the following to your config.xml in the <system> node (see the link above for details, and remember to substitute your interface name):

<shellcmd>ifconfig igb0 -txcsum -rxcsum -tso4 -tso6 -lro -txcsum6 -rxcsum6 -vlanhwtso</shellcmd>3. Add System Tunables

Add the following system tunables in System / Advanced / System Tunables:3a. disable all flow control by adding dev.igb.0.fc with a value:0 -- netmap is most performant with flow control disabled (if your interface is em1 then this would be dev.em.1.fc = 0, etc)

3b. dev.netmap.buf_size

The value of this tunable will depend on your mtu. If your mtu is set to the default of 1500, then the default buf_size of 2048 is likely sufficient. If your MTU is larger, then dev.netmap.buf_size needs to be at least as large as your MTU. Changing this value effectively increases the amount of RAM reserved for netmap. If you need to increase this do it in increments or make sure you have plenty of RAM (the value is multiplied by other settings).

Troubleshooting Errors

"bad pkt" errors If

cat /var/log/system.log | grep netmapshows errors similar to the one below, then netmap is trying to process a packet larger than the buffers we allocated with dev.netmap.buf_size:Dec 6 23:25:38 hostname kernel: 338.512666 [1071] netmap_grab_packets bad pkt at 1054 len 4939The final number represents the size of the packet. In this example the packet is 4939 bytes long, over twice the default buf_size of 2048. This packet is considered mal-formed and is dropped as a result. The message is an indication that a packet was dropped. This is not the end of the world and is only an issue if the error is filling your system.log -- packets have been dropping silently since you started using pfSense. Periodically there are failures in the negotiation of packet size and MTUs see this link for more information. You can raise dev.netmap.buf_size to reduce these errors at the expense of memory (and perhaps additional CPU usage - see section 5.2 of this paper for additional information -- note they are using 12MB of cache with a 40gpbs connection, this is unlikely to effect you).

If the number at the end of the error is quite small, that is an indication that netmap ran into an invalid ethernet packet that was too short to hold the basic required information. If you're seeing many of these, that could be a sign of an incompatible driver.

Dec 7 23:45:05 kernel: 105.845217 [2925] netmap_transmit igb0 full hwcur 210 hwtail 44 qlen 165 len 66 m 0xfffff8010d4a2d00If

cat /var/log/system.log | grep netmapshows errors similar to the one above, then netmap is trying to put a packet into a buffer that is already full. The error is indicating that the packet has been dropped as a result. As with the "bad pkt" error, this is not the end of the world if it happens periodically. However, if you see this filling your log, then it's an indication that Suricata (or whatever application is using netmap) cannot process the packets fast enough. In other words: you need to get a faster CPU or disable some of your rules. You can buy yourself a tiny bit of burst by modifying buffers, but all you are doing is buying a few seconds of breathing room if you are saturating your link.Tips

On i5/i7 chips you may consider compiling a version of Hyperscan that supports avx2. That process is beyond the scope here, but it improves Suricata processing speed.On i7s you probably want to disable hyperthreading if you are going for maximum transmission speeds (search for hyperthreading at the link - there is a tradeoff here for heavy Suricata use).

Asking for help? Provide the following:

If you run into an issue not discussed above and would like help, please provide the output from the following commands (excluding any sensitive IPs/hostnames, and remembering to substitute your interface(s) where I have igb0):ifconfig igb0 sysctl -a | grep netmap sysctl -a | grep msi sysctl -a | grep igb sysctl -a | grep rss cat /var/log/system.log | grep netmap cat /var/log/system.log | grep sig cat /var/log/suricata/suricata_*/suricata.log | grep -m 1 "signatures processed"Please also provide your cpu type/model, total RAM, and average available RAM.

FAQ

Why can Suricata process my rules in Legacy mode but not in netmap mode? See this post.

How can I reduce CPU usage or increase Suricata throughput?

-

Start by disabling unnecessary rules as the Emerging Threats Users Guide suggests. There's little point in running content matching for traffic that will never pass your firewall because it has no place to go.

-

Set "encrypt-handling" to "bypass" in the suricata.yaml. This may be possible using the "Advanced Configuration Pass-Through" option in the ui. Suricata still inspects the encryption handshake, but ignores all encrypted traffic after the handshake as it cannot be analyzed.

-

Create bypass rules that exclude known traffic hogs that are unlikely to be threats (eg Netflix, Spotify, Youtube, etc)

-

Enable local bypass (under "stream" in suricata.yaml -- research this before enabling so you understand the risks).

-

If your cpu is being under used but traffic is bottlenecked, check out these sections of the manual: Runmodes and Threading -- Suricata ships with autofp mode enabled by default, but the developers suggest "workers" mode for increased performance (they ship with autofp enabled for packet capture usage).

Further Reading

Slide presentation by the creator of netmap

A general talk on high-speed packet inspection in linux-style operating systems - short bit on netmap

PF_RING FT is a potential future alternative

DPDK is another potential future alternative

https://blog.cloudflare.com/kernel-bypass/ -

-

@boobletins said in Configuring pfSense/netmap for Suricata Inline IPS mode on em/igb interfaces:

- Verify that all hardware offloading is disabled (also note your mtu) by running ifconfig igb0

see https://redmine.pfsense.org/issues/10836

-

S SteveITS referenced this topic on

-

S SteveITS referenced this topic on

-

P pfsjap referenced this topic on

-

G giyahban referenced this topic on

-

B bmeeks referenced this topic on

B bmeeks referenced this topic on

-

in relation to verifying the h/w offloading.

is it necessary to have on all interfaces, or just the ones assigned to suricata?i.e. I have 8 interfaces with only 4 assigned to suricata (2 are a lagg interface).

-

the primary wan is igb0.1055 (pppoe), the igb0 is not assigned and shows

TXCSUM RXCSUM TSO4 TSO6 LRO TXCSUM6 RXCSUM6

but secondary wan is igb1 eth does not have. -

igb2 ip assigned not used - has

TXCSUM RXCSUM TSO4 TSO6 LRO TXCSUM6 RXCSUM6

igb7 ip assigned but not used - does not have

TXCSUM RXCSUM TSO4 TSO6 LRO TXCSUM6 RXCSUM6 -

-

@gwaitsi said in Configuring pfSense/netmap for Suricata Inline IPS mode on em/igb interfaces:

is it necessary to have on all interfaces, or just the ones assigned to suricata?

Just the ones assigned to Suricata. But the general consensus these days with the super fast multicore CPUs most users have is that you should just disable all hardware offloading on all interfaces. It was helpful back in the days of Pentiums struggling to achieve line rate packet processing, but today's multicore CPUs generally don't break a sweat until you get to the 10 Gig area. There are several things (including some third-party packages) that can be negatively impacted by hardware offloading.