AWS VPN + FRR BGP Routing Issue

-

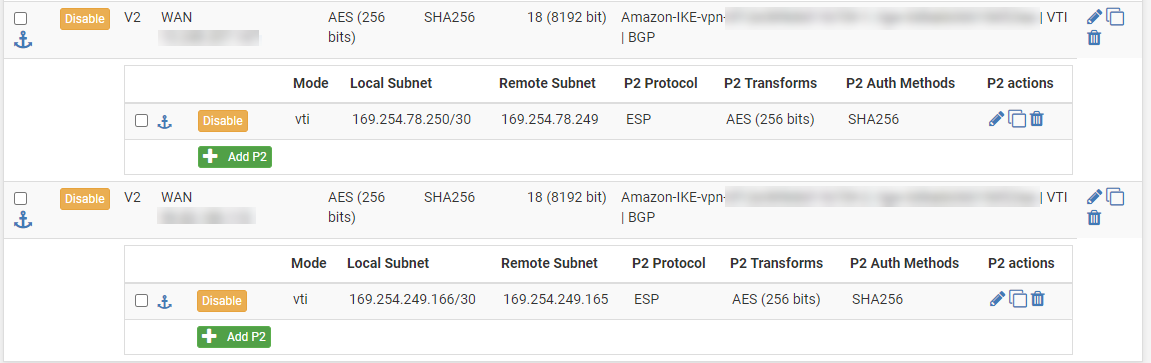

I have an AWS VPN set up with both tunnels established using VTI, and I am using FRR for BGP.

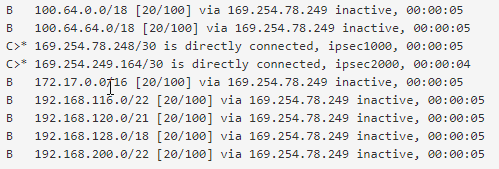

The issue is that when one of the IPsec tunnels changes state (goes down or comes up), all of the BGP routes go inactive. I have to restart the "FRR BGP routing daemon" service to make them active again. I should also mention that the inactive routes disappear from the Diagnostics -> Routes page.

Also, BGP is running on both VTI interfaces, but the second VTI interface is never used to route traffic, even when the first tunnel is down. In fact, the second VTI interface never shows up in the Zebra Routes.

I have spent several days researching and trying to fix this; thank you for any assistance you can provide.

Here is a screenshot from pfSense:

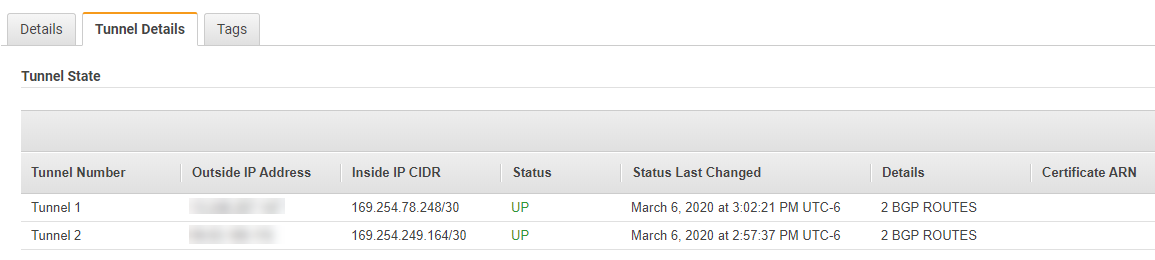

Here is a screenshot from AWS showing that the tunnel is up and BGP is running:

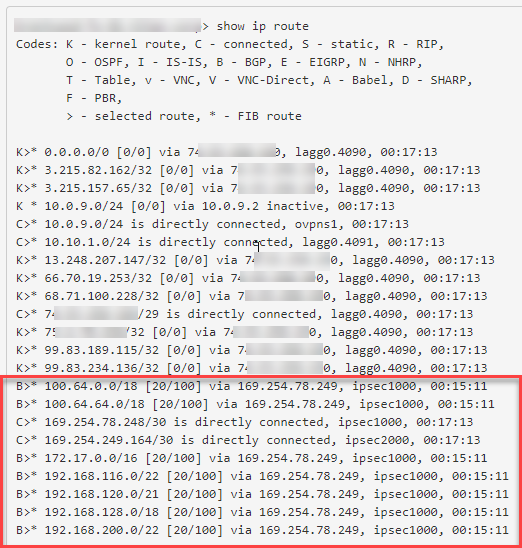

When everything is working (meaning I can reach IPs on the other end of the VPN), here is what the FRR Zebra Routes look like; the items in the red box seem to be the important ones:

When everything is NOT working, here is what the FRR Zebra Routes look like (in this case the IPsec tunnel tied to ipsec1000 was disconnected for several minutes and then reconnected for several minutes, but the routes remained inactive):

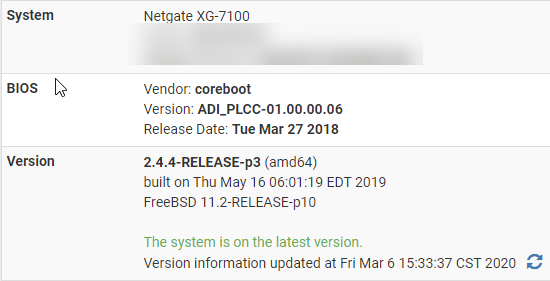

Version Info:

-

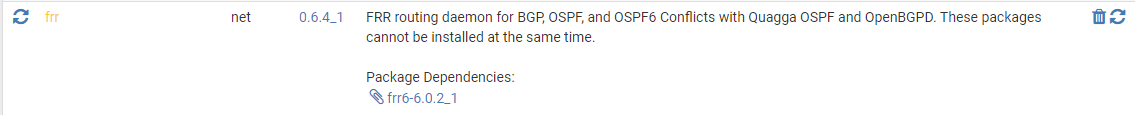

Why does FRR need an update? If you are having problems, why not get to the latest code level?

See Also: https://redmine.pfsense.org/issues/9668

-

I missed that the package was out of date (0.6.4_1 -> 0.6.4_2). I had originally installed it at the end of last week, so I was not looking for updates. In any case, I have now updated the package and restarted; however, the issue still exists, but it is now a bit different. See the sequence of events below; I was able to reproduce it multiple times.

Start ping to remote host.

- ipsec1000 and ipsec2000: VPN both UP
- Zebra Routes indicate traffic routed to ipsec1000
- Pings good

Test: Disconnect the VPN connected to the interface ipsec1000

- 5 pings fail
- Zebra Routes indicate traffic routed to ipsec2000
- Pings good
- Result: This is good! This was not happening prior to updating FRR to 0.6.4_2.

Test: Reconnect the VPN connected to the interface ipsec1000

- Zebra Routes continue to indicate traffic routed to ipsec2000
- Pings good
- Result: As expected.

Test: Disconnect the VPN connected to the interface ipsec2000

- 5 pings fail
- Zebra Routes indicate traffic routed to ipsec1000
- 2 pings succeed (I was hopeful at this point that the issue was resolved!)
- Pings start failing
- Zebra Routes indicate the BGP routes are inactive, and they remain this way.

Another thing I noticed is that the IPsec tunnel tied to ipsec2000 came back up pretty quickly on its own after I manually disconnected it. This only happens with this one tunnel. If I disconnect the one tied to ipsec1000, it seems to stay disconnected until I manually connect it.

I restarted FRR BGP and tried again: same steps, same result.

-

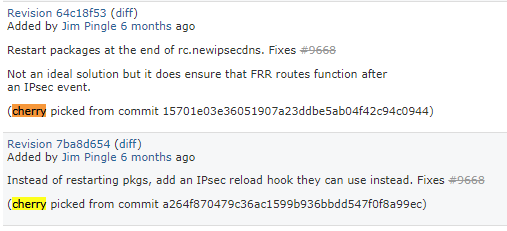

Use the cherry-picked patches from the Redmine issue in the System Patches package. That fix won't be published until 2.4.5 is released.

-

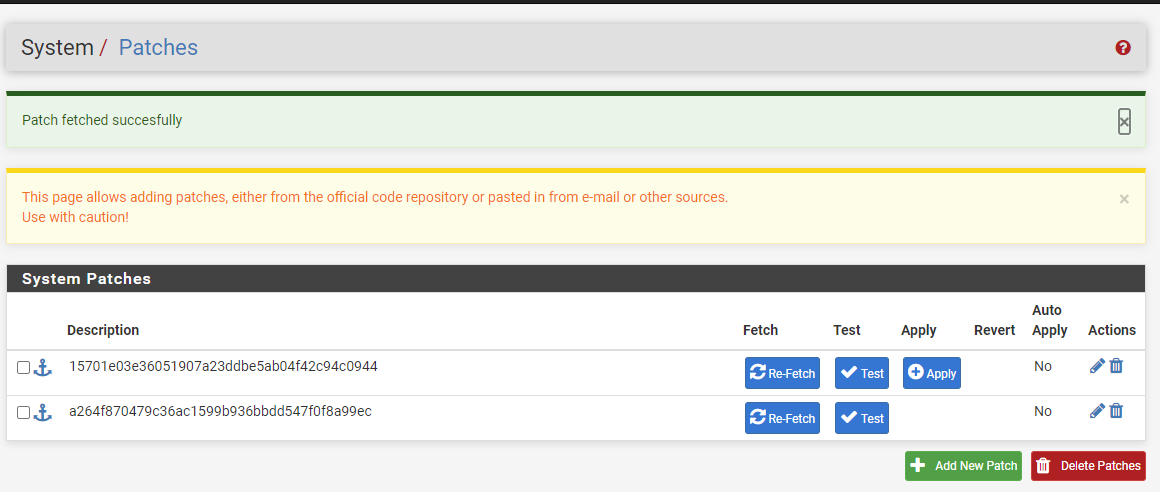

Just to confirm, I need to apply these 2 cherry-picked commits in the order listed?

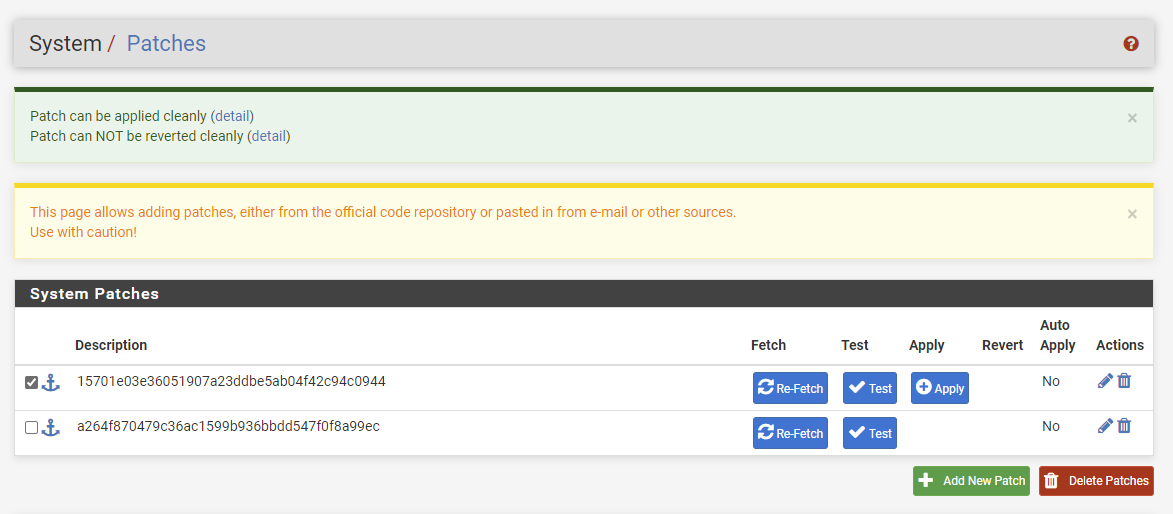

And I need to apply them using this UI?

I have never used the Patches package before, so thank you for your help on this.

-

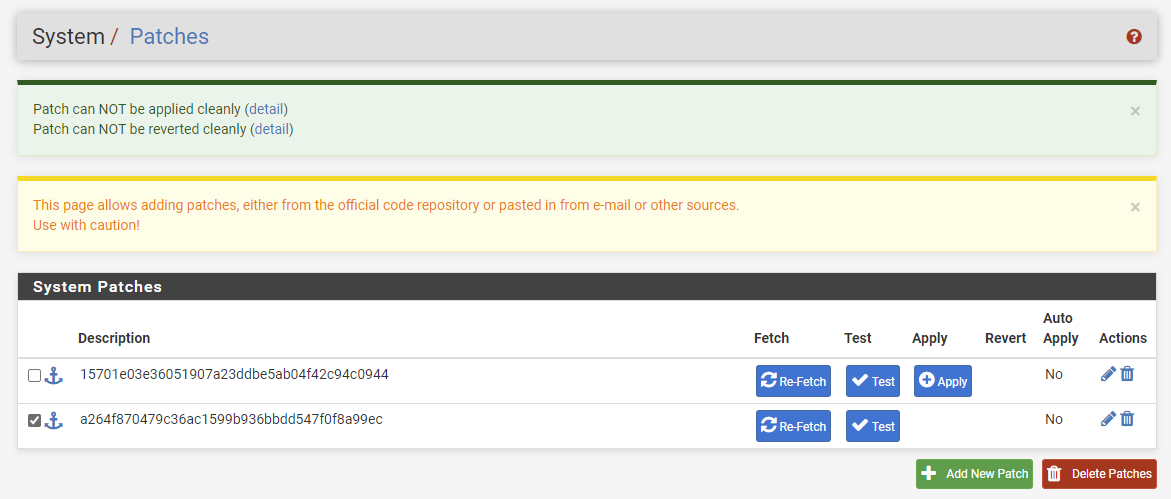

Do they test OK?

They should say they can be applied cleanly and cannot be removed cleanly. Those states should switch after they are applied.
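Those two states mirror each other because a forward check asks whether the patch's original lines are still in the file, while a reverse check asks whether its replacement lines are there. A minimal Python model of that idea, assuming a single hunk and ignoring the offsets and whitespace fuzz that the real `patch` tool tolerates (`Hunk`, `can_apply`, `can_revert` and `apply_hunk` are hypothetical names, not part of the System Patches package):

```python
from dataclasses import dataclass


@dataclass
class Hunk:
    """One hunk of a unified diff (simplified: no offsets, no fuzz)."""
    old: list[str]  # context + removed lines, as the file looks before patching
    new: list[str]  # context + added lines, as the file looks after patching


def _find(lines: list[str], block: list[str]) -> int:
    """Index of the first contiguous match of `block` in `lines`, or -1."""
    n = len(block)
    for i in range(len(lines) - n + 1):
        if lines[i:i + n] == block:
            return i
    return -1


def can_apply(lines: list[str], hunk: Hunk) -> bool:
    # Forward check: the unpatched text must still be present.
    return _find(lines, hunk.old) != -1


def can_revert(lines: list[str], hunk: Hunk) -> bool:
    # Reverse check: the patched text must be present.
    return _find(lines, hunk.new) != -1


def apply_hunk(lines: list[str], hunk: Hunk) -> list[str]:
    """Return a copy of `lines` with the first match of hunk.old replaced."""
    i = _find(lines, hunk.old)
    if i == -1:
        raise ValueError("hunk does not apply")
    return lines[:i] + hunk.new + lines[i + len(hunk.old):]


if __name__ == "__main__":
    hunk = Hunk(old=["a", "b", "c"], new=["a", "B", "c"])
    before = ["x", "a", "b", "c", "y"]
    print(can_apply(before, hunk), can_revert(before, hunk))  # True False
    after = apply_hunk(before, hunk)
    print(can_apply(after, hunk), can_revert(after, hunk))  # False True
```

The real tool is more forgiving than this model: as the check output later in this thread shows, a hunk can still succeed at a shifted position ("offset -6 lines"), so treat the sketch only as an illustration of why the two states flip.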

-

The first one does:

The second one does not:

Here is the detail of the second one:

```
/usr/bin/patch --directory=/ -t -p2 -i /var/patches/5e66c2fd04a95.patch --check --forward --ignore-whitespace

Hmm... Looks like a unified diff to me...
The text leading up to this was:
|From a264f870479c36ac1599b936bbdd547f0f8a99ec Mon Sep 17 00:00:00 2001
|From: jim-p
|Date: Mon, 5 Aug 2019 12:39:14 -0400
|Subject: [PATCH] Instead of restarting pkgs, add an IPsec reload hook they can
| use instead. Fixes #9668
|
|---
| src/etc/inc/ipsec.inc | 26 ++++++++++++++++++++++++++
| src/etc/rc.newipsecdns | 2 +-
| 2 files changed, 27 insertions(+), 1 deletion(-)
|
|diff --git a/src/etc/inc/ipsec.inc b/src/etc/inc/ipsec.inc
|index dfd66f85435..6cc42d48f67 100644
|--- a/src/etc/inc/ipsec.inc
|+++ b/src/etc/inc/ipsec.inc
Patching file etc/inc/ipsec.inc using Plan A...
No such line 902 in input file, ignoring
Hunk #1 succeeded at 897 (offset -6 lines).
Hmm... The next patch looks like a unified diff to me...
The text leading up to this was:
|diff --git a/src/etc/rc.newipsecdns b/src/etc/rc.newipsecdns
|index 1647aa30123..c360f667c7d 100755
|--- a/src/etc/rc.newipsecdns
|+++ b/src/etc/rc.newipsecdns
Patching file etc/rc.newipsecdns using Plan A...
No such line 69 in input file, ignoring
Hunk #1 failed at 69.
1 out of 1 hunks failed while patching etc/rc.newipsecdns
done
```

-

The first might be dependent on the second. It's been a while since I applied them.

Apply the first, then test the second. If it looks good, apply it. If not, revert the first.
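That order of operations amounts to applying a chain of dependent patches with rollback, generalized here to any number of patches. A minimal Python sketch, assuming hypothetical `check`, `apply` and `revert` callables that stand in for the test, apply and revert steps (they are placeholders for illustration, not a real pfSense API):

```python
from typing import Callable, Sequence


def apply_in_order(
    patches: Sequence[str],
    check: Callable[[str], bool],
    apply: Callable[[str], None],
    revert: Callable[[str], None],
) -> bool:
    """Apply dependent patches in order. If one fails its check, revert the
    ones already applied (newest first) and return False."""
    applied: list[str] = []
    for patch in patches:
        if not check(patch):
            for done in reversed(applied):
                revert(done)  # undo in reverse order of application
            return False
        apply(patch)
        applied.append(patch)
    return True


if __name__ == "__main__":
    # Fake system state: "second" only checks clean once "first" is applied.
    state: set[str] = set()

    def check(p: str) -> bool:
        return p == "first" or "first" in state

    ok = apply_in_order(["first", "second"], check, state.add, state.discard)
    print(ok, sorted(state))  # True ['first', 'second']
```

Checking each patch only after its predecessors are in place is what lets a dependent patch pass a check it would fail on its own, and reverting newest first leaves the system as it was if any step fails.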

-

I applied the first patch and then the second; no issues there. Repeating the steps above, initial tests seem positive. I will let it bake for a few days and report back on its status. It seems the AWS tunnels drop and reconnect one at a time, in sequence, once or twice a day. As long as no one texts me about connectivity, it will be a success. :)