2.4.5 High latency and packet loss, not in a vm

-

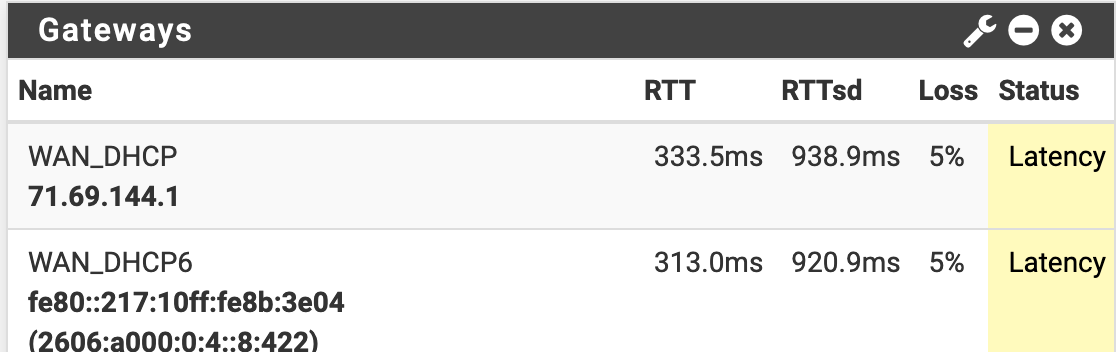

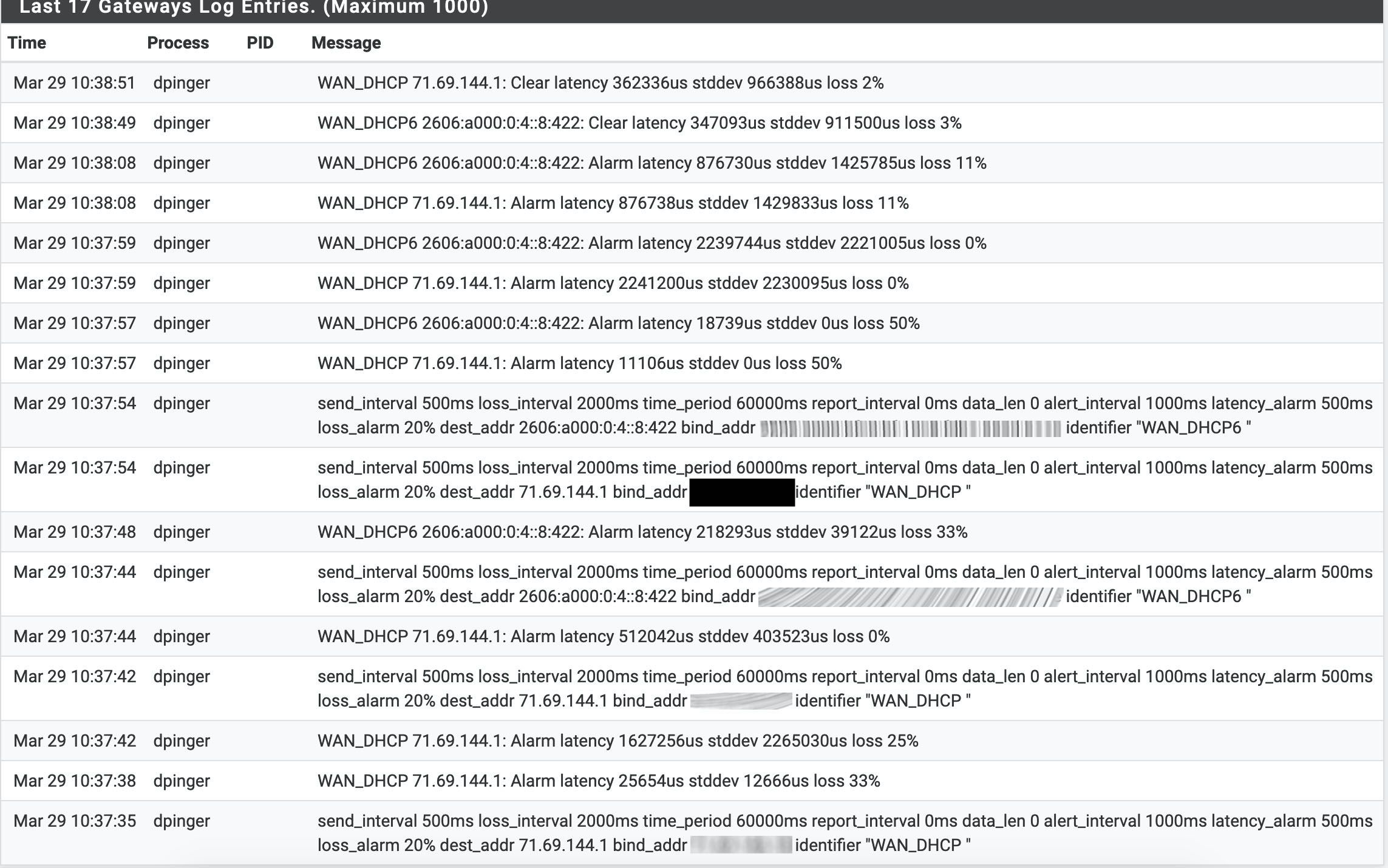

OK, so to confirm: the presence of the large table(s), irrespective of the max table size value, triggers the latency/packet loss/CPU usage?

And removing the table completely eliminates it?

Steve

-

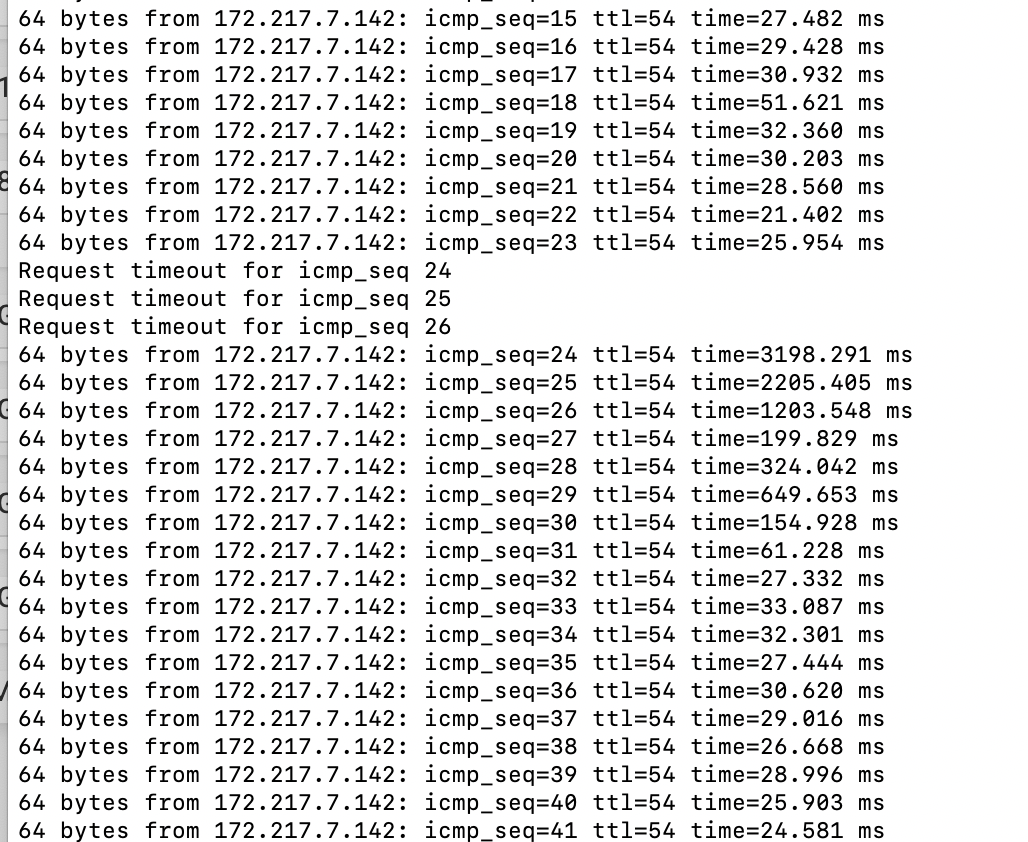

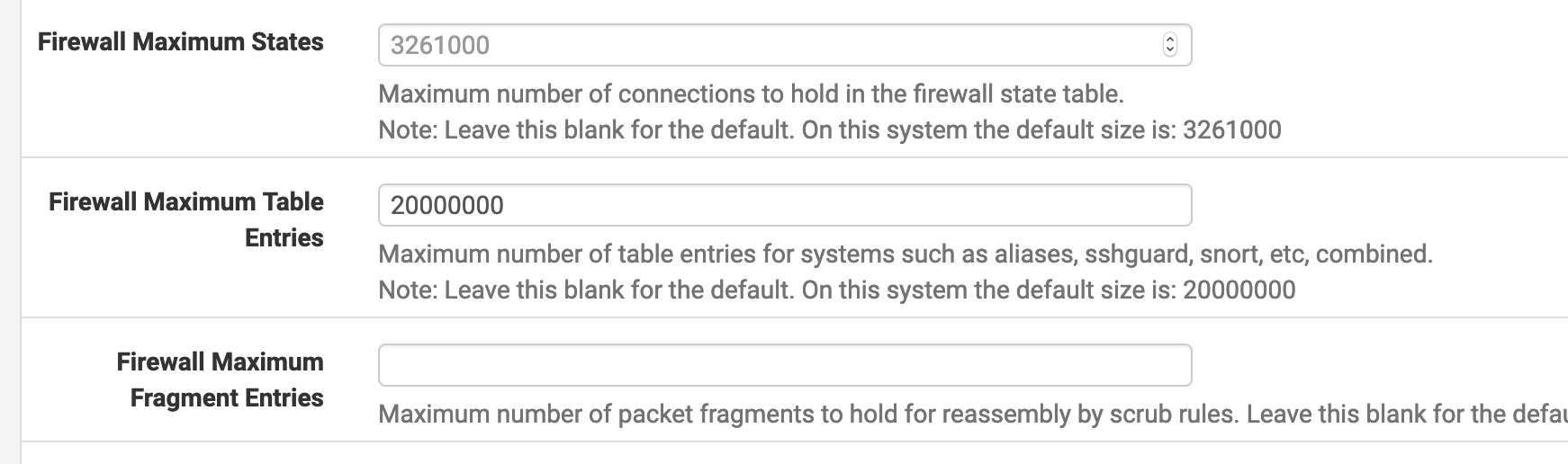

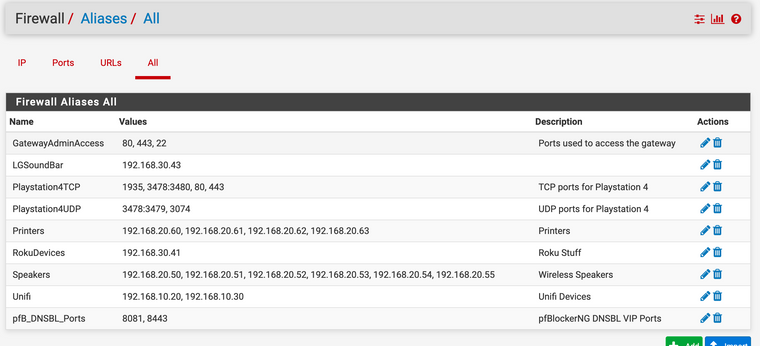

Total table size is limited by max table size. If I set max tables to some arbitrarily large number, say 20000000, but only have a few small tables (no bogonsv6, no IP block lists), things are fine, meaning the symptoms of the problem are not noticeable. I have done that.

Obviously the opposite cannot be configured: large tables with a small max tables value.

I'll demonstrate some time later today; I have things to do right now.

-

OK, only took a moment.

Set max tables to 20000000.

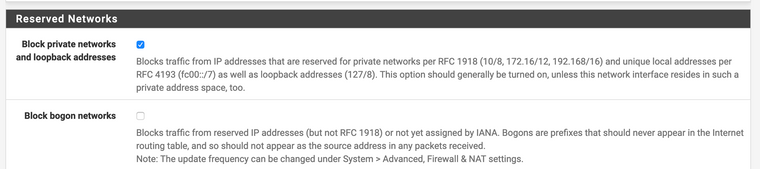

Turned off block bogons.

Disabled pfblocker.

Rebooted.

Reboot was fast, 2.4.4-p3 fast.

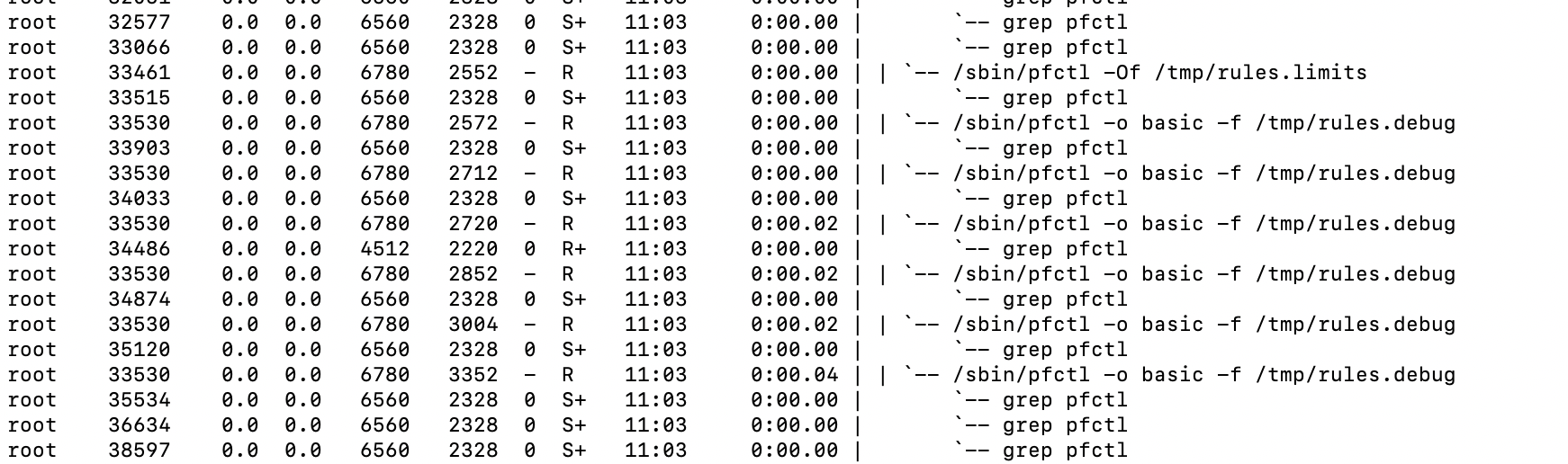

Ran `ps auxdww | grep` in a `while 1` loop.

Reloaded the filters (Status->Filter Reload).

No lag, no latency, didn't notice it in any way.

Didn't even see pfctl pop up when running top. Must have happened in between refreshes.

Conclude what you will from this. The evidence shows max tables limits total table size (what it is supposed to do), but the total number of table entries is what causes the symptoms of the issue (cause currently unknown, maybe some regression in pf) to become obvious.
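For anyone who wants to compare their own numbers, here is a minimal sketch of the bookkeeping described above: read the table-entries hard limit and compare it with the combined entry count of all tables. It assumes the limits text follows the layout that `pfctl -sm` prints; the sample values, the per-table counts, and the helper names (`table_entry_limit`, `summarize`) are hypothetical, chosen only for illustration.

```python
import re
from typing import Dict, Optional

# Illustrative text in the layout `pfctl -sm` is assumed to print. The values
# are made up, except table-entries, which mirrors the 20000000 set above.
SAMPLE_LIMITS = """\
states        hard limit   400000
src-nodes     hard limit   400000
frags         hard limit     5000
table-entries hard limit 20000000
"""


def table_entry_limit(limits_text: str) -> Optional[int]:
    """Return the table-entries hard limit, or None if the line is absent."""
    match = re.search(
        r"^table-entries\s+hard limit\s+(\d+)\s*$", limits_text, re.MULTILINE
    )
    return int(match.group(1)) if match else None


def summarize(limit: int, entries_per_table: Dict[str, int]) -> Dict[str, int]:
    """Compare the combined entry count of all tables with the limit."""
    total = sum(entries_per_table.values())
    return {"total": total, "limit": limit, "headroom": limit - total}


if __name__ == "__main__":
    # Hypothetical per-table entry counts; on a real system they could be
    # gathered with `pfctl -t <table> -T show | wc -l` for each table.
    tables = {"bogonsv6": 110000, "pfB_example": 250000, "sshguard": 12}
    limit = table_entry_limit(SAMPLE_LIMITS)
    if limit is not None:
        print(summarize(limit, tables))
```

This only reports how far the total is from the limit. It says nothing about why a large total causes the latency, which is still the open question in this thread.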

-

So, I'm either going to go back to 2.4.4-p3 or move to another solution (I have an ISR I could drag out of the closet). I want to go back to the set-and-forget setup I have enjoyed with pfSense for a while now.

The question that I feel needs to be answered by the FreeBSD team is this:

Why was that hard limit implemented? I would assume there was some observed reason for rewriting that with a hard limit.

-

Has anyone managed to find a permanent solution to the problem where pfblocker and bogons can be enabled without latency or loss?

-

@mikekoke Not that I can see.

There is a bug in Redmine that has exactly one update from Netgate: they can't reproduce it in their testing environment. We are past the question of whether it is a bug. It is. It sure looks like a bug that would require upstream (FreeBSD) participation to resolve.

The question is, do they even bother fixing it?

You could say:

- Use 2.4.5 if you do not have a large number of total items in tables.

- Stay on 2.4.4-p3 if you have a large number of total table items.

2.4.4-p3 remains a viable release. Accommodations made to set repositories to the 2.4.4 versions make it a reasonable option.

Put all the effort into 2.5, knowing that both current options are safe and secure, or divert resources to fixing 2.4.5? FreeBSD 11.3 is not EOL but it is also not a target for ongoing development. Will FreeBSD put resources into this bug?

I don't know the answers to those questions. I'm not going to offer an opinion one way or the other. I do think Netgate should put out a statement setting out their position for the short term. 2.5 is the long term resolution.

-

@jwj said in 2.4.5 High latency and packet loss, not in a vm:

Accommodations made to set repositories to the 2.4.4 versions make it a reasonable option.

Does that repo/branch choice also affect packages update/installation?

-

Yeah, there are two drop down menu choices under System->Update->System Update and System->Update->Update Settings.

The base OS/pfSense and the package repo should be correct. As always, back up your configuration, make a snapshot if you're in a virtual env, and have a plan to recover if you end up FUBAR.

It is too bad the download link for 2.4.4-p3 has not been restored. You can open a ticket and ask (nicely :) for one even if you do not own Netgate HW or have a support contract.

-

@jwj said in 2.4.5 High latency and packet loss, not in a vm:

System->Update->Update Settings.

Thanks. I got around to testing and this affects what package updates are detected, e.g. Suricata 4.1.7 vs 5.x. So that's good to know. Would be handy if they left the previous version there all the time (and/or had a warning on the package page if you're checking the wrong repo for your version) but nice it's there now.

-

So any updates on this issue? I've been checking in here regularly. Three days have elapsed since the last post in this thread. My apologies if I have missed something, but are there any solid mitigations or upcoming updates to address this?

-

@Yamabushi said in 2.4.5 High latency and packet loss, not in a vm:

So any updates on this issue? I've been checking in here regularly. Three days have elapsed since the last post in this thread. My apologies if I have missed something, but are there any solid mitigations or upcoming updates to address this?

No, the root cause is still unknown. Netgate cannot reproduce this issue which means the test conditions are different to the affected systems.

At the moment all my systems are back on 2.4.4-p3. I wiped the disks with dd and reinstalled the system from scratch. After the basic installation I set the repository to the previous version to avoid installing packages from the 2.4.5 release.

Additionally I switched to ZFS.

After that I restored the backup, which does not contain any package information, and then manually installed the needed packages.

Now all systems are back to normal working condition.

I wanted to run some more tests on spare hardware (an original Netgate system) to get an idea of what the root cause is. But these are strange times and not everything is running as expected, which means that I did not find a time slot for that... I assume that I'm not alone...

-

Thank you for your prompt and detailed response! I guess I will have to continue to wait and see what happens. Thank you, again!

-

If any of you have a test system that is hitting this and you can allow us to access it please open a ticket so we can set something up: https://go.netgate.com/

I've tried all sorts of things here to replicate it and it just stubbornly behaves perfectly.

Steve

-

@stephenw10

Done!

-

@stephenw10 Ticket submitted. As per Murphy's law, my power is out at the moment.

-

Thanks guys. Hopefully we can get some data there.

Steve

-

I was doing some thinking about this issue last night at 3am.

I know I hit it (on a VM) and I was thinking, "What have I changed from the defaults that maybe some other users have also?" and I figured maybe

`net.isr.dispatch = deferred`

I know I set that to try and get a PPPoE performance increase. Have others who are hitting this bug set that too?

-

No, `net.isr.dispatch = deferred` does not appear to be common to systems hitting this. Good thought though.

Steve

-

Hmmm, maybe someone with a test system hitting this issue could share their config.xml so we can try with swarm intelligence?

-Rico

-

@Rico Already shared config and other information with Netgate. @stephenw10 has been immensely helpful coordinating that.