Bufferbloat - Load balancing VPN gateway group

-

Hi,

I'm dealing with bufferbloat on a gateway group with multiple VPN connections.

The setup has 4 VPN connections in a gateway group and one WAN to which they are directed.

I'm getting massive latency when I start uploads via the gateway group. The gateways even show as offline, with latency of 500 ms or more. I'm currently using CoDel with a queue on WAN, as explained here: https://www.youtube.com/watch?v=iXqExAALzR8&

This works very well. I'm currently bypassing the gateway group for cloud backup, and latency does not suffer at all, even when maxing out upload at 95%. I am wondering where the bottleneck for this group lies. Is it in pfSense? It doesn't seem to be aware of the combined throughput of the lines.

I'm too much in the dark to do a proper analysis. I tried combining the interfaces in one limiter and playing with the queues and queue sizes, but didn't find an answer. My questions are:

1. Where exactly is this traffic accumulating?

2. How can I relieve the stress?

-

Video of test: https://youtu.be/nyok4vAr5n4 (Akismet is blocking post changes)

My total line is 100d/10u Mbps. For this test I set the CoDel limit on WAN to 80/8.

-

@discy You would probably need to choose NORDVPN under Interface in your floating rule. Just out of curiosity, what have you set under Advanced > Gateway in your floating rule?

-

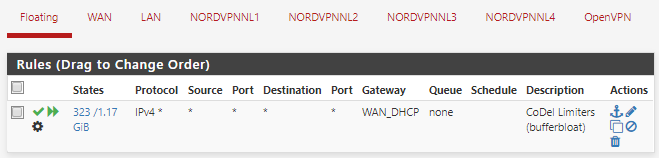

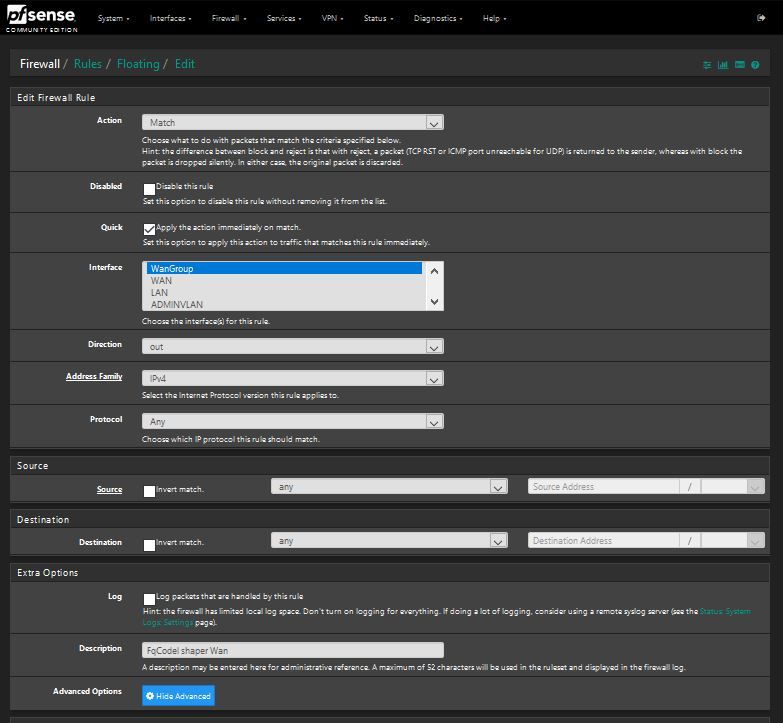

@bobbenheim The floating rule with CoDel limiter is currently on WAN.

I tried a couple of combinations:

- Putting the rule on the OpenVPN gateway group and selecting all NordVPN interfaces

- Sharing the same queue with a rule per interface/gateway

- Selecting multiple interfaces and leaving the gateway on WAN

I hope to gather some knowledgeable advice on what it should be, instead of randomly going through all possible combinations.

-

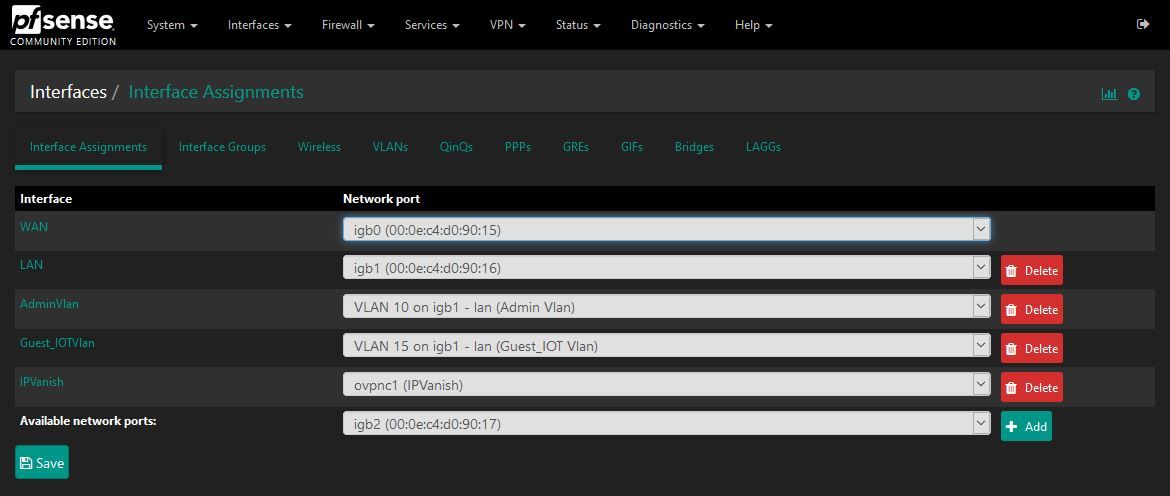

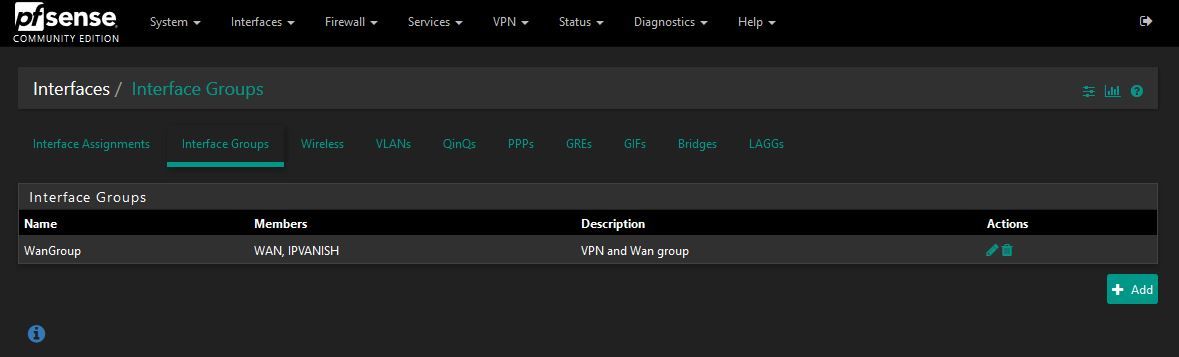

@discy Create an interface group in Interfaces > Assignments > Interface Groups.

Select the interface group in the floating rule (I'm not sure whether it suffices to mark your interfaces in your gateway group, but you can experiment with that) and set "NordVPNGatewayGroup" as Gateway.

-

Fixed it on VPN Load.

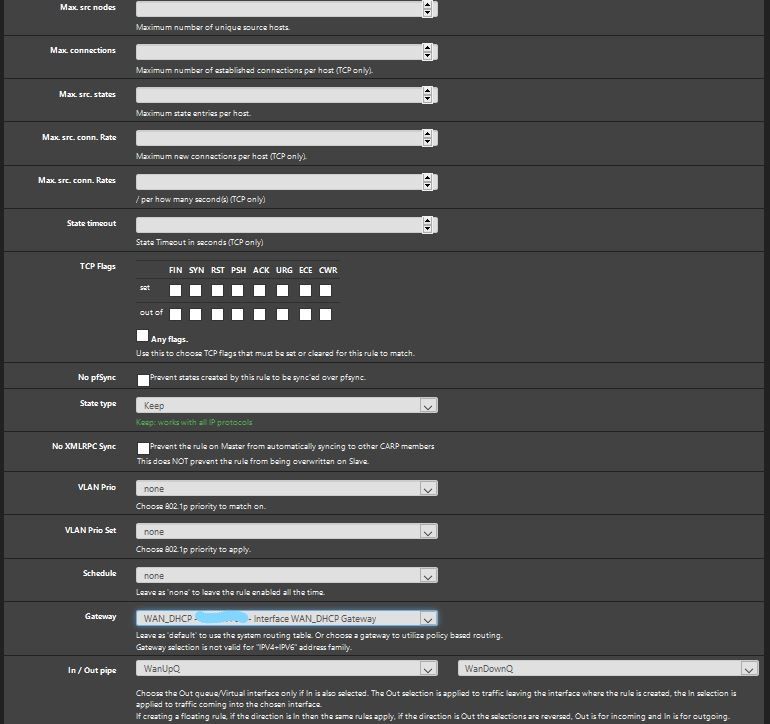

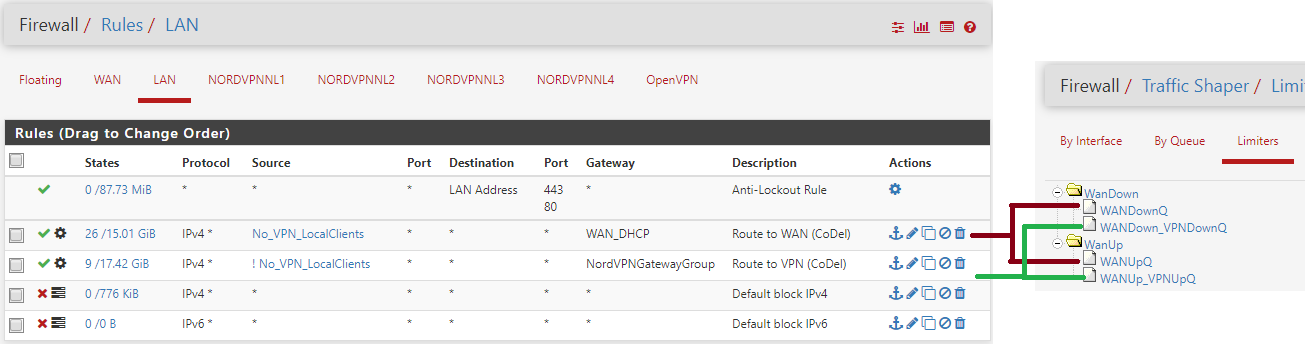

@bobbenheim You were correct that the rule should be on the VPN group. This is what I did:

ISP Mbps: 100d/10u

WAN CoDel limiter set to 90d/9u

- Speedtest via VPN. Result: 80d/7u - bufferbloat grade: D, plus download throttling

- Added 2 limiters + queues just like on WAN (75d/6u after tweaking) - https://www.youtube.com/watch?v=iXqExAALzR8

- Added the limiter queues to the rule on LAN for LAN net » VPN gateway group

Grade A and no more throttling.

Results: https://youtu.be/-CcAOjj9cpI

pfSense settings:

-

@discy You should use the same limiter for your VPN group as you do for WAN; otherwise you will hit the same bufferbloat problem, because the sum of both limiters exceeds your line speed. I'm not sure whether you need another set of queues, but that would be simple to test. You should also make an interface group and put the limit on that instead of on LAN, as that is what you want to limit.
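To make the arithmetic behind that point concrete, here is a minimal sketch using the figures quoted earlier in the thread (100d/10u line, WAN limiter at 90d/9u, separate VPN limiter at 75d/6u). The function and variable names are purely illustrative and are not part of pfSense.

```python
# Illustrative arithmetic only; nothing here talks to pfSense.
# Rates are in Mbit/s and come from the numbers quoted in this thread.
LINE = {"down": 100, "up": 10}
LIMITERS = {
    "WAN": {"down": 90, "up": 9},   # limiter on WAN
    "VPN": {"down": 75, "up": 6},   # separate limiter for the VPN group
}

def oversubscription(line, limiters):
    """Per direction, how far the summed limiter rates exceed the line rate."""
    excess = {}
    for direction, capacity in line.items():
        total = sum(rates[direction] for rates in limiters.values())
        excess[direction] = max(0, total - capacity)
    return excess

excess = oversubscription(LINE, LIMITERS)
print(excess)  # {'down': 65, 'up': 5}
```

With two independent limiters, the combined traffic can ask for 65 Mbit/s more download and 5 Mbit/s more upload than the line can carry, so the queue can build up at the modem or ISP again instead of inside the limiter.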

-

@bobbenheim Yes - correct. I only tested on VPN load.

The problem persists when I combine WAN and VPN traffic. What exactly do you mean by using an interface group?

I cannot combine both WAN and VPN interfaces in one group and create one limiter rule, as the gateway has to be unique. I've got separate gateways for WAN and VPN. I also noticed these remarks in the Netgate docs:

- https://docs.netgate.com/pfsense/en/latest/book/interfaces/interfacetypes-groups.html#use-with-wan-interfaces

- https://docs.netgate.com/pfsense/en/latest/book/trafficshaper/limiters.html#limiters-and-multi-wan

In addition, I tried:

- Sharing the same queue between WAN and VPN

- Adding VPNUp and Down queues underneath the WAN limiter

- Combinations of individual and group-based rules and queues

- Playing with the weight of the queues

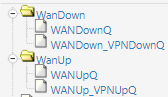

With the current setup, which shares the limiter but uses separate queues:

- WAN/VPN combined-load latency is much better.

- VPN-only throughput is about half of what it was with a separate limiter.

Are we doing the correct thing when combining these queues underneath the same limiter?

VPN traffic exits via WAN. If VPN is at 5 Mbps, WAN will be as well. Total: 10 Mbps.

With a limiter of 10, the connection will never go above 5. Right?
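The reasoning in this post can be sketched as follows. It assumes, as the poster suspects but the thread does not confirm, that each tunneled byte is charged against the shared limiter twice: once on the VPN interface and once more as encapsulated traffic leaving via WAN. The function name is hypothetical.

```python
def effective_payload_rate(limiter_mbit, times_counted):
    """Usable payload rate when every byte is charged `times_counted` times
    against one shared limiter (an assumption, not confirmed pfSense behaviour)."""
    return limiter_mbit / times_counted

# Tunnel traffic counted on both the VPN interface and on WAN:
print(effective_payload_rate(10, 2))  # 5.0
# Plain WAN traffic, counted once:
print(effective_payload_rate(10, 1))  # 10.0
```

Under that assumption a shared 10 Mbit/s limiter would cap VPN-only traffic at about 5 Mbit/s, which would be consistent with the "about half" throughput observed above.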

-

I am having exactly the same issue. I can get CoDel working with either WAN or VPN, but not both at the same time. I'm watching this thread to see what the resolution is. I have created an interface group, as @bobbenheim recommended, that includes both WAN and VPN, then created the floating rule to reflect the group, but there was no change. Funnily enough, my VPN throughput is as expected, but the WAN upload is reduced to 1mb up.

-

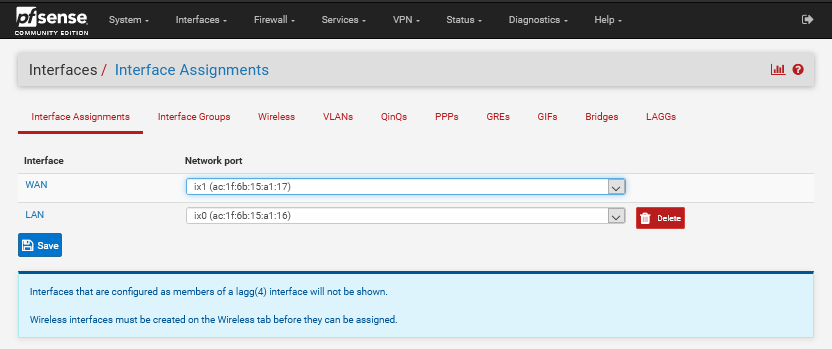

@discy If you go to Interfaces > Assignments you get this.

If the gateway exists on the interface it shouldn't matter, but it is not something I have tested. You want to use the same limiter because, I assume, you only have one internet connection, and that doesn't change when you add a VPN.

-

@AndyW What do your rules look like?

-

I have also added another floating rule with IPVanish as the gateway, but there was no change.

-

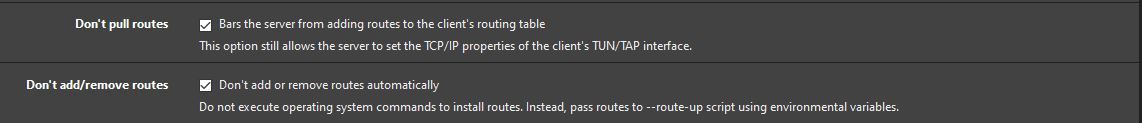

One thing I did notice is that if "Don't pull routes" and "Don't add/remove routes" are checked, the problem goes away. In my case, however, this causes issues with the VPN not always starting correctly.

-

@AndyW One thing that sticks out, and I'm not sure it makes a difference, is that you used "Quick" on a match rule, where it has no effect. Could you try making your floating rules Pass instead? Also, do you have separate queues for your WAN and IPVanish?

-

Hi @bobbenheim, I tried the settings with Pass and "Quick" disabled, but the issue still persists. I also cleared the states to be on the safe side.

As for the floating rules, I have created both WAN and IPVanish rules, but the issue still persists.

-

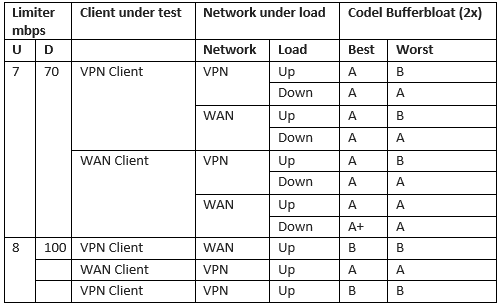

I ended up with the setup below.

It solved my throttling and latency issues under full load (down + up) on VPN and WAN, both combined and separate.

- Individual queue and limiter configuration based on this YouTube guide.

- Download limit: 70%

- Upload: 70%

- Multiple queues configured like this:

Test results

WAN Line: 100d/10u

- Bufferbloat test: http://www.dslreports.com/speedtest

- Network-load upload: https://testmy.net/upload (Manual: 100mb)

- Network-load download: https://speed.hetzner.de/10GB.bin

-

@discy did you manage to solve your issue with VPN interface gateway monitoring with this configuration? As far as I can tell my configuration is the same as yours, but when I saturate VPN client traffic the gateway monitoring for my VPN interfaces spikes and takes them offline. I've spent several hours trying various things but nothing seems to have an effect, so I'm left wondering if I'm just going to need to disable the monitoring action on the VPN interfaces and consider them always online, which is clearly not desirable. Thanks in advance for any advice.

-

Did you try to limit your upload/download speeds some more?

Upload bandwidth needed to perform a download within the tunnel isn't taken into account with these limiters.

It seems we have to find a balance between available bandwidth from LAN that goes through the tunnel and overhead on the tunnel connection.
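A rough way to see why a download inside the tunnel needs upload headroom is to estimate the ACK traffic it generates. The sketch below is a back-of-the-envelope estimate only: the segment size, the one-ACK-per-two-segments ratio, the 40-byte ACK, and especially the 60-byte per-packet tunnel overhead are all assumed values, not measurements from this thread.

```python
def ack_upstream_mbit(download_mbit, segment_bytes=1500, segments_per_ack=2,
                      ack_bytes=40, tunnel_overhead_bytes=60):
    """Estimate the upstream rate (Mbit/s) consumed by TCP ACKs for a download.

    All defaults are assumptions for illustration:
    - segment_bytes: size of each downloaded packet
    - segments_per_ack: delayed ACKs, one ACK per two segments
    - ack_bytes: bare IP + TCP header without options
    - tunnel_overhead_bytes: hypothetical per-packet VPN encapsulation cost
    """
    segments_per_s = download_mbit * 1_000_000 / 8 / segment_bytes
    acks_per_s = segments_per_s / segments_per_ack
    return acks_per_s * (ack_bytes + tunnel_overhead_bytes) * 8 / 1_000_000

# A 70 Mbit/s download through the tunnel, under the assumptions above:
print(round(ack_upstream_mbit(70), 2))  # 2.33
```

Under these assumptions a saturated 70 Mbit/s download would already occupy roughly a quarter of a 10 Mbit/s uplink with ACKs, traffic that a limiter applied to the payload inside the tunnel does not account for in full.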

For me, on a TCP OpenVPN tunnel, this means a 60% upload + 70% download limit within the tunnel itself (set up as above). I added a schedule to the limiter so that the upload backup during the night can use 80%.
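As a worked example of those percentages on the 100d/10u line from this thread, the sketch below computes the limiter rates for day and night. The night window (01:00 to 06:00) is a hypothetical placeholder, since the post only says "during the night", and the night download share is assumed to stay at 70%.

```python
from datetime import time

LINE = {"down": 100, "up": 10}          # Mbit/s, from the thread

DAY_PCT = {"down": 70, "up": 60}        # percentages quoted in the post
NIGHT_PCT = {"down": 70, "up": 80}      # only the 80% upload is from the post
NIGHT_START, NIGHT_END = time(1, 0), time(6, 0)  # hypothetical schedule window

def limits_at(t):
    """Limiter rates in Mbit/s that would apply at wall-clock time `t`."""
    pct = NIGHT_PCT if NIGHT_START <= t < NIGHT_END else DAY_PCT
    return {direction: LINE[direction] * pct[direction] / 100 for direction in LINE}

print(limits_at(time(12, 0)))  # {'down': 70.0, 'up': 6.0}
print(limits_at(time(3, 0)))   # {'down': 70.0, 'up': 8.0}
```

In pfSense itself the schedule is attached to the limiter in the GUI; this snippet only illustrates the resulting numbers.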

-

@discy Thanks for the information. I wasn't sure if I was missing something fundamental, but it sounds like I just need to lower the bandwidth on my limiters more, which I'll try today. Appreciate the response!

-

Well, I don't know. I took my 100/10 connection all the way down to 50/5 and a flent rrul_torrent test on the VPN client was still taking down its gateway monitoring hard. Maybe this makes sense insofar as a VPN client connection is going to have highly variable bandwidth (i.e. an unloaded server may give me a full 100Mbps down, but at another time, when it's near capacity, only give me about 10Mbps). I don't know enough about the subject to determine whether that makes sense.
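The hypothesis in this paragraph can be illustrated with a small sketch: a limiter can only manage the queue if it is the slowest hop on the path, and any backlog that builds elsewhere adds delay equal to its size divided by the drain rate. The function names and the 625 kB backlog are hypothetical values chosen for illustration; this does not establish that variable VPN bandwidth is the actual cause here.

```python
def limiter_is_bottleneck(limiter_mbit, path_mbit):
    """True if the limiter is slower than the rest of the path, so the queue
    forms at the limiter where CoDel can manage it."""
    return limiter_mbit < path_mbit

def queue_delay_ms(backlog_bytes, drain_mbit):
    """Time in milliseconds for a backlog to drain at the given rate."""
    return backlog_bytes * 8 / (drain_mbit * 1_000_000) * 1000

# Limiter at 50 Mbit/s, VPN server currently able to deliver 100 Mbit/s:
print(limiter_is_bottleneck(50, 100))  # True
# Same limiter, but a busy VPN server that only delivers 10 Mbit/s:
print(limiter_is_bottleneck(50, 10))   # False
# Delay added by a hypothetical 625 kB backlog draining at 10 Mbit/s:
print(queue_delay_ms(625_000, 10))     # 500.0
```

In the second case the queue would build at the VPN server or somewhere along the tunnel, outside the reach of the local limiter, however low that limiter is set.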

I did try running rrul_torrent after configuring the gateway monitoring to take no action (always consider the gateway to be up) and got rather odd ping results. Not sure how to interpret them frankly.

| | avg | median | # data pts |
|---|---|---|---|
| Ping (ms) ICMP | 4353.47 | 3882.00 ms | 320 |
| Ping (ms) UDP BE | 1.56 | 87.41 ms | 41 |
| Ping (ms) UDP BK | 1.61 | 40.95 ms | 21 |
| Ping (ms) UDP EF | 0.13 | 42.59 ms | 1 |
| Ping (ms) avg | 1089.19 | 3825.35 ms | 325 |