Where to view the status of a ZFS mirror

-

Is there a way to see the status of a ZFS mirror in the WebUI, such as drive failures, the rebuild process, etc.?

Basically, what GEOM did previously.

-

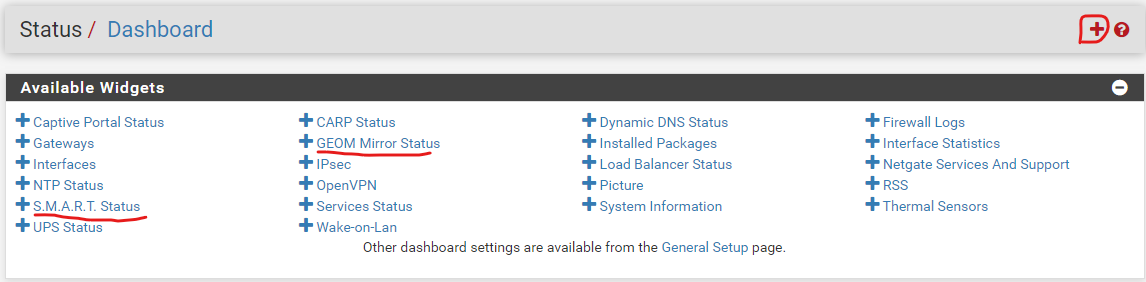

Go to the dashboard and click on the plus in the upper right corner. See below.

-

GEOM Mirror Status displays a "No Mirrors found"-Message. SMART works, of course. However, that's only a fraction of what's necessary to supervise an array or its rebuilding process.

-

@Tenou said in Where to view the status of a ZFS mirror:

GEOM Mirror Status displays a "No Mirrors found"-Message.

I saw the same message but figured that was because I don't actually have a mirrored drive in my setup. Sorry, not sure how to get that working or if there's an alternative. I'm sure others will chime in.

-

Well, I do. AFAIK the GEOM Mirror Status widget is deprecated and was never replaced by anything for ZFS.

-

Just to see if I could do it, I installed zfs-stats (https://www.freshports.org/sysutils/zfs-stats/) on my 2.4.5-1 ZFS box and created a widget and an .inc file. Every 5 minutes a cron job runs a script that checks the mirror state, collects additional ZFS info, and writes the result to a text file that is shown in a frame on the dashboard. It's not pretty; I'll leave that to someone who knows what they're doing.

widget.php goes in /usr/local/www/widgets/widgets (edit the ' src= ' attribute to whatever address and port you use)

.inc goes in /usr/local/www/widgets/include

.sh I put in /root/scripts (if you only want mirror status, comment out the last line). It's FREE (and worth every penny paid!) Have fun!
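
For anyone who only wants the mirror state on the dashboard: the interesting lines of `zpool status` are `pool:`, `state:` and `scan:` (the last one is where a resilver shows up). Here is a rough sketch of pulling just those out, assuming a single pool. `pool_summary` is a made-up helper name, not something zfs-stats or pfSense provides.

```sh
#!/bin/sh
# Sketch only: pool_summary is a hypothetical helper for illustration.
# It reads `zpool status` text on stdin and prints a one-line summary.
pool_summary() {
    awk '
        $1 == "pool:"  { pool = $2 }
        $1 == "state:" { state = $2 }
        # Keep everything after the "scan:" label (first line only).
        $1 == "scan:"  { sub(/^[ \t]*scan:[ \t]*/, ""); scan = $0 }
        END { printf "%s: %s (scan: %s)\n", pool, state, scan }
    '
}

# Usage on the firewall (assumes the pool is called zroot):
#   zpool status zroot | pool_summary
```

During a resilver `zpool status` prints extra progress lines under `scan:`; this sketch keeps only the first one. With more than one pool it reports only the last, so it would need a loop over pool names.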

ZFS_Stats.widget.php

    <?php
    /*
     * File   : /usr/local/www/widgets/widgets/ZFS_Stats.widget.php
     * Author : Zak Ghani
     * Date   : 10-03-2019
     *
     * This software is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES
     * OR CONDITIONS OF ANY KIND, either express or implied.
     *
     */
    require_once("guiconfig.inc");
    ?>
    <!-- Alternative frame size: width="498" height="500" -->
    <iframe
        src="https://192.168.0.1:4343/zfs-stats.txt"
        width="553"
        height="260"
        frameborder="0"
    >
    </iframe>

ZFS_Stats.inc

    <?php
    /*
     * File   : /usr/local/www/widgets/include/ZFS_Stats.inc
     * Author : Zak Ghani
     * Date   : 10-03-2019
     *
     * This software is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES
     * OR CONDITIONS OF ANY KIND, either express or implied.
     *
     */
    $Upload_title = gettext("ZFS Stats");
    ?>

zfs-stats.sh

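    #!/bin/sh
    # Keep the previous report around as zfs-stats.old.
    /bin/cp /usr/local/www/zfs-stats.txt /usr/local/www/zfs-stats.old
    # Pool (mirror) status goes first and overwrites the old report.
    zpool status zroot > /usr/local/www/zfs-stats.txt
    # Append the full zfs-stats report; comment this out for mirror status only.
    /usr/local/bin/zfs-stats -a >> /usr/local/www/zfs-stats.txt

Example report: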

      pool: zroot
     state: ONLINE
      scan: none requested
    config:

        NAME        STATE     READ WRITE CKSUM
        zroot       ONLINE       0     0     0
          da0p3     ONLINE       0     0     0

    errors: No known data errors

    ------------------------------------------------------------------------
    ZFS Subsystem Report                            Sun Jul 19 04:45:00 2020
    ------------------------------------------------------------------------

    System Information:

        Kernel Version:                         1103507 (osreldate)
        Hardware Platform:                      amd64
        Processor Architecture:                 amd64

        ZFS Storage pool Version:               5000
        ZFS Filesystem Version:                 5

    FreeBSD 11.3-STABLE #243 abf8cba50ce(RELENG_2_4_5): Tue Jun 2 17:53:37 EDT 2020 root
    4:45AM up 11:17, 0 users, load averages: 2.04, 1.98, 1.84

    ------------------------------------------------------------------------

    System Memory:

        3.84%   93.51   MiB Active,     9.65%   235.13  MiB Inact
        59.36%  1.41    GiB Wired,      0.00%   0       Bytes Cache
        27.15%  661.28  MiB Free,       -0.01%  -221184 Bytes Gap

        Real Installed:                         2.50    GiB
        Real Available:                 97.64%  2.44    GiB
        Real Managed:                   97.44%  2.38    GiB

        Logical Total:                          2.50    GiB
        Logical Used:                   64.98%  1.62    GiB
        Logical Free:                   35.02%  896.41  MiB

    Kernel Memory:                              180.82  MiB
        Data:                           67.52%  122.09  MiB
        Text:                           32.48%  58.73   MiB

    Kernel Memory Map:                          2.38    GiB
        Size:                           7.01%   170.64  MiB
        Free:                           92.99%  2.21    GiB

    ------------------------------------------------------------------------

    ARC Summary: (HEALTHY)
        Memory Throttle Count:                  0

    ARC Misc:
        Deleted:                                0
        Recycle Misses:                         0
        Mutex Misses:                           0
        Evict Skips:                            0

    ARC Size:                           12.05%  183.41  MiB
        Target Size: (Adaptive)         100.00% 1.49    GiB
        Min Size (Hard Limit):          12.50%  190.28  MiB
        Max Size (High Water):          8:1     1.49    GiB

    ARC Size Breakdown:
        Recently Used Cache Size:       50.00%  761.12  MiB
        Frequently Used Cache Size:     50.00%  761.12  MiB

    ARC Hash Breakdown:
        Elements Max:                           4.20    k
        Elements Current:               99.95%  4.19    k
        Collisions:                             67
        Chain Max:                              1
        Chains:                                 11

    ------------------------------------------------------------------------

    ARC Efficiency:                             2.71    m
        Cache Hit Ratio:                98.09%  2.66    m
        Cache Miss Ratio:               1.91%   51.74   k
        Actual Hit Ratio:               98.00%  2.66    m

        Data Demand Efficiency:         95.14%  633.09  k

        CACHE HITS BY CACHE LIST:
          Anonymously Used:             0.09%   2.49    k
          Most Recently Used:           2.67%   70.99   k
          Most Frequently Used:         97.24%  2.59    m
          Most Recently Used Ghost:     0.00%   0
          Most Frequently Used Ghost:   0.00%   0

        CACHE HITS BY DATA TYPE:
          Demand Data:                  22.65%  602.35  k
          Prefetch Data:                0.00%   0
          Demand Metadata:              77.26%  2.05    m
          Prefetch Metadata:            0.09%   2.49    k

        CACHE MISSES BY DATA TYPE:
          Demand Data:                  59.42%  30.75   k
          Prefetch Data:                0.00%   0
          Demand Metadata:              39.22%  20.30   k
          Prefetch Metadata:            1.36%   704

    ------------------------------------------------------------------------
    L2ARC is disabled
    ------------------------------------------------------------------------

    ------------------------------------------------------------------------
    VDEV cache is disabled
    ------------------------------------------------------------------------

    ZFS Tunables (sysctl):
        kern.maxusers 492
        vm.kmem_size 2553896960
        vm.kmem_size_scale 1
        vm.kmem_size_min 0
        vm.kmem_size_max 1319413950874
        vfs.zfs.trim.max_interval 1
        vfs.zfs.trim.timeout 30
        vfs.zfs.trim.txg_delay 32
        vfs.zfs.trim.enabled 1
        vfs.zfs.vol.immediate_write_sz 32768
        vfs.zfs.vol.unmap_sync_enabled 0
        vfs.zfs.vol.unmap_enabled 1
        vfs.zfs.vol.recursive 0
        vfs.zfs.vol.mode 1
        vfs.zfs.version.zpl 5
        vfs.zfs.version.spa 5000
        vfs.zfs.version.acl 1
        vfs.zfs.version.ioctl 7
        vfs.zfs.debug 0
        vfs.zfs.super_owner 0
        vfs.zfs.immediate_write_sz 32768
        vfs.zfs.sync_pass_rewrite 2
        vfs.zfs.sync_pass_dont_compress 5
        vfs.zfs.sync_pass_deferred_free 2
        vfs.zfs.zio.dva_throttle_enabled 1
        vfs.zfs.zio.exclude_metadata 0
        vfs.zfs.zio.use_uma 1
        vfs.zfs.zio.taskq_batch_pct 75
        vfs.zfs.zil_slog_bulk 786432
        vfs.zfs.cache_flush_disable 0
        vfs.zfs.zil_replay_disable 0
        vfs.zfs.standard_sm_blksz 131072
        vfs.zfs.dtl_sm_blksz 4096
        vfs.zfs.min_auto_ashift 9
        vfs.zfs.max_auto_ashift 13
        vfs.zfs.vdev.trim_max_pending 10000
        vfs.zfs.vdev.bio_delete_disable 0
        vfs.zfs.vdev.bio_flush_disable 0
        vfs.zfs.vdev.def_queue_depth 32
        vfs.zfs.vdev.queue_depth_pct 1000
        vfs.zfs.vdev.write_gap_limit 4096
        vfs.zfs.vdev.read_gap_limit 32768
        vfs.zfs.vdev.aggregation_limit_non_rotating 131072
        vfs.zfs.vdev.aggregation_limit 1048576
        vfs.zfs.vdev.initializing_max_active 1
        vfs.zfs.vdev.initializing_min_active 1
        vfs.zfs.vdev.removal_max_active 2
        vfs.zfs.vdev.removal_min_active 1
        vfs.zfs.vdev.trim_max_active 64
        vfs.zfs.vdev.trim_min_active 1
        vfs.zfs.vdev.scrub_max_active 2
        vfs.zfs.vdev.scrub_min_active 1
        vfs.zfs.vdev.async_write_max_active 10
        vfs.zfs.vdev.async_write_min_active 1
        vfs.zfs.vdev.async_read_max_active 3
        vfs.zfs.vdev.async_read_min_active 1
        vfs.zfs.vdev.sync_write_max_active 10
        vfs.zfs.vdev.sync_write_min_active 10
        vfs.zfs.vdev.sync_read_max_active 10
        vfs.zfs.vdev.sync_read_min_active 10
        vfs.zfs.vdev.max_active 1000
        vfs.zfs.vdev.async_write_active_max_dirty_percent 60
        vfs.zfs.vdev.async_write_active_min_dirty_percent 30
        vfs.zfs.vdev.mirror.non_rotating_seek_inc 1
        vfs.zfs.vdev.mirror.non_rotating_inc 0
        vfs.zfs.vdev.mirror.rotating_seek_offset 1048576
        vfs.zfs.vdev.mirror.rotating_seek_inc 5
        vfs.zfs.vdev.mirror.rotating_inc 0
        vfs.zfs.vdev.trim_on_init 1
        vfs.zfs.vdev.cache.bshift 16
        vfs.zfs.vdev.cache.size 0
        vfs.zfs.vdev.cache.max 16384
        vfs.zfs.vdev.validate_skip 0
        vfs.zfs.vdev.max_ms_shift 38
        vfs.zfs.vdev.default_ms_shift 29
        vfs.zfs.vdev.max_ms_count_limit 131072
        vfs.zfs.vdev.min_ms_count 16
        vfs.zfs.vdev.max_ms_count 200
        vfs.zfs.txg.timeout 5
        vfs.zfs.space_map_ibs 14
        vfs.zfs.spa_allocators 4
        vfs.zfs.spa_min_slop 134217728
        vfs.zfs.spa_slop_shift 5
        vfs.zfs.spa_asize_inflation 24
        vfs.zfs.deadman_enabled 0
        vfs.zfs.deadman_checktime_ms 5000
        vfs.zfs.deadman_synctime_ms 1000000
        vfs.zfs.debug_flags 0
        vfs.zfs.debugflags 0
        vfs.zfs.recover 0
        vfs.zfs.spa_load_verify_data 1
        vfs.zfs.spa_load_verify_metadata 1
        vfs.zfs.spa_load_verify_maxinflight 10000
        vfs.zfs.max_missing_tvds_scan 0
        vfs.zfs.max_missing_tvds_cachefile 2
        vfs.zfs.max_missing_tvds 0
        vfs.zfs.spa_load_print_vdev_tree 0
        vfs.zfs.ccw_retry_interval 300
        vfs.zfs.check_hostid 1
        vfs.zfs.mg_fragmentation_threshold 85
        vfs.zfs.mg_noalloc_threshold 0
        vfs.zfs.condense_pct 200
        vfs.zfs.metaslab_sm_blksz 4096
        vfs.zfs.metaslab.bias_enabled 1
        vfs.zfs.metaslab.lba_weighting_enabled 1
        vfs.zfs.metaslab.fragmentation_factor_enabled 1
        vfs.zfs.metaslab.preload_enabled 1
        vfs.zfs.metaslab.preload_limit 3
        vfs.zfs.metaslab.unload_delay 8
        vfs.zfs.metaslab.load_pct 50
        vfs.zfs.metaslab.min_alloc_size 33554432
        vfs.zfs.metaslab.df_free_pct 4
        vfs.zfs.metaslab.df_alloc_threshold 131072
        vfs.zfs.metaslab.debug_unload 0
        vfs.zfs.metaslab.debug_load 0
        vfs.zfs.metaslab.fragmentation_threshold 70
        vfs.zfs.metaslab.force_ganging 16777217
        vfs.zfs.free_bpobj_enabled 1
        vfs.zfs.free_max_blocks -1
        vfs.zfs.zfs_scan_checkpoint_interval 7200
        vfs.zfs.zfs_scan_legacy 0
        vfs.zfs.no_scrub_prefetch 0
        vfs.zfs.no_scrub_io 0
        vfs.zfs.resilver_min_time_ms 3000
        vfs.zfs.free_min_time_ms 1000
        vfs.zfs.scan_min_time_ms 1000
        vfs.zfs.scan_idle 50
        vfs.zfs.scrub_delay 4
        vfs.zfs.resilver_delay 2
        vfs.zfs.top_maxinflight 32
        vfs.zfs.zfetch.array_rd_sz 1048576
        vfs.zfs.zfetch.max_idistance 67108864
        vfs.zfs.zfetch.max_distance 8388608
        vfs.zfs.zfetch.min_sec_reap 2
        vfs.zfs.zfetch.max_streams 8
        vfs.zfs.prefetch_disable 1
        vfs.zfs.delay_scale 500000
        vfs.zfs.delay_min_dirty_percent 60
        vfs.zfs.dirty_data_sync 67108864
        vfs.zfs.dirty_data_max_percent 10
        vfs.zfs.dirty_data_max_max 4294967296
        vfs.zfs.dirty_data_max 262096076
        vfs.zfs.max_recordsize 1048576
        vfs.zfs.default_ibs 17
        vfs.zfs.default_bs 9
        vfs.zfs.send_holes_without_birth_time 1
        vfs.zfs.mdcomp_disable 0
        vfs.zfs.per_txg_dirty_frees_percent 30
        vfs.zfs.nopwrite_enabled 1
        vfs.zfs.dedup.prefetch 1
        vfs.zfs.dbuf_cache_lowater_pct 10
        vfs.zfs.dbuf_cache_hiwater_pct 10
        vfs.zfs.dbuf_metadata_cache_overflow 0
        vfs.zfs.dbuf_metadata_cache_shift 6
        vfs.zfs.dbuf_cache_shift 5
        vfs.zfs.dbuf_metadata_cache_max_bytes 24940400
        vfs.zfs.dbuf_cache_max_bytes 49880800
        vfs.zfs.arc_min_prescient_prefetch_ms 6
        vfs.zfs.arc_min_prefetch_ms 1
        vfs.zfs.l2c_only_size 0
        vfs.zfs.mfu_ghost_data_esize 0
        vfs.zfs.mfu_ghost_metadata_esize 0
        vfs.zfs.mfu_ghost_size 0
        vfs.zfs.mfu_data_esize 13382656
        vfs.zfs.mfu_metadata_esize 90624
        vfs.zfs.mfu_size 64041472
        vfs.zfs.mru_ghost_data_esize 0
        vfs.zfs.mru_ghost_metadata_esize 0
        vfs.zfs.mru_ghost_size 0
        vfs.zfs.mru_data_esize 43299840
        vfs.zfs.mru_metadata_esize 243712
        vfs.zfs.mru_size 98053120
        vfs.zfs.anon_data_esize 0
        vfs.zfs.anon_metadata_esize 0
        vfs.zfs.anon_size 49152
        vfs.zfs.l2arc_norw 1
        vfs.zfs.l2arc_feed_again 1
        vfs.zfs.l2arc_noprefetch 1
        vfs.zfs.l2arc_feed_min_ms 200
        vfs.zfs.l2arc_feed_secs 1
        vfs.zfs.l2arc_headroom 2
        vfs.zfs.l2arc_write_boost 8388608
        vfs.zfs.l2arc_write_max 8388608
        vfs.zfs.arc_meta_limit 399046400
        vfs.zfs.arc_free_target 4389
        vfs.zfs.arc_kmem_cache_reap_retry_ms 1000
        vfs.zfs.compressed_arc_enabled 1
        vfs.zfs.arc_grow_retry 60
        vfs.zfs.arc_shrink_shift 7
        vfs.zfs.arc_average_blocksize 8192
        vfs.zfs.arc_no_grow_shift 5
        vfs.zfs.arc_min 199523200
        vfs.zfs.arc_max 1596185600
        vfs.zfs.abd_chunk_size 4096
        vfs.zfs.abd_scatter_enabled 1

    ------------------------------------------------------------------------
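
zfs-stats.sh keeps the previous report as zfs-stats.old, but nothing reads it. One possible use, purely as a sketch: compare the `state:` line of the old and new reports and flag a change (say ONLINE to DEGRADED). `report_state` and `state_changed` are hypothetical helper names, and how the change gets surfaced is left open.

```sh
#!/bin/sh
# Sketch only: report_state and state_changed are hypothetical helpers.

# Print the pool state (e.g. ONLINE, DEGRADED) from a saved report file.
report_state() {
    awk '$1 == "state:" { print $2; exit }' "$1"
}

# Exit 0 when the pool state differs between two saved reports.
state_changed() {
    [ "$(report_state "$1")" != "$(report_state "$2")" ]
}

# Usage, with the file names zfs-stats.sh writes:
#   old=/usr/local/www/zfs-stats.old
#   new=/usr/local/www/zfs-stats.txt
#   if state_changed "$old" "$new"; then
#       echo "pool state is now $(report_state "$new")"
#   fi
```

This only looks at the first `state:` line, which is the pool-level state in a report like the one above. Per-device states in the `config:` table are not compared.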