Performance on IDS/IPS

-

Just a heads up.

Lowering the MTU to 1440 and the MSS to 1400 roughly tripled IDS/IPS throughput on the same hardware.

It went from 200 Mbit/s to over 600 Mbit/s.

It seems that any packet fragmentation is, naturally, hard for IDS/IPS to deal with.
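For context, the two numbers are linked. The TCP MSS is the largest TCP payload per segment, so for IPv4 it is the MTU minus a 20-byte IPv4 header and a 20-byte TCP header (assuming no IP or TCP options). A minimal Python sketch of that generic header arithmetic (not pfSense's own code): an MTU of 1440 matches an IPv4 MSS of 1400, and the default 1500 matches 1460.

```python
# Header sizes assume no IP or TCP options.
IPV4_HEADER = 20
IPV6_HEADER = 40
TCP_HEADER = 20


def mss_for_mtu(mtu: int, ipv6: bool = False) -> int:
    """Largest TCP payload (MSS) that fits in one packet of the given MTU."""
    ip_header = IPV6_HEADER if ipv6 else IPV4_HEADER
    return mtu - ip_header - TCP_HEADER


for mtu in (1500, 1440):
    print(f"MTU {mtu}: IPv4 MSS {mss_for_mtu(mtu)}, "
          f"IPv6 MSS {mss_for_mtu(mtu, ipv6=True)}")
```

An MSS larger than the MTU minus 40 would produce IPv4 packets that no longer fit in a single frame.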

-

@Cool_Corona - Very interesting results! Could you please provide a bit more detail describing your IDS/IPS setup and where exactly in pfSense you made those changes? What prompted you to start looking into MTU sizing? Also, what method(s) did you use to assess throughput (before and after the MTU change)?

Thanks in advance!

-

I made the changes on the Interfaces tab, on all interfaces.

I am running full IDS with a lot of rules.

What prompted me was someone testing on OPNsense who found that lowering the standard MTU from 1500 to 1440 and the MSS to 1400, to avoid fragmentation, made throughput jump to almost line speed minus overhead, in both real-world testing and iperf.

iperf went from around 230 Mbit/s to 900 Mbit/s, and real-world testing went from 190 Mbit/s to 650 Mbit/s.

CPU load was lower, too.
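The before/after figures compare more easily as ratios. Tripling throughput (200 to 600 Mbit/s) is 300% of the original figure, which is a 200% increase. A minimal Python sketch using only the numbers reported in this thread (the first post's "600+" is taken as 600):

```python
# Before/after throughput in Mbit/s, as reported in this thread.
results = {
    "first post": (200, 600),
    "iperf": (230, 900),
    "real-world": (190, 650),
}


def speedup(before: float, after: float) -> tuple[float, float]:
    """Return (ratio, percent increase) for a before/after pair."""
    ratio = after / before
    return ratio, (ratio - 1) * 100


for name, (before, after) in results.items():
    ratio, pct = speedup(before, after)
    print(f"{name}: {ratio:.1f}x, +{pct:.0f}%")
```

This prints 3.0x (+200%), 3.9x (+291%) and 3.4x (+242%) respectively.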

-

Do you have a PPPoE interface on your WAN? 1500 is the typical setting for DHCP-type WAN interfaces. PPPoE, however, is different and works much better with a reduced MTU.
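The reason PPPoE wants a smaller MTU is encapsulation overhead: each Ethernet frame carries a 6-byte PPPoE header plus a 2-byte PPP protocol field, so a 1500-byte Ethernet payload leaves 1492 bytes for the IP packet (the maximum MRU in RFC 2516). A minimal Python sketch of that arithmetic; `effective_mtu` is a hypothetical helper, and any extra overhead on a particular link (VLAN tags, tunnels) would be an assumption to add yourself.

```python
ETHERNET_MTU = 1500  # standard Ethernet payload size
PPPOE_HEADER = 6     # version/type, code, session ID, length
PPP_PROTOCOL = 2     # PPP protocol field carried inside PPPoE


def effective_mtu(link_mtu: int, *overheads: int) -> int:
    """IP MTU left after subtracting per-packet encapsulation overhead."""
    return link_mtu - sum(overheads)


pppoe_mtu = effective_mtu(ETHERNET_MTU, PPPOE_HEADER, PPP_PROTOCOL)
print(pppoe_mtu)            # 1492
print(pppoe_mtu - 20 - 20)  # 1452: IPv4 TCP MSS over PPPoE (no options)
```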

-

Thanks @Cool_Corona.

Is this running Snort or Suricata? Is the setup running in IDS (pcap) or IPS (inline) mode? Do you have a link to the testing or discussion where it was found that changing the MTU helps? I'm still not sure why there would be fragmentation at the standard MTU of 1500 bytes, unless perhaps the WAN connection doesn't support it. Thanks again!
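An IPv4 packet is only fragmented when it is larger than the MTU of a link it must cross and its DF bit is clear; with DF set, the packet is dropped and an ICMP "fragmentation needed" message goes back to the sender instead. So at 1500 bytes end to end there should be nothing to fragment. A minimal Python sketch of the resulting IPv4 fragment sizes, assuming no IP options; the 1492-byte link is an illustrative value, not something reported in this thread.

```python
IPV4_HEADER = 20  # assumes no IP options


def ipv4_fragments(packet_len: int, link_mtu: int) -> list[int]:
    """Total sizes of the IPv4 fragments a router emits (DF bit clear).

    Every fragment except the last must carry a multiple of 8 data bytes,
    because the Fragment Offset field counts in 8-byte units.
    """
    if packet_len <= link_mtu:
        return [packet_len]  # fits as-is, no fragmentation
    data_left = packet_len - IPV4_HEADER
    per_fragment = (link_mtu - IPV4_HEADER) // 8 * 8
    sizes = []
    while data_left > 0:
        chunk = min(per_fragment, data_left)
        sizes.append(chunk + IPV4_HEADER)
        data_left -= chunk
    return sizes


print(ipv4_fragments(1500, 1500))  # [1500]
print(ipv4_fragments(1500, 1492))  # [1492, 28]
print(ipv4_fragments(1400, 1492))  # [1400]
```

A full-size packet crossing a slightly smaller link becomes one large and one tiny fragment, which an IDS/IPS generally has to reassemble before it can match rules against the payload.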

-

I have seen some discussion about increasing the snaplen (snapshot length) in Snort, but I'm not sure whether that would help when running in inline (IPS) mode.

https://seclists.org/snort/2015/q1/757
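The snaplen caps how many bytes of each packet the capture layer hands to the detection engine; anything past that limit is not inspected. A minimal Python sketch of the effect on a hypothetical full-size Ethernet frame; the snaplen values are examples, not Snort defaults, and `captured_bytes` is a hypothetical helper.

```python
def captured_bytes(frame_len: int, snaplen: int) -> int:
    """Bytes of one frame that reach the inspection engine."""
    return min(frame_len, snaplen)


# Hypothetical full-size frame: 14-byte Ethernet header + 1500-byte IP
# packet (no VLAN tag).
FRAME_LEN = 14 + 1500

for snaplen in (1500, 1514, 65535):
    got = captured_bytes(FRAME_LEN, snaplen)
    print(f"snaplen {snaplen}: captured {got}, cut off {FRAME_LEN - got}")
```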

-

@bmeeks said in Performance on IDS/IPS:

Do you have a PPPoE interface on your WAN? 1500 is the typical setting for DHCP-type WAN interfaces. PPPoE, however, is different and works much better with a reduced MTU.

No, it's a dedicated 1/1 Gbit/s fiber connection with static IPs.

-

@tman222 said in Performance on IDS/IPS:

Thanks @Cool_Corona.

Is this running Snort or Suricata? Is the setup running in IDS (pcap) or IPS (inline) mode? Do you have a link to the testing or discussion where it was found that changing the MTU helps? I'm still not sure why there would be fragmentation at the standard MTU of 1500 bytes, unless perhaps the WAN connection doesn't support it. Thanks again!

Suricata, in Legacy mode, since I run VMXNET3 adapters and netmap doesn't play well with Inline mode.

-

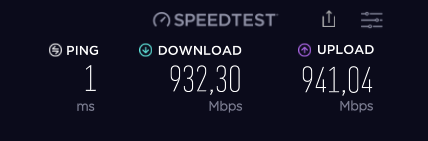

Just a quick test with speedtest. Nothing fancy...

-

Was this change made on both the WAN and LAN, or just on the Snort interface (which for me is the LAN)?

Thanks!

-

Both (all interfaces).