ESXi 7 incompatible if using 7 or 7U1 HW version

-

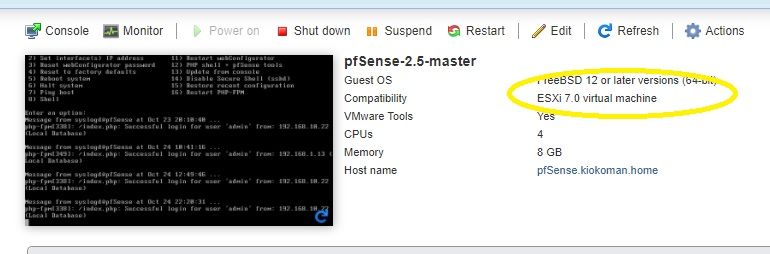

FYI, the two latest VM hardware versions don't appear to work with 2.5 clean installs.

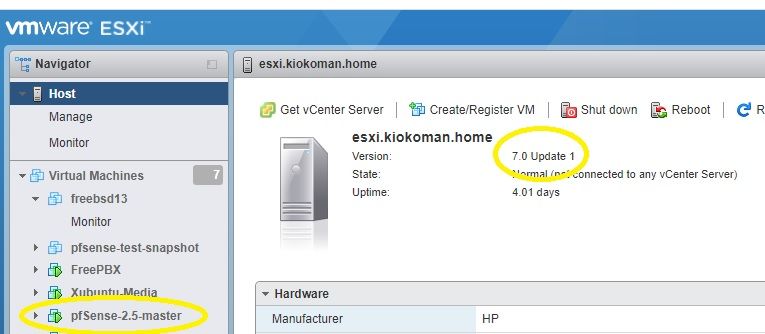

I just recently upgraded from 6.7 to 7.0U1 and decided I'd also start trying 2.5 to help find some bugs.

Using the last 6.7 HW version, everything still works. If you set it to 7.0 or 7.0U1, the install goes fine, but after rebooting the VM just powers off.

This is using EFI and set to FreeBSD 12 64-bit for OS.

The same setup works on 6.7U2 HW compatibility.

ESXi 7 officially includes support up to FreeBSD 13, so I suspect it's maybe something funky with the pfSense setup?
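
For anyone who wants to check an existing VM against the combination described above, here is a minimal Python sketch that reads the relevant settings from a `.vmx` file. It rests on assumptions not stated in this thread: that the keys are `virtualHW.version` and `firmware`, that hardware versions 14, 15, 17 and 18 correspond to ESXi 6.7, 6.7 U2, 7.0 and 7.0 U1, and that a missing `firmware` key means BIOS. The function names are made up for illustration.

```python
import re

# Assumed mapping of virtualHW.version values to ESXi compatibility levels.
HW_VERSIONS = {14: "ESXi 6.7", 15: "ESXi 6.7 U2", 17: "ESXi 7.0", 18: "ESXi 7.0 U1"}

# Matches lines of the form: key = "value"
_VMX_LINE = re.compile(r'^\s*([^#=\s]+)\s*=\s*"(.*)"\s*$')


def parse_vmx(text):
    """Return the key/value pairs of a .vmx file as a dict with lowercased keys."""
    settings = {}
    for line in text.splitlines():
        match = _VMX_LINE.match(line)
        if match:
            settings[match.group(1).lower()] = match.group(2)
    return settings


def matches_reported_failure(settings):
    """True if the VM uses EFI firmware with hardware version 17 or newer.

    This only mirrors the combination reported in this thread; it does not
    diagnose the underlying bug.
    """
    hw_version = int(settings.get("virtualhw.version", "0"))
    # Assumption: no "firmware" key means the VM boots in BIOS mode.
    firmware = settings.get("firmware", "bios").lower()
    return firmware == "efi" and hw_version >= 17


if __name__ == "__main__":
    sample = 'virtualHW.version = "18"\nfirmware = "efi"\nguestOS = "freebsd12-64"\n'
    parsed = parse_vmx(sample)
    level = HW_VERSIONS.get(int(parsed["virtualhw.version"]), "unknown")
    print(level, matches_reported_failure(parsed))
```

To use it on a real VM, pass the contents of the VM's `.vmx` file to `parse_vmx` instead of the sample string.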

-

use SATA/AHCI instead of SCSI

or better

run it in BIOS mode, not UEFI

https://redmine.pfsense.org/issues/10943

-

What version HW is your guest? That's just the host.

Mine runs on 7.0U1 if I leave the HW for the VM on 6.7U2 and it works fine on EFI with SCSI (default).

I guess I can leave it at this for now. I don't really see the point of reinstalling just to switch to BIOS. Really, BIOS mode is kind of old school and everything should work with EFI.

I've run my pfSense this way for years without issues, but I guess in this case it's a toss-up between a VMware bug and a FreeBSD bug, from what those links show.

Funny thing is I tried to search and didn't find it LOL, so thanks for pointing it out for me!

-

Do not use EFI booting. There is no compelling reason for it on FreeBSD, and at the moment there are known issues with it.

https://redmine.pfsense.org/issues/10943

-

Well, my reasoning was that these days most things emulate BIOS, whereas historically it was EFI that was being emulated, so I default to EFI for both physical machines and VMs. Intel is also dropping BIOS support this year: it announced in 2017 that by 2020 it would no longer support BIOS.

So while BIOS may be a workaround, in my opinion there are more reasons not to use it than to use it, especially when big players are dropping it.

Hopefully FreeBSD can fix this soon, though.

-

That may be true for hardware but that has no bearing on hypervisors whatsoever.

-

Well, yes and no.

It's just that hypervisors are intended to replicate the HW level. So if something is removed from HW support, for example by Intel, it also goes away for drivers and other things, and that stuff does play a role on hypervisors. The same is true for OSes. Why would an OS want to support something legacy that the hardware OEMs have EOL'd? It just adds complexity and risk. If a vulnerability or flaw comes out, they now have to deal with it simply because they didn't want to move on with the world.

Otherwise I agree, the same rules don't always apply. These sorts of issues end up existing purely because the legacy stuff is still around: it creates more test cases and more of a chance for things to be missed. Imagine if EFI was the only option today. This bug would have never made it out the gate hah. Instead it wasn't caught in testing and made it downstream.

But for now the options are what they are: use BIOS, use an older HW version, or swap the controller. I'll stick with the older HW version I think, since that works and I don't "need" to run the newer ones anyway.
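
As a rough summary of those three options, here is a small Python sketch that lists the workarounds mentioned in this thread for a given VM configuration. The function name and parameters are hypothetical, and the numeric hardware versions (17 for 7.0, 18 for 7.0U1, 15 for 6.7U2) are an assumption not stated in the thread. It only encodes what was reported here (EFI plus a 7.0 or newer HW version plus a SCSI controller); it is not a statement about the root cause.

```python
def workarounds(hw_version, firmware, disk_controller):
    """List the workarounds from this thread that apply to a VM configuration.

    hw_version:      numeric VM hardware version (assumed: 17 = 7.0, 18 = 7.0U1)
    firmware:        "efi" or "bios"
    disk_controller: "scsi" or "sata"
    """
    affected = (
        firmware.lower() == "efi"
        and hw_version >= 17
        and disk_controller.lower() == "scsi"
    )
    if not affected:
        return []
    return [
        "Boot in BIOS mode instead of EFI",
        # Assumption: 15 is the hardware version for 6.7U2 compatibility.
        "Keep the VM hardware version at 6.7U2 (assumed version 15) or older",
        "Use a SATA/AHCI controller instead of SCSI",
    ]


if __name__ == "__main__":
    for option in workarounds(18, "efi", "scsi"):
        print("-", option)
```

A VM left on the 6.7U2 hardware version with EFI and SCSI, like the one described above, gets an empty list back, which matches the report that it keeps working.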