IPSEC Tunnel over 100gbps network with TNSR

-

Please let us know how your testing progresses.

-

A quick answer...

I did get some improvement... Referring to the architecture shown in the attached PDF, I set the clients' MTU to 1500 instead of 9000. The iperf3 test gave 25 Gbps. So the issue here is finding the right MTU/MSS combination.

I'm pretty sure I can get more speed, hopefully double it.

I'll keep in touch... thanks

Regards, Myke.

-

@michel-jacob-calculquebec-ca

Hello Myke,

Please try increasing the default-data-size for buffers on the TNSR instances and see if that improves the situation with 9000 MTU:

tnsr(config)# dataplane buffers default-data-size 16384

The default iperf3 MSS should be 8948 when using 9000 MTU on the client. This will result in fragmentation occurring over IPsec. I recommend running an additional test with the MSS set to 8870 (-M 8870) to compare.
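The 8948 figure above can be reproduced with simple header arithmetic. The sketch below is only illustrative: it assumes IPv4, a 20-byte TCP header, and TCP timestamps enabled (12 bytes of options), and the function name is hypothetical. It does not model the IPsec overhead itself, which depends on the negotiated proposal.

```python
# Header sizes in bytes (assumptions: IPv4 without options, TCP timestamps on).
IPV4_HEADER = 20
TCP_HEADER = 20
TCP_TIMESTAMP_OPTION = 12  # 10-byte option padded to a 4-byte boundary


def payload_per_segment(mtu: int, timestamps: bool = True) -> int:
    """Largest TCP payload that fits in one unfragmented IPv4 packet."""
    options = TCP_TIMESTAMP_OPTION if timestamps else 0
    return mtu - IPV4_HEADER - TCP_HEADER - options


default_mss = payload_per_segment(9000)
suggested_mss = 8870  # value passed to iperf3 with -M in the post above

print(default_mss)                  # 8948
print(default_mss - suggested_mss)  # 78 bytes smaller than the default
```

In other words, the suggested value shrinks each segment by 78 bytes compared with the default, which leaves some room for the tunnel encapsulation before a packet would exceed the 9000-byte MTU.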

If issues occur, please send a tnsr-diag my way:

shell sudo tnsr-diag

Thanks!

-

Hi!

Thanks for the suggestions.

I tried it and got an improvement. Just by changing the dataplane buffers, it now works with the MTU fixed at 9000 on both clients.

Got 34gbps!

Then, I tried changing the MSS and no change from the previous test... same speed.

I will run some tests transferring huge files (> 500 GB) to check for corruption, data loss, and packet drops.

If you have other ideas like these good ones, please, I will be happy to try them ;-)

As I said in my document, we are targeting 60 Gbps (maybe this is asking too much... anyway). But at 34 Gbps, it's a good solution.

Thanks again, until next time.

(I'll keep you informed)

Michel

-

I found out that the number of workers was 1 on both dataplanes… I don't recall changing it back to 1. So I ran "tnsr(config)# dataplane cpu workers 4" again. It stopped working. I checked, and all my interfaces were missing on tnsr1 (tnsr2 was OK). I rebooted both TNSR instances, loaded the IPsec configuration back, and changed the dataplane buffers with only one worker on each dataplane. The transfer speed was back at 34 Gbps. I then set 4 workers on the dataplane again... this time the interfaces were still there, but I could not get high speed even though both clients were able to ping through the IPsec tunnel. I did another reboot and changed only the workers (not the buffers). I lost the interfaces on tnsr1 again for a while, then they came back... no ping through the IPsec tunnel.

After some tests and observations: when I tried to set CPU affinity, it stopped working. In /var/log/messages, VPP keeps being respawned and all interfaces go down. I checked, and the affinity is in place; the interfaces' RX queues are also pinned to one of the workers. It became unstable… Sometimes the client's gateway (TNSR) was reachable and sometimes not (maybe during the restart). I think it is also related to IPsec… it looks like it was trying to establish the tunnel, was not able to do so, and then the VPP layer restarted. Anyway, I attached a tnsr-diag from both sides (please note that ipsec1 is tnsr1 and ipsec2 is tnsr2).

Thanks

Mike

tnsr-diag-ipsec2-2020-12-07-050824.zip

tnsr-diag-ipsec1-2020-12-07-045210.zip

-

@michel-jacob-calculquebec-ca

It looks like you have two physical CPUs that are 4 cores each - for a total of 8 cores. Generally this isn't an issue but I wonder if the ID that is used to reference a CPU core is getting mixed up, especially considering how the core IDs are being presented further down below:

Dec 7 03:17:30 ipsec1 kernel: smp: Bringing up secondary CPUs ...
Dec 7 03:17:30 ipsec1 kernel: x86: Booting SMP configuration:
Dec 7 03:17:30 ipsec1 kernel: .... node #1, CPUs: #1
Dec 7 03:17:30 ipsec1 kernel: smpboot: CPU 1 Converting physical 0 to logical die 1
Dec 7 03:17:30 ipsec1 kernel: .... node #0, CPUs: #2
Dec 7 03:17:30 ipsec1 kernel: .... node #1, CPUs: #3
Dec 7 03:17:30 ipsec1 kernel: .... node #0, CPUs: #4
Dec 7 03:17:30 ipsec1 kernel: .... node #1, CPUs: #5
Dec 7 03:17:30 ipsec1 kernel: .... node #0, CPUs: #6
Dec 7 03:17:30 ipsec1 kernel: .... node #1, CPUs: #7
Dec 7 03:17:30 ipsec1 kernel: smp: Brought up 2 nodes, 8 CPUs

PIDs: 0 and 1

Processor # - Core ID:

0 - 8

1 - 5

2 - 13

3 - 13

4 - 4

5 - 8

6 - 9

7 - 9

It's strange that there are corresponding pairs of core IDs, with the exception of the first core from each physical CPU.

processor : 0  physical id : 0  siblings : 4  core id : 8   cpu cores : 4
processor : 1  physical id : 1  siblings : 4  core id : 5   cpu cores : 4
processor : 2  physical id : 0  siblings : 4  core id : 13  cpu cores : 4
processor : 3  physical id : 1  siblings : 4  core id : 13  cpu cores : 4
processor : 4  physical id : 0  siblings : 4  core id : 4   cpu cores : 4
processor : 5  physical id : 1  siblings : 4  core id : 8   cpu cores : 4
processor : 6  physical id : 0  siblings : 4  core id : 9   cpu cores : 4
processor : 7  physical id : 1  siblings : 4  core id : 9   cpu cores : 4

Can you post the output of shell lscpu? It should align with the details above.

Instead of specifying the number of workers, try using the corelist-workers option. When you enter this command, press [tab] or ? after it to see what CPUs are listed, and please post back with what those are. Example:

tnsr(config)# dataplane cpu corelist-workers
0 # of core
1 # of core
10 # of core
11 # of core
12 # of core
13 # of core
14 # of core
15 # of core
2 # of core
3 # of core
4 # of core
5 # of core
6 # of core
7 # of core
8 # of core
9 # of core
<corelist-workers> # of core

In the instance where no worker count is specified, VPP reports vpp_main is assigned like so:

ID  Name      Type  LWP   Sched Policy (Priority)  lcore  Core  Socket  State
0   vpp_main        5504  other (0)                1      5     1

This indicates it's running on the first CPU core on the physical CPU residing in the 2nd CPU socket (NUMA node 1). It's interesting that it chose to use NUMA node 1 instead of NUMA node 0.
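To make the processor-to-core mapping easier to read, the /proc/cpuinfo excerpt quoted above can be grouped by physical CPU. This is only a rough sketch: the regular expression and the function name are illustrative, and the input is the excerpt from this thread rather than a live system. One thing worth keeping in mind when reading the result is that the kernel's "core id" is only meaningful within a given "physical id", so the same core ID showing up on both sockets is not necessarily a conflict.

```python
import re
from collections import defaultdict

# /proc/cpuinfo excerpt as quoted in this thread (ipsec1).
CPUINFO = """
processor : 0 physical id : 0 siblings : 4 core id : 8 cpu cores : 4
processor : 1 physical id : 1 siblings : 4 core id : 5 cpu cores : 4
processor : 2 physical id : 0 siblings : 4 core id : 13 cpu cores : 4
processor : 3 physical id : 1 siblings : 4 core id : 13 cpu cores : 4
processor : 4 physical id : 0 siblings : 4 core id : 4 cpu cores : 4
processor : 5 physical id : 1 siblings : 4 core id : 8 cpu cores : 4
processor : 6 physical id : 0 siblings : 4 core id : 9 cpu cores : 4
processor : 7 physical id : 1 siblings : 4 core id : 9 cpu cores : 4
"""

# Captures: logical processor number, physical id (socket), core id.
RECORD = re.compile(
    r"processor\s*:\s*(\d+)\s+physical id\s*:\s*(\d+)\s+"
    r"siblings\s*:\s*\d+\s+core id\s*:\s*(\d+)"
)


def cores_by_socket(text):
    """Map each physical id to a list of (processor, core id) pairs."""
    sockets = defaultdict(list)
    for cpu, socket, core in RECORD.findall(text):
        sockets[int(socket)].append((int(cpu), int(core)))
    return dict(sockets)


for socket, cpus in sorted(cores_by_socket(CPUINFO).items()):
    print(f"physical id {socket}: {cpus}")
# physical id 0: [(0, 8), (2, 13), (4, 4), (6, 9)]
# physical id 1: [(1, 5), (3, 13), (5, 8), (7, 9)]
```

Grouped this way, even-numbered processors sit on physical CPU 0 and odd-numbered ones on physical CPU 1, each with four distinct core IDs.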

ipsec1 kernel: c6xx 0000:da:00.0: IOMMU should be enabled for SR-IOV to work correctly

It does look like QAT VFs are being generated, but can you make sure SR-IOV is enabled in your BIOS? The above is one of the crypto device endpoints.

I assume you are restarting the dataplane after setting the worker count:

service dataplane restart

Try waiting 15 seconds after doing this on one side, then restart on the other and wait for 15 seconds, and then try to send a ping across before you try sending traffic over it.

-

Here is the lscpu output:

ipsec1 tnsr(config)# shell lscpu
Architecture: x86_64
CPU op-mode(s): 32-bit, 64-bit
Byte Order: Little Endian
CPU(s): 8
On-line CPU(s) list: 0-7
Thread(s) per core: 1
Core(s) per socket: 4
Socket(s): 2
NUMA node(s): 2
Vendor ID: GenuineIntel
CPU family: 6
Model: 85
Model name: Intel(R) Xeon(R) Gold 5222 CPU @ 3.80GHz
Stepping: 7
CPU MHz: 3862.902
CPU max MHz: 3900.0000
CPU min MHz: 1200.0000
BogoMIPS: 7600.00
Virtualization: VT-x
L1d cache: 32K
L1i cache: 32K
L2 cache: 1024K
L3 cache: 16896K
NUMA node0 CPU(s): 0,2,4,6
NUMA node1 CPU(s): 1,3,5,7
Flags: fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush dts acpi mmx fxsr sse sse2 ss ht tm pbe syscall nx pdpe1gb rdtscp lm constant_tsc art arch_perfmon pebs bts rep_good nopl xtopology nonstop_tsc cpuid aperfmperf pni pclmulqdq dtes64 monitor ds_cpl vmx smx est tm2 ssse3 sdbg fma cx16 xtpr pdcm pcid dca sse4_1 sse4_2 x2apic movbe popcnt tsc_deadline_timer aes xsave avx f16c rdrand lahf_lm abm 3dnowprefetch cpuid_fault epb cat_l3 cdp_l3 invpcid_single intel_ppin ssbd mba ibrs ibpb stibp ibrs_enhanced tpr_shadow vnmi flexpriority ept vpid fsgsbase tsc_adjust bmi1 hle avx2 smep bmi2 erms invpcid rtm cqm mpx rdt_a avx512f avx512dq rdseed adx smap clflushopt clwb intel_pt avx512cd avx512bw avx512vl xsaveopt xsavec xgetbv1 xsaves cqm_llc cqm_occup_llc cqm_mbm_total cqm_mbm_local dtherm ida arat pln pts pku ospke avx512_vnni md_clear flush_l1d arch_capabilities

And then "dataplane cpu corelist-workers ?":

ipsec1 tnsr(config)# dataplane cpu corelist-workers
0 # of core
1 # of core
2 # of core
3 # of core
4 # of core
5 # of core
6 # of core
7 # of core
<corelist-workers> # of core

SR-IOV is disabled in the BIOS, since I also disabled everything related to virtualization... is TNSR using it anyway?

I saw that the IPsec interface (ipip0) is active on both TNSR servers, since both client systems can ping those interfaces (172.32.0.0/30) without interruption. As a matter of fact, every interface (WAN, LAN, ipip0) can be reached by its corresponding client. It's just that the tunnel never comes up between the two.

Is there something to change in IPsec or VPP related to the cores?

Is it OK if irqbalance is running on TNSR?

Tomorrow I'll check the BIOS to see if something is misconfigured.

Hope it helps.

Thanks... I really appreciate it.

Mike

-

December 10th

Performance results for December 10th

1 worker in routing mode only

[ 4] 0.00-200.00 sec 225 GBytes 9.65 Gbits/sec 8405 sender
[ 4] 0.00-200.00 sec 213 GBytes 9.14 Gbits/sec 7641 sender
[ 4] 0.00-200.00 sec 228 GBytes 9.80 Gbits/sec 8849 sender
[ 4] 0.00-200.00 sec 207 GBytes 8.89 Gbits/sec 7627 sender
[ 4] 0.00-200.00 sec 206 GBytes 8.84 Gbits/sec 7547 sender
[ 4] 0.00-200.00 sec 229 GBytes 9.83 Gbits/sec 8743 sender
[ 4] 0.00-200.00 sec 203 GBytes 8.72 Gbits/sec 7888 sender
[ 4] 0.00-200.00 sec 224 GBytes 9.64 Gbits/sec 8674 sender
[ 4] 0.00-200.00 sec 198 GBytes 8.51 Gbits/sec 8194 sender
[ 4] 0.00-200.00 sec 227 GBytes 9.75 Gbits/sec 8774 sender

Which gives 92.77 Gbps

3 workers in routing mode only

ipsec1 tnsr(config)# show dataplane cpu threads
ID Name     Type    PID  LCore Core Socket
-- -------- ------- ---- ----- ---- ------
0  vpp_main         4910 1     5    1
1  vpp_wk_0 workers 4914 2     5    0
2  vpp_wk_1 workers 4915 3     12   1
3  vpp_wk_2 workers 4916 4     2    0

ipsec2 tnsr(config)# show dataplane cpu threads
ID Name     Type    PID  LCore Core Socket
-- -------- ------- ---- ----- ---- ------
0  vpp_main         5009 1     5    1
1  vpp_wk_0 workers 5013 2     13   0
2  vpp_wk_1 workers 5014 3     13   1
3  vpp_wk_2 workers 5015 4     4    0

No changes related to performance

1 worker in encrypting mode IPSEC(aes256-sha512-modp4096)

[ 4] 0.00-200.00 sec 77.3 GBytes 3.32 Gbits/sec 1594 sender
[ 4] 0.00-200.00 sec 84.6 GBytes 3.63 Gbits/sec 1776 sender
[ 4] 0.00-200.00 sec 79.6 GBytes 3.42 Gbits/sec 1609 sender
[ 4] 0.00-200.00 sec 80.2 GBytes 3.45 Gbits/sec 1732 sender
[ 4] 0.00-200.00 sec 82.4 GBytes 3.54 Gbits/sec 2110 sender
[ 4] 0.00-200.00 sec 80.4 GBytes 3.46 Gbits/sec 1509 sender
[ 4] 0.00-200.00 sec 78.3 GBytes 3.36 Gbits/sec 1642 sender
[ 4] 0.00-200.00 sec 79.8 GBytes 3.43 Gbits/sec 1526 sender
[ 4] 0.00-200.00 sec 83.1 GBytes 3.57 Gbits/sec 2211 sender
[ 4] 0.00-200.00 sec 81.1 GBytes 3.48 Gbits/sec 2016 sender

Which gives 34.66 Gbps

3 workers in encrypting mode IPSEC(aes256-sha512-modp4096)

At this moment, it is not functional. Here is what I have after some analysis…

Each client is able to ping its gateway, which is the LAN interface in TNSR;
Each client is able to ping its corresponding IPsec interface ipip0 in TNSR;
From one TNSR, unable to ping the IPsec interface at the other end of the tunnel (the other TNSR);
In /var/log/messages for December 10th:
    VPP stopped and was repeatedly respawned
    strongSwan and charon stopped and restarted (as VPP did)

Still analyzing the logs at this moment...
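The two aggregate figures above are simply the sum of the ten per-stream rates. For anyone repeating the measurement, a small script along these lines can do the totalling. It is a sketch under assumptions: the regular expression and function name are illustrative, and the number just before "sender" is taken to be iperf3's retransmit count.

```python
import re

# Per-stream iperf3 sender summaries copied from the results above.
ROUTING_ONLY = """
[ 4] 0.00-200.00 sec 225 GBytes 9.65 Gbits/sec 8405 sender
[ 4] 0.00-200.00 sec 213 GBytes 9.14 Gbits/sec 7641 sender
[ 4] 0.00-200.00 sec 228 GBytes 9.80 Gbits/sec 8849 sender
[ 4] 0.00-200.00 sec 207 GBytes 8.89 Gbits/sec 7627 sender
[ 4] 0.00-200.00 sec 206 GBytes 8.84 Gbits/sec 7547 sender
[ 4] 0.00-200.00 sec 229 GBytes 9.83 Gbits/sec 8743 sender
[ 4] 0.00-200.00 sec 203 GBytes 8.72 Gbits/sec 7888 sender
[ 4] 0.00-200.00 sec 224 GBytes 9.64 Gbits/sec 8674 sender
[ 4] 0.00-200.00 sec 198 GBytes 8.51 Gbits/sec 8194 sender
[ 4] 0.00-200.00 sec 227 GBytes 9.75 Gbits/sec 8774 sender
"""

IPSEC = """
[ 4] 0.00-200.00 sec 77.3 GBytes 3.32 Gbits/sec 1594 sender
[ 4] 0.00-200.00 sec 84.6 GBytes 3.63 Gbits/sec 1776 sender
[ 4] 0.00-200.00 sec 79.6 GBytes 3.42 Gbits/sec 1609 sender
[ 4] 0.00-200.00 sec 80.2 GBytes 3.45 Gbits/sec 1732 sender
[ 4] 0.00-200.00 sec 82.4 GBytes 3.54 Gbits/sec 2110 sender
[ 4] 0.00-200.00 sec 80.4 GBytes 3.46 Gbits/sec 1509 sender
[ 4] 0.00-200.00 sec 78.3 GBytes 3.36 Gbits/sec 1642 sender
[ 4] 0.00-200.00 sec 79.8 GBytes 3.43 Gbits/sec 1526 sender
[ 4] 0.00-200.00 sec 83.1 GBytes 3.57 Gbits/sec 2211 sender
[ 4] 0.00-200.00 sec 81.1 GBytes 3.48 Gbits/sec 2016 sender
"""

# Captures the rate in Gbits/sec and the count that precedes "sender".
SENDER_LINE = re.compile(r"([\d.]+) Gbits/sec\s+(\d+)\s+sender")


def aggregate(report):
    """Return (total Gbits/sec rounded to 2 places, total retransmits)."""
    rows = [(float(rate), int(retr)) for rate, retr in SENDER_LINE.findall(report)]
    total_rate = round(sum(rate for rate, _ in rows), 2)
    total_retr = sum(retr for _, retr in rows)
    return total_rate, total_retr


print(aggregate(ROUTING_ONLY))  # (92.77, 82342)
print(aggregate(IPSEC))         # (34.66, 17725)
```

Both totals match the figures reported above; the retransmit totals are shown only as an extra data point.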

-

Update Feb 19

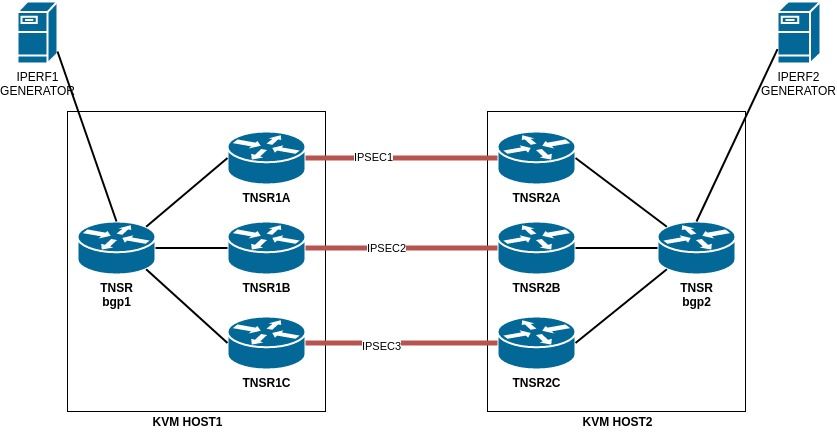

New test in virtual host using another instance of virtualized TNSR... as this:

I only have 1 generator on each side, so BGP won't use the total bandwidth of the three IPsec tunnels.

Anyway, I got 40 Gbps with redundancy (meaning that if I drop 1 or even 2 paths during the test, iperf continues through the IPsec tunnel at the same speed).

Still hoping to use fewer TNSR instances at higher speed.

M.

-

@michel-jacob-calculquebec-ca

Thanks for sharing your progress Michel!