NAT and DPDK Observation

-

I'm supporting the network for a relatively large event where around 1100-1500 users will connect their own desktops and laptops to a "standard" campus LAN switch architecture (Access/Edge -> Distribution -> Core). Their traffic must then be NAT'd to reach the internet. We chose TNSR to replace pfSense Plus for this use case after reviewing its NAT pool feature, and downloaded the TNSR Lab software to do some functionality testing. I'm hoping to get some context on errors we observed while testing, and on possible tuning options should those errors turn out to be detrimental at scale. Two engineers observed this behavior separately while testing TNSR with identical configurations, on both bare-metal hardware and a virtual machine. Note that the only other thing the tests had in common is that the traffic sources were VMware VMs.
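Because every client's traffic is translated through the NAT pool, it may help to see roughly what a pooled source NAT (PAT) has to track. The following is a minimal sketch under stated assumptions. It is not TNSR's or VPP's NAT implementation: the class name `PooledNat`, the round-robin choice of pool address, the sequential port allocator, and the documentation-range addresses are all hypothetical. Each inside-initiated flow gets its own (pool address, port) pair, and an inbound packet is forwarded only if it matches an existing session.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical model of a source NAT (PAT) table backed by an address pool.
# NOT TNSR/VPP's allocator; it only illustrates the general idea.
Endpoint = Tuple[str, int]           # (IP address, port)
Session = Tuple[Endpoint, Endpoint]  # (inside endpoint, remote endpoint)


class PooledNat:
    def __init__(self, pool: List[str], first_port: int = 1024, last_port: int = 65535) -> None:
        self.pool = pool
        self.last_port = last_port
        self.next_port: Dict[str, int] = {ip: first_port for ip in pool}
        self.next_index = 0                       # round-robin position in the pool
        self.out_of: Dict[Session, Endpoint] = {}  # session -> translated outside endpoint
        self.in_of: Dict[Endpoint, Session] = {}   # outside endpoint -> session

    def _allocate(self) -> Endpoint:
        """Pick the next free (pool address, port), rotating through the pool."""
        for _ in range(len(self.pool)):
            ip = self.pool[self.next_index]
            self.next_index = (self.next_index + 1) % len(self.pool)
            port = self.next_port[ip]
            if port <= self.last_port:
                self.next_port[ip] = port + 1
                return (ip, port)
        raise RuntimeError("NAT pool exhausted")

    def outbound(self, inside: Endpoint, remote: Endpoint) -> Endpoint:
        """Translate an inside-initiated packet; the first packet creates the session."""
        session = (inside, remote)
        if session not in self.out_of:
            outside = self._allocate()
            self.out_of[session] = outside
            self.in_of[outside] = session
        return self.out_of[session]

    def inbound(self, remote: Endpoint, outside: Endpoint) -> Optional[Endpoint]:
        """Return the inside endpoint for a reply, or None (drop) if no session matches."""
        session = self.in_of.get(outside)
        if session is None or session[1] != remote:
            return None
        return session[0]


if __name__ == "__main__":
    nat = PooledNat(["203.0.113.10", "203.0.113.11"])
    web = ("198.51.100.7", 443)
    a = nat.outbound(("10.0.0.5", 50000), web)  # ('203.0.113.10', 1024)
    b = nat.outbound(("10.0.0.6", 50000), web)  # ('203.0.113.11', 1024)
    print(a, b)
    print(nat.inbound(web, a))                  # ('10.0.0.5', 50000)
    print(nat.inbound(("192.0.2.99", 443), a))  # None: unsolicited, dropped
```

A real NAT also needs session timeouts and port reuse. This sketch never frees ports, so it only illustrates the lookup logic and why each public pool address can carry a limited number of concurrent sessions.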

I set up a small lab network to do throughput testing on a Netgate XG-1541 with TNSR 22.02-1 installed. The goal was to assess stability and performance under load, emulating the peak traffic levels expected during the event. To configure the device initially, I followed the Zero-to-Ping documentation along with additional TNSR docs and the VPP/DPDK documentation.

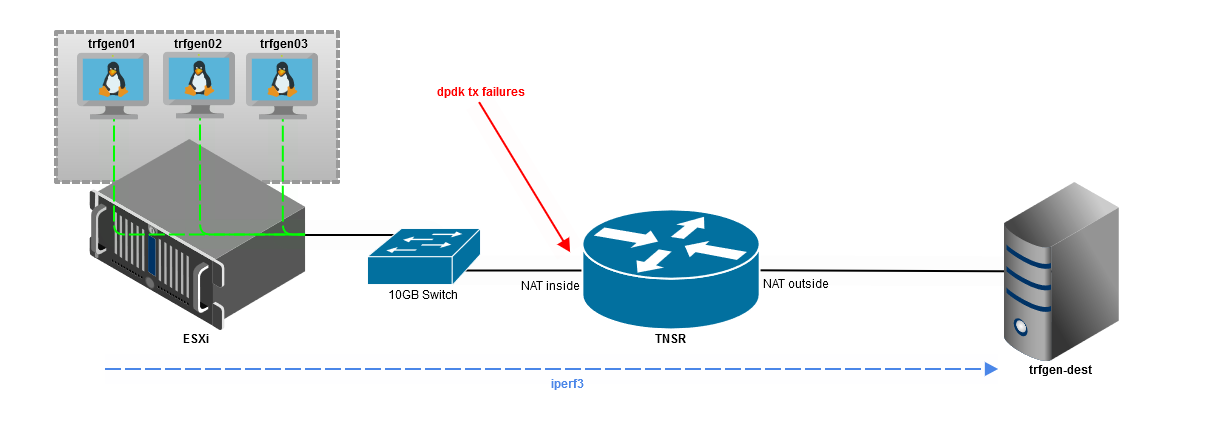

The setup for the bare-metal test is fairly simple:

- Three VMware virtual machines running Ubuntu Server 20.04, set up as iperf3 traffic sources (clients). The underlying infrastructure is ESXi 6.7.

- Each VM is attached to the same port group on a vSphere Distributed Switch, which is mapped to a 10 Gb physical adapter/uplink. That uplink connects to a 10 Gb switch with a Cat6 cable.

- The XG-1541 running TNSR, fitted with a Chelsio 10 Gb NIC, connects to the same 10 Gb switch with a DAC.

- A bare-metal host running Ubuntu Server 20.04 with a Chelsio 10 Gb NIC connects to the second interface of the XG-1541's Chelsio NIC with a DAC.

A basic diagram of the lab setup:

iperf3 is installed on all three source VMs and on the destination host. The client-side command looks something like this on each source VM, with each stream using a unique port in the range 5101-5109:

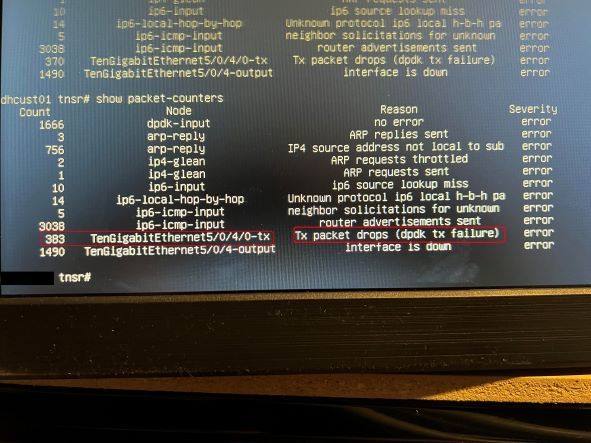

    iperf3 -c 10.10.10.10 -T s1 -n 2048M --bidir -p 5101 &
    iperf3 -c 10.10.10.10 -T s2 -n 2048M --bidir -p 5102 &
    iperf3 -c 10.10.10.10 -T s3 -n 2048M --bidir -p 5103

During the test, we saw TX packet drops ('dpdk tx failure') accruing on the inside NAT interface in the output of 'show packet-counters'. This is the interface connected to the 10 Gb switch that serves the VM infrastructure. The errors did not accrue unless iperf3 was running; while it was running, we observed roughly 10 errors per second. The screenshot below shows the total number of errors seen after all nine iperf3 streams completed:

Finally, the TNSR running configuration that can be used to recreate this lab if needed:

I'd like to understand these errors well enough to determine whether they will degrade performance at scale. Ideally, I'd also like to confirm that the configuration needs no further adjustment or tuning to prevent them. Any guidance or pointers to applicable documentation would be tremendously helpful.
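To put "roughly 10 errors per second" in perspective, here is a back-of-envelope sketch of what fraction of packets that represents. It hypothetically assumes the inside interface carries a full 10 Gb/s of maximum-size 1518-byte Ethernet frames (1500-byte MTU plus 18 bytes of header and FCS). It also counts the standard 20 bytes per frame of preamble, start-of-frame delimiter, and inter-frame gap. Neither assumption comes from the lab measurements, and the function names are illustrative only.

```python
# Hypothetical back-of-envelope check: what fraction of packets do ~10 drops/s represent?
# Assumptions (not lab measurements): the link runs at full 10 Gb/s line rate and
# every frame is a full-size 1518-byte Ethernet frame (1500-byte MTU + 18 bytes header/FCS).

LINE_RATE_BPS = 10_000_000_000  # 10 Gb/s link
WIRE_OVERHEAD_BYTES = 20        # 7-byte preamble + 1-byte SFD + 12-byte inter-frame gap


def max_packets_per_second(frame_bytes: int, line_rate_bps: int = LINE_RATE_BPS) -> float:
    """Theoretical packets per second for a given Ethernet frame size at line rate."""
    bits_on_wire = (frame_bytes + WIRE_OVERHEAD_BYTES) * 8
    return line_rate_bps / bits_on_wire


def drop_fraction(drops_per_second: float, packets_per_second: float) -> float:
    """Fraction of transmitted packets that were dropped."""
    return drops_per_second / packets_per_second


if __name__ == "__main__":
    pps = max_packets_per_second(1518)
    frac = drop_fraction(10, pps)
    # prints "812,744 pps at line rate, drop fraction 1.23e-05"
    print(f"{pps:,.0f} pps at line rate, drop fraction {frac:.2e}")
```

Under these assumptions the drops amount to about 0.0012% of packets. Smaller frames raise the packet rate and shrink the fraction, while a link running below line rate raises it proportionally, so the real figure depends on the actual packet rate during the test.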

Let me know if there is anything I can better clarify!

-

Hi,

Would you please explain why you need, or use, both NAT inside and NAT outside? As I understand it, NAT only answers and passes through packets that were requested by an internal IP, and that IP is then written into the NAT table. Otherwise, no traffic passes through. So my question to you is: why double NAT?

-

Posting the solution here for anyone who runs into similar issues...

The problem turned out to be hardware-related. The Chelsio SFP+ NIC was identified as the cause of the TX drops, and after working with Netgate TAC we confirmed that the best course of action was to use a different NIC. There are a number of alternatives available, but we chose the Intel X710 offering from Netgate to ensure compatibility with the XG-1541.

Once the cards were swapped out, we ran the same test and confirmed that the TX errors were no longer present. As of this writing, we have also successfully deployed TNSR at our event for the first time using the new NICs, and it performed beautifully.

One important thing to note: after we configured everything needed to support our event (VRRP, NAT, bonded interfaces, routing, etc.), things generally behaved as expected (i.e., connectivity worked). However, when we observed the ARP tables on the switches each router connected to, the VRRP MAC address flapped between the primary and secondary routers every couple of seconds. Per the Netgate documentation, this was apparently related to the Intel X710 NICs. We resolved it by adding "devargs disable_source_pruning=1" to the configuration that brings the physical interfaces from the host OS into the dataplane. (See https://docs.netgate.com/tnsr/en/latest/advanced/dataplane-dpdk.html for documentation.)