How to get pfSense on ESXi up to 10 Gbit?

-

Hi Folks,

May I ask for advice on how I can get pfSense up to 10gbit?

some testing with iperf3:

debian VM -> debian VM close to 10gbit

windows PC -> Debian VM 10gbit

So that "verifies" the general 10gbit capabilities of my network
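For anyone who wants to repeat these measurements in a scripted way, here is a minimal sketch, assuming `iperf3` is installed on the client and the target host is already running `iperf3 -s`. It uses iperf3's JSON output (`-J`) and reads the receiver-side rate of a TCP test. The function names are made up for illustration and are not from this thread.

```python
import json
import subprocess


def gbits_from_iperf3_json(raw: str) -> float:
    """Return the receiver-side TCP throughput in Gbit/s from `iperf3 -J` output."""
    report = json.loads(raw)
    # iperf3 reports failures (e.g. connection refused) in a top-level "error" key.
    if "error" in report:
        raise RuntimeError(report["error"])
    return report["end"]["sum_received"]["bits_per_second"] / 1e9


def run_iperf3(server: str, seconds: int = 10) -> float:
    """Run a TCP test against `server` and return the measured Gbit/s."""
    result = subprocess.run(
        ["iperf3", "-c", server, "-t", str(seconds), "-J"],
        capture_output=True,
        text=True,
        check=False,  # on failure iperf3 still prints JSON with an "error" key
    )
    return gbits_from_iperf3_json(result.stdout)
```

Calling `run_iperf3("192.0.2.10")` (a placeholder address) would return a single number per run, which makes it easier to compare the same test before and after a settings change.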

iperf3 testing to pfsense

windows PC -> pfsense ~3gbit

Debian VM -> pfsense ~3gbit

I saw some posts on the TrueNAS forum saying that enabling LRO improved performance.

Iperf3 testing with LRO ENABLED on pfsense:

windows PC -> pfsense 10gbit

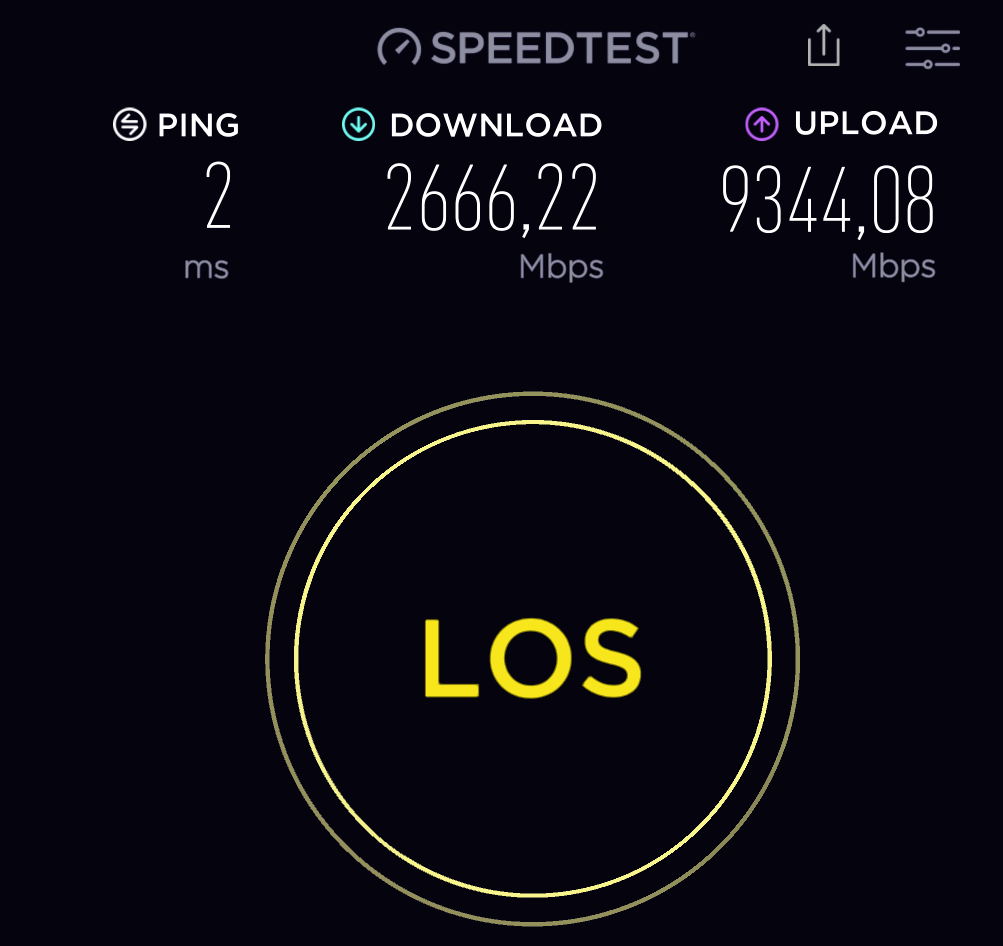

Debian VM -> pfsense >10gbit

However, the conventional DSL speed test dropped to an upload of about 5mbit, and the download speed was similar.

so clearly "LRO enabled" increased iperf results but decreased dsl speeds by a lot!
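When toggling LRO back and forth like this, it helps to confirm what the guest actually has enabled. Below is a minimal sketch, assuming FreeBSD-style `ifconfig` output where enabled capabilities appear on an `options=...<...>` line. The interface name `vmx0` used in the note afterwards is an assumption, and the helper names are hypothetical.

```python
import re
import subprocess


def interface_capabilities(ifconfig_output: str) -> set[str]:
    """Extract the enabled flags from the `options=<hex><A,B,C>` line."""
    match = re.search(
        r"^\s*options=[0-9a-fA-F]+<([^>]*)>", ifconfig_output, re.MULTILINE
    )
    if match is None:
        return set()
    return {flag for flag in match.group(1).split(",") if flag}


def lro_enabled(interface: str) -> bool:
    """Run `ifconfig <interface>` locally and report whether LRO is listed."""
    output = subprocess.run(
        ["ifconfig", interface], capture_output=True, text=True, check=True
    ).stdout
    return "LRO" in interface_capabilities(output)
```

`lro_enabled("vmx0")` has to run on the firewall itself. If Python is not available there, the pasted output of `ifconfig` can be fed to `interface_capabilities` on another machine.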

what can I do to have 10gbit iperf3 speeds and dsl speeds?

ps: 10gbit dsl speed is verified working

WAN NIC intel x520

LAN NIC Mellanox Connectx-2

LRO verified enabled in ESXi for WAN and LAN

-

Is no one here running pfSense virtualized on ESXi with 10gbit working who can leave a comment?

-

@pooperman

To test pfSense performance you have to measure the throughput through it, not to or from it.

-

@patch thanks for your post.

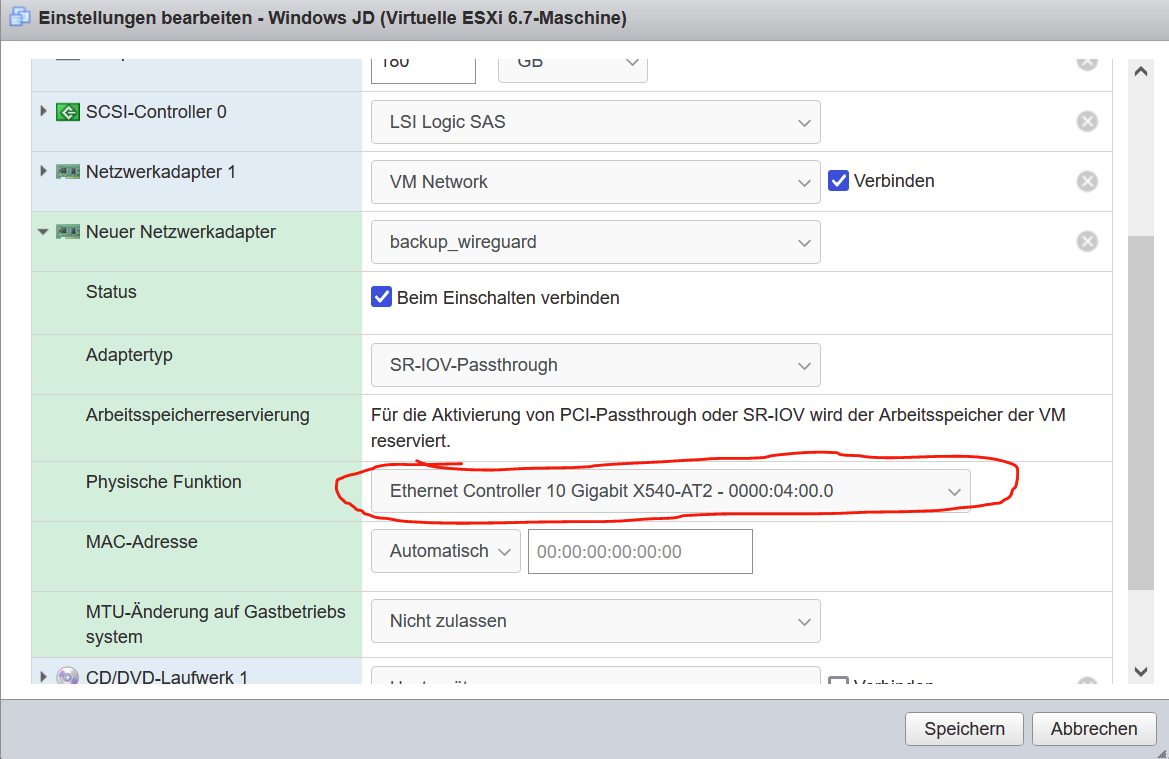

this is from windows pc via mellanox connectx-2 to esxi -> vmxnet3 driver -> pfsense -> SR-IOV WAN NIC -> Modem -> Internet

If I put the WAN card in my desktop, I get the same upload and download speeds of close to 10gbit.

If I test Windows to a Linux VM via iperf3, I get the expected speeds of 10gbit.

It seems there is some kind of issue between FreeBSD-based OSes and the VMXNET3 driver, since TrueNAS has the same low speeds.

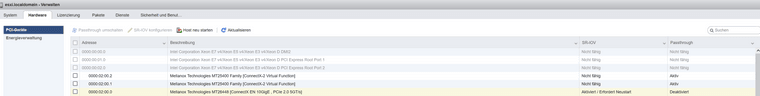

Unfortunately the Mellanox ConnectX-2 does not officially support SR-IOV, so I cannot pass the LAN NIC through.

any ideas?

-

@pooperman I will be making an attempt to virtualize pfsense on similar platform over the next week or so. My platform is HPE C7000 BladeCenter with Bl460c g7 running esxi 6 / vsphere 6.7 vcsa. Also with mellanox connectx2 via SR-IOV passthru to hp virtual connect 10/10D port aggregation switch. I will let you know about the caveats I run into and update on my progress as I go along.

-

@pooperman you mention connectx2 cannot passthru but you sure can isolate it in its own vlan and static route it. Can you share your hardware configuration?

-

that would be awesome.

As far as I know, it is possible to modify the firmware of the ConnectX-2 cards to enable SR-IOV, but this was never intended by Mellanox.

So I did that and switched on SR-IOV in ESXi, but a message asking for a reboot appeared. I rebooted the server, and after the reboot the exact same message was there.

in case you figure out a way to enable it, it might be the solution to 10gbit

HW conf mellanox connectx-2 MNPH-29D-XTR, FW: 2.9.1200

.ini file:

;; Generated automatically by iniprep tool on Mon May 07 15:39:40 IDT 2012 from ./b0_hawk_gen2_464.prs
;; PRS FILE FOR Hawk
;; $Id: b0_hawk_gen2_464.prs,v 1.7.2.3 2012-04-24 12:43:10 ofirm Exp $
[PS_INFO]
Name = 81Y9992
Description = Mellanox ConnectX-2 EN Dual-port 10GbE PCI-E 2.0 Adapter
[ADAPTER]
PSID = IBM0FC0000010
pcie_gen2_speed_supported = true
silicon_rev=0xb0
adapter_dev_id = 0x6750
;;;;; {gpio_mode1, gpio_mode0} {DataOut=0, DataOut=1}
;;;;; 0 = Input PAD
;;;;; 1 = {0,1} Normal Output PAD
;;;;; 2 = {0,Z} 0-pull down the PAD, 1-float
;;;;; 3 = {Z,1} 0-float, 1-pull up the pad
;;;;; Under [ADAPTER] section
;;;;; Integer parameter. Values range : 0x0 - 0xffffffff.
gpio_mode1 = 0x80010
gpio_mode0 = 0x0b160bef
gpio_default_val = 0x000e031f
receiver_detect_time = 0x1e
[HCA]
hca_header_device_id = 0x6750
hca_header_subsystem_id = 0x0019
eth_xfi_en = true
mdio_en_port1 = 0
num_pfs = 1
total_vfs = 64
sriov_en = true
[IB]
gen_guids_from_mac = true
port1_802_3ap_kx4_ability = false
port2_802_3ap_kx4_ability = false
phy_type_port1 = XFI
phy_type_port2 = XFI
new_gpio_scheme_en = true
read_cable_params_port1_en = true
read_cable_params_port2_en = true
eth_tx_lane_polarity_port1 = 0x0
eth_rx_lane_polarity_port1 = 0x0
eth_tx_lane_polarity_port2 = 0x0
eth_rx_lane_polarity_port2 = 0x0
eth_tx_lane_reversal_port1 = off
eth_tx_lane_reversal_port2 = off
eth_rx_lane_reversal_port1 = off
eth_rx_lane_reversal_port2 = off
;;;;; SerDes static parameters for FixedLinkSpeed
;;;;; Under [IB] section
port1_sd0_muxmain_qdr = 0x1f
port2_sd0_muxmain_qdr = 0x1f
port1_sd1_muxmain_qdr = 0x1f
port2_sd1_muxmain_qdr = 0x1f
port1_sd2_muxmain_qdr = 0x1f
port2_sd2_muxmain_qdr = 0x1f
port1_sd3_muxmain_qdr = 0x1f
port2_sd3_muxmain_qdr = 0x1f
port1_sd0_ob_preemp_pre_qdr = 0x0
port2_sd0_ob_preemp_pre_qdr = 0x0
port1_sd1_ob_preemp_pre_qdr = 0x0
port2_sd1_ob_preemp_pre_qdr = 0x0
port1_sd2_ob_preemp_pre_qdr = 0x0
port2_sd2_ob_preemp_pre_qdr = 0x0
port1_sd3_ob_preemp_pre_qdr = 0x0
port2_sd3_ob_preemp_pre_qdr = 0x0
port1_sd0_ob_preemp_post_qdr = 0x2
port2_sd0_ob_preemp_post_qdr = 0x2
port1_sd1_ob_preemp_post_qdr = 0x2
port2_sd1_ob_preemp_post_qdr = 0x2
port1_sd2_ob_preemp_post_qdr = 0x2
port2_sd2_ob_preemp_post_qdr = 0x2
port1_sd3_ob_preemp_post_qdr = 0x2
port2_sd3_ob_preemp_post_qdr = 0x2
port1_sd0_ob_preemp_main_qdr = 0x10
port2_sd0_ob_preemp_main_qdr = 0x10
port1_sd1_ob_preemp_main_qdr = 0x10
port2_sd1_ob_preemp_main_qdr = 0x10
port1_sd2_ob_preemp_main_qdr = 0x10
port2_sd2_ob_preemp_main_qdr = 0x10
port1_sd3_ob_preemp_main_qdr = 0x10
port2_sd3_ob_preemp_main_qdr = 0x10
port1_sd0_ob_preemp_msb_qdr = 0x0
port2_sd0_ob_preemp_msb_qdr = 0x0
port1_sd1_ob_preemp_msb_qdr = 0x0
port2_sd1_ob_preemp_msb_qdr = 0x0
port1_sd2_ob_preemp_msb_qdr = 0x0
port2_sd2_ob_preemp_msb_qdr = 0x0
port1_sd3_ob_preemp_msb_qdr = 0x0
port2_sd3_ob_preemp_msb_qdr = 0x0
center_mix90phase = true
ext_phy_board_port1 = HAWK3
ext_phy_board_port2 = HAWK3
;;;;; External Phy: ignore mellanox OUI checking.
;;;;; Under [IB] section
;;;;; Integer parameter. Values range : 0x0 - 0x1.
ignore_mellanox_oui = 0x1
;;;;; External Phy check GPIOs values for the 4 configurable GPIOs per port.
;;;;; every GPIO has 2 bits that can get the values "00", "01", "11" - dont check.
;;;;; Under [IB] section
;;;;; Integer parameter. Values range : 0x0 - 0xff.
ext_phy_check_value_port1 = 0xff
ext_phy_check_value_port2 = 0xff
[PLL]
lbist_en = 0
lbist_shift_freq = 3
pll_stabilize = 0x13
flash_div = 0x3
lbist_array_bypass = 1
lbist_pat_cnt_lsb = 0x2
core_f = 44
core_r = 27
ddr_6_db_preemp_pre = 0x4
ddr_6_db_preemp_main = 0x7
ddr_6_db_preemp_post = 0x0
ddr_3_dot_5_db_preemp_pre = 0x2
ddr_3_dot_5_db_preemp_main = 0x7
ddr_3_dot_5_db_preemp_post = 0x0
[FW]

server spec:

Xeon E5-1620v4 3,5GHz 2011-3

Supermicro X10SRA-F

4x 16GB Samsung DDR4-2133 reg. ECC RAM

ESXi:

6.7.0 Update 3 (Build 19997733)

After enabling SR-IOV (which did not work), I updated ESXi from pre-U1 up to the latest patch to see if anything changed. Nothing changed: from pre-U1 to post-U3, SR-IOV does not seem to be supported, as described above.

cannot select the mellanox card
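Before burning a modified firmware image, it can be worth checking that the SR-IOV keys in the .ini really say what was intended. Here is a minimal sketch using Python's standard `configparser`, assuming the .ini is saved with one key per line (as the generating tool writes it) and that the relevant keys live in the `[HCA]` section, as in the file posted above. The function name is hypothetical.

```python
import configparser


def sriov_settings(ini_text: str) -> dict:
    """Return the SR-IOV related keys from the [HCA] section of a firmware .ini."""
    # Lines starting with ';' (including ';;' and ';;;;;') are treated as comments.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(ini_text)
    hca = parser["HCA"]
    return {
        "sriov_en": hca.getboolean("sriov_en", fallback=False),
        "num_pfs": hca.getint("num_pfs", fallback=0),
        "total_vfs": hca.getint("total_vfs", fallback=0),
    }
```

This only confirms what the .ini requests. It says nothing about whether the ConnectX-2 firmware or the ESXi driver will honor it, which is the open problem here.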