pfSense on Proxmox - High RAM Usage

-

@bearhntr pfSense uses most of the RAM you give it, I assume for RAM buffers, which means Proxmox cannot then use it. Mine does the same. You probably don't want your router to be using swap, so just give the pfSense VM what it actually needs and can keep. 4GB is probably plenty.

-

@bearhntr

Oh, I have to correct this:

pfSense takes all the RAM you provide to the VM, as long as you don't have the KVM balloon driver loaded in it.

It's not the guest-agent!

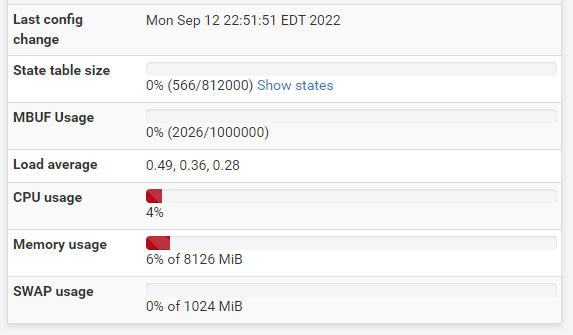

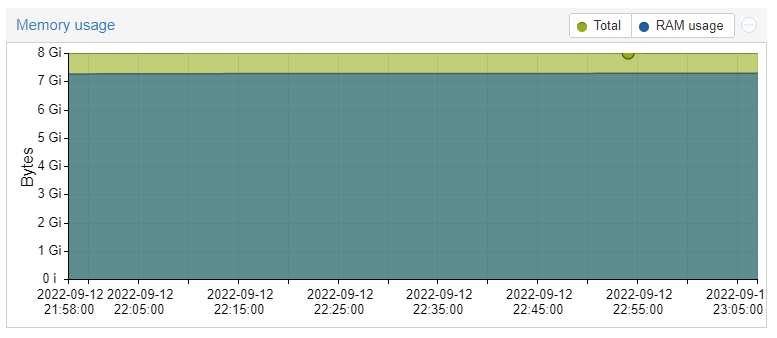

However, I've never used it on FreeBSD. But as your screenshot shows, only 6% of the 8 GB (~500 MB) is really in use at this time. So you may reduce the VM's RAM in Proxmox.

My home installation on KVM is happy with only 2 GB. You can find instructions on how to enable ballooning in the FreeBSD Manual Pages.
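For anyone who wants to check the "6% of 8 GB" figure from the shell rather than the dashboard, here is a rough sketch. The function name `used_mib` is made up for illustration, and counting active plus wired pages as "in use" is an assumption; the dashboard may calculate its percentage differently. The sysctl names in the comment are the standard FreeBSD ones.

```shell
#!/bin/sh
# used_mib PAGESIZE ACTIVE_PAGES WIRED_PAGES
# Prints memory "in use" in MiB, counting active + wired pages (an assumption).
used_mib() {
    echo $(( ($2 + $3) * $1 / 1048576 ))
}

# On the pfSense shell the inputs could come from sysctl, for example:
#   used_mib "$(sysctl -n hw.pagesize)" \
#            "$(sysctl -n vm.stats.vm.v_active_count)" \
#            "$(sysctl -n vm.stats.vm.v_wire_count)"

# Sanity check of the estimate above: 6% of 8 GB (8192 MiB) prints 491
echo $(( 8192 * 6 / 100 ))
```

The last line just confirms that 6% of 8 GB lands a little under 500 MB.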

-

Thanks -- When I had this installed on its own box, it had 8GB RAM. Now that it is a VM, I will try 2GB RAM (the HDD I set was 8GB when I built the VM).

I already have the QEMU Agent installed and running using those same instructions. Are you saying that RAM will be an issue with that installed?

I am kind of nuts about VMs 'sharing'.... lol. When I used to use VMware ESXi, I always wanted the VMTools working.

-

Also -- is there a good TWEAKING Guide for pfSense? I found one here:

Hardware Tuning and Troubleshooting

but not really a help for me -- home user. Seemed to be more for Enterprise.

-

@bearhntr

If you want to load the balloon driver at boot time, put the line shown in the quoted page into the loader.conf.local instead!

If it doesn't exist already, create it.

However, this requires that the driver is already inside the kernel. I don't know if it is in the pfSense kernel.
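A small sketch of how the line could be added without duplicating it on repeated runs. The helper name `ensure_line` is hypothetical; the `virtio_balloon_load="YES"` line is the one given in the FreeBSD virtio_balloon manual page, and `/boot/loader.conf.local` is assumed to be the file's location on pfSense.

```shell
#!/bin/sh
# ensure_line FILE LINE: append LINE to FILE unless an identical line exists.
ensure_line() {
    file=$1
    line=$2
    # Create the file if it does not exist yet.
    [ -f "$file" ] || : > "$file"
    # -x matches whole lines, -F treats the pattern as a fixed string.
    grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Example call on pfSense (assumed path, run as root):
#   ensure_line /boot/loader.conf.local 'virtio_balloon_load="YES"'
```

Running it twice leaves a single copy of the line, so it is safe to repeat after an upgrade.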

-

@bearhntr

The virtio-balloon driver enables the operating system inside the VM to communicate with the hypervisor regarding memory usage. So the VM can give back released memory to the host and request more from the host if needed. This requires that you configure the VM accordingly in Proxmox and state the max and min memory.
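On the Proxmox side, the max and min memory can be set in the GUI or with `qm set`. A minimal sketch follows; the VM ID and the sizes are hypothetical placeholders, and the script only prints the command so it can be reviewed before being run on the host.

```shell
#!/bin/sh
# Hypothetical values: VM ID 100, 4096 MiB maximum, 1024 MiB minimum.
VMID=100
MAX_MIB=4096
MIN_MIB=1024

# balloon_cmd VMID MAX_MIB MIN_MIB: print the qm command instead of running it.
balloon_cmd() {
    echo "qm set $1 --memory $2 --balloon $3"
}

balloon_cmd "$VMID" "$MAX_MIB" "$MIN_MIB"
```

Here `--memory` is the maximum and `--balloon` is the minimum the VM can be shrunk to; setting `--balloon 0` disables the balloon device.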

-

Ahh..... I went back through the thread and see the 'Ballooning' link.

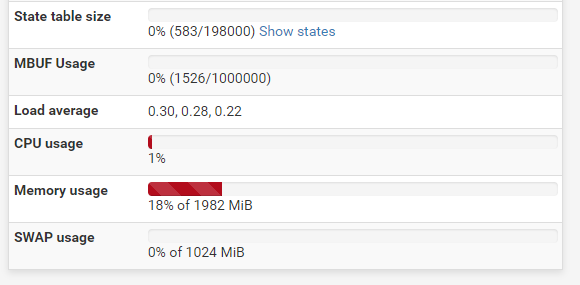

I will go through this. I shut down the VM, adjusted the RAM to 2GB, and restarted. Now seeing this:

-

@bearhntr

If you only provide 2 GB to pfSense, ballooning wouldn't make much sense, I think. Ballooning is meaningful if you're running many VMs on the host that use ballooning and have overcommitted memory. Then the hypervisor can balance the memory between them to get good overall performance.
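To make "overcommitted" concrete: it means the maximum memory of all VMs added together exceeds the host's physical RAM. A quick sketch of that check, with hypothetical helper names and illustrative figures (a 16 GiB host with two VMs capped at 2 GiB and 8 GiB):

```shell
#!/bin/sh
# total_mib SIZE...: print the sum of the given memory sizes in MiB.
total_mib() {
    total=0
    for m in "$@"; do
        total=$(( total + m ))
    done
    echo "$total"
}

# is_overcommitted HOST_MIB SIZE...: succeed if the VM maxima exceed host RAM.
is_overcommitted() {
    host=$1
    shift
    [ "$(total_mib "$@")" -gt "$host" ]
}

# Illustrative figures: a 16 GiB host with VMs capped at 2 GiB and 8 GiB.
if is_overcommitted 16384 2048 8192; then
    echo "overcommitted: ballooning can help"
else
    echo "not overcommitted: ballooning gains little"
fi
```

With these numbers the VMs add up to 10240 MiB, well under the host's 16384 MiB, so there is nothing for the hypervisor to rebalance.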

-

I made the changes anyway -- I added the following to the loader.conf.local file:

    virtio_load="YES"
    virtio_pci_load="YES"
    virtio_balloon_load="YES"

and rebooted. No errors that I can see.

    dmesg | grep virt
    kvmclock0: <KVM paravirtual clock> on motherboard
    virtio_pci0: <VirtIO PCI Balloon adapter> port 0xe080-0xe0bf mem 0xfe400000-0xfe403fff irq 11 at device 3.0 on pci0
    vtballoon0: <VirtIO Balloon Adapter> on virtio_pci0
    virtio_pci1: <VirtIO PCI Console adapter> port 0xe0c0-0xe0ff mem 0xfea91000-0xfea91fff,0xfe404000-0xfe407fff irq 11 at device 8.0 on pci0
    vtcon0: <VirtIO Console Adapter> on virtio_pci1
    virtio_pci2: <VirtIO PCI Block adapter> port 0xe000-0xe07f mem 0xfea92000-0xfea92fff,0xfe408000-0xfe40bfff irq 10 at device 10.0 on pci0
    vtblk0: <VirtIO Block Adapter> on virtio_pci2
    virtio_pci3: <VirtIO PCI Network adapter> port 0xe120-0xe13f mem 0xfea93000-0xfea93fff,0xfe40c000-0xfe40ffff irq 10 at device 18.0 on pci0
    vtnet0: <VirtIO Networking Adapter> on virtio_pci3
    virtio_pci4: <VirtIO PCI Network adapter> port 0xe140-0xe15f mem 0xfea94000-0xfea94fff,0xfe410000-0xfe413fff irq 11 at device 19.0 on pci0
    vtnet1: <VirtIO Networking Adapter> on virtio_pci4
    kvmclock0: <KVM paravirtual clock> on motherboard
    virtio_pci0: <VirtIO PCI Balloon adapter> port 0xe080-0xe0bf mem 0xfe400000-0xfe403fff irq 11 at device 3.0 on pci0
    vtballoon0: <VirtIO Balloon Adapter> on virtio_pci0
    virtio_pci1: <VirtIO PCI Console adapter> port 0xe0c0-0xe0ff mem 0xfea91000-0xfea91fff,0xfe404000-0xfe407fff irq 11 at device 8.0 on pci0
    vtcon0: <VirtIO Console Adapter> on virtio_pci1
    virtio_pci2: <VirtIO PCI Block adapter> port 0xe000-0xe07f mem 0xfea92000-0xfea92fff,0xfe408000-0xfe40bfff irq 10 at device 10.0 on pci0
    vtblk0: <VirtIO Block Adapter> on virtio_pci2
    virtio_pci3: <VirtIO PCI Network adapter> port 0xe120-0xe13f mem 0xfea93000-0xfea93fff,0xfe40c000-0xfe40ffff irq 10 at device 18.0 on pci0
    vtnet0: <VirtIO Networking Adapter> on virtio_pci3
    virtio_pci4: <VirtIO PCI Network adapter> port 0xe140-0xe15f mem 0xfea94000-0xfea94fff,0xfe410000-0xfe413fff irq 11 at device 19.0 on pci0
    vtnet1: <VirtIO Networking Adapter> on virtio_pci4

Thank You - much.

-

@bearhntr

Well, it seems the driver is inside the kernel. Now you can increase the max memory in Proxmox and enable ballooning. Then, after boot-up, you should see that the VM releases memory back to the host.
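If you'd rather script the check than read the dmesg output by eye, something like this would do. The helper name `has_balloon` is hypothetical, and the sample line is copied from the output posted above.

```shell
#!/bin/sh
# has_balloon: read boot messages on stdin, succeed if a vtballoon device attached.
has_balloon() {
    grep -q '^vtballoon[0-9]*: '
}

# On pfSense: dmesg | has_balloon && echo "balloon driver attached"
sample='vtballoon0: <VirtIO Balloon Adapter> on virtio_pci0'
if printf '%s\n' "$sample" | has_balloon; then
    echo "balloon driver attached"
fi
```

I believe the FreeBSD driver also exposes `dev.vtballoon.0.desired` and `dev.vtballoon.0.current` (both in pages), which would show whether memory is actually being handed back, but treat that as an assumption and check `sysctl dev.vtballoon` on your own system.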

-

For now, I will leave it at 2GB. I have a new SFF box coming which has 32GB of RAM. Plans are to move all of this over there, and put a few more VMs on it. The box that I am currently using has 16GB RAM and 2 VMs (this one now at 2GB and the other at 8GB).

I may need to increase the RAM as I install packages and re-setup CloudFlare DDNS on here.

I appreciate your and @Patch's input.