Slow pfSense IPsec performance

-

Dear Users,

I have just set up a LAN-to-LAN (site-to-site) IPsec VPN to connect two private subnets at two different sites.

The connection between the endpoints is established correctly, and the workstations on the two private subnets can ping each other.

The two sites are interconnected by a 1 Gb link, and the iperf test built into pfSense (between the WAN addresses of the two pfSense instances) returned the expected values.

Please note that this test doesn't involve the IPsec VPN tunnel.

[ ID] Interval Transfer Bitrate Retr Cwnd

[ 5] 0.00-1.00 sec 101 MBytes 844 Mbits/sec 38 226 KBytes

[ 5] 1.00-2.00 sec 107 MBytes 898 Mbits/sec 17 174 KBytes

[ 5] 2.00-3.00 sec 104 MBytes 870 Mbits/sec 42 227 KBytes

[ 5] 3.00-4.00 sec 103 MBytes 868 Mbits/sec 31 313 KBytes

[ 5] 4.00-5.00 sec 105 MBytes 879 Mbits/sec 14 203 KBytes

[ 5] 5.00-6.00 sec 102 MBytes 854 Mbits/sec 36 254 KBytes

[ 5] 6.00-7.00 sec 104 MBytes 875 Mbits/sec 15 217 KBytes

[ 5] 7.00-8.00 sec 105 MBytes 879 Mbits/sec 50 143 KBytes

[ 5] 8.00-9.00 sec 102 MBytes 856 Mbits/sec 30 227 KBytes

[ 5] 9.00-10.00 sec 107 MBytes 898 Mbits/sec 19 271 KBytes

[ ID] Interval Transfer Bitrate Retr

[ 5] 0.00-10.00 sec 1.02 GBytes 872 Mbits/sec 292 sender

[ 5] 0.00-10.09 sec 1.01 GBytes 864 Mbits/sec receiver

iperf Done.

However, when I run an iperf test between hosts on the two different subnets (i.e. through the VPN tunnel), the bitrate is very low:

iperf2 -c 192.168.201.11

Client connecting to 192.168.201.11, TCP port 5001

TCP window size: 325 KByte (default)

[ 3] local 192.168.202.12 port 43102 connected with 192.168.201.11 port 5001

[ ID] Interval Transfer Bandwidth

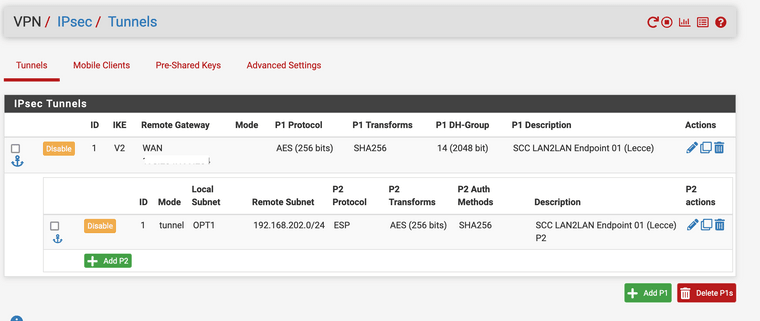

[ 3] 0.0-10.0 sec 375 MBytes 315 Mbits/sec

Both pfSense instances have the same (basic) IPsec configuration:

Phase 1:

AES 128-bit, SHA1, DH group 14 (2048 bits)

Phase 2:

ESP, AES 128-bit, SHA1 hash algorithm, PFS key group 14 (2048 bits)

Both pfSense instances have the following interfaces:

WAN with the public IP

LAN with the private IP for management needs

OPT1 with the private IP to reach the private subnet

WAN interface has MTU = 1500

LAN interface has MTU = 1500

OPT1 interface has MTU = 9000

I read in some other threads that MTU and MSS can be adjusted accordingly, but since I'm a newbie, I didn't understand which interface settings should be changed, how, and why (or whether it is really needed at all).

Could you please help me improve the performance of the IPsec VPN?

Thank you in advance,

Mauro

-

Yes, packet fragmentation will kill performance, and that is entirely possible if one of the subnets covered is using MTU 9000.
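As a rough illustration of why a 9000-byte MTU on one side is a problem: every tunnelled packet gains an outer IP header plus ESP overhead, so only part of the 1500-byte WAN MTU is left for the inner packet. The sketch below is a hypothetical helper (not pfSense code) that estimates the largest TCP MSS whose packets still fit in one outer packet. It assumes IPv4 and TCP headers without options, ESP tunnel mode, HMAC-SHA1-96 (12-byte ICV) with AES-CBC, a 16-byte ICV with AES-GCM, and optional 8-byte NAT-T UDP encapsulation.

```python
# Hypothetical helper (not pfSense code): estimate the largest TCP MSS whose
# packets still fit in one outer packet after ESP tunnel-mode encapsulation.
# Assumptions: IPv4 inner/outer headers without options, TCP without options,
# HMAC-SHA1-96 (12-byte ICV) for AES-CBC, 16-byte ICV for AES-GCM.

OUTER_IP_HEADER = 20
ESP_HEADER = 8        # SPI (4) + sequence number (4)
ESP_TRAILER = 2       # pad length (1) + next header (1)
NAT_T_UDP_HEADER = 8  # only when NAT-T (UDP 4500) encapsulation is used
INNER_IP_TCP = 40     # inner IPv4 header (20) + TCP header (20)

# cipher name -> (IV length, alignment of payload+padding+trailer, ICV length)
CIPHERS = {
    "aes-cbc+sha1": (16, 16, 12),
    "aes-gcm-128": (8, 4, 16),
}


def max_tunnel_mss(outer_mtu: int, cipher: str, nat_t: bool = False) -> int:
    iv_len, align, icv_len = CIPHERS[cipher]
    fixed = OUTER_IP_HEADER + ESP_HEADER + iv_len + icv_len
    if nat_t:
        fixed += NAT_T_UDP_HEADER
    # Space for inner packet + padding + trailer, rounded down to the alignment.
    encrypted_space = (outer_mtu - fixed) // align * align
    largest_inner_packet = encrypted_space - ESP_TRAILER
    return largest_inner_packet - INNER_IP_TCP


for name in CIPHERS:
    for nat_t in (False, True):
        mss = max_tunnel_mss(1500, name, nat_t)
        print(f"{name:13} NAT-T={nat_t!s:5} max MSS = {mss}")
```

Under these assumptions the usable MSS through a 1500-byte WAN is roughly 1382 to 1406 bytes depending on cipher and NAT-T, far below what a host on a 9000-byte MTU segment may try to send.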

However 300Mbps is not all that slow. What hardware are you using at each end?

Using AES-GCM will probably give you more bandwidth.

Steve

-

@stephenw10 thank you for your reply.

Both pfSense instances are running as VMware ESXi virtual machines (each with 4 cores and 4 GB RAM).

300 Mbps is not so bad, but the iperf tests between the pfSense public IPs (WAN interfaces) give me about 900 Mbps. I just tried AES-GCM, but I saw no change in performance.

From your experience, is there something else I can try to do?

I'm using pfSense 2.6 at each end. What value should I set for the OPT1 interface (the one with MTU 9000)?

Thank you in advance,

Mauro

-

I would enable MSS clamping for VPN traffic in System > Advanced > Firewall & NAT and test some values. The default of 1400 should be sufficient to prevent the VPN overhead from causing fragmentation.
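Conceptually, MSS clamping rewrites the Maximum Segment Size option carried in TCP SYN packets that cross the firewall, so neither host offers segments larger than the clamp and the resulting packets still fit after encapsulation. The hypothetical function below shows only that option-rewriting step on a raw TCP options field. It is not how pf implements clamping, and a real implementation would also have to update the TCP checksum, which this sketch does not do.

```python
import struct


def clamp_mss_option(options: bytes, max_mss: int) -> bytes:
    """Return a copy of raw TCP options with any MSS option lowered to max_mss.

    Sketch only: the TCP checksum is NOT recomputed here.
    """
    out = bytearray(options)
    i = 0
    while i < len(out):
        kind = out[i]
        if kind == 0:              # End of Option List
            break
        if kind == 1:              # NOP: single byte, no length field
            i += 1
            continue
        if i + 1 >= len(out):      # truncated option
            break
        length = out[i + 1]
        if length < 2 or i + length > len(out):
            break                  # malformed option, stop parsing
        if kind == 2 and length == 4:   # MSS option: kind 2, length 4, 16-bit value
            (mss,) = struct.unpack_from("!H", out, i + 2)
            if mss > max_mss:
                struct.pack_into("!H", out, i + 2, max_mss)
        i += length
    return bytes(out)


# SYN options: MSS 1460, NOP, NOP, SACK-permitted; 1360 is an arbitrary example clamp.
syn_options = struct.pack("!BBH", 2, 4, 1460) + bytes([1, 1, 4, 2])
clamped = clamp_mss_option(syn_options, 1360)
print(struct.unpack_from("!H", clamped, 2)[0])
```

Only the MSS value is lowered; an MSS already below the clamp, and all other options, are left untouched.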

Did you set the cipher to AES-GCM at P1 and P2? The P2 value there is really what counts for traffic throughput.

Do the virtual CPUs there support AES-NI?

If it's CPU-limited, then that can make a big difference.

Steve

-

Hello @stephenw10, I enabled MSS clamping as you suggested and tested some values, but unfortunately nothing changed.

I also set AES-GCM on P1 and P2, without any significant improvement.

The two pfSense instances have the same configuration, but they run on two different hypervisors: only one of them supports AES-NI (and I can see it listed as available on that pfSense dashboard).

I also tried removing encryption from P2 (no ESP, only AH) to reduce the computing load, but the bitrate doesn't change.

So I tried the following configuration, as suggested in the official pfSense documentation:

But the bitrate is still the same (280 Mbps through the VPN vs 900 Mbps WAN-to-WAN).

I noticed that during the iperf test through the VPN, RAM and CPU usage are very low, so I think the problem is not related to the available resources.

Do you have any other ideas to improve the bitrate?

Thank you,

Mauro

-

Try running a pcap in the tunnel to make sure there is no fragmentation happening. Or try setting the packet size smaller in iperf.

You might try forcing NAT-T in case something in the route is throttling ESP.

Steve

-

@stephenw10 thank you for your reply.

I just tried forcing NAT-T on both ends, with no luck. The bitrate is still the same:

iperf2 -c 192.168.201.11 -m -b 1G

Client connecting to 192.168.201.11, TCP port 5001

TCP window size: 576 KByte (default)

[ 3] local 192.168.202.12 port 33974 connected with 192.168.201.11 port 5001

[ ID] Interval Transfer Bandwidth

[ 3] 0.0-10.0 sec 285 MBytes 239 Mbits/sec

[ 3] MSS size 1388 bytes (MTU 1428 bytes, unknown interface)

Since I'm a newbie and still learning, could you please show me how to "run a pcap in the tunnel to make sure there is no fragmentation happening"?

I also tried setting a smaller packet size in iperf (using the -M option, which I hope is the right one), but I get a warning:

iperf2 -c 192.168.201.11 -m -b 1G -M 1200

WARNING: attempt to set TCP maximum segment size to 1200, but got 536

Client connecting to 192.168.201.11, TCP port 5001

TCP window size: 576 KByte (default)

[ 3] local 192.168.202.12 port 34044 connected with 192.168.201.11 port 5001

[ ID] Interval Transfer Bandwidth

[ 3] 0.0-10.0 sec 281 MBytes 235 Mbits/sec

[ 3] MSS size 1188 bytes (MTU 1228 bytes, unknown interface)

Could you please help me? I'm sorry to bother you so much.

-

Running a pcap can be done in the GUI:

https://docs.netgate.com/pfsense/en/latest/diagnostics/packetcapture/webgui.html

Just grab some traffic on the IPsec interface and you should see the packet size. I'd start with 1000 packets.
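Once the capture is downloaded, packet sizes and any IP fragments can also be checked offline. The sketch below is a hypothetical helper using only the Python standard library. It assumes a classic libpcap file (not pcapng) whose link type is Ethernet or raw IP; a capture taken on pfSense's IPsec interface may use a different link-layer header, in which case the header offset has to be adjusted. A packet counts as a fragment when the More Fragments flag or a non-zero fragment offset is set.

```python
import struct
from collections import Counter

LINKTYPE_ETHERNET = 1
LINKTYPE_RAW = 101  # packets start directly with the IP header


def summarize_pcap_bytes(data: bytes) -> dict:
    """Count IPv4 packets, fragments and packet sizes in a classic pcap capture."""
    magic = data[:4]
    if magic == b"\xd4\xc3\xb2\xa1":
        endian = "<"
    elif magic == b"\xa1\xb2\xc3\xd4":
        endian = ">"
    else:
        raise ValueError("not a classic (microsecond) pcap file")
    (linktype,) = struct.unpack_from(endian + "I", data, 20)
    if linktype not in (LINKTYPE_ETHERNET, LINKTYPE_RAW):
        raise ValueError(f"unsupported link type {linktype}")

    sizes = Counter()
    fragments = 0
    offset = 24  # size of the pcap global header
    while offset + 16 <= len(data):
        _, _, incl_len, _ = struct.unpack_from(endian + "IIII", data, offset)
        offset += 16
        packet = data[offset:offset + incl_len]
        offset += incl_len
        if linktype == LINKTYPE_ETHERNET:
            if packet[12:14] != b"\x08\x00":  # skip non-IPv4 (and VLAN-tagged) frames
                continue
            packet = packet[14:]
        if len(packet) < 20 or packet[0] >> 4 != 4:
            continue
        # Use the IP total length field, so a short snap length does not hide big packets.
        (total_length,) = struct.unpack_from("!H", packet, 2)
        (flags_fragment,) = struct.unpack_from("!H", packet, 6)
        more_fragments = flags_fragment & 0x2000
        fragment_offset = flags_fragment & 0x1FFF
        sizes[total_length] += 1
        if more_fragments or fragment_offset:
            fragments += 1

    return {
        "ipv4_packets": sum(sizes.values()),
        "fragments": fragments,
        "largest": max(sizes, default=0),
        "most_common_sizes": sizes.most_common(3),
    }


def summarize_pcap(path: str) -> dict:
    with open(path, "rb") as f:
        return summarize_pcap_bytes(f.read())
```

Usage would be something like `print(summarize_pcap("ipsec_capture.pcap"))`, where the file name is only an example. A non-zero `fragments` count would point to the fragmentation problem discussed above.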

@mauro-tridici said in slow pfsense IPSec performance:

attempt to set TCP maximum segment size to 1200, but got 536

If it's really being limited to 536B packets that might explain it.

-

@stephenw10 I captured the traffic on the IPsec interface during the iperf2 test and saved the pcap file. Could you please check it, if you have the chance?

"If it's really being limited to 536B packets that might explain it."

Is it an iperf2 problem? We didn't have (or set) any limitation :(

Thanks in advance.

-

That looks OK; it's using 1452B packets as expected.

Hmm, you tested directly outside the tunnel and were able to see much higher rates?

What's the latency between the sites inside and outside the tunnel?
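Latency matters because a single TCP stream can send at most one window of data per round trip, so throughput is capped at roughly window / RTT, and the window must cover the bandwidth-delay product to fill the link. The sketch below is plain arithmetic; the 1.1 ms RTT is an assumed example value, and the 325 KByte window is the iperf2 default shown earlier in the thread.

```python
def bdp_bytes(link_mbps: float, rtt_ms: float) -> float:
    """Bandwidth-delay product: bytes that must be in flight to fill the link."""
    return link_mbps * 1e6 / 8 * rtt_ms / 1000


def window_limited_mbps(window_bytes: float, rtt_ms: float) -> float:
    """Upper bound for one TCP stream that sends one window per round trip."""
    return window_bytes * 8 / (rtt_ms / 1000) / 1e6


# Example figures: 1 Gb/s link, assumed ~1.1 ms RTT, iperf2's 325 KByte window.
print(f"BDP: {bdp_bytes(1000, 1.1) / 1024:.0f} KiB")
print(f"window ceiling: {window_limited_mbps(325 * 1024, 1.1):.0f} Mbit/s")
```

With a round trip around 1 ms, a 325 KByte window allows well over 1 Gbit/s, so if the RTT inside the tunnel is similar, the TCP window alone should not explain a ~300 Mbps result.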

Steve

-

Try using MTU 1472 and MSS 1432 on all interfaces.

And test again.
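The two numbers in this suggestion are tied together by header sizes: for IPv4 and TCP without options, MSS = MTU - 40, so MSS 1432 corresponds to MTU 1472. 1472 is also the largest ICMP echo payload that fits in a 1500-byte MTU (1500 - 20 - 8), the usual size for probing a path with ping and the don't-fragment bit set. These relations cover only the IP and TCP/ICMP headers, not the IPsec encapsulation overhead. A minimal sketch of the arithmetic (helper names are hypothetical):

```python
IPV4_HEADER = 20  # without options
TCP_HEADER = 20   # without options
ICMP_HEADER = 8


def mss_for_mtu(mtu: int) -> int:
    """Largest TCP payload per segment for a given IPv4 MTU."""
    return mtu - IPV4_HEADER - TCP_HEADER


def max_ping_payload(mtu: int) -> int:
    """Largest ICMP echo payload that fits in one IPv4 packet of this MTU."""
    return mtu - IPV4_HEADER - ICMP_HEADER


print(mss_for_mtu(1472))       # MSS that pairs with MTU 1472
print(max_ping_payload(1500))  # common probe size for a 1500-byte path
```

This prints 1432 and 1472.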

-

@stephenw10 thanks for your very professional support.

Yes, outside the tunnel I was able to reach much higher rates (using the pfSense built-in iperf tool to test WAN-to-WAN connectivity). It is the same test I posted at the beginning of the thread; the summary was:

[ ID] Interval Transfer Bitrate Retr

[ 5] 0.00-10.00 sec 1.02 GBytes 872 Mbits/sec 292 sender

[ 5] 0.00-10.09 sec 1.01 GBytes 864 Mbits/sec receiver

iperf Done.

This is the latency between the two WAN interfaces of pfsense instances:

PING DEST_IP from SRC_IP: 56 data bytes

64 bytes from DEST_IP: icmp_seq=0 ttl=57 time=1.069 ms

64 bytes from DEST_IP: icmp_seq=1 ttl=57 time=1.194 ms

64 bytes from DEST_IP: icmp_seq=2 ttl=57 time=1.046 ms

--- DEST_IP ping statistics ---

3 packets transmitted, 3 packets received, 0.0% packet loss

round-trip min/avg/max/stddev = 1.046/1.103/1.194/0.065 ms

IPsec is a fantastic thing, but I'm struggling to make it work as expected with acceptable performance.

Both pfSense endpoints are connected to the GARR research network with a 1 Gb link.

-

@cool_corona Should I set these values on the OPT1 and WAN interfaces and disable MSS clamping for VPN traffic in System > Advanced > Firewall & NAT?

-

@mauro-tridici I’m guessing the reason is virtualisation.

Virtualisation carries a hefty throughput penalty for things like interrupt-based packet handling in a guest VM. This is due to the context switching between the hypervisor and the guest VM, which happens several times for every packet interrupt that needs to be handled.
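To put the per-packet cost into numbers: at a given throughput and packet size, the packet rate fixes how much time each packet may take if a single core handles the whole stream. The sketch below uses the 1452-byte packets seen in the capture discussed in this thread and the two throughputs being compared; it is plain arithmetic and says nothing about where the time actually goes on this hardware.

```python
def packets_per_second(throughput_mbps: float, packet_bytes: int) -> float:
    """Packet rate needed to carry the given throughput at a fixed packet size."""
    return throughput_mbps * 1e6 / 8 / packet_bytes


def per_packet_budget_us(throughput_mbps: float, packet_bytes: int) -> float:
    """Average time available per packet if one core handles the whole stream."""
    return 1e6 / packets_per_second(throughput_mbps, packet_bytes)


for mbps in (300, 900):
    pps = packets_per_second(mbps, 1452)
    budget = per_packet_budget_us(mbps, 1452)
    print(f"{mbps} Mbit/s at 1452 B: {pps:,.0f} packets/s, {budget:.1f} us per packet")
```

Tripling the throughput cuts the per-packet budget to a third (roughly 39 us versus 13 us here), which is why a fixed per-packet overhead such as repeated context switches can cap throughput while overall CPU usage still looks low.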

If the traffic does not scale properly across CPU cores and is “serialised” on a single core, then throughput will be very low, and yet CPU utilisation will also look low, because the time is spent waiting on context switches for interrupt handling rather than actually processing the traffic payload.

-

Setting MSS clamping there applies it to all traffic that matches a defined P2 subnet, on any interface. That should be sufficient.
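A rough model of the selection described here: the clamp is tied to the Phase 2 networks rather than to an interface. Assuming "matches" means that a connection's source or destination address lies in one of those networks, the check can be sketched as below. The /24 prefixes are hypothetical, guessed from the host addresses in this thread, and this is not how pfSense generates its rules.

```python
import ipaddress

# Hypothetical Phase 2 networks: the /24 prefixes are guessed from the host
# addresses in this thread (192.168.201.11 and 192.168.202.12).
P2_NETWORKS = [
    ipaddress.ip_network("192.168.201.0/24"),
    ipaddress.ip_network("192.168.202.0/24"),
]


def mss_clamp_applies(src: str, dst: str) -> bool:
    """True when either endpoint of a connection is inside a P2 network."""
    addresses = (ipaddress.ip_address(src), ipaddress.ip_address(dst))
    return any(addr in net for addr in addresses for net in P2_NETWORKS)


print(mss_clamp_applies("192.168.202.12", "192.168.201.11"))  # tunnel traffic
print(mss_clamp_applies("10.0.0.5", "8.8.8.8"))               # unrelated traffic
```

Because the match is on addresses, the same clamp covers the traffic whether it enters on OPT1, LAN or any other interface.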

1ms between the end points seems unexpectedly low! Is this a test setup with local VMs?

Steve

-

@mauro-tridici Yes. Report back.

-

@keyser Just not true. The performance penalty is minimal compared to bare metal.

-

@cool_corona said in slow pfsense IPSec performance:

@keyser Just not true. The performance penalty is minimal compared to bare metal.

Ehh, no, it depends very much on what hardware you have (and its level of virtualisation assist). If it’s a reasonably new x86-64 CPU above Atom level, then yes, the cost of virtualisation will dwindle. But if we are talking about Atom-level Jxxxx-series CPUs, then those speeds are very much at the limit of the hardware when virtualising.

-

@keyser Nobody uses Atoms for Virtualization.....

-

@stephenw10 thank you for the clarification.

I will try to describe the scenario:

- We have two different VMware ESXi hypervisors (A and B) at two different sites.
- The pfSense endpoint PF_A is deployed on ESXI_A.
- The pfSense endpoint PF_B is deployed on ESX_B.
- PF_A and PF_B are connected between the sites by a 1 Gb network link, and the WAN-to-WAN iperf test is good (about 900 Mbps).
- HOST_1 is a physical server on a LAN behind PF_A.
- HOST_2 is a test virtual machine deployed on ESX_B, on a LAN behind PF_B. HOST_2 and PF_B are on the same hypervisor, so they are only "virtually" connected; there is no LAN cable between them.

When I run iperf2 between HOST_1 and HOST_2, I get only 240 to 290 Mbps.

I hope it helps.

-