Strange Gateway Issues with 2.7.0 development builds

-

I normally run the plus builds but decided to test the 2.7.0 builds until new plus builds become available.

On my Proxmox virtualized system I run multiple policy-routed gateways, two for OpenVPN clients and two for WireGuard, with failovers configured in gateway groups. Whenever I initially boot the system, only one of the OpenVPN gateways comes online. OpenVPN does connect properly, as indicated on the dashboard, but the second gateway remains offline unless I manually restart it. When I do this it does come online, but the other, working OpenVPN gateway, which normally runs at 53ms latency, jumps to over 200ms latency and triggers a failover.

I have changed the gateway monitoring IPs, removed WireGuard, and reconfigured OpenVPN using different destinations, all without success. Yes, I can configure the latency trigger to a higher number, but this shouldn't be necessary considering I'm using the same config without any changes. The late July 2.09 developer build I normally run does not experience this issue at all: all gateways come online and latency is what is expected.

I'm aware that in development changes are constantly being made. I've considered submitting a bug report but I'm not even sure what category to put this in. At a minimum I need all the gateways to start properly at boot. Any insight anyone has on this is appreciated.

-

Are you restarting dpinger or openvpn?

Do the gateways show as off-line or pending?

-

@stephenw10

I am not restarting OpenVPN, so I assume it is dpinger that gets restarted by the Restart Service icon under Status/Gateways in the GUI.

The gateway shows Offline upon boot and remains that way until I manually start the service. Sometimes the first gateway fails to start and the second one starts. Either way, the two gateways never both start at boot.

-

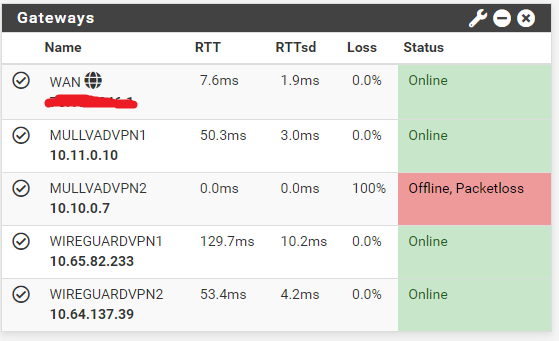

Here is what I get after boot. The second OpenVPN gateway is down; also take note of the latency of "MULVADVPN1".

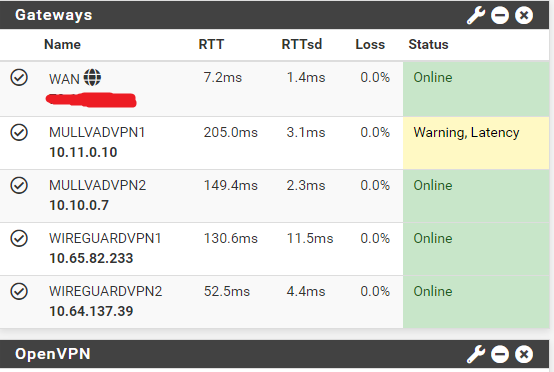

This is after restarting the gateways. The gateway comes online normally but creates latency on the first OpenVPN gateway.

-

All of those VPNs are on WAN I assume?

What IP address are they monitoring? The gateway IP directly?

In the initial situation after boot check for states to the monitoring IP. The gateway monitoring should be opening a state on the VPN2 interface but might be conflicting with some other open state.

Steve

-

@stephenw10

Yes, all the VPNs are on WAN. The monitor IPs were obtained by running a traceroute and selecting an IP a few hops from the actual destination. I have also tested this using public DNS servers as the monitor IPs, with the same results. Searching the states table for the affected monitor IP does show a state opening on the VPN2 interface.

-

I assume the VPN shows as linked though? If you run a pcap can you see any gateway pings actually leaving across it?

Can you see them leaving on any other interface?

Are you using DCO?

Steve

-

@stephenw10

Yes, the VPN(s) appear as linked.

On the interface that shows offline at boot, only ICMP echo requests are recorded. No replies or any other activity. The other interface does show activity as expected. And no, I'm not using DCO. It appears to be more of a gateway or interface issue than an OpenVPN one, in my opinion.

-

Hmm, that's odd.

What happens if you just kill the state on VPN2 without restarting dpinger? Does it open a new state and start working?

This feels like it's opening a state incorrectly when the VPN is not fully established. I sort of expected to see the gateway monitoring pings for it leaving across the wrong interface.

-

@stephenw10 YES, it did in fact come up, and without the latency strangeness I was experiencing before. I rebooted the system and verified these results. There was a bit of a delay between killing the state and the gateway coming up (about 40 seconds), but I believe that's to be expected. So how do I translate this into a bug report so it can be fixed? I know the reporting process. I'm just not sure if I can effectively explain it.

-

Hmm, well it sounds like it did create a bad state somehow, so what I'd do is get the state data from the bad state and from the good state after it comes back up.

At the CLI run:

    pfctl -vvvss

That will dump all the states, including the gateway monitoring state. Compare the output before and after killing it.

Steve
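For anyone following along, the before/after comparison can be narrowed to just the monitoring state. The following is a minimal sketch, not an official tool: `states_for_ip` is a hypothetical helper name, and it assumes that each state in the verbose dump starts on an unindented line with its detail lines indented beneath it, and that a plain substring match on the monitor IP is precise enough.

```shell
#!/bin/sh
# states_for_ip IP: read a verbose pf state dump on stdin and print only the
# states whose first line mentions IP, together with their detail lines.
# Assumption: a state begins on an unindented line; details are indented.
# Caveat: substring match, so 10.0.0.1 would also match 10.0.0.10.
states_for_ip() {
    awk -v ip="$1" '
        /^[^ \t]/ { show = (index($0, ip) > 0) }  # new state: decide whether to show it
        show      { print }                       # print the state and its detail lines
    '
}
```

With the monitor IP from this thread, that would be used as `pfctl -vvss | states_for_ip 141.98.254.71 > /tmp/state_before.txt` while the gateway is offline, the same again into `/tmp/state_after.txt` once it has recovered, and then `diff /tmp/state_before.txt /tmp/state_after.txt`. The file names are arbitrary.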

-

@stephenw10

Thank you! I've submitted a bug report.

-

@stephenw10 FYI, I discovered a workaround for this issue. Enabling the "Do not add Static Routes" option in the Gateway monitoring options under System/Advanced/Miscellaneous allows both gateways to come up properly.

-

Relevant routes:

    Destination        Gateway            Flags     Netif Expire
    default            70.188.246.1       UGS       em0
    10.10.0.0/16       10.10.0.1          UGS       ovpnc2
    10.10.0.1          link#11            UH        ovpnc2
    10.10.0.3          link#11            UHS       lo0
    10.11.0.0/16       10.11.0.1          UGS       ovpnc1
    10.11.0.1          link#10            UH        ovpnc1
    10.11.0.4          link#10            UHS       lo0
    141.98.254.71      10.10.0.3          UHS       ovpnc2
    141.136.106.30     10.11.0.4          UHS       ovpnc1

The commands show the following states:

    all icmp 10.11.0.4:24803 (10.11.0.4:27133) -> 141.136.106.30:24803       0:0
       age 00:02:41, expires in 00:00:09, 313:313 pkts, 9077:9077 bytes, rule 47
       id: 86a05e6300000000 creatorid: a7a94773 gateway: 10.11.0.4
       origif: ovpnc1
    all icmp 10.0.8.1:56301 (10.10.0.3:27587) -> 141.98.254.71:56301       0:0
       age 00:02:41, expires in 00:00:09, 313:0 pkts, 9077:0 bytes, rule 45
       id: 88a05e6300000000 creatorid: a7a94773 gateway: 0.0.0.0
       origif: ovpnc2

... which are created from these rules:

    @45 pass out inet all flags S/SA keep state allow-opts label "let out anything IPv4 from firewall host itself" ridentifier 1000009913
      [ Evaluations: 11668 Packets: 13437 Bytes: 2665431 States: 146 ]
      [ Inserted: uid 0 pid 9864 State Creations: 765 ]
      [ Last Active Time: Sun Oct 30 10:51:37 2022 ]
    @47 pass out route-to (ovpnc1 10.11.0.4) inet from 10.11.0.4 to ! 10.11.0.0/16 flags S/SA keep state allow-opts label "let out anything from firewall host itself" ridentifier 1000010012
      [ Evaluations: 3401 Packets: 16 Bytes: 464 States: 0 ]
      [ Inserted: uid 0 pid 9864 State Creations: 0 ]
      [ Last Active Time: N/A ]

The first rule @45 shows that the traffic is passing via a rule without route-to set which could mean the traffic actually went out a different interface even though origif shows ovpnc1 (due to some pf quirks I don't recall). The second rule @47 is concerning since I'd expect that to be the gateway 10.11.0.1 rather than pfSense's interface. See the warning here: https://docs.netgate.com/pfsense/en/latest/vpn/openvpn/configure-server-tunnel.html#ipv4-ipv6-local-network-s

Try comparing the states between the broken and working conditions.
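One thing that stands out in the states above is the packet counter pair: the working state shows `313:313 pkts`, while the broken one shows `313:0 pkts`. As a rough sketch for spotting such states in a large dump, the hypothetical helper below (the name `one_way_states` is made up here) prints the first line of every state whose second packet counter is zero. It assumes the dump layout shown in this thread, with the counters printed as `N:M pkts` on the state's first or a following line.

```shell
#!/bin/sh
# one_way_states: read a verbose pf state dump on stdin and print the first
# line of each state that has a non-zero first packet counter and a zero
# second packet counter (for example "313:0 pkts").
one_way_states() {
    awk '
        /^[^ \t]/ { header = $0 }                # unindented line starts a new state
        match($0, /[0-9]+:[0-9]+ pkts/) {
            # RLENGTH - 5 drops the trailing " pkts", leaving e.g. "313:0"
            split(substr($0, RSTART, RLENGTH - 5), n, ":")
            if (n[1] > 0 && n[2] == 0) print header
        }
    '
}
```

It would be fed the same way as the dump itself, for example `pfctl -vvss | one_way_states`. A state that has only just been created can also show a zero counter briefly, so a hit is a hint to look closer rather than proof of a bad state.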

-

@marcosm Thank you for your detailed analysis, but I simply don't understand all of this at the same level as you do. I suppose I will wait and see if a developer eventually has the same issue so it will be recognized. Enabling the "Do not add Static Routes" option keeps things working at least. I often hear that it's a "configuration issue" and not an issue with pfSense. How can that be when the configuration never changed? The pfSense code is what is being changed, and this is completely expected in a development environment.

-

OK. At least for now, it would be helpful if you could provide the same logs/info while it's working so it can be compared.

FWIW this is what I have on my working setup after restarting the OpenVPN service:

    all icmp 172.27.114.137:38623 -> 172.27.114.129:38623       0:0
       age 00:51:56, expires in 00:00:10, 6012:6007 pkts, 174348:174203 bytes, rule 143
       id: 28aa696300000000 creatorid: 4da82510 gateway: 0.0.0.0
       origif: ovpnc2
    all icmp 172.17.5.2:38750 -> 172.17.5.1:38750       0:0
       age 00:51:56, expires in 00:00:10, 6013:6007 pkts, 174377:174203 bytes, rule 143
       id: 29aa696300000000 creatorid: 4da82510 gateway: 0.0.0.0
       origif: ovpnc3

    @143 pass out inet all flags S/SA keep state allow-opts label "let out anything IPv4 from firewall host itself" ridentifier 1000016215
      [ Evaluations: 59832 Packets: 171879 Bytes: 57085442 States: 266 ]
      [ Inserted: uid 0 pid 86586 State Creations: 967 ]
      [ Last Active Time: Mon Nov 7 12:35:47 2022 ]

And sometime after reboot:

    all icmp 172.27.114.137:61325 -> 172.27.114.129:61325       0:0
       age 01:24:27, expires in 00:00:09, 9817:9811 pkts, 284693:284519 bytes, rule 140
       id: 0e55696300000000 creatorid: 4da82510 gateway: 0.0.0.0
       origif: ovpnc2
    all icmp 172.17.5.2:61695 -> 172.17.5.1:61695       0:0
       age 01:25:36, expires in 00:00:10, 9949:9940 pkts, 288521:288260 bytes, rule 140
       id: 0f55696300000000 creatorid: 4da82510 gateway: 0.0.0.0
       origif: ovpnc3

    @140 pass out on lo0 inet all flags S/SA keep state label "pass IPv4 loopback" ridentifier 1000016212
      [ Evaluations: 22311 Packets: 0 Bytes: 0 States: 0 ]
      [ Inserted: uid 0 pid 80759 State Creations: 0 ]
      [ Last Active Time: N/A ]

I'm not sure why it used @140 this time but it worked regardless. The differences here are I keep the monitoring IP as the tunnel gateway, and bind the service to localhost. I did test with it bound to the WAN interface itself and that was fine as well.

-

@marcosm Here are the outputs of the previously requested commands while my system is in a working state (with the "Do not add Static Routes" option enabled):

pfctl_vvss.txt netstat_rn4.txt pfctl_vvsr.txt

Could this be some strangeness with my VPN provider?

-

The difference there is that the monitoring traffic is going out of the WAN instead of over the tunnel, hence it won't actually be useful in determining the status of the tunnel. I recommend keeping the monitoring IP as the tunnel gateway.

-

@marcosm I agree, using the gateway static route option doesn't effectively monitor the tunnel, but I have no choice if I want to continue testing; it doesn't work otherwise. Since this issue currently appears to affect only me in the entire pfSense testing community, it will probably be dismissed until the next release, when more people will be exposed to the problem and hopefully it will be addressed.

-

@marcosm It appears that this issue has been around to some degree for the better part of 3 years: https://www.reddit.com/r/PFSENSE/comments/eznfsa/psa_gateway_group_vpn_interfaces_fail_on_reboot/

It's possible dpinger may be starting before the OpenVPN clients can initialize. That's my guess anyway. It's not worth my time to try and submit a bug report on this so I will just post my workaround.

I installed the cron package and created this long, sloppy entry to restart dpinger and the OpenVPN clients when the machine is rebooted. The delays increase my boot time significantly, but it appears to at least allow my VPN clients to connect. I still need to determine if it's routing the client pings out the proper interfaces for gateway monitoring.

    sleep 7 && /usr/local/sbin/pfSsh.php playback svc restart dpinger && sleep 7 && /usr/local/sbin/pfSsh.php playback svc restart openvpn client 1 && sleep 7 && /usr/local/sbin/pfSsh.php playback svc restart openvpn client 2
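If the fixed delays turn out to be the painful part, one possible refinement is to poll for a condition instead of sleeping a fixed 7 seconds. This is an untested sketch under assumptions, not something confirmed in this thread: `wait_for` and `has_inet` are made-up helper names, and treating "the interface has an IPv4 address" as "the client is ready" is a guess that may not hold for every setup.

```shell
#!/bin/sh
# wait_for TIMEOUT CMD...: re-run CMD about once per second until it succeeds
# or roughly TIMEOUT seconds have passed. Returns 0 on success, 1 on timeout.
wait_for() {
    timeout=$1; shift
    elapsed=0
    until "$@" >/dev/null 2>&1; do
        [ "$elapsed" -ge "$timeout" ] && return 1
        sleep 1
        elapsed=$((elapsed + 1))
    done
    return 0
}

# has_inet IFACE: succeeds once IFACE exists and has an IPv4 address assigned.
# Assumption: an address on the tunnel interface is a usable readiness signal.
has_inet() {
    ifconfig "$1" 2>/dev/null | grep -q 'inet '
}
```

Saved as a script and run at boot, the restart could then become something like `wait_for 60 has_inet ovpnc1 && /usr/local/sbin/pfSsh.php playback svc restart dpinger`, where `ovpnc1` is the interface name from the route table earlier in the thread and 60 is an arbitrary upper bound. An address on the interface does not prove traffic is passing through the tunnel, so the gateway status still needs checking afterwards.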