PFSense adding a tonne to the header

-

Hi all,

I have been watching PFSense play havoc with my Teams connectivity over the past few weeks. I use Teams on a VM and have been playing around with the MTU size after watching the VM bomb out altogether during a Teams call.

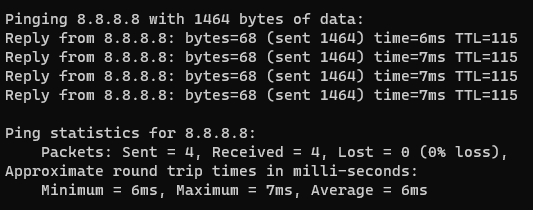

When I bypass the PFSense box the MTU tops out at 1464 - not too bad:

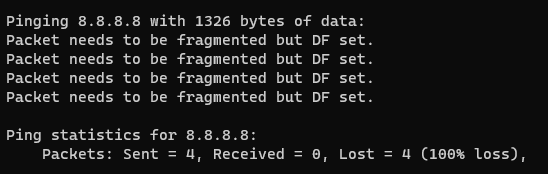

But, when I connect the PFSense box back up, the MTU drops massively:

1324 is about what it tops out at. I don't have VLANs. I am busy trying to look at the headers in Wireshark, but I am a mobile radio engineer: I could quote 4G and 5G messaging protocols to you in my sleep. IP packet headers, not so much.

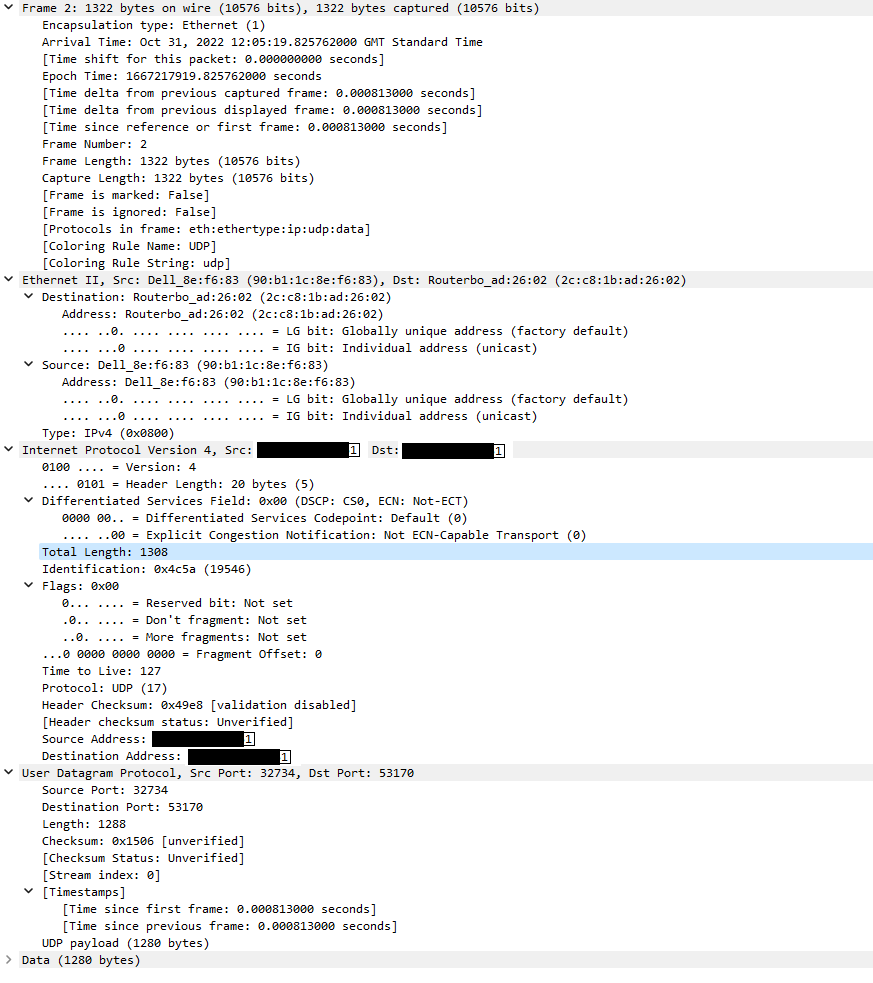

Here's a dump:

Any ideas what's going wrong here?

Thx

Julian

-

Not really, nothing there looks particularly unusual except the reduced packet size.

There must be something odd with one of the interfaces in your pfSense box if it really is only happening through that.

What is the hardware? What's the WAN connection type?

Steve

-

@youcangetholdofjules said in PFSense adding a tonne to the header:

Why are the ping packets so big in both cases?

Should be 32 bytes with Windows 10 and 56 bytes with MacOS.

Unless you've been testing with different size packets.

-

Yeah, I would assume he's specifically testing with large packets to determine the link MTU.
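For anyone wanting to repeat that kind of test without stepping the size by hand, here is a minimal sketch of a binary search over the payload size. The `probe` callback is a hypothetical hook that should return True when a don't-fragment ping of that size gets a reply. The `windows_ping_probe` helper assumes the Windows `ping` flags used elsewhere in this thread (`-f`, `-l`) plus `-n 1`, and assumes a reply line contains `TTL=`; treat both as assumptions, not a tested tool.

```python
import subprocess
from typing import Callable


def find_max_payload(probe: Callable[[int], bool], low: int = 0, high: int = 1472) -> int:
    """Return the largest payload in [low, high] for which probe(size) is True.

    Assumes that if one size gets through, every smaller size does too.
    Returns -1 if even the smallest size fails.
    """
    if not probe(low):
        return -1
    while low < high:
        mid = (low + high + 1) // 2  # round up so the loop always makes progress
        if probe(mid):
            low = mid        # mid fits, search above it
        else:
            high = mid - 1   # mid is too big, search below it
    return low


def windows_ping_probe(host: str) -> Callable[[int], bool]:
    """Build a probe that sends one don't-fragment ping via Windows ping (assumption)."""
    def probe(size: int) -> bool:
        result = subprocess.run(
            ["ping", "-n", "1", "-f", "-l", str(size), host],
            capture_output=True, text=True,
        )
        # Heuristic: a successful echo reply line contains "TTL=".
        return "TTL=" in result.stdout
    return probe


if __name__ == "__main__":
    # Simulated path that passes payloads up to 1324 bytes, as reported above.
    print(find_max_payload(lambda size: size <= 1324))
```

On a real Windows machine the simulated lambda could be swapped for `windows_ping_probe("8.8.8.8")`. A single lost ping will skew the result, so repeating the run is sensible.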

-

@stephenw10 Yep, it's all about the MTU.

At an MTU size of 1324 everything works fine - the VM doesn't drop and Teams runs without issue, so I'll leave it there.

Problem is every time I run this test the max MTU without fragmenting seems to go down a bit!!

I've got 2 connections out from my computer. One goes straight into my Mikrotik CRS109 > Edgerouter 4 > Internet. There is never any issue with it, but that's there for testing and disconnects me from the rest of the house. The other goes through a Draytek router and the PFSense box. The PFSense box has 2 VPNs, if that makes any difference.

So apart from the normal abuse I cop for having a setup that confuses even the good lord above, there are particular reasons for doing things this way.

The reducing MTU size is a bit of a concern to me though - any suggestions welcomed.

Thanks,

Julian

-

@youcangetholdofjules said in PFSense adding a tonne to the header:

> PFSense box the MTU tops out at 1464 - not too bad:

Says who? That is not normal. 1472 would be the normal size for a 1500-byte MTU.

    $ ping 8.8.8.8 -l 1472 -f
    Pinging 8.8.8.8 with 1472 bytes of data:
    Reply from 8.8.8.8: bytes=68 (sent 1472) time=12ms TTL=58
    Reply from 8.8.8.8: bytes=68 (sent 1472) time=11ms TTL=58
    Reply from 8.8.8.8: bytes=68 (sent 1472) time=10ms TTL=58
    Reply from 8.8.8.8: bytes=68 (sent 1472) time=11ms TTL=58

-

@johnpoz Give or take. 1472 is the norm with a 28-byte header (20 bytes IP + 8 bytes ICMP). Compared with my paltry 1324 it's pretty good!!

-

Are both of your connections over the same WAN?

Is either over a VPN? PPPoE? GRE/GIF tunnel?

-

@stephenw10 Only 1 is ever active at a time, and 99% of the time it's the one through the PFSense box. I jury-rigged the other connection up for testing and for when Teams is completely borked and I have important meetings, blah blah blah.

The pipe out of the house is a 900Mbps PPPoE connection, so in terms of raw bandwidth this shouldn't pose any issues. And even if I got the MTU size wrong and it starts fragmenting the packets, there is still enough headroom to accommodate this. It does seem more stable after adjusting the MTU size down, however.

Something else may be at play here though, and I just don't know the nuts and guts of PFSense internals well enough to figure out what's going on.

-

OK so both setups share the same PPPoE WAN? Is the PPPoE modem direct to pfSense?

If not can you ping that other router through pfSense with full size frames?

PPPoE will reduce the MTU slightly unless you have mini-jumbo frames (1508B) upstream. Nothing like the restriction you're seeing though.
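As a rough sanity check on the numbers in this thread, the arithmetic can be sketched as below. It assumes IPv4 with no IP options (20-byte header), an 8-byte ICMP header, and the usual 8 bytes of PPPoE overhead; the function names are just illustrative.

```python
IPV4_HEADER = 20     # bytes, assuming no IP options
ICMP_HEADER = 8      # bytes, echo request header
PPPOE_OVERHEAD = 8   # bytes: 6-byte PPPoE header + 2-byte PPP protocol ID


def max_ping_payload(mtu: int) -> int:
    """Largest unfragmented ping payload for a given path MTU."""
    return mtu - IPV4_HEADER - ICMP_HEADER


def implied_mtu(payload: int) -> int:
    """Path MTU implied by the largest ping payload that got through."""
    return payload + IPV4_HEADER + ICMP_HEADER


print(max_ping_payload(1500))                    # 1472: plain Ethernet
print(max_ping_payload(1500 - PPPOE_OVERHEAD))   # 1464: PPPoE without mini-jumbo frames
print(implied_mtu(1324))                         # 1352: path MTU seen through pfSense
print((1500 - PPPOE_OVERHEAD) - implied_mtu(1324))  # 140: bytes PPPoE alone does not explain
```

So the 1464 seen when bypassing pfSense is exactly what plain PPPoE predicts, while the 1324 result leaves roughly 140 bytes unaccounted for.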

-

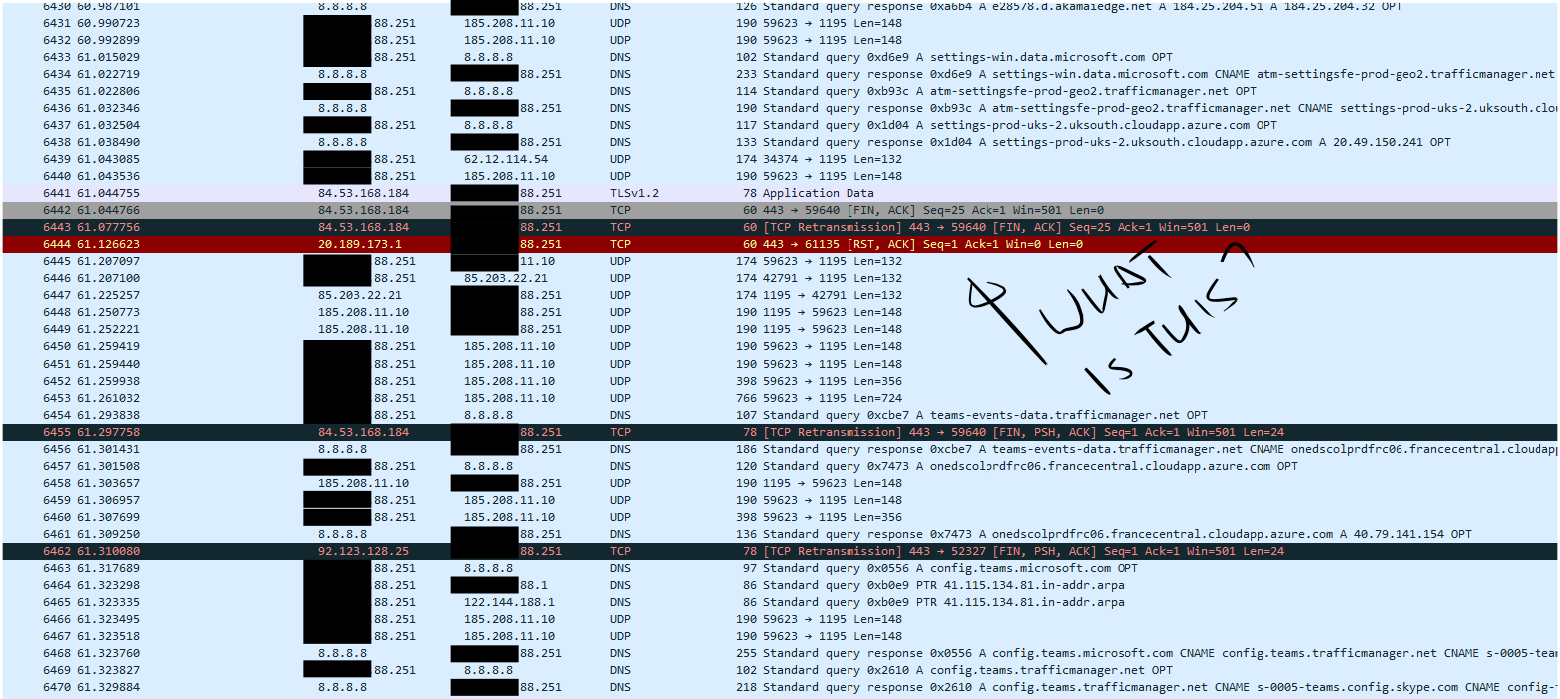

@stephenw10 here's a pcap of what I think is the problem.

I see a few of those pop up from time to time, quite often corresponding to the dropout in my VM.

-

@youcangetholdofjules What, a FIN,ACK and then the RST? That is the IP saying this connection is done. An RST is a hard way of saying "I am done talking to you".

-

Yeah, that isn't necessarily any sort of problem. You can follow the TCP stream to see if it looks correct, assuming you have a big enough pcap.

Nothing but small packets in that small sample. What I'd expect to see with an MTU issue is a bunch of out-of-order TCP packets where Wireshark shows 'previous packet unseen' followed by re-transmissions.
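To make that pattern concrete, here is a deliberately simplified sketch of how segments in one direction of a TCP stream could be labelled from their sequence numbers. It is not Wireshark's actual analysis logic: it ignores sequence wraparound, SACK, and timing, and the label strings are made up for illustration.

```python
from typing import List, Optional, Tuple


def classify_segments(segments: List[Tuple[int, int]]) -> List[str]:
    """Label (seq, payload_len) pairs from one direction of one stream, in capture order."""
    labels: List[str] = []
    next_expected: Optional[int] = None  # highest seq + len seen so far
    for seq, length in segments:
        if next_expected is None or seq == next_expected:
            label = "in-order"
        elif seq > next_expected:
            # Data between next_expected and seq never showed up in the capture.
            label = "gap-before"
        else:
            # Sequence range was already covered by an earlier segment.
            label = "retransmission-or-out-of-order"
        labels.append(label)
        end = seq + length
        if next_expected is None or end > next_expected:
            next_expected = end
    return labels


# A missing segment (seq 201) followed by two later copies of it.
print(classify_segments([(1, 100), (101, 100), (301, 100), (201, 100), (201, 100)]))
```

A run of "gap-before" followed by repeated late copies, especially on full-size segments only, is the sort of shape an MTU problem would be expected to leave.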

Steve

-

what about the quality of the connection?? Latency issues??

-

@stephenw10 Thanks a lot for the response.

I'm forever seeing things in terms of 4G LTE messaging protocols, and I can tell what looks like a problem there for things that end in, say, a dropped call. For TCP-related issues I am not too sure where to start. I can see a lot of retransmission going on in the lead-up to the VM dropping, usually one retransmission about every 0.2 seconds. I'm unsure how significant this is or what timers there may be around this. (I am familiar with the IETF specs as I have been working a bit with them recently on repurposing some of their work for the mobile world. I digress - I'll have a dig in there and see what the parameterisation looks like.) As always, the drop is only the symptom of the real problem, which is contained in the preceding data.

In terms of retrans and OoO packet sequencing - what sort of thresholds are there for a VM session to drop?

-

Something like voice or video streams are almost always UDP because latency is all important. So if you're seeing TCP failures I'd guess it's in the surrounding control protocol and that's probably also why it drops the session rather than just producing bad quality.

It should work fine over a reduced MTU connection. The fact it isn't implies more that something is breaking the PMTU detection somehow. If you actually set the interface MTU to 1324 does the session remain connected?

Can you test through pfSense to something local, like the modem, with full sized frames and see replies?

Steve

-

@stephenw10 said in PFSense adding a tonne to the header:

> Something like voice or video streams are almost always UDP because latency is all important. So if you're seeing TCP failures I'd guess it's in the surrounding control protocol and that's probably also why it drops the session rather than just producing bad quality.

Yep, makes sense.

> It should work fine over a reduced MTU connection. The fact it isn't implies more that something is breaking the PMTU detection somehow. If you actually set the interface MTU to 1324 does the session remain connected?

No - even that is unstable now.

> Can you test through pfSense to something local, like the modem, with full sized frames and see replies?

Yeah, I am about to test that.

-

@cool_corona The messaging I get back from the VM says that the connection is great right up until the moment it drops, so it's a step change there somewhere before it comes right. Sometimes the VM drops altogether, sometimes it just pauses. Equally as irritating when you're trying to hold a meeting.

-

@youcangetholdofjules what if you use teams on a NON VM.. Like just your phone or something, or a tablet..

Trying to understand why anyone would run Teams on a VM in the first place, to be honest. I work from home, and while Teams isn't my first choice, it does get used depending on the meeting. Mostly it's Zoom or Webex.

But curious why would someone do teams on some VM... If having issues with the work vpn, or teams having a fit - just fire it up on the phone, etc.

-

@youcangetholdofjules How do you get the VM IP?

Can you do me a favour and set the MTU to 1472 and MSS to 1432 on your WAN interface and test again?

And set the same values on the vswitch of the WAN/LAN on the virtual server.
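For reference, the relationship between those two values can be sketched as follows, assuming IPv4 and TCP headers of 20 bytes each with no options (the helper name is illustrative):

```python
IPV4_HEADER = 20  # bytes, assuming no IP options
TCP_HEADER = 20   # bytes, assuming no TCP options


def mss_for_mtu(mtu: int) -> int:
    """TCP maximum segment size that fits in one packet of the given MTU."""
    return mtu - IPV4_HEADER - TCP_HEADER


print(mss_for_mtu(1472))  # 1432: the pair suggested above
print(mss_for_mtu(1492))  # 1452: typical for PPPoE
print(mss_for_mtu(1500))  # 1460: plain Ethernet
```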