Squid Proxy - Whitelist domains - Any lists out there?

-

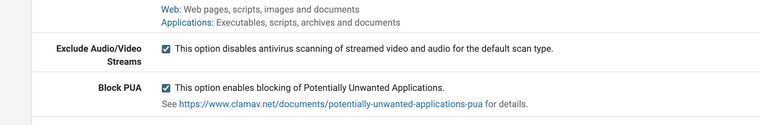

@michmoor I also set them to be ignored in the antivirus settings.

-

@michmoor Probably not what you want to hear, but IMHO doing MITM decryption by bumping by default and then maintaining a big, long exception list for splicing is going to be a never-ending game of whack-a-mole, fixing problems as they inevitably appear.

Whether that is OK for you depends on how many sites you are allowing access to, how many users will be complaining to you when things aren't working, and how much time you have on your hands to debug and maintain it. But you need to think hard about whether you really need man-in-the-middle interception with decryption, or whether interception with just domain name blacklists is adequate.

I think the days of transparent interception and decryption, except in niche use cases, are long gone, even though the functionality is still there in Squid and SquidGuard. There are just too many pitfalls to doing this in today's HTTPS-everywhere world, including certificate pinning, which is becoming more common.

For many scenarios, splicing all and doing domain-name-based yes/no access control (taking advantage of the SNI in the unencrypted part of the TLS handshake), based on categories such as the capitole.fr category list, is the best that can be done without breaking a lot of things.
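To make the splice-all idea concrete, here is a minimal Python sketch of yes/no access control on the SNI hostname. It assumes a domain-list entry matches that domain and all of its subdomains; the category names and domains are hypothetical, and this is not how Squid or SquidGuard are implemented internally.

```python
# Sketch: splice-all + yes/no access control on the TLS SNI hostname.
# Assumption: a list entry "example.com" matches that domain and every
# subdomain. Category names and domains below are hypothetical.
from __future__ import annotations


def normalize(host: str) -> str:
    # Hostnames are case-insensitive; a trailing dot is the DNS root.
    return host.strip().lower().rstrip(".")


def domain_matches(host: str, entry: str) -> bool:
    host, entry = normalize(host), normalize(entry)
    # Exact match, or a true subdomain: "badcasino.example" must not
    # match the entry "casino.example", hence the leading dot.
    return host == entry or host.endswith("." + entry)


def decide(sni: str, blocked: dict[str, set[str]]) -> tuple[str, str | None]:
    """Return ("block", category) on a hit, otherwise ("allow", None)."""
    for category, domains in blocked.items():
        if any(domain_matches(sni, d) for d in domains):
            return "block", category
    return "allow", None


if __name__ == "__main__":
    blocked = {"gambling": {"casino.example"}, "malware": {"bad.example.net"}}
    for sni in ("www.casino.example", "casino.example.org", "cdn.bad.example.net"):
        print(sni, decide(sni, blocked))
```

Note the leading-dot check: matching on a plain suffix would wrongly block unrelated domains that merely end in the same letters.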

The only reasons to do MITM with decryption are to do virus/malware scanning (which is better done on the client), caching (which isn't really needed these days, when internet connections are often faster than disk access times on the cache machine), or keyword-based filtering/blocking. However, I would argue that in the vast majority of cases simply having a good domain-name-based blocklist covers 99% of what you would get out of keyword blocking, without all the false positives.

So I would take a step back and think about what you really need and what you don't really need. You could be making life overly complicated for yourself and your users if you go the route of transparent decryption.

-

I agree with @dbmandrake, trying to decrypt all traffic causes too many issues. My approach is to splice everything (for web filtering) and use MITM only for sites that I want to cache and that don't cause any connection issues, such as Steam, Epic Games and the winget package domains.

-

@dbmandrake It works for me; there are not many websites that need splice only, though it depends on the situation. DNS-based blocking has its own list of problems now that DoH (DNS over HTTPS) is here. With this protocol, even if you have a URL blocker running over DNS, websites that use DoH don't follow it: they send their DNS requests over HTTPS on port 443, yet again masking GDPR and CCPA abuses the firewall needs to stop. Moreover, with QUIC (HTTP/3 running over UDP), the software now needs to understand the SNI there too. Squid caching is working the way I listed above. The firewall catches so many viruses this way with ClamAV that it amazes me. Yes, I do update my list as needed, but once you get some footing on the normal use of websites in your network there is not much else to do; it's more of a custom option.

DNS URL blocking works too; again, nothing is perfect. SquidGuard works great, though it is a pain to set up originally. The Squid version based on HTTP GET requests works, but yes, it does need that SNI/UDP fix to make it shine. The DNS version also works, but with DoH you need to block all the HTTP GET requests to DoH servers to make it work really well too.

One thing is certain: the days of privacy abuse are gone now that we have GDPR and CCPA. Any websites that keep thinking there are no laws are in for a big surprise.

Palo Alto firewalls also use certificate-based interception to inspect traffic for issues, much like Squid.

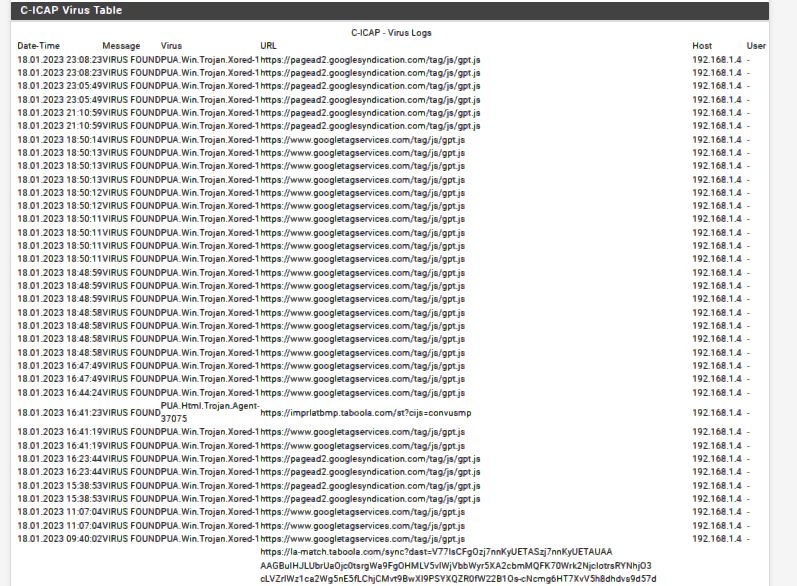

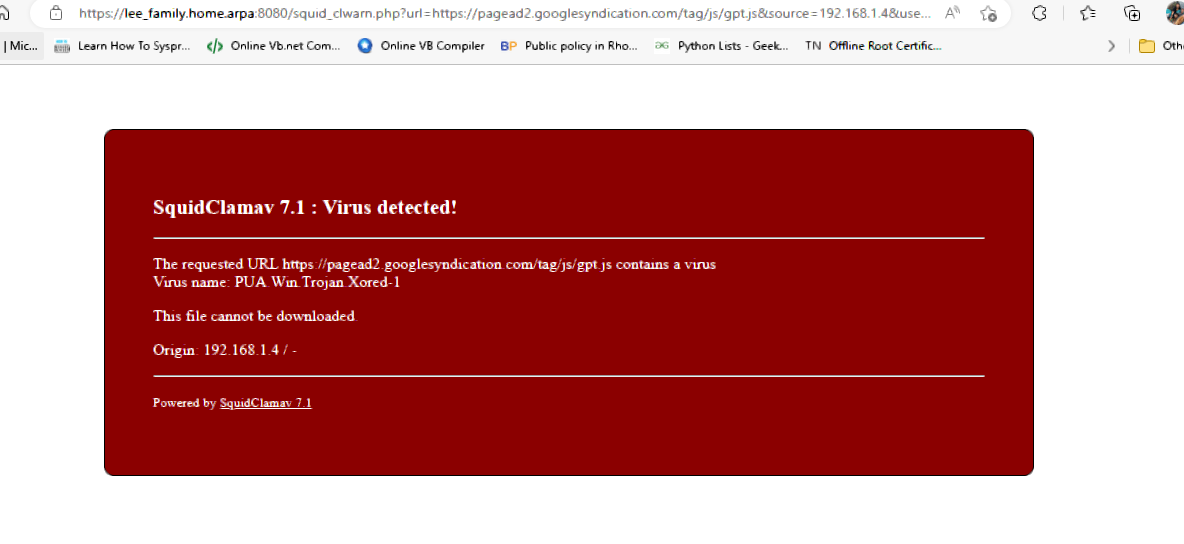

Check out the ClamAV log with this software running:

It will block the bugs. I have had issues with containers and custom BSD-type jails, so I just block Docker and Ruby gems; unless I need the website or those options, they stay disabled. With caching + AV, it will hold that container and scan it as a PUA (potentially unwanted application).

-

@ageekhere I agree, you cannot decrypt it all; you need many high-security sites spliced and passed, depending on your needs. The quickest way for most users is the transparent proxy option, and it does work great.

-

@dbmandrake I've long since given up on doing this specifically on pfSense. Squid is just not a very well-documented or well-supported feature on the platform, which is why I've been running a POC of Cisco Umbrella for some time. MITM is still a requirement and still has the pitfalls you mentioned, but it's surprisingly easier when not using Squid.

@dbmandrake said in Squid Proxy - Whitelist domains - Any lists out there?:

The only reasons to do MITM with decryption are to do virus/malware scanning

Not exactly. Policy enforcement is a great reason: for example, needing to read the full URL string in order to control which specific accounts can log in to Google Workspace.

I am also looking into Sophos Endpoint Security to do MITM there, which to be honest is probably the road I'm going to be pushing internally, as it's much easier to do on assets you control.

-

@michmoor I would also look into Palo Alto systems. I love Netgate and pfSense because you can see every file and every line of code; it is all open source, and the community is so helpful. It really can be customized to any need you have, whether that is my setup, a DNS-based setup, or just access controls. It can do cache acceleration, and they really help with issues. They also have a fully staffed support desk if you really get stuck, which can provide reinstall software if needed. I originally chose Netgate because it was open source.

I just completed my AA in cyber security, and I am so thankful to be able to continue my higher education towards my computer science degree. With that said, my end goal is cyber-security-based programming, and for me open source like this is the perfect tool to help you gain the insight and knowledge required for such work. I love it. Other vendors lock everything out on the customer end; don't get me wrong, their products can be used very effectively with their support. Netgate and pfSense have many major customers, and yes, even options to learn with for students like me. I would recommend that you contact Netgate support and explain what you want to do; they will help.

-

@jonathanlee said in Squid Proxy - Whitelist domains - Any lists out there?:

@dbmandrake It works for me; there are not many websites that need splice only, though it depends on the situation. DNS-based blocking has its own list of problems now that DoH (DNS over HTTPS) is here. With this protocol, even if you have a URL blocker running over DNS, websites that use DoH don't follow it: they send their DNS requests over HTTPS on port 443, yet again masking GDPR and CCPA abuses the firewall needs to stop. Moreover, with QUIC (HTTP/3 running over UDP), the software now needs to understand the SNI there too. Squid caching is working the way I listed above.

I think you've jumped to conclusions when I said "domain name based blocking". I didn't mention DNS based blocking - I don't use it and I don't even have PFBlocker installed.

I use Squid/SquidGuard to do domain-name-based website blocking. As I noted, Squid/SquidGuard can still make use of SNI when doing splice all, and this is what I use.

Of course it means only the Domain List section of Target categories works for HTTPS not the URL list since it can't see the full URL, but it is what it is.
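A rough Python sketch of that limitation, under simplified assumptions: a spliced connection exposes only the host (from SNI), so the path is `None` and URL-list entries simply cannot be evaluated. The `Request` type and the "host/path-prefix" entry format are hypothetical, not SquidGuard's file format.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Request:
    host: str          # from SNI (HTTPS) or the Host header (HTTP)
    path: str | None   # None when spliced: the request line stays encrypted


def host_in_domains(host: str, domains: set[str]) -> bool:
    # Domain List: an entry matches itself and any subdomain (lowercase entries assumed).
    host = host.lower().rstrip(".")
    return any(host == d or host.endswith("." + d) for d in domains)


def url_list_hit(req: Request, url_entries: set[str]) -> bool | None:
    # URL List: "host/path-prefix" entries; needs the decrypted path.
    if req.path is None:
        return None  # cannot be evaluated on a spliced connection
    full = req.host.lower() + req.path
    return any(full.startswith(entry) for entry in url_entries)


def is_blocked(req: Request, domains: set[str], url_entries: set[str]) -> bool:
    if host_in_domains(req.host, domains):
        return True
    return bool(url_list_hit(req, url_entries))  # None (unknown) counts as no hit


if __name__ == "__main__":
    domains, urls = {"ads.example"}, {"video.example/forbidden"}
    print(is_blocked(Request("video.example", None), domains, urls))            # spliced
    print(is_blocked(Request("video.example", "/forbidden/1"), domains, urls))  # bumped
```

The same URL-list entry passes on the spliced request and only blocks once the connection is bumped, which is exactly the trade-off described above.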

By the way, it's not only for DNS-based blocking that you need to block access to alternative DNS servers (including DoH) and force users to use your own DNS servers; clients being able to use external DNS servers can cause subtle and hard-to-reproduce failures with transparent proxy content filtering as well.

For it to work 100% reliably (and not give intermittent page load failures) it's essential that both the Squid proxy and the client see a coherent DNS state for the domain name in question.

This can become problematic for domains with many IP addresses where not all of the addresses are returned in every query. For some reason www.bbc.co.uk was particularly problematic for me here.

The solution is to make sure both the proxy and clients are pulling from the same DNS cache and thus always get the same results from the website's round robin DNS.
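A toy Python model of the round-robin problem, purely illustrative. The address pool, the 2-of-6 rotation and the rule "the proxy rejects a connection unless the client's destination IP is in the proxy's own DNS answer" are all assumptions; Squid's Host header forgery check on intercepted traffic is one real cross-check of this general kind, but its exact behaviour is not modelled here.

```python
from itertools import count

# Hypothetical pool of addresses for one busy site (documentation range).
POOL = [f"203.0.113.{i}" for i in range(1, 7)]


class RoundRobinDNS:
    """Upstream server: each answer is a rotating 2-address slice of POOL."""

    def __init__(self):
        self._n = count()

    def query(self, name):
        start = (next(self._n) * 2) % len(POOL)
        return (POOL + POOL)[start:start + 2]


class CachingResolver:
    """Local resolver that caches the first answer (TTL ignored for brevity)."""

    def __init__(self, upstream):
        self.upstream = upstream
        self.cache = {}

    def query(self, name):
        if name not in self.cache:
            self.cache[name] = self.upstream.query(name)
        return self.cache[name]


def proxy_accepts(client_resolver, proxy_resolver, name):
    # Assumed rule: the client connects to its first answer, and the proxy
    # only accepts if that IP is among the addresses it resolved itself.
    dest_ip = client_resolver.query(name)[0]
    return dest_ip in proxy_resolver.query(name)


if __name__ == "__main__":
    upstream = RoundRobinDNS()
    # Separate caches: client and proxy receive different slices.
    print("separate:", proxy_accepts(CachingResolver(upstream), CachingResolver(upstream), "www.example"))
    # One shared cache: both see the same answer.
    shared = CachingResolver(upstream)
    print("shared:", proxy_accepts(shared, shared, "www.example"))
```

With separate caches the two answers can disagree and the check fails intermittently; pointing both at one cache removes the disagreement.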

In my case, we have primary and secondary Windows domain controllers as DNS servers for all clients; however, these are configured in forwarder mode rather than as resolvers and point to the DNS server on pfSense. pfSense is configured to use only the local (localhost) DNS in General settings, which ensures that Squid also queries the local DNS server on pfSense. This keeps the DNS caches coherent.

I have firewall rules to prevent clients from using anything other than the local approved DNS servers.

Google Chrome is particularly problematic because it makes direct (normal) DNS queries to 8.8.8.8 all the time and ignores the locally specified servers unless it can't get a response. So this has to be blocked with firewall rules and is blocked with reject rather than drop so it gets an immediate failure to avoid name lookup delays...

Thanks Google....
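To put rough numbers on the reject-versus-drop point, here is a back-of-the-envelope Python sketch. The attempt count, timeout and round-trip values are hypothetical, not Chrome's actual resolver settings; the point is only that a dropped query costs the full timeout per attempt, while a rejected one fails after about one round trip.

```python
def fallback_delay(attempts: int, timeout_s: float, rtt_s: float, rejected: bool) -> float:
    """Seconds spent on a blocked DNS server before falling back.

    drop:   no reply ever arrives, so each attempt waits the full timeout.
    reject: an immediate error (e.g. ICMP unreachable or TCP RST) ends
            each attempt after roughly one round trip.
    """
    per_attempt = rtt_s if rejected else timeout_s
    return attempts * per_attempt


if __name__ == "__main__":
    # Hypothetical values: 2 attempts, 5 s timeout, 1 ms LAN round trip.
    for mode, rejected in (("drop", False), ("reject", True)):
        print(mode, fallback_delay(2, 5.0, 0.001, rejected), "s")
```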

-

@dbmandrake said in Squid Proxy - Whitelist domains - Any lists out there?:

Google Chrome is particularly problematic because it makes direct (normal) DNS queries to 8.8.8.8 all the time and ignores the locally specified servers unless it can't get a response. So this has to be blocked with firewall rules and is blocked with reject rather than drop so it gets an immediate failure to avoid name lookup delays...

Hello, it was not my intention to jump to conclusions; I want to try to help. I just noticed what you said about Chrome sending DNS queries directly to 8.8.8.8 and having to block them with reject rules.

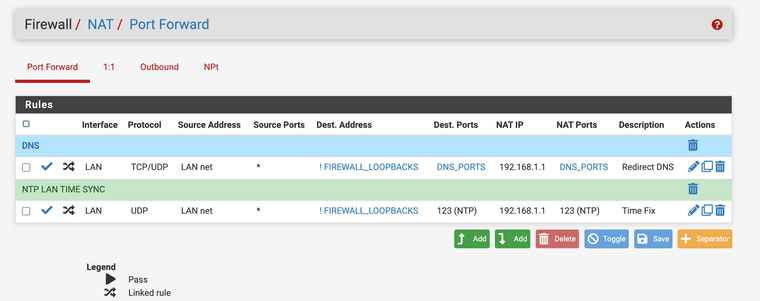

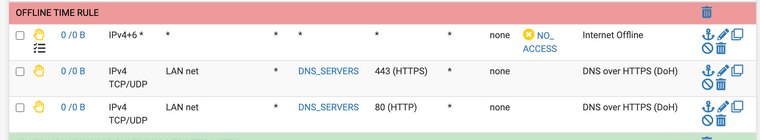

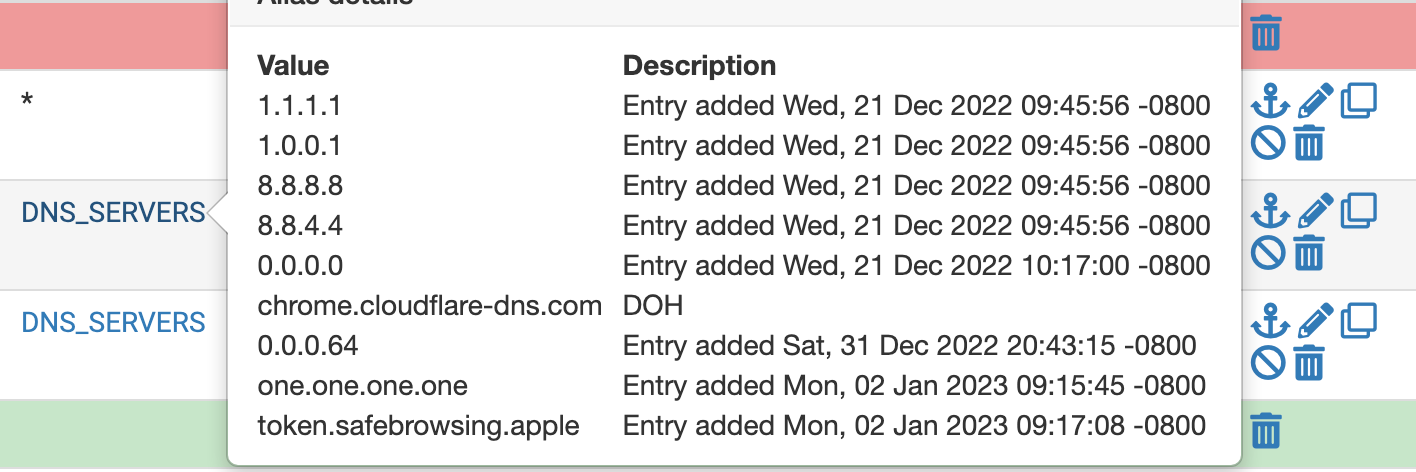

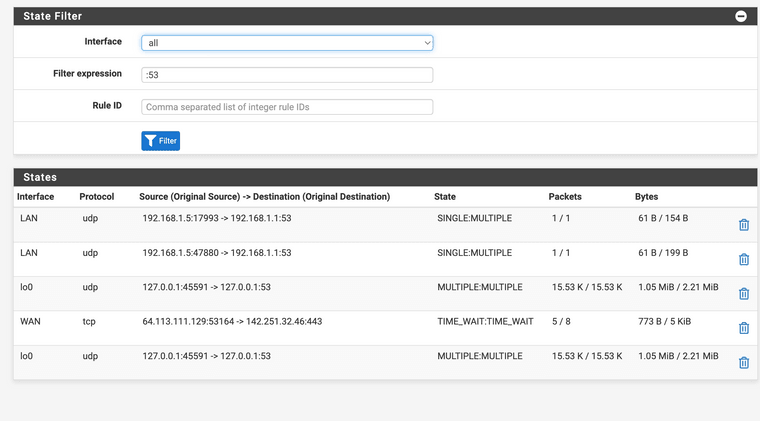

Have you tried configuring a port forward? I have anything that attempts to use DNS port 53 or 853 forwarded to the firewall. It works great for my home setup. Here is how I did it.

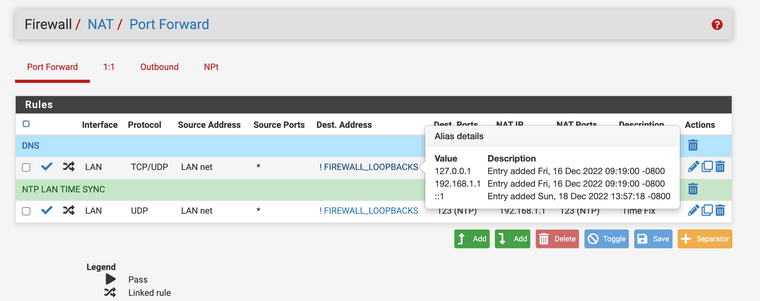

(Image: Port forwarding anything that is not (!) destined for the firewall or its loopbacks to the firewall itself for DNS resolution.)

(Image: Alias details, set as negated: "anything not going to these addresses on port 53 or 853".)

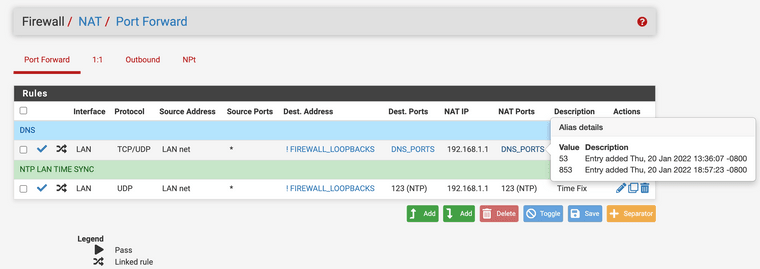

(Image: Alias for the ports, which I have named DNS_PORTS)

(Image: Only port 853 in use for encrypted DNS requests)
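For anyone who finds the screenshots hard to follow, this Python sketch models the decision the port-forward rule makes. Only `DNS_PORTS` comes from the screenshots; the `FIREWALL_ADDRS` alias name and the 192.168.1.1 address are hypothetical placeholders, IPv6 is left out, and this models the rule logic rather than pf itself.

```python
from __future__ import annotations

from ipaddress import ip_address

DNS_PORTS = {53, 853}                          # the DNS_PORTS alias
FIREWALL_LAN_IP = ip_address("192.168.1.1")    # hypothetical LAN address
# Hypothetical FIREWALL_ADDRS alias: the firewall itself plus loopback.
FIREWALL_ADDRS = {FIREWALL_LAN_IP, ip_address("127.0.0.1")}


def redirect(dst_ip: str, dst_port: int) -> tuple[str, int]:
    """Return the (ip, port) a LAN packet is delivered to after the NAT rule."""
    dst = ip_address(dst_ip)
    # Match: destination port in DNS_PORTS AND destination NOT in FIREWALL_ADDRS.
    # IPv4 only in this sketch.
    if dst.version == 4 and dst_port in DNS_PORTS and dst not in FIREWALL_ADDRS:
        return str(FIREWALL_LAN_IP), dst_port
    return str(dst), dst_port


if __name__ == "__main__":
    print(redirect("8.8.8.8", 53))      # -> ('192.168.1.1', 53)
    print(redirect("192.168.1.1", 53))  # already aimed at the firewall: untouched
    print(redirect("8.8.8.8", 443))     # not a DNS port: untouched
```

The negated alias is what keeps traffic already aimed at the firewall out of the rule, so only queries to outside servers are rewritten.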

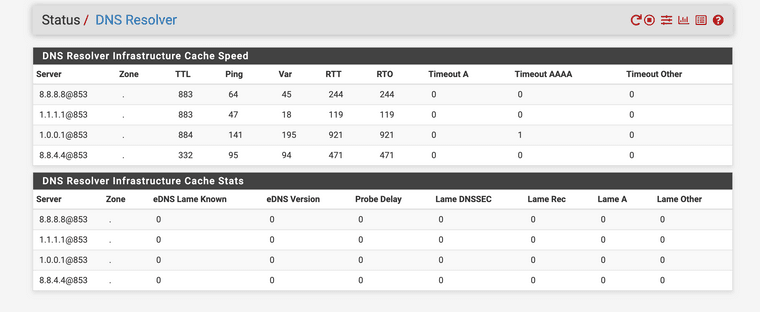

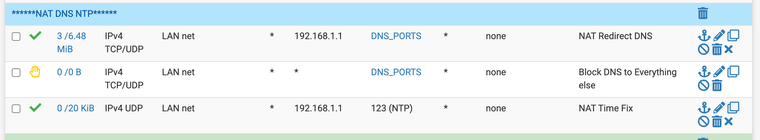

(Image: DNS ACL rules I use with the port forward: anything that originates from LAN-side hosts is approved to go to 192.168.1.1, my home firewall IP on a small home network, on ports 853 and 53)

(Image: Make sure you create a list of the most commonly accessed DNS servers and block HTTPS access to them)

(Image: Basic DoH blocks. Do not allow HTTPS connections to the following IP addresses)
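A similar sketch of the basic DoH block: reject HTTPS to a list of known DoH resolver addresses. The list is a short, incomplete example using well-known public resolvers (Cloudflare, Google, Quad9), not the list in the screenshot, and UDP 443 is included on the assumption that DoH over HTTP/3 should be blocked too.

```python
from ipaddress import ip_address, ip_network

# Short, incomplete example list of public DoH resolvers.
DOH_SERVERS = [
    ip_network("1.1.1.1/32"), ip_network("1.0.0.1/32"),  # Cloudflare
    ip_network("8.8.8.8/32"), ip_network("8.8.4.4/32"),  # Google
    ip_network("9.9.9.9/32"),                             # Quad9
]


def action(dst_ip: str, dst_port: int, proto: str = "tcp") -> str:
    """'reject' for HTTPS (TCP, or UDP for HTTP/3) to a listed DoH server."""
    dst = ip_address(dst_ip)
    is_https = dst_port == 443 and proto in ("tcp", "udp")
    # Compare like with like: only test IPv4 addresses against IPv4 networks.
    listed = any(dst.version == net.version and dst in net for net in DOH_SERVERS)
    return "reject" if is_https and listed else "pass"


if __name__ == "__main__":
    print(action("1.1.1.1", 443))         # DoH attempt
    print(action("93.184.216.34", 443))   # ordinary HTTPS site
```

Plain DNS on port 53 to the same addresses is left to the port-forward and DNS rules; this rule only covers the HTTPS path.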

(Image: Now all requests for port 53 end up right at the firewall's address)

-

I have two new really good whitelists I have learned about.

caauthservice.state.gov : for passports

mohela.com : new government student loan provider

Both should be marked as always whitelisted.

-

@jonathanlee said in Squid Proxy - Whitelist domains - Any lists out there?:

Hello, it was not my intention to jump to conclusions; I want to try to help. I just noticed what you said about Chrome sending DNS queries directly to 8.8.8.8 and having to block them with reject rules.

Have you tried configuring a port forward? I have anything that attempts to use DNS port 53 or 853 forwarded to the firewall. It works great for my home setup. Here is how I did it.

Hi,

I've seen the advice in many articles to use port forwarding to redirect all outgoing DNS queries back to the DNS server running on pfSense. The only problem is that it just doesn't work, at least with my network configuration.

I've spent a while trying to debug why it doesn't work, to no avail; no response is ever forthcoming from Unbound, so I have just stuck with blocking rather than redirecting. Only specific devices are allowed to make public DNS queries; most clients are blocked and forced to use the provided internal DNS servers. It has the same end effect.

-

@dbmandrake It did not work for me unless I included the IP address of the firewall and the loopbacks in an alias; otherwise it would just fail.