23.1 using more RAM

-

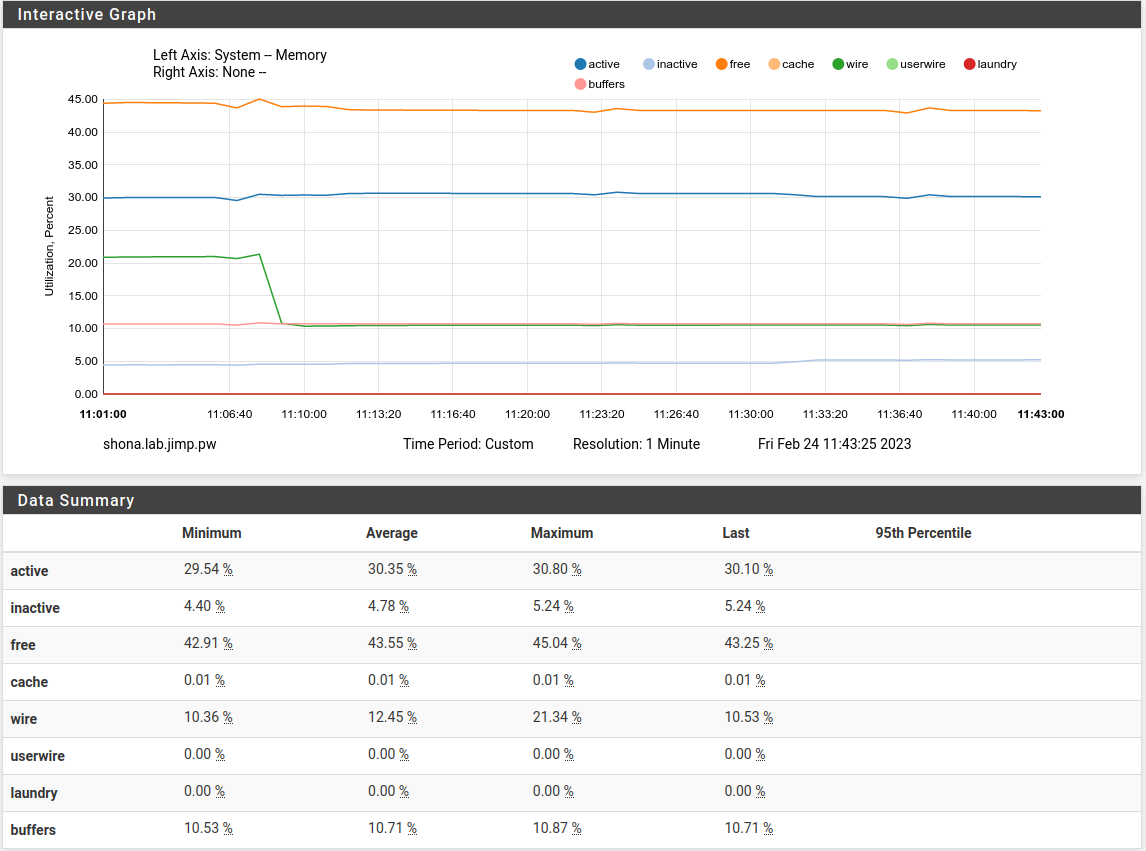

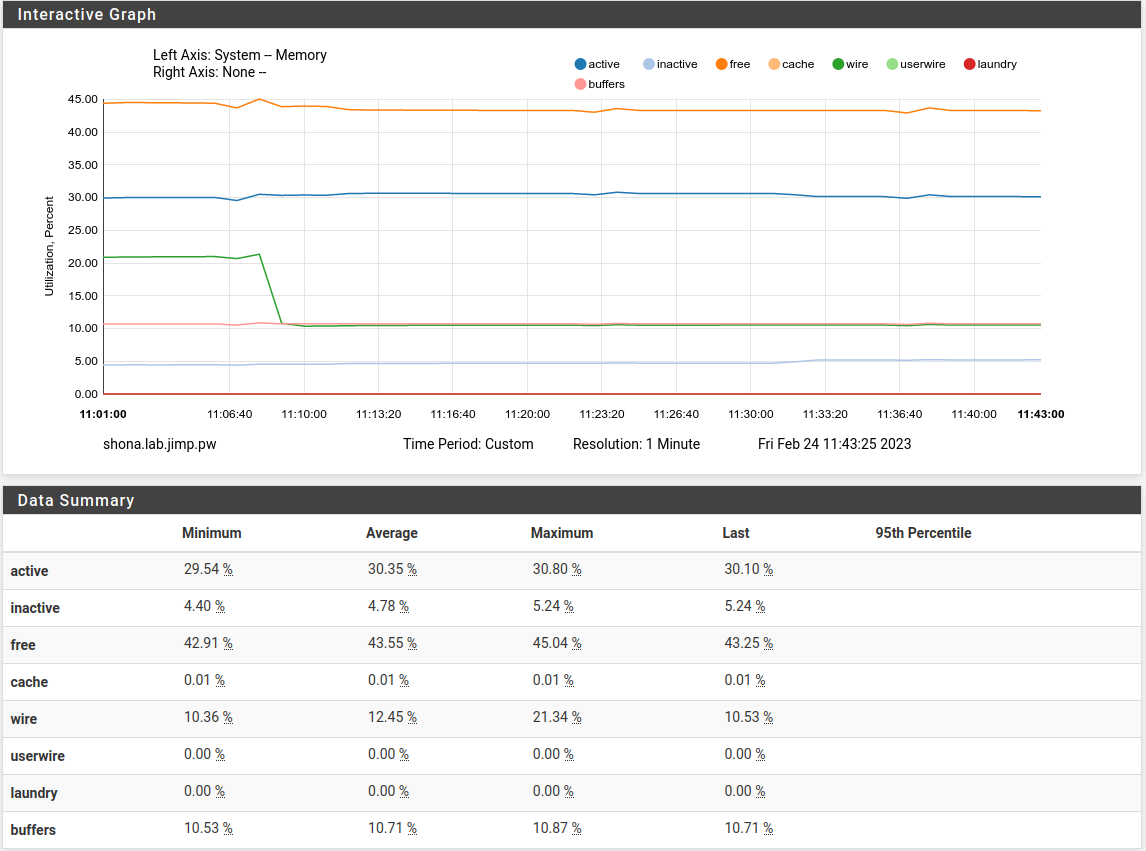

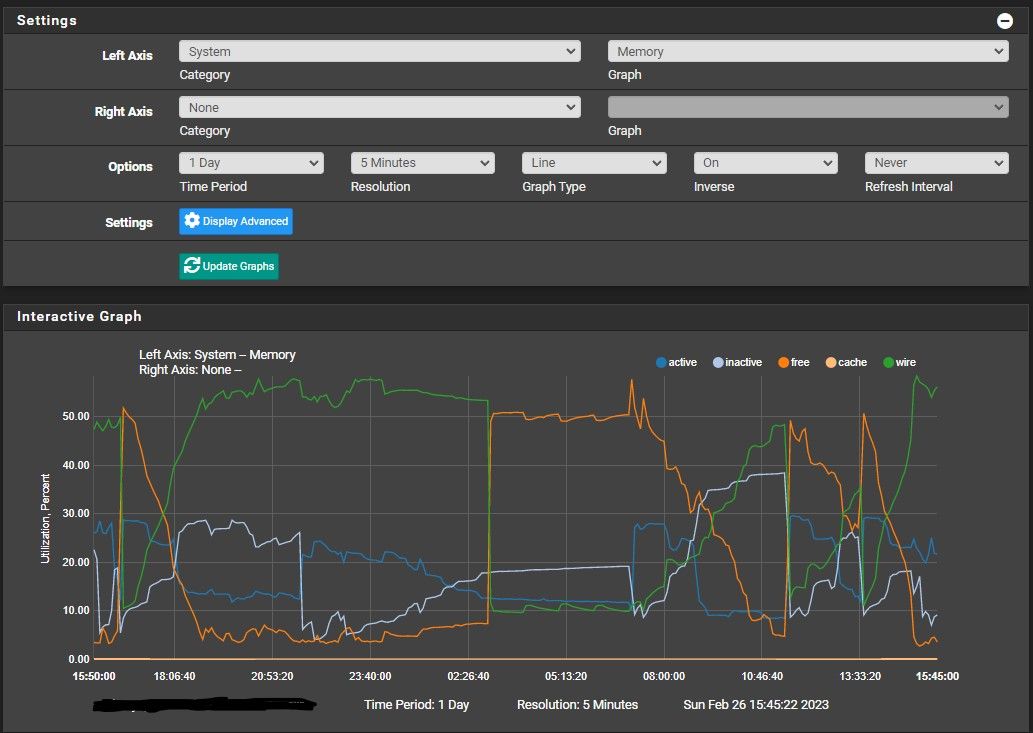

For those interested in better memory graph reporting, see the patch I posted at https://redmine.pfsense.org/issues/14011#note-3. It's not committed yet since I need to do more testing and review of its behavior, but it's in good enough shape to try.

Apply the patch and reboot; it will upgrade the memory RRD so it has a better breakdown and isn't missing data.

Don't mind that little blip at the start; that is when I was still refining the calculations.

On ZFS, the ARC usage is graphed under "cache", as is the UFS dirhash. "buffers" is UFS buffers. So on ZFS systems you'll see more cache, and on UFS systems you'll see a larger value of buffers and maybe some cache, depending on filesystem activity.

And since cache and buffers are a part of wired, the wired amount is reduced by the size of cache and buffers so it isn't reported double.
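The double-counting adjustment is plain subtraction. The Python below is a minimal sketch of that arithmetic only, not the patch's actual code: it assumes the raw wired, ARC, dirhash and buffer sizes are already available in bytes, and the `MemSample` and `graph_values` names are hypothetical. The sample numbers reuse the 752M wired and 234M ARC figures from the `top` output quoted later in this thread, with dirhash and buffers assumed to be zero on that ZFS system.

```python
from dataclasses import dataclass

MiB = 1024 * 1024


@dataclass
class MemSample:
    """Raw byte counts for one graph sample (hypothetical inputs)."""
    wired: int    # total wired memory, which already includes cache and buffers
    arc: int      # ZFS ARC size, graphed as "cache"
    dirhash: int  # UFS dirhash size, also graphed as "cache"
    buffers: int  # UFS buffer cache, graphed as "buffers"


def graph_values(s: MemSample) -> dict:
    """Split wired memory so cache and buffers are not reported twice."""
    cache = s.arc + s.dirhash
    # Cache and buffers are part of wired, so subtract them from wired.
    wired = s.wired - cache - s.buffers
    if wired < 0:
        raise ValueError("cache + buffers exceed wired; inputs are inconsistent")
    return {"wired": wired, "cache": cache, "buffers": s.buffers}


# Assumed sample: 752M wired, 234M ARC, no dirhash or UFS buffers.
sample = MemSample(wired=752 * MiB, arc=234 * MiB, dirhash=0, buffers=0)
print({k: v // MiB for k, v in graph_values(sample).items()})
# {'wired': 518, 'cache': 234, 'buffers': 0}
```

The `ValueError` is only a sanity check for the sketch; it flags inputs where the pieces cannot all be part of wired.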

-

@jimp said in 23.1 using more RAM:

For those interested in better memory graph reporting, see the patch I posted at https://redmine.pfsense.org/issues/14011#note-3. It's not committed yet since I need to do more testing and review of its behavior, but it's in good enough shape to try.


Does the patch do anything else besides commenting out those 3 periodic statements from the crontab file? If not, then I'm good to go. Thanks!

-

@jimp said in 23.1 using more RAM:

Some things did change in FreeBSD but nothing directly related to ARC usage as far as I've been able to determine thus far.

But the past doesn't really matter here. You were always supposed to tune the ZFS ARC size if it matters; it's been in the ZFS tuning guides for FreeBSD for many years. That you didn't notice it before was more luck than anything.

Interesting.

On my system I have:

- pfSense with a ZFS file system, which has never used excessive RAM.
- A Proxmox hypervisor with a ZFS file system, whose ARC gradually builds up to 50% of available RAM (the default for Linux) over 24 hours. The inefficient RAM usage was prevented by setting "options zfs zfs_arc_max=2147483648" in /etc/modprobe.d/zfs.conf.
- The pfSense VM does about 6x more disk writes than all other VMs combined.
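Since zfs_arc_max on Linux is given in bytes, 2147483648 is exactly 2 GiB. A minimal Python sketch of that arithmetic, assuming a hypothetical 16 GiB host (the post does not give the host's RAM) and taking the 50% default as described above; newer OpenZFS releases may use a different default. The helper names are hypothetical.

```python
GiB = 1024 ** 3


def arc_max_option(cap_gib: int) -> str:
    """Render a /etc/modprobe.d/zfs.conf line capping the ARC at cap_gib GiB."""
    return f"options zfs zfs_arc_max={cap_gib * GiB}"


def default_arc_max(host_ram_bytes: int) -> int:
    """Approximate default cap as described in the post: half of physical RAM."""
    return host_ram_bytes // 2


host_ram = 16 * GiB  # hypothetical host size, not from the thread
print(arc_max_option(2))                 # options zfs zfs_arc_max=2147483648
print(default_arc_max(host_ram) // GiB)  # 8
```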

Looking at the ARC memory usage on pfSense, ARC uses only 234MB of the 5GB of VM memory:

Mem: 81M Active, 281M Inact, 752M Wired, 3782M Free
ARC: 234M Total, 104M MFU, 124M MRU, 33K Anon, 1114K Header, 5272K Other
     94M Compressed, 264M Uncompressed, 2.82:1 Ratio
Swap: 1024M Total, 1024M Free

Looking more closely at the limits set for ARC:

vfs.zfs.arc_min: 507601920 (0.473 GB)
vfs.zfs.arc_max: 4060815360 (3.782 GB)

So @jimp, you are correct: FreeBSD 12.3-STABLE does not progressively consume all available ARC cache, but Linux 5.15.85 does when writing similar data.

Looks like I should set vfs.zfs.arc_max to about 512MB.
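A quick Python check of the byte arithmetic above. The sysctl values are in bytes, and the "GB" figures in the post are really GiB (powers of 1024). The sketch also confirms that a 512 MiB cap would still sit above the current arc_min; keeping arc_max at or above arc_min is an assumption about sensible tuning here, not something stated in the thread. The `to_gib` helper is hypothetical.

```python
GiB = 1024 ** 3
MiB = 1024 ** 2


def to_gib(n_bytes: int) -> str:
    """Format a byte count the way the post does (GiB, three decimals)."""
    return f"{n_bytes / GiB:.3f} GB"


arc_min = 507601920   # vfs.zfs.arc_min from the post
arc_max = 4060815360  # vfs.zfs.arc_max from the post

print(to_gib(arc_min))  # 0.473 GB
print(to_gib(arc_max))  # 3.782 GB

target = 512 * MiB      # proposed cap of about 512MB
print(target)           # 536870912
# The proposed cap stays above the current minimum and below the current maximum.
print(arc_min < target < arc_max)  # True
```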

This is likely to be the case for most pfSense users running the now-default ZFS file system. Perhaps it should be added to the predefined System Tunables, or near the RAM disk settings in System -> Advanced -> Miscellaneous.

-

-

@patch said in 23.1 using more RAM:

should set vfs.zfs.arc_max

Per https://docs.freebsd.org/en/books/handbook/zfs/#zfs-advanced, it's vfs.zfs.arc.max (with a dot) starting with 13.x, and vfs.zfs.arc_max for 12.x. Likewise, it's .min rather than _min.
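A minimal Python sketch of the naming rule described above, assuming FreeBSD 13 and later all use the dotted form; the helper names are hypothetical and the `name="value"` output follows ordinary loader.conf(5) syntax. Which file or GUI page pfSense expects the tunable in is not covered here.

```python
def arc_tunable(freebsd_major: int, which: str = "max") -> str:
    """Return the ARC size tunable name for a FreeBSD major version.

    Assumption: 13.x and later use a dot (vfs.zfs.arc.max / vfs.zfs.arc.min),
    12.x uses an underscore (vfs.zfs.arc_max / vfs.zfs.arc_min).
    """
    if which not in ("max", "min"):
        raise ValueError("which must be 'max' or 'min'")
    sep = "." if freebsd_major >= 13 else "_"
    return f"vfs.zfs.arc{sep}{which}"


def loader_conf_line(freebsd_major: int, size_bytes: int) -> str:
    """Render a loader.conf-style line: name="value"."""
    return f'{arc_tunable(freebsd_major)}="{size_bytes}"'


print(loader_conf_line(12, 512 * 1024 ** 2))  # vfs.zfs.arc_max="536870912"
print(loader_conf_line(13, 512 * 1024 ** 2))  # vfs.zfs.arc.max="536870912"
```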

-

@defenderllc said in 23.1 using more RAM:


Does the patch do anything else besides commenting out those 3 periodic statements from the crontab file? If not, then I'm good to go. Thanks!

This is a completely different patch than the crontab thing. The one I just posted today adjusts the graphs to be more accurate and use current, correct values. Without the patch, the graph is missing several important data points.

-

@jimp said in 23.1 using more RAM:

This is a completely different patch than the crontab thing.

Is this patch going to bring more details to the graphs for non-ZFS systems?

-

@mcury said in 23.1 using more RAM:


Is this patch going to bring more details to the graphs for non-ZFS systems?

Yes. In the post above where I described the graph changes, I explained what it will show for ZFS vs. UFS.

-

@jimp said in 23.1 using more RAM:

I explained what it will show for ZFS vs UFS.

I must have missed that, sorry jimp.

I'll apply this patch then, thank you.

-

@patch said in 23.1 using more RAM:

Looks like I should set vfs.zfs.arc_max to about 512MB.

This is likely to be the case for most pfsense users running the now default zfs file system. Perhaps it should be added

Created a Redmine GUI feature suggestion: zfs settings GUI interface

-

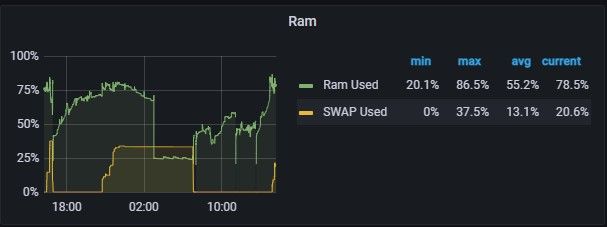

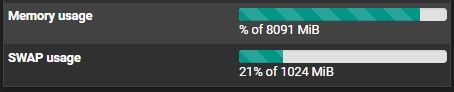

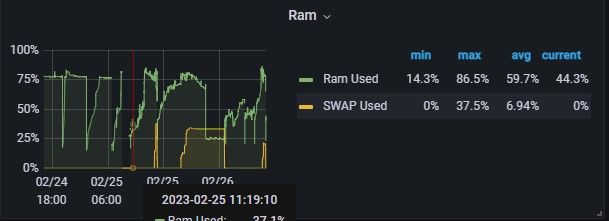

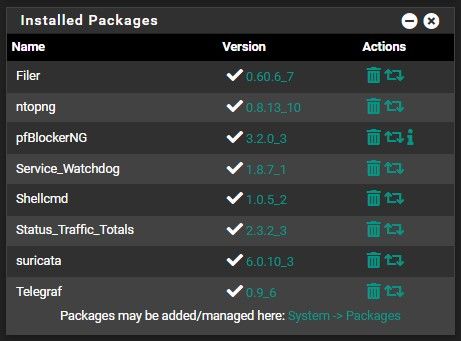

@jimp I've applied the arc_max limit and updated all the plugins with the recent updates for pfBlockerNG and Suricata.

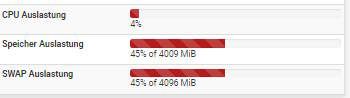

Since my last post, I ran 22.05 for a while and had no issues. Then I went back to 23.01 and ran it for a few days without Suricata and pfBlockerNG; memory usage was still higher, but it wasn't filling up my swap. Yesterday, while checking for updates on the issue, I read this and saw that some updates had been made to Suricata and pfBlockerNG, so I applied the ARC max limit and reinstalled Suricata and pfBlockerNG.

Starting out I saw significantly lower memory usage, but over time swap started to fill up. In this screenshot, the red vertical line is where I shut my firewall down to dust it out, then applied the ARC max limit and installed Suricata/pfBlockerNG. Shortly after that in the timeline you can see swap jump up, so I restarted. Memory usage increased and swap filled up, then for some reason RAM usage dropped before I restarted again.

These are the packages and versions I currently have installed.

I'm not as worried now about the overall memory usage; I'm more concerned with identifying why swap keeps filling up.
