bufferbloat with fq_codel after update to 23.01

-

@dennypage Looks like I'll have to do a bit more research. Even with the limiter at 555Mbps the CPU was below 50%. I tried lowering the speed, but the funny thing is that even when I changed it to 450Mbps for download, the results were maybe 5-10ms better. Obviously something is wrong, but I suspect that there is something special about my configuration :). I'll probably come back to this once I've had a bit more time to observe it. Or maybe compare its behavior with 22.05.

-

@tomashk said in bufferbloat with fq_codel after update to 23.01:

@dennypage Even with the limiter at 555Mbps the CPU was below 50%. I tried lowering the speed, but the funny thing is that even when I changed it to 450Mbps for download, the results were maybe 5-10ms better.

What is your hardware? Approaching 50% seems pretty high.

When you lowered to 450Mb, what was your throughput?

-


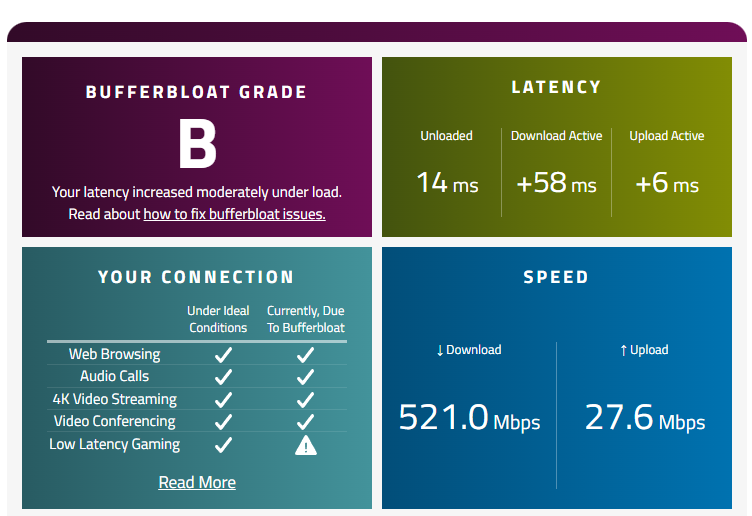

@dennypage So I found a temporary solution. I set it to 550Mbps and changed the setting for the VM with pfsense. I had 2 cores assigned and changed it to 4. Now I'm getting

So something that's acceptable to me while I'm playing with the settings. And at the moment I have to stop because other people will be using the network.

I have an Intel(R) Celeron(R) J4125, so only 4 cores, but this Proxmox host only runs pfSense and a container with the UniFi controller. So it will do for now.

-

@tomashk I'm glad you found a solution.

-

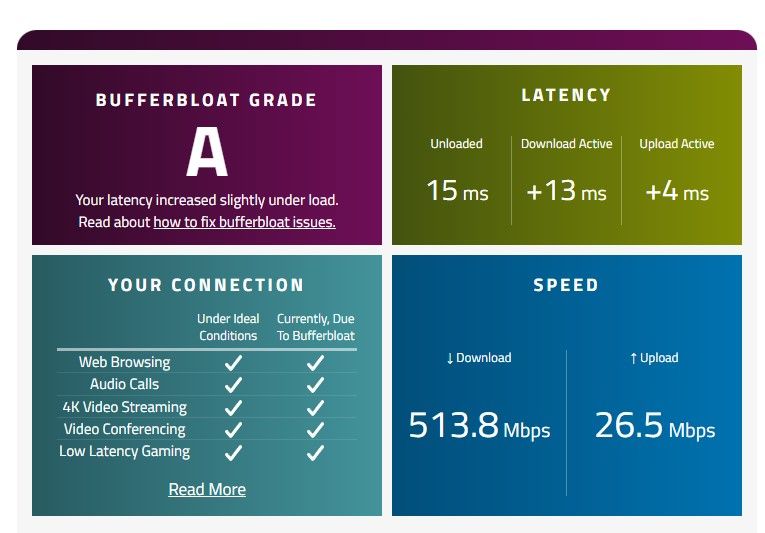

It seems I was wrong. After giving it 4 cores, it just works a little better and gets an A once in a while, maybe once or twice in 20 tests. On the dashboard, the maximum CPU usage I saw was between 20 and 25%.

`top -HaSP` doesn't show anything working hard either.

I guess at this point I should ask for investigation tips. This could be anything now:

- some bug in the limiter implementation

- something between the new kernel and proxmox

- Neighbour made a voodoo doll to influence my router ;)

You never know.

Of course I'll share if I learn something useful and I'm grateful for any suggestions.

I'm also going to look at version 22.05 a bit more closely. It may be that the same problem exists there and I haven't investigated it well enough.

-

@tomashk This test site is highly dependent on your ISP, peering, and maybe the time of day.

A better test would be pinging something nearby while running speedtest.net at the same time.

-

@bob-dig Thanks for the suggestion. I hadn't thought of it that way.
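The test suggested above (ping a nearby host while a speed test runs) can be turned into a number by comparing ping times at idle with ping times under load. The following is only a rough sketch: the function names `parse_rtts` and `latency_increase` are made up for illustration, and it assumes the usual `time=12.3 ms` format that `ping` prints on FreeBSD, Linux, and macOS.

```python
import re
import statistics

# Matches the round-trip time in typical ping output, e.g. "time=12.3 ms".
# Assumption: the ping implementation prints RTTs in this form.
RTT_PATTERN = re.compile(r"time[=<]([\d.]+)\s*ms")


def parse_rtts(ping_output: str) -> list[float]:
    """Extract all round-trip times (in ms) from captured ping output."""
    return [float(m.group(1)) for m in RTT_PATTERN.finditer(ping_output)]


def latency_increase(idle_output: str, loaded_output: str) -> float:
    """Median RTT under load minus median RTT at idle, in ms.

    A large positive value suggests bufferbloat on the path;
    a value near zero suggests the shaper is keeping queues short.
    """
    idle = parse_rtts(idle_output)
    loaded = parse_rtts(loaded_output)
    if not idle or not loaded:
        raise ValueError("no RTT samples found in ping output")
    return statistics.median(loaded) - statistics.median(idle)
```

To use it, capture one ping run with the line idle and another while the speed test is running (for example `ping -c 20 <nearby host>` redirected to a file each time), then pass both texts to `latency_increase`. The median is used rather than the mean so that a single stray spike does not dominate the result.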

I guess I was right to look at an older version (22.05). After a short test, I found a similar problem there as well. Since version 22.05 worked fine for me for months (in terms of bufferbloat), I assume that something has changed recently. So I will focus on what seems more likely:

- ISP has changed something (one of those using DOCSIS)

- something has changed in proxmox

- my configuration (pfsense or proxmox) is not very good

So I have a lot to analyze. And sorry for the initial wrong guess. I should have checked version 22.05 more carefully instead of assuming that because it worked before, it still works now.

But I'll try that later, because now I'd be disturbing others on the same network.

-

Got this...

-

@tomashk One thing to bring forward then. When you lowered to 450Mb, what was your throughput?

The reason that I asked was to confirm that your limiter assignment rules were actually being hit.

-

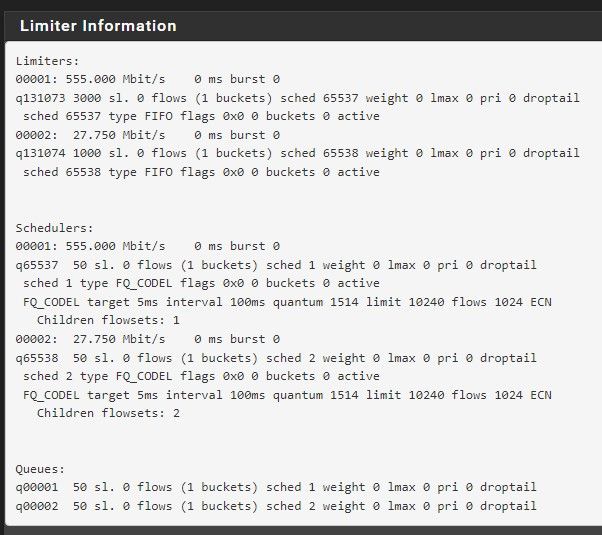

@dennypage I'll test it again when I get back, but I'm pretty sure the limiter was used. When I was testing I usually had the dashboard open and sometimes also the 'limiter info' page. Limiter info was showing the usual stuff about packet increases, drops, etc. when the test started. The dashboard showed traffic graphs:

- for LAN it is what was set for limiter +/- 5 Mbps

- for WAN I see traffic about 5 to 10 Mbps more than LAN output
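The check being discussed (is the measured throughput consistent with the limiter setting?) can be written down as a small comparison. This is just a sketch: the function name `limiter_verdict` is hypothetical, and the 5 Mbps tolerance is taken from the "+/- 5 Mbps" observed on the LAN graph.

```python
def limiter_verdict(limit_mbps: float, measured_mbps: float,
                    tolerance_mbps: float = 5.0) -> str:
    """Classify a speed test result against the configured limiter rate.

    - "limited":      throughput sits at the limit, so the rules are likely hit
    - "bypassing":    throughput clearly exceeds the limit, so traffic is
                      probably not matching the limiter assignment rules
    - "inconclusive": throughput is below the limit, so something else
                      (ISP, CPU, test server) is the bottleneck
    """
    if measured_mbps > limit_mbps + tolerance_mbps:
        return "bypassing"
    if measured_mbps >= limit_mbps - tolerance_mbps:
        return "limited"
    return "inconclusive"
```

For example, with the limiter set to 450 Mbps, a test that reports roughly 450 Mbps points to the limiter being applied, while a result well above that would mean the assignment rules are not being hit.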

Since I've been wrong a few times, I'll check again later and post whether I remembered correctly.

-

@tomashk, I am using floating rules to perform limiter assignment, with no ackqueue. FWIW, I also have a floating rule just prior to exclude ICMP echo request/reply from the limiter rules.

-

You're not the only one. I am seeing terrible bufferbloat performance after upgrading. This and some other CPU related issues have caused me to revert to 22.06 (thx ZFS!).

-

I've been using the CoDel limiters, according to the recipe that comes with the documentation.

When I upgraded to 23.01 the Queue Management Algorithm choice on both the up and downlink reverted to Tail Drop. I'm pretty sure I didn't change the configuration - it happened during the upgrade.

Tail Drop doesn't work well with Teams calls! Changing it back to CoDel got things working again.

-

@jeremyj-0 Unfortunately for me, setting it to CoDel didn't help at all.

I think we and many others have different environments, so it is hard to compare. I think I need to learn how to profile both the system and the network first. Before I can solve it, I have to find out whether the cause is software, hardware, or my ISP breaking everything. Each of those needs different tools to investigate properly. Also, I'm making it even harder by using Proxmox.