can't load balance with bgp multipath

-

My interpretation of the bgp route table is that ">" indicates a preferred path?

I'm doing a packet capture on both DNS servers and sending queries from an endpoint.

These are the BGP routes on pfSense at the time of testing:

    BGP table version is 2, local router ID is 192.168.0.1, vrf id 0
    Default local pref 100, local AS 65248
    Status codes:  s suppressed, d damped, h history, * valid, > best, = multipath,
                   i internal, r RIB-failure, S Stale, R Removed
    Nexthop codes: @NNN nexthop's vrf id, < announce-nh-self
    Origin codes:  i - IGP, e - EGP, ? - incomplete

       Network          Next Hop            Metric LocPrf Weight Path
    *= 10.3.0.5/32      192.168.0.4              0             0 65249 i
    *>                  192.168.0.3              0             0 65249 i

    Displayed 1 routes and 2 total paths

This is the query being sent by the client (192.168.0.100) repeatedly:

    C:\Users\user>nslookup example.com 10.3.0.5
    Server:  UnKnown
    Address:  10.3.0.5

    Non-authoritative answer:
    Name:    example.com
    Addresses:  2606:2800:220:1:248:1893:25c8:1946
              93.184.216.34

DNS server 192.168.0.3:

    ~ # tcpdump -i ens160 src 192.168.0.100 and dst port 53
    tcpdump: verbose output suppressed, use -v or -vv for full protocol decode
    listening on ens160, link-type EN10MB (Ethernet), capture size 262144 bytes
    18:55:19.244666 IP 192.168.0.100.64495 > 10.3.0.5.domain: 4+ A? example.com. (29)
    18:55:19.258017 IP 192.168.0.100.64496 > 10.3.0.5.domain: 5+ AAAA? example.com. (29)
    18:55:20.736584 IP 192.168.0.100.64500 > 10.3.0.5.domain: 4+ A? example.com. (29)
    18:55:20.745781 IP 192.168.0.100.64501 > 10.3.0.5.domain: 5+ AAAA? example.com. (29)
    18:55:21.449836 IP 192.168.0.100.64505 > 10.3.0.5.domain: 4+ A? example.com. (29)
    18:55:21.461138 IP 192.168.0.100.64506 > 10.3.0.5.domain: 5+ AAAA? example.com. (29)
    18:55:22.088173 IP 192.168.0.100.64510 > 10.3.0.5.domain: 4+ A? example.com. (29)
    18:55:22.097876 IP 192.168.0.100.64511 > 10.3.0.5.domain: 5+ AAAA? example.com. (29)

DNS server 192.168.0.4 - no requests received:

    ~ # tcpdump -i ens160 src 192.168.0.100 and dst port 53
    tcpdump: verbose output suppressed, use -v or -vv for full protocol decode
    listening on ens160, link-type EN10MB (Ethernet), capture size 262144 bytes

Based on my reading of the BGP route table, it makes sense that 192.168.0.3 is always chosen as it is designated as the best route by ">".

My goal is to spread out (load balance) the requests over these two DNS servers. As far as I know, that should be possible if multipathing is supported in both the kernel and the FRR package?

@jimp do you mind taking a look, since this is relevant to your ticket https://redmine.pfsense.org/issues/9545 as well as your latest comment there?

-

@baketopher there may be a load distribution algorithm at work. ECMP is clearly enabled, but you are testing from the same client every time: 192.168.0.100.

I don't think it's going to work in a round-robin way.

Do you have multiple clients to test from?

-

Sending DNS requests from two different endpoints at the same time, the requests always land on the same DNS server.

Clients: 192.168.0.5 and 192.168.0.100

On 192.168.0.3:

    ~ # tcpdump -i ens160 dst 10.3.0.5 and dst port 53
    tcpdump: verbose output suppressed, use -v or -vv for full protocol decode
    listening on ens160, link-type EN10MB (Ethernet), capture size 262144 bytes
    22:12:11.984097 IP 192.168.0.5.55340 > 10.3.0.5.domain: 40613+ [1au] A? example.com. (52)
    22:12:13.097821 IP 192.168.0.5.47871 > 10.3.0.5.domain: 41677+ [1au] A? example.com. (52)
    22:12:13.771729 IP 192.168.0.5.59100 > 10.3.0.5.domain: 8229+ [1au] A? example.com. (52)
    22:12:16.427651 IP 192.168.0.100.49990 > 10.3.0.5.domain: 40889+ [1au] A? example.com. (52)
    22:12:17.565212 IP 192.168.0.100.49993 > 10.3.0.5.domain: 39667+ [1au] A? example.com. (52)
    22:12:18.375251 IP 192.168.0.100.49996 > 10.3.0.5.domain: 40255+ [1au] A? example.com. (52)
    22:12:19.044664 IP 192.168.0.100.49999 > 10.3.0.5.domain: 21932+ [1au] A? example.com. (52)
    22:12:23.442956 IP 192.168.0.5.58802 > 10.3.0.5.domain: 61311+ [1au] A? example.com. (52)
    22:12:23.963051 IP 192.168.0.5.40557 > 10.3.0.5.domain: 51993+ [1au] A? example.com. (52)
    22:12:24.453484 IP 192.168.0.5.50206 > 10.3.0.5.domain: 45389+ [1au] A? example.com. (52)
    22:12:26.483159 IP 192.168.0.100.50003 > 10.3.0.5.domain: 64178+ [1au] A? example.com. (52)
    22:12:27.015098 IP 192.168.0.100.50006 > 10.3.0.5.domain: 14870+ [1au] A? example.com. (52)
    22:12:27.556100 IP 192.168.0.100.50009 > 10.3.0.5.domain: 56294+ [1au] A? example.com. (52)
    22:12:27.992089 IP 192.168.0.100.50012 > 10.3.0.5.domain: 50358+ [1au] A? example.com. (52)

On 192.168.0.4 - no requests:

    ~ # tcpdump -i ens160 dst 10.3.0.5 and dst port 53
    tcpdump: verbose output suppressed, use -v or -vv for full protocol decode
    listening on ens160, link-type EN10MB (Ethernet), capture size 262144 bytes

Wondering if it might be about the volume of traffic, I spammed DNS requests from both machines with a while loop:

    while true; do dig @10.3.0.5 example.com +short; done

Same results as above: the DNS requests all hit 192.168.0.3.

-

@baketopher hmm, that's quite a bind. Yeah, I'm not sure how to test this then. What the route table shows is different from the actual path the data takes. Unless someone has a better idea, maybe open a Redmine issue?

-

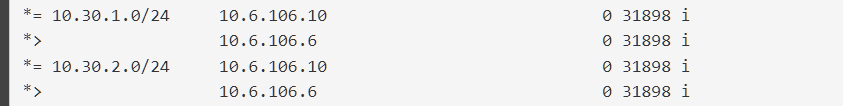

From the routing table:

       Network          Next Hop            Metric LocPrf Weight Path
    *= 10.3.0.5/32      192.168.0.4              0             0 65249 i
    *>                  192.168.0.3              0             0 65249 i

This line in particular:

    *>                  192.168.0.3              0             0 65249 i

Doesn't the ">" indicate that 192.168.0.3 is the preferred/best path? I.e., load balancing will never happen across these two hosts while one is chosen as the best?

-

@baketopher ">" does indicate the best path, but I'm wondering because the "=" sign is there too, and both next hops are showing up for the same network...

As a test, what happens when you create two static routes to the same network? So forget about eBGP for a second. Can pfSense load balance between two static routes (presumably with the same admin distance)?

Edit: I don't know if pfSense has a concept of admin distance for static routes. For FRR of course, but what about something that's non-dynamic?
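On the ">" versus "=" question, here is a small Python sketch that decodes the flag characters using only the "Status codes" legend printed by FRR earlier in this thread. The helper names `decode_status` and `is_multipath_candidate` are made up for illustration, and the rule that a valid path marked either best or multipath counts as a candidate is my reading of the legend, not something taken from FRR's source.

```python
from __future__ import annotations

# Legend copied from the "Status codes" line of the FRR output in this thread.
STATUS_CODES = {
    "s": "suppressed",
    "d": "damped",
    "h": "history",
    "*": "valid",
    ">": "best",
    "=": "multipath",
    "i": "internal",
    "r": "RIB-failure",
    "S": "Stale",
    "R": "Removed",
}


def decode_status(flags: str) -> list[str]:
    """Translate the leading flag characters of a BGP table row (hypothetical helper)."""
    return [STATUS_CODES[ch] for ch in flags if ch in STATUS_CODES]


def is_multipath_candidate(flags: str) -> bool:
    """Assumption: a path takes part in ECMP if it is valid and marked best or multipath."""
    return "*" in flags and (">" in flags or "=" in flags)


# The two rows for 10.3.0.5/32 as they appear in the table above.
rows = {"192.168.0.4": "*=", "192.168.0.3": "*>"}
for nexthop, flags in rows.items():
    print(nexthop, decode_status(flags), is_multipath_candidate(flags))
```

Read this way, ">" only marks which of the equal paths BGP picked as its single best; the "=" on the other row says that path is kept as a multipath alongside it.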

-

Copying my text here from the Redmine issue:

From our local testing here on Plus (23.05.1, 23.09 snaps) and CE (2.7.0, 2.8.0 snaps), with both static and BGP it appears to be working, however, be aware that the OS computes outbound flow hashing for connections. What that means is, similar to lagg, you may only see connections/packets taking the alternate paths if they are different in some way, such as different protocols, src/dst IP address combinations, and TCP/UDP connection port pairs. For example, testing with ICMP only from one to the other with no variation may never see flows take another path. The hashing takes the 5-tuple connection property set "(proto, src, dst, srcport, dstport)" into account.
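To make the flow-hashing idea concrete, here is a minimal Python model of it. This is only a sketch: the SHA-256 hash and the `pick_nexthop` helper are stand-ins chosen for illustration and are not the algorithm the kernel actually uses. The point is just that the same 5-tuple always maps to the same next hop, while a change in any field can map to the other one.

```python
import hashlib

# The two gateways advertised for 10.3.0.5/32 in this thread.
NEXTHOPS = ["192.168.0.3", "192.168.0.4"]


def pick_nexthop(proto, src, dst, sport, dport, nexthops=NEXTHOPS):
    """Hash the 5-tuple onto one next hop (illustrative, not the kernel's real hash)."""
    key = f"{proto}|{src}|{dst}|{sport}|{dport}".encode()
    digest = hashlib.sha256(key).digest()
    index = int.from_bytes(digest[:4], "big") % len(nexthops)
    return nexthops[index]


# An identical flow is deterministic: it lands on the same next hop every time.
first = pick_nexthop("udp", "192.168.0.100", "10.3.0.5", 64495, 53)
again = pick_nexthop("udp", "192.168.0.100", "10.3.0.5", 64495, 53)
print(first == again)

# Varying only the source port is enough, in this model, to reach both next hops.
paths = {
    pick_nexthop("udp", "192.168.0.100", "10.3.0.5", sport, 53)
    for sport in range(64495, 64595)
}
print(sorted(paths))
```

In this model a repeated ICMP ping with no variation would correspond to calling the function with the same arguments each time, which is why it would never move to the other path.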

If the sysctl oid for `net.route.multipath` is `1` and both routes show in the table, that should be enough to know it's prepared to work. You can check the nexthop data with `netstat -4onW` and nexthop group data with `netstat -4OW`, and both of those should show both gateways and that they belong to the same "group".

You might need to adjust your rules to ensure that traffic that egresses over one path can have its replies ingress over the other path, which may also complicate things. But with the current way pf allows states on multiple interfaces, that may be OK as-is.

-

@jimp The only things that differ between the two clients are the src (192.168.0.5 and 192.168.0.100) and the srcport (random ephemeral). I'm not sure if that would be enough for the hashing algo? Which rules are you referring to - something within FRR, I assume?

Below is the output from the commands you referenced as well as some vtysh bgp commands. I'm not seeing anything that stands out as a red flag but I don't have much experience looking at these outputs.

    [2.7.0-RELEASE][admin@pf.lab]/root: sysctl net.route.multipath
    net.route.multipath: 1

    [2.7.0-RELEASE][admin@pf.lab]/root: netstat -4onW
    Nexthop data

    Internet:
    Idx Type       IFA         Gateway           Flags Use     Mtu   Netif     Addrif    Refcnt Prepend
    1   v4/resolve 10.10.0.65  vmx2.1503/resolve       0       1500  vmx2.1503           2
    2   v4/resolve 127.0.0.1   lo0/resolve       H     311     16384 lo0                 2
    3   v4/resolve 127.0.0.1   lo0/resolve       HS    0       16384 lo0       vmx2.1501 2
    4   v4/resolve 10.10.0.97  vmx2.1504/resolve       0       1500  vmx2.1504           2
    5   v4/resolve 127.0.0.1   lo0/resolve       HS    0       16384 lo0       vmx2.1502 2
    6   v4/resolve 10.10.0.225 vmx2.1508/resolve       0       1500  vmx2.1508           2
    7   v4/resolve 127.0.0.1   lo0/resolve       HS    0       16384 lo0       vmx2.1503 2
    8   v4/resolve 10.10.1.1   vmx2.1509/resolve       0       1500  vmx2.1509           2
    9   v4/resolve 127.0.0.1   lo0/resolve       HS    0       16384 lo0       vmx2.1504 2
    10  v4/resolve 10.10.1.33  vmx2.1510/resolve       0       1500  vmx2.1510           2
    11  v4/resolve 127.0.0.1   lo0/resolve       HS    0       16384 lo0       vmx2.1505 2
    12  v4/resolve 192.168.0.1 vmx1/resolve            6046438 1500  vmx1                4
    13  v4/resolve 127.0.0.1   lo0/resolve       HS    0       16384 lo0       vmx2.1506 2
    14  v4/resolve 127.0.0.1   lo0/resolve       HS    0       16384 lo0       vmx1      2
    15  v4/resolve 127.0.0.1   lo0/resolve       HS    0       16384 lo0       vmx2.1507 2
    16  v4/gw      10.52.0.12  10.52.0.1         GS    28437   1500  vmx0                3
    17  v4/resolve 127.0.0.1   lo0/resolve       HS    0       16384 lo0       vmx2.1508 2
    18  v4/gw      192.168.0.1 192.168.0.3       GH1   0       1500  vmx1                1
    19  v4/resolve 127.0.0.1   lo0/resolve       HS    0       16384 lo0       vmx2.1509 2
    20  v4/gw      192.168.0.1 192.168.0.4       GH1   0       1500  vmx1                1
    21  v4/resolve 127.0.0.1   lo0/resolve       HS    0       16384 lo0       vmx2.1510 2
    22  v4/resolve 10.52.0.12  vmx0/resolve            0       1500  vmx0                2
    23  v4/resolve 127.0.0.1   lo0/resolve       HS    0       16384 lo0       vmx0      2
    24  v4/resolve 10.10.0.1   vmx2.1501/resolve       3544101 1500  vmx2.1501           2
    25  v4/resolve 10.10.0.33  vmx2.1502/resolve       0       1500  vmx2.1502           2
    26  v4/resolve 10.10.0.129 vmx2.1505/resolve       0       1500  vmx2.1505           2
    27  v4/resolve 10.10.0.161 vmx2.1506/resolve       0       1500  vmx2.1506           2
    28  v4/resolve 10.10.0.193 vmx2.1507/resolve       0       1500  vmx2.1507           2

    [2.7.0-RELEASE][admin@pf.lab]/root: netstat -4OW
    Nexthop groups data

    Internet:
    GrpIdx  NhIdx   Weight  Slots   Gateway           Netif     Refcnt
    29      ------- ------- ------- ----------------- --------- 2
            18      1       1       192.168.0.3       vmx1
            20      1       1       192.168.0.4       vmx1

    [2.7.0-RELEASE][admin@pf.lab]/root: vtysh

    Hello, this is FRRouting (version 7.5.1).
    Copyright 1996-2005 Kunihiro Ishiguro, et al.

    pf.lab# show ip route
    Codes: K - kernel route, C - connected, S - static, R - RIP,
           O - OSPF, I - IS-IS, B - BGP, E - EIGRP, N - NHRP,
           T - Table, v - VNC, V - VNC-Direct, A - Babel, D - SHARP,
           F - PBR, f - OpenFabric,
           > - selected route, * - FIB route, q - queued, r - rejected, b - backup

    K>* 0.0.0.0/0 [0/0] via 10.52.0.1, 5d05h55m
    B>* 10.3.0.5/32 [20/0] via 192.168.0.3, vmx1, weight 1, 5d05h52m
      *                    via 192.168.0.4, vmx1, weight 1, 5d05h52m
    C>* 10.10.0.0/27 [0/1] is directly connected, vmx2.1501, 5d05h55m
    C>* 10.10.0.32/27 [0/1] is directly connected, vmx2.1502, 5d05h55m
    C>* 10.10.0.64/27 [0/1] is directly connected, vmx2.1503, 5d05h55m
    C>* 10.10.0.96/27 [0/1] is directly connected, vmx2.1504, 5d05h55m
    C>* 10.10.0.128/27 [0/1] is directly connected, vmx2.1505, 5d05h55m
    C>* 10.10.0.160/27 [0/1] is directly connected, vmx2.1506, 5d05h55m
    C>* 10.10.0.192/27 [0/1] is directly connected, vmx2.1507, 5d05h55m
    C>* 10.10.0.224/27 [0/1] is directly connected, vmx2.1508, 5d05h55m
    C>* 10.10.1.0/27 [0/1] is directly connected, vmx2.1509, 5d05h55m
    C>* 10.10.1.32/27 [0/1] is directly connected, vmx2.1510, 5d05h55m
    C>* 10.52.0.0/24 [0/1] is directly connected, vmx0, 5d05h55m
    C>* 192.168.0.0/24 [0/1] is directly connected, vmx1, 5d05h55m

    pf.lab# show bgp detail
    BGP table version is 2, local router ID is 192.168.0.1, vrf id 0
    Default local pref 100, local AS 65248
    Status codes:  s suppressed, d damped, h history, * valid, > best, = multipath,
                   i internal, r RIB-failure, S Stale, R Removed
    Nexthop codes: @NNN nexthop's vrf id, < announce-nh-self
    Origin codes:  i - IGP, e - EGP, ? - incomplete

       Network          Next Hop            Metric LocPrf Weight Path
    *= 10.3.0.5/32      192.168.0.4              0             0 65249 i
    *>                  192.168.0.3              0             0 65249 i

    Displayed 1 routes and 2 total paths

    pf.lab# show bgp summary

    IPv4 Unicast Summary:
    BGP router identifier 192.168.0.1, local AS number 65248 vrf-id 0
    BGP table version 2
    RIB entries 1, using 192 bytes of memory
    Peers 2, using 29 KiB of memory
    Peer groups 1, using 64 bytes of memory

    Neighbor        V         AS   MsgRcvd   MsgSent   TblVer  InQ OutQ  Up/Down State/PfxRcd   PfxSnt
    192.168.0.3     4      65249     90836     90840        0    0    0 5d06h06m            1        1
    192.168.0.4     4      65249     90836     90838        0    0    0 5d06h06m            1        1

    Total number of neighbors 2

-

It checks all of proto+srcip+dstip+srcport+dstport so any difference in those could make a flow take a different path.

Since the weights are identical it should be balancing flows 50%/50% between the gateways.
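As a rough illustration of why equal weights should come out near 50/50, here is a small Python simulation. It is a sketch under assumptions: the idea that each gateway gets a number of slots equal to its weight is inferred from the Weight and Slots columns in the `netstat -4OW` output above, and `build_slots`, `pick`, and the SHA-256 hash are hypothetical stand-ins, not the kernel's implementation.

```python
import hashlib
from collections import Counter


def build_slots(weights):
    """Expand {gateway: weight} into a slot list; each gateway gets `weight` slots."""
    slots = []
    for gateway, weight in weights.items():
        slots.extend([gateway] * weight)
    return slots


def pick(slots, flow):
    """Map a flow tuple onto one slot using a stand-in hash (not the kernel's)."""
    digest = hashlib.sha256(repr(flow).encode()).digest()
    return slots[int.from_bytes(digest[:4], "big") % len(slots)]


# Weights as shown for nexthops 18 and 20 in the nexthop group output.
weights = {"192.168.0.3": 1, "192.168.0.4": 1}
slots = build_slots(weights)

# 10,000 synthetic flows that differ only in source port.
flows = [("udp", "192.168.0.100", "10.3.0.5", sport, 53) for sport in range(20000, 30000)]
counts = Counter(pick(slots, flow) for flow in flows)

for gateway, n in sorted(counts.items()):
    print(f"{gateway}: {n / len(flows):.1%}")
```

The split is per flow, not per packet, so a handful of flows can still land unevenly; it only evens out as the number of distinct flows grows.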

What I did was watch each interface with a packet capture and tried a variety of connection types to/from different addresses and it was balancing things about how I expected.

-

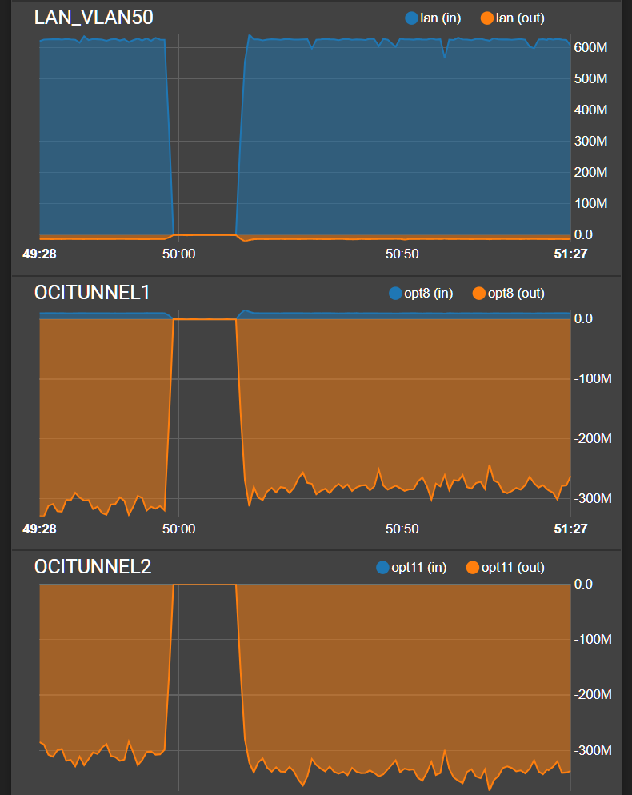

Wanted to come back to this topic and say that multipath works very well.

I have two IPsec VPN tunnels running eBGP with ECMP set up.

My iperf test is below

My WAN is 500/500Mbps.

OCITunnel1 and OCITunnel2 are IPsec.

As you can see, an iperf test with 100 simultaneous connections out of the LAN is split pretty evenly across both tunnels.