pfblockerNG 3.2.0_6 unable to open reports

-

Greetings,

I'm having an issue with the Reports tab: when I click on Reports, it takes a long time and then comes up with a 500 error :/ . The pfSense version is 2.7 CE. Any idea what is wrong and how to fix this error?

Regards

-

@scorpoin Is anyone else having this issue? I cannot access the Reports tab of pfBlockerNG; it takes a long time and then shows an error :/.

-

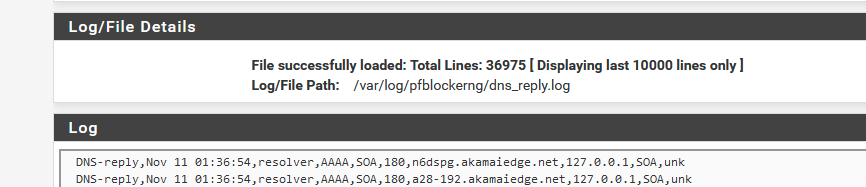

Can you tell us what the sizes of these log files are?

Select them one by one; the size is shown. Like:

If you have a 'huge' file, then the issue can be that PHP takes too much time to parse it and build the report; the web server becomes impatient and bails out = the 500 error.

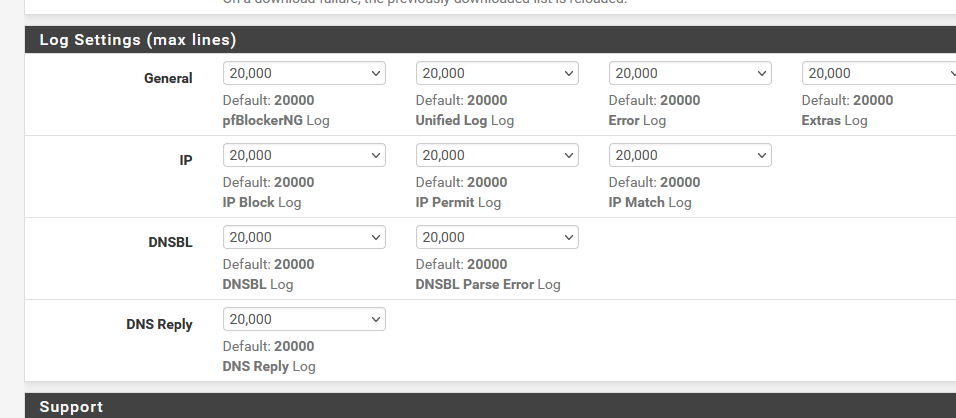

The log files are normally kept under control with:

so it's easy to create "way too big" log files that can't be parsed ... ;)
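For anyone who would rather check the sizes from a shell than click through the files one by one, a minimal Python sketch could look like this. The directory `/var/log/pfblockerng` and the 10 MB warning threshold are assumptions for illustration, not values taken from pfBlockerNG itself; adjust both to your install.

```python
from pathlib import Path

# Assumed location of the pfBlockerNG logs; adjust if your install differs.
LOG_DIR = Path("/var/log/pfblockerng")
# Arbitrary illustrative threshold in bytes, not a pfBlockerNG setting.
WARN_BYTES = 10_000_000


def log_sizes(log_dir):
    """Return (name, size_in_bytes) for each *.log file, largest first."""
    sizes = [(p.name, p.stat().st_size) for p in Path(log_dir).glob("*.log")]
    return sorted(sizes, key=lambda item: item[1], reverse=True)


def report(log_dir=LOG_DIR, warn_bytes=WARN_BYTES):
    """Print one line per log file and flag the ones above the threshold."""
    for name, size in log_sizes(log_dir):
        flag = "  <-- large" if size > warn_bytes else ""
        print(f"{name:30s} {size / 1_000_000:10.2f} MB{flag}")


if __name__ == "__main__":
    report()
```

It only reads file metadata, so it should stay fast even when a log is too big for the Reports tab to parse.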

-

@jrey Thanks for your prompt response,

Do I need to adjust that log setting by increasing the value and then recheck the reports? I have changed the default setting from 20000 to 1000000. After the change I still get the same kind of error: 504 Gateway Time-out :/ .

Regards

-

@scorpoin said in pfblockerNG 3.2.0_6 unable to open reports:

I have changed the default setting from 20000 to 1000000

@Gertjan said in pfblockerNG 3.2.0_6 unable to open reports:

so it's easy to create "way too big" log files that can't be parsed ... ;)

Set them back to the default value.

Also do a force reload of pfBlockerNG.

Check the file sizes as mentioned above.

-

@Gertjan

The file size is large: when I check the dnsbl.log file, its size is 18623428 :/ .

-

@scorpoin said in pfblockerNG 3.2.0_6 unable to open reports:

The file size is large: when I check the dnsbl.log file, its size is 18623428 :/ .

OK, now we're getting somewhere.

18623428 bytes, or a bit more than 18 MB, will 'explode' PHP, so nginx shows a gateway time-out error. The question is now: why are these files not truncated?

I presume that on every cron run (see CRON Settings on the main pfBlockerNG page), the files are checked and truncated if needed.

-

@Gertjan said in pfblockerNG 3.2.0_6 unable to open reports:

The question is now: why are these files not truncated?

The tail command that does the truncation is likely consuming too many resources (or taking too long) and failing.

With a file that big, it might be faster at this point to just delete it and let it start fresh, then monitor the size for a while.

If you need a copy (home use? why?), you could download it first and then hit the trash can; both options are on this screen.
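If you would rather keep the most recent entries than delete everything, the same "keep only the tail" idea can be sketched in Python. This is an illustration of the technique only, not how pfBlockerNG does it internally; the function name `keep_last_lines` is made up for this example, and it assumes the log setting mentioned earlier is a line count.

```python
import os
import tempfile
from collections import deque


def keep_last_lines(path, max_lines):
    """Rewrite `path` so it holds only its last `max_lines` lines."""
    # deque(maxlen=...) drops older lines while reading, so memory use is
    # bounded by max_lines rather than by the size of the file.
    with open(path, "rb") as src:
        tail = deque(src, maxlen=max_lines)

    # Write to a temporary file in the same directory, then swap it in,
    # so an interrupted run never leaves a half-written log behind.
    fd, tmp_path = tempfile.mkstemp(dir=os.path.dirname(os.path.abspath(path)))
    with os.fdopen(fd, "wb") as dst:
        dst.writelines(tail)
    os.replace(tmp_path, path)
    return len(tail)
```

A call such as `keep_last_lines("/var/log/pfblockerng/dnsbl.log", 20000)` would be the typical use, with the path again being an assumption. Two caveats: the replacement file gets fresh permissions from `mkstemp`, and a process that still has the old file open keeps writing to the old one until it reopens the log, so a force reload afterwards is probably wise.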