No State Creator Host IDs visible

-

@kprovost said in No State Creator Host IDs visible:

Are your primary and secondary nodes running the same software version?

Not for now. One is 2.6.0 and the other one is 2.7.2.

We are going to upgrade the first one this afternoon.

-

@marcolefo Well, that's supposed to just work, but there's functionally several years of code evolution between 2.6.0's pf and 2.7.2. It's entirely possible that's causing this problem.

It'd be useful to know if the problem remains or not once both nodes are running 2.7.x (ideally the same version, but 2.7.0 is vastly closer to 2.7.2 already).

-

@kprovost said in No State Creator Host IDs visible:

It'd be useful to know if the problem remains or not once both nodes are running 2.7.x (ideally the same version, but 2.7.0 is vastly closer to 2.7.2 already).

So now the 2 pfsense are 2.7.2.

The error "kernel: Warning: too many creators!" is still here. It appears when we go to Status > CARP (failover).

The GUI CARP status page displays no State Creator Host IDs.

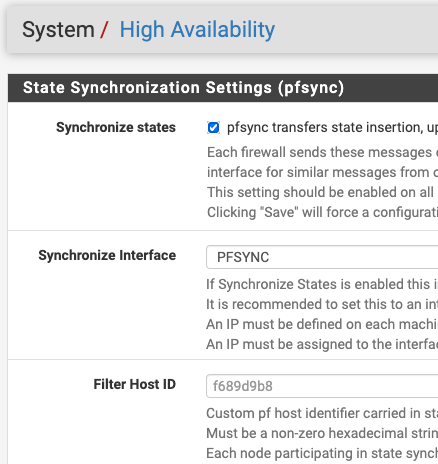

    [2.7.2-RELEASE][admin@master]/root: grep hostid /tmp/rules.debug
    set hostid 0xf689d9b8
    [2.7.2-RELEASE][admin@backup]/root: grep hostid /tmp/rules.debug
    set hostid 0xcad3f8bd
    [2.7.2-RELEASE][admin@master]/root: pfctl -sc
    pfctl: Failed to retrieve creators
    [2.7.2-RELEASE][admin@backup]/root: pfctl -sc
    pfctl: Failed to retrieve creators

Should I try to set the filter host ID here?

-

@marcolefo The hostid ought to be stable across reboots even if it's not configured, so let's confirm that first. (I.e. when you reboot the standby firewall does it have the same hostid in /tmp/rules.debug when it comes back?)
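A minimal sketch of that check, in case it saves some typing. The helper name `get_hostid` and the file `/root/hostid.before` are made up for illustration; the only thing taken from this thread is that the ruleset in /tmp/rules.debug contains a `set hostid ...` line.

```shell
#!/bin/sh
# Print the hostid value from a pf ruleset dump (default: /tmp/rules.debug).
get_hostid() {
    awk '$1 == "set" && $2 == "hostid" { print $3; exit }' "${1:-/tmp/rules.debug}"
}

# Hypothetical file used to remember the value across the reboot.
SAVED="${SAVED:-/root/hostid.before}"

# Before the reboot:
#   get_hostid > "$SAVED"
# After the reboot:
#   [ "$(get_hostid)" = "$(cat "$SAVED")" ] && echo "hostid stable" || echo "hostid changed"
```

The value is written somewhere other than /tmp on the assumption that /tmp may not survive a reboot.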

-

@kprovost said in No State Creator Host IDs visible:

@marcolefo The hostid ought to be stable across reboots even if it's not configured, so let's confirm that first. (I.e. when you reboot the standby firewall does it have the same hostid in /tmp/rules.debug when it comes back?)

Yes it's stable across reboots

    [2.7.2-RELEASE][admin@backup]/root: grep hostid /tmp/rules.debug
    set hostid 0xcad3f8bd
    [2.7.2-RELEASE][admin@backup]/root: uptime
    3:08PM up 3 mins, 2 users, load averages: 1.40, 0.76, 0.31

-

Okay, that's good to know.

I've had another look at the state listing.

All of the states with unexpected creatorids list an rtable of 0; none of the others do. I suspect this is a clue.

I believe those states were imported through pfsync.

There's been a pfsync protocol change between 2.7.0 and 2.7.2. That could potentially have resulted in states being imported with invalid information, but that's highly speculative. I've also not been able to reproduce that in a setup with pfsync between a 2.7.0 and a 2.7.2 host. If that were the cause, it should also have gone away when both pfsync participants were upgraded to 2.7.2.

It's a bit of a long shot, but it might be worth capturing pfsync traffic, both so we can inspect it (be sure to capture full packets) and so we can verify that there's nothing other than the two pfsense machines sending pfsync traffic.
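A rough sketch of such a capture follows. It assumes the sync interface is `igb1` and writes to `/tmp/pfsync.pcap` (both placeholders, adjust to your setup), and it matches pfsync as IP protocol 249. The helper names are made up; the first one only prints the tcpdump command so it can be reviewed before running, and the second one counts senders from tcpdump's text output, assuming the usual `time IP src > dst: ...` line layout.

```shell
#!/bin/sh
# Placeholders: adjust to your setup.
SYNC_IF="${SYNC_IF:-igb1}"          # assumption: interface carrying pfsync
PCAP="${PCAP:-/tmp/pfsync.pcap}"    # assumption: where to store the capture

# Print a capture command: -n skips name lookups, -s 0 keeps full packets,
# "ip proto 249" selects pfsync traffic only.
pfsync_capture_cmd() {
    printf 'tcpdump -n -i %s -s 0 -w %s ip proto 249\n' "$1" "$2"
}

# Count packets per source address from tcpdump text output on stdin.
# Assumes lines of the form: "<time> IP <src> > <dst>: ..."
pfsync_senders() {
    awk '$2 == "IP" { n[$3]++ } END { for (s in n) print s, n[s] }' | sort
}

# Show the command for review; copy and run it as root on one of the nodes.
pfsync_capture_cmd "$SYNC_IF" "$PCAP"

# Afterwards, list who was sending:
#   tcpdump -n -r "$PCAP" ip proto 249 | pfsync_senders
```

If more than two source addresses show up in the sender list, something other than the two firewalls is sending pfsync traffic on that segment.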

-

@kprovost Sorry I didn't post since my last answer; I was out of the office.

We have not had the "Warning: too many creators" message since 2023-12-22.

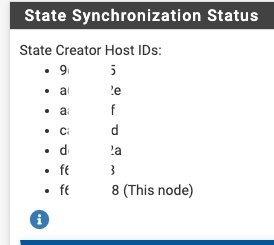

And the GUI now shows State Creator Host IDs (on both nodes).

pfctl -si -v displays the Creator IDs.

I have tried to enter and leave persistent CARP mode with no problem.

I think the problem is solved :)

But I don't know why it did not display correctly last time.

Thanks a lot for your help and happy new year.

-

@marcolefo That's good to know.

Given that the problem appears to have gone away after you moved both machines to the same version, I think that's another data point suggesting the version mismatch is at the root of this issue.

I still can't reproduce this, but it's good to know anyway.

-

As this problem has bitten me several times now when upgrading CARP clusters, I tried to troubleshoot it a bit more. Here is what I found out:

I read somewhere that there were protocol changes in pfsync between 2.7.0 and 2.7.2. So if you upgrade the nodes one by one, there is a period where one system is already on 2.7.2 and the other one is still on 2.7.0. During this time 2.7.0 tries to sync states to 2.7.2 and vice versa. Due to the version mismatch, the state table seems to end up with invalid records, which cause the "too many creators" error and make the state sync fail. Even after both nodes reach 2.7.2, those invalid records remain in the state tables and continue to break the sync.

The resolution to this is:

Either reboot both nodes at the same time, so the broken records are not synced back and forth. Downtime is about 1-2 minutes on fast systems.

Or disable state sync via pfsync on both nodes (System > High Availability), reset the state tables on both nodes (Diagnostics > States, Reset States), then re-enable state sync. Downtime is a few seconds, as only the dropped connections need to be re-established.

Unfortunately both procedures cause a disruption of traffic, though the second one is rather short. An additional impact is that during the upgrade process state sync is broken from the time the first node reboots until the states are cleared on both nodes after both are upgraded.
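For those who prefer the shell, the second procedure could be sketched roughly as below. This is an assumption-laden sketch, not a tested recipe: it assumes state sync has already been disabled in the GUI on both nodes, it uses `pfctl -F states` as a rough command-line counterpart of Reset States, and the helper name `creators_ok` is made up. The only check it does is look for the "Failed to retrieve creators" message quoted earlier in this thread.

```shell
#!/bin/sh
# Succeeds if the text on stdin does NOT contain the failure message
# that "pfctl -sc" printed earlier in this thread.
creators_ok() {
    ! grep -q 'Failed to retrieve creators'
}

# On each node, as root, AFTER state sync was disabled on both nodes:
#   pfctl -F states        # flush the state table (drops existing connections)
#
# After re-enabling state sync in the GUI, verify on each node:
#   pfctl -sc 2>&1 | creators_ok && echo "creators readable" || echo "still failing"
```

Flushing states drops existing connections, so the same caveat about a short disruption applies.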

Hope this helps some people that run into the same issues.

Regards

Holger

-

This seemed to work for one of our sets, so THANK YOU!

However, for anyone else who might be in the same boat: our state table was colossal, so this may be a treatment rather than a cure. *Or it may be indicative of an issue on one of the local networks.

*I waited to hit submit until I found the issue: a camera system, wide open on a separate VLAN in this case.

We had the luxury (!) to run this remotely, in non-peak times, with alternative remote access on the local interfaces rather than WAN.

It took about 16 minutes for both on Xeon 3.2 physical, 8 vCPU, 8GB RAM, 128GB fixed.

In fact, if you have alternative remote access to the local network, I'd recommend doing exactly the above with the states, waiting for the states to clear, rebooting each node, then re-enabling 'System > High Availability > Synchronize states'.

I don't recommend doing this if you will have to travel several hours to complete on-site, and you don't have alternative remote access to the site. *just my 2¢