increase php-fpm listening queue not working in 2.7.0

-

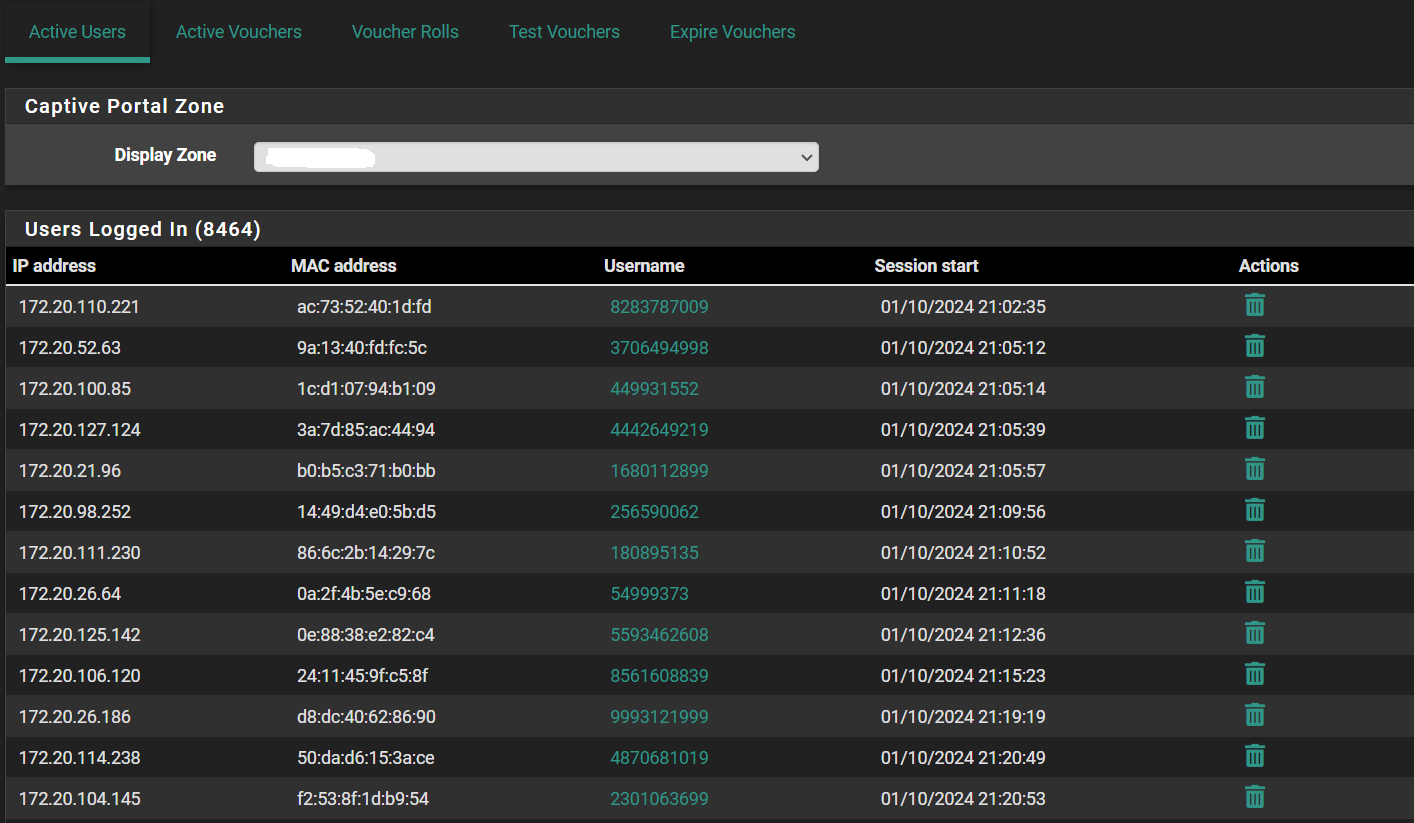

I have more than 5000 devices on my network. When I enable the captive portal, I get php-fpm.socket listen queue overflows with the default maxqlen of 128. I increased kern.ipc.somaxconn to 4096 in System Tunables. When I restart PHP-FPM from option 16 of the console, netstat -Lan shows a separate php-fpm.socket with a maxqlen of 4096. But when I reboot the machine, it goes back to a single php-fpm.socket with a maxqlen of 128.

Here is the netstat -Lan output before the modification and after a reboot:

[2.7.0-RELEASE][admin@gateway.iiti.ac.in]/root: netstat -Lan

Current listen queue sizes (qlen/incqlen/maxqlen)

Proto Listen Local Address

tcp6 0/0/4096 *.80

tcp4 0/0/4096 *.80

tcp6 0/0/4096 *.8888

tcp4 0/0/4096 *.8888

tcp4 0/0/256 127.0.0.1.953

tcp4 0/0/256 *.53

tcp4 0/0/128 *.22

tcp6 0/0/128 *.22

unix 0/0/10 /var/run/snmpd.sock

unix 0/0/4 /var/run/devd.pipe

unix 0/0/30 /var/run/check_reload_status

unix 0/0/128 /var/run/php-fpm.socket

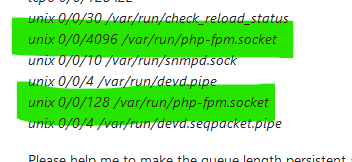

unix 0/0/4 /var/run/devd.seqpacket.pipe

Here is the output of netstat -Lan after changing kern.ipc.somaxconn to 4096 in System Tunables and restarting the php-fpm service (without rebooting the entire OS):

[2.7.0-RELEASE][admin@gateway.iiti.ac.in]/root: netstat -Lan

Current listen queue sizes (qlen/incqlen/maxqlen)

Proto Listen Local Address

tcp6 0/0/4096 *.80

tcp4 0/0/4096 *.80

tcp6 0/0/4096 *.8888

tcp4 0/0/4096 *.8888

tcp4 0/0/256 127.0.0.1.953

tcp4 0/0/256 *.53

tcp4 0/0/128 *.22

tcp6 0/0/128 *.22

unix 0/0/30 /var/run/check_reload_status

unix 0/0/4096 /var/run/php-fpm.socket

unix 0/0/10 /var/run/snmpd.sock

unix 0/0/4 /var/run/devd.pipe

unix 0/0/128 /var/run/php-fpm.socket

unix 0/0/4 /var/run/devd.seqpacket.pipe

Please help me make the queue length persist across reboots. I have already added the entry to the sysctl.conf file as well. Thank you!
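Since the two outputs differ only in the php-fpm lines, a small script can make the comparison mechanical. The sketch below parses FreeBSD-style netstat -Lan text (assumed layout per line: protocol, qlen/incqlen/maxqlen, address) and reports any listener address that appears more than once, together with each copy's maxqlen. The function names are hypothetical helpers for illustration, not part of pfSense.

```python
from collections import defaultdict


def parse_netstat_lan(text):
    """Return (proto, qlen, incqlen, maxqlen, address) tuples from netstat -Lan text.

    Assumes the FreeBSD layout shown above. Header lines, the shell prompt and
    anything else that does not match 'proto q/i/m address' are skipped.
    """
    entries = []
    for line in text.splitlines():
        parts = line.split()
        if len(parts) != 3 or parts[1].count("/") != 2:
            continue
        proto, queues, address = parts
        try:
            qlen, incqlen, maxqlen = (int(x) for x in queues.split("/"))
        except ValueError:
            continue
        entries.append((proto, qlen, incqlen, maxqlen, address))
    return entries


def duplicate_listeners(entries):
    """Map each (proto, address) seen more than once to the list of its maxqlen values."""
    seen = defaultdict(list)
    for proto, _qlen, _incqlen, maxqlen, address in entries:
        seen[(proto, address)].append(maxqlen)
    return {key: limits for key, limits in seen.items() if len(limits) > 1}


sample = """unix 0/0/4096 /var/run/php-fpm.socket
unix 0/0/128 /var/run/php-fpm.socket
tcp4 0/0/4096 *.80
tcp6 0/0/4096 *.80"""
print(duplicate_listeners(parse_netstat_lan(sample)))
# {('unix', '/var/run/php-fpm.socket'): [4096, 128]}
```

On a live box the text could come from subprocess.run(["netstat", "-Lan"], capture_output=True, text=True).stdout. Keying on (proto, address) keeps the tcp4 and tcp6 listeners on the same port from being reported as duplicates.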

-

Have you looked at this file:

?

That file also tells you what to modify so that php-fpm keeps persistent settings: you have to edit the script that creates the PHP config file, /etc/rc.php_ini_setup.

See also the www.conf file in /usr/local/etc/php-fpm.d for examples of the 'queue' settings.
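Presumably the 'queue' setting meant here is php-fpm's listen.backlog pool directive, which php-fpm passes to listen(2). As far as I know, the stock www.conf documents a default of -1 on FreeBSD (meaning "use the system maximum"); whether the pool config generated by /etc/rc.php_ini_setup sets it explicitly is not shown in this thread. A minimal sketch for checking what a given pool config text requests, assuming ';' comments and simple "key = value" lines; requested_backlog is a hypothetical helper.

```python
def requested_backlog(pool_conf_text, platform_default=-1):
    """Return the listen.backlog value requested by a php-fpm pool config text.

    Assumptions: ';' starts a comment line, directives are 'key = value', and the
    last uncommented listen.backlog line wins. If none is found, platform_default
    is returned (-1 is assumed to be the FreeBSD default, i.e. "system maximum").
    """
    value = platform_default
    for raw in pool_conf_text.splitlines():
        line = raw.strip()
        if not line or line.startswith(";"):
            continue  # blank line or commented-out directive
        key, sep, rest = line.partition("=")
        if sep and key.strip() == "listen.backlog":
            value = int(rest.strip())
    return value


print(requested_backlog("[www]\n;listen.backlog = 511\n"))  # -1 (only a comment)
print(requested_backlog("[www]\nlisten.backlog = 4096\n"))  # 4096
```

Note that even an explicit value only sets the upper bound php-fpm asks for; the kernel still decides the final queue limit when the socket starts listening.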

-

@Gertjan Sir, I set the following variables in /etc/rc.php_ini_setup:

PHPFPMMAX=2048

PHPFPMIDLE=3600

PHPFPMSTART=256

PHPFPMSPARE=384

PHPFPMREQ=5000

This increased my captive portal user load from a few hundred to 1500+, but then this queue overflow suddenly happened:

"unix 193/0/128 /var/run/php-fpm.socket", and the captive portal started showing a BAD GATEWAY error to end users. How can I resolve this problem? The issue appears in 2.7.0; in earlier versions we were running 5000 users with captive portal authentication.

I think this 128 queue length is the issue. How can I increase it? Thanks for the help!
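Why 193 against a maxqlen of 128? As I read FreeBSD's sonewconn(), the kernel tolerates a listen queue of up to one and a half times the configured limit and refuses new connections only once qlen exceeds 3 × maxqlen / 2 (integer division). For 128 that threshold is 192, so a qlen of 193 is exactly the point where new connections start being dropped, which would fit the BAD GATEWAY errors. A small model of that check (an assumption mirroring the kernel logic, not kernel code):

```python
def overflow_threshold(maxqlen):
    """Largest qlen a listener may hold before new connections are refused.

    Assumption: mirrors FreeBSD's sonewconn() check 'qlen > 3 * qlimit / 2',
    where the division is C integer division on non-negative values.
    """
    return 3 * maxqlen // 2


def is_overflowing(qlen, maxqlen):
    """True if a listener with this qlen would refuse further connections."""
    return qlen > overflow_threshold(maxqlen)


print(overflow_threshold(128))    # 192
print(is_overflowing(193, 128))   # True
print(overflow_threshold(4096))   # 6144
```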

-

@yogendraaa said in increase php-fpm listening queue not working in 2.7.0:

I think this 128 queue length is the issue, how can i increase this ?

Isn't this the issue:

There are two /var/run/php-fpm.socket 'files'... and that, IMHO, can't be right.

-

@Gertjan Sir, this happens when I append kern.ipc.somaxconn=4096 to the sysctl.conf file and restart php-fpm using option 16 of the console.

The same extra php-fpm.socket also appears on my other pfSense machine (2.5.2), so this may be a long-standing bug. In brief, the kern.ipc.somaxconn=4096 entry in sysctl.conf is not reflected in the php-fpm queue after a reboot, but it does take effect after restarting the php-fpm service from option 16.
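One possible explanation for "works after option 16, reverts after reboot" (an assumption, not confirmed in this thread): on FreeBSD, listen(2) replaces a negative backlog, or one larger than kern.ipc.somaxconn, with the value kern.ipc.somaxconn has at the moment listen() is called, and that limit then stays fixed for the socket. If php-fpm starts during boot before the 4096 tunable is applied, it would get the default of 128; a later restart would pick up 4096. The boot order below is hypothetical and has not been checked against pfSense's rc scripts.

```python
def effective_maxqlen(requested_backlog, somaxconn):
    """Queue limit recorded for a socket when listen(2) is called.

    Assumption: mirrors FreeBSD's solisten_proto(), which replaces a negative
    backlog, or one above kern.ipc.somaxconn, with the current somaxconn value.
    Raising somaxconn afterwards does not change an already-listening socket.
    """
    if requested_backlog < 0 or requested_backlog > somaxconn:
        return somaxconn
    return requested_backlog


# Hypothetical boot order: php-fpm listens while somaxconn is still the default 128.
at_boot = effective_maxqlen(-1, 128)
# Restart via console option 16, after the 4096 tunable has been applied.
after_restart = effective_maxqlen(-1, 4096)
print(at_boot, after_restart)  # 128 4096
```

If this is what happens, the fix would be to make sure the tunable is in effect before php-fpm first listens, or to restart php-fpm once it is, rather than to change the backlog value itself.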

Kindly help!

-

@Gertjan Sir, any suggestions for the above issue?

-

@yogendraaa

Sadly, no, as I cannot test under your usage conditions (5000+ devices).

-

@Gertjan Sir, is there anything I can debug? In particular, it seems nginx is the culprit. Please advise!

-

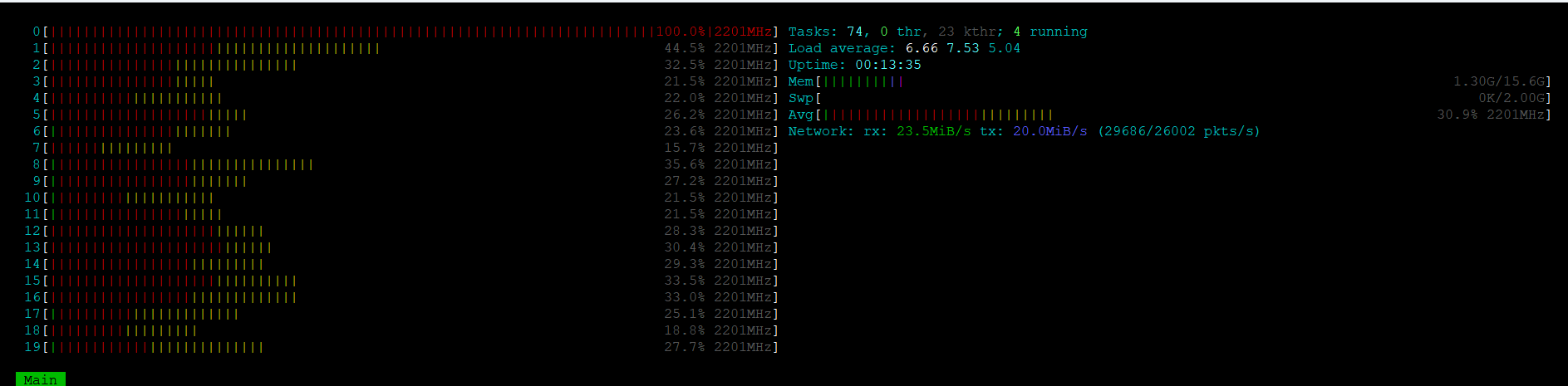

@yogendraaa Upgrading my pfSense installations from 2.6 to the latest 2.7.2 version resulted in significant problems. I experienced network performance drops, low throughput, and frequent 502 and 504 Gateway errors. This occurred at all my test sites, including one with over 10,000 captive portal users. Smaller setups might not see these issues. (Image attached for reference.)

Downgrading back to pfSense 2.6 resolved all these issues. For my deployments, it seems the transition from IPFW to PF in 2.7.x might be causing problems, especially with captive portals. Enabling the captive portal in 2.7.2 directed most traffic to CPU0, causing it to overload and crash the entire system. This behavior was not observed with the same hardware, network, and users on pfSense 2.6. Without captive portal services, everything works normally in 2.7.2. I tested on three sites with 10K, 5K, and 3K captive portal users, with the same result.

-

@wazim4u Is there any way to fix it? I have already set up a test bed with VyOS and PacketFence to reduce our dependence on a single solution. Sooner or later the PacketFence core team may notice this issue. The CP has only just moved away from ipfw, so maybe someday they will fix it.

Please suggest anything I can try!

-

@wazim4u Sir, maybe you can try these nginx configuration changes: "accept_mutex on;" and "worker_cpu_affinity auto;".

-

@yogendraaa It seems the issue may be related to FreeBSD: not only nginx, but the NIC queues also have problems. With 2.6, as I mentioned, everything was okay. But on the same hardware after switching to 2.7.2, CPU0 sits at 100%, which slows everything down, and then the 502 and 504 errors occur. This is specific to the captive portal implementation. Image attached for reference.